Building a Q&A Bot over Private Documents with OpenAI and LangChain

Last Updated on July 25, 2023 by Editorial Team

Author(s): Sriram Parthasarathy

Originally published on Towards AI.

Chat with your own private finance documents

In today’s data-driven world, extracting valuable insights from private documents is a crucial aspect of decision-making in various domains. Whether it’s in finance, healthcare, or any other industry, having an efficient and intelligent system to access and analyze relevant information is paramount. In this article, we describe how to build a Q&A bot over private documents using the powerful combination of OpenAI and LangChain to unlock the treasure trove of knowledge hidden within private documents. For the sake of the demo, I will be using Finance documents that include analyst recommendations for various stocks.

Private data to be used

The example provided can be used with any dataset. I am using a data set that has Analyst recommendations from various stocks. For the purpose of demonstration, I have gathered publicly available analyst recommendations to showcase its capabilities. You can replace this with your own information to try this.

Below is a partial extract of the information commonly found in these documents. If you wish to try it yourself, you can download analyst recommendations for your preferred stocks from online sources or access them through subscription platforms like Barron’s. Although the example provided focuses on analyst recommendations, the underlying structure can be utilized to query various other types of documents in any industry as well.

Microsoft (MSFT) Analysis

Stock Price Forecast

The 41 analysts offering 12-month price forecasts for Microsoft Corp have a median target of 335.88, with a high estimate of 373.56 and a low estimate of 232.00. The median estimate represents a +8.52% increase from the last price of 309.50.

Analyst Recommendations

The current consensus among 51 polled investment analysts is to buy stock in Microsoft Corp. This rating has held steady since May, when it was unchanged from a buy rating.

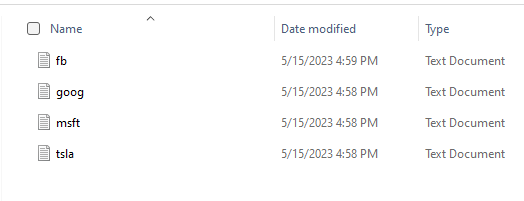

I have assembled such data for a few stocks for demonstration purposes. This includes Google, Microsoft, Meta, and Tesla.

To facilitate easy access and updating of analysts’ recommendations, all the recommendations can be organized into a designated folder. Each stock corresponds to a separate file within this folder. For example, if there are recommendations for 20 stocks, there will be 20 individual files. This organization enables convenient updating of information for each stock as new recommendations arrive, streamlining the process of managing and maintaining the most up-to-date data for each stock.

Questions this Q&A bot application can answer

The data we have for this application is stock market analyst recommendations for many stocks. Let's say you are looking for insight about Microsoft stock. You can ask any of the following questions as an example:

- What is the median target price for Microsoft (MSFT)?

- What is the highest price estimate for Microsoft (MSFT)?

- What is the lowest price estimate for Microsoft (MSFT)?

- How much percentage increase is expected in the stock price of Microsoft (MSFT)?

- How many analysts provided price forecasts for Microsoft (MSFT)?

- What is the current consensus among investment analysts regarding Microsoft (MSFT)?

- Has the consensus rating for Microsoft (MSFT) changed recently?

- When was the consensus rating last updated for Microsoft (MSFT)?

- Is the current recommendation for Microsoft (MSFT) to buy, sell, or hold the stock?

- Are there any recent analyst reports available for Microsoft (MSFT)?

These questions cover various aspects of the stock analysis, including price forecasts, analyst recommendations, and recent changes in ratings. The chat system can provide specific answers based on the information available in the financial documents.

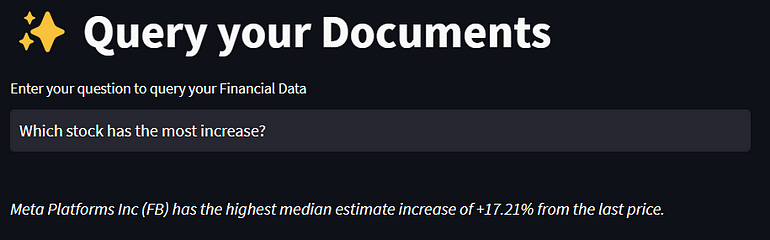

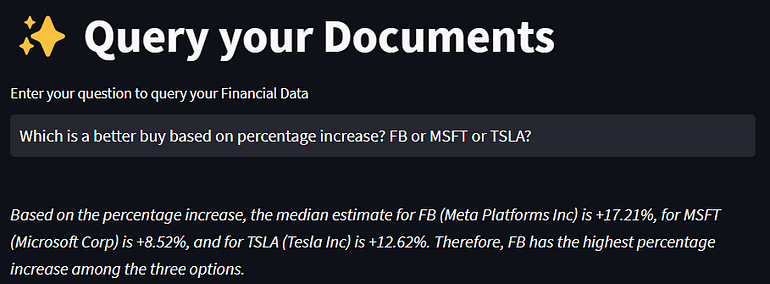

Please note that you can not only ask questions about an individual stock but can also ask comparative questions across stocks. For example, which stock has the most price increase? Here the system will compare the price increase across all the stocks and provide an answer.

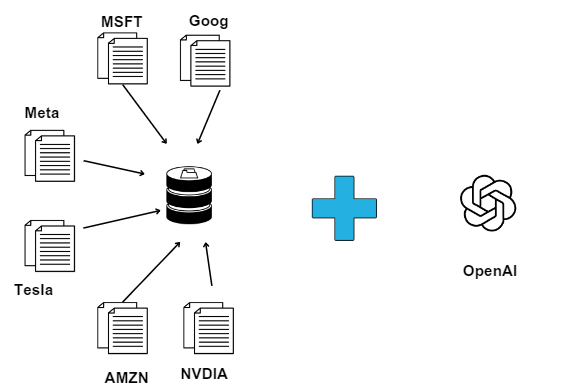

Quick summary of how the web application works

This web-based application allows users to input their questions in a text box and receive answers based on insights gathered from multiple documents. For instance, users can inquire, “What is the highest price estimate for Microsoft?” and the application will query the relevant documents to provide an accurate response.

Moreover, users can also compare stocks by asking questions such as, “Which stock, Meta or Microsoft, has a higher percentage increase in the stock price?” The application will analyze the data across the documents, enabling users to make informed investment decisions based on the comparative insights provided.

Application Overview

The application is built with LangChain and ChatGPT. Though it uses ChatGPT, we can also wire this to other LLMs as well.

LangChain is an innovative framework designed to empower you in building sophisticated applications driven by large language models (LLMs). By offering a standardized interface, LangChain facilitates the seamless integration of various components, including LLMs, data sources, and actions. This streamlined approach accelerates the development of robust applications, enhanced by features such as chaining, data awareness, and agentic capabilities.

To complement LangChain, the web application is built utilizing Streamlit, a Python library for creating interactive web applications and data dashboards. Streamlit’s open-source nature and user-friendly features simplify the process of developing web apps with minimal effort. This has made it a popular choice among developers, data scientists, and machine learning engineers seeking to build engaging and accessible applications.

Initial setup

Install OpenAI, LangChain, and StreamLit

pip install openai

pip install langchain

pip install streamit

Import the relevant packages

# Imports

import os

from langchain.llms import OpenAI

from langchain.document_loaders import TextLoader

from langchain.document_loaders import PyPDFLoader

from langchain.indexes import VectorstoreIndexCreator

import streamlit as st

from streamlit_chat import message

from langchain.chat_models import ChatOpenAI

Set the API keys

# Set API keys and the models to use

API_KEY = "Your OpenAI API Key goes here"

model_id = "gpt-3.5-turbo"

# Add your openai api key for use

os.environ["OPENAI_API_KEY"] = API_KEY

Define the LLM to use

# Define the LLM we plan to use. Here we are going to use ChatGPT 3.5 turbo

llm=ChatOpenAI(model_name = model_id, temperature=0.2)

Ingesting private documents

We used Langchain to ingest data. LangChain offers a wide range of data ingestion methods, providing users with various options to load their data efficiently. It supports multiple formats, including text, images, PDFs, Word documents, and even data from URLs. In the current example, text files were utilized, but if you wish to work with a different format, you simply need to refer to the corresponding loader specifically tailored for that format.

All the analysts’ recommendations documents are stored in a dedicated folder. You have the flexibility to either refer to individual documents or retrieve all the documents within a specific folder.

If you want to specify exact documents, you can do it the following way. To load the files you want to ingest, you can specify the path to each file individually. The loaded files can then be saved into a list. This list serves as the input that is sent to the vector database to store the data.

loader1 = TextLoader('pdfdocs/goog.txt')

loader2 = TextLoader('pdfdocs/fb.txt')

mylist = [loader1, loader2]

The alternative approach is a more versatile method in which we can load all pertinent documents from a designated folder and store the file locations in a list for subsequent processing. This approach offers flexibility and allows for the efficient handling of multiple documents by capturing their locations in a centralized list, enabling seamless data retrieval and analysis.

# Specify the directory path. Replace this with your own directory

directory = './pdfdocs'

# Get all files in the directory

files = os.listdir(directory)

# Create an emty list to store a list of all the files in the folder

mylist = []

# Iterate over the files

for file_name in files:

# Get the relative path of each file

relative_path = os.path.join(directory, file_name)

# Append the file to a list for further processing

mylist.append(TextLoader(relative_path))

Load the documents into the vector store.

When dealing with a vast number of documents, it becomes inefficient to send all documents (analyst recommendations) to your large language model (LLM) when seeking answers to specific questions. For instance, if your question pertains to MSFT, it would be more cost-effective to only send document extracts that reference MSFT to your LLM for answering the question. This approach helps optimize resource utilization. To achieve this, all documents are split into chunks and stored in a vector database in a numeric format (embeddings). When a new question is posed, the system queries the vector database for relevant text chunks related to this question, which is then shared with the LLM to generate an appropriate response.

Within the LangChain framework, the VectorstoreIndexCreator class serves as a utility for creating a vector store index. This index stores vector representations of the documents (in chromadb), enabling various text operations, such as finding similar documents based on a specific question.

When a user asks a question, a similarity search is performed in the vector store to get document chunks relevant to the question. The question, along with the chunks are sent to OpenAI to get the response back.

# Save the documents from the list in to vector database

index = VectorstoreIndexCreator().from_loaders(mylist)

Now we are ready to query these documents.

Setting up the web application

The application is presented in the browser using Streamlit, providing a user-friendly interface. Within the application, a text box is available for users to enter their questions. Upon submitting the question by pressing enter, the application processes the input and generates a corresponding response. This response is then displayed below the text box, allowing users to conveniently view the relevant information.

#------------------------------------------------------------------

# Setup streamlit app

# Display the page title and the text box for the user to ask the question

st.title('U+2728 Query your Documents ')

prompt = st.text_input("Enter your question to query your Financial Data ")

Create a prompt based on the question asked by the user and display the response back to the user

By calling index.query() with the specified parameters, you initiate the process of querying the vector database using the provided question. Vector database provides relevant text chunks that are relevant to the question asked. These text chunks, along with the original question, is passed to LLM. The LLM is invoked to analyze the question and generate a response based on the available data sent. The specific chaining process associated with the query is determined by the chain_type parameter, which is to use all the data (filtered by the question) sent to LLM.

# Display the current response. No chat history is maintained

if prompt:

# stuff chain type sends all the relevant text chunks from the document to LLM

response = index.query(llm=llm, question = prompt, chain_type = 'stuff')

# Write the results from the LLM to the UI

st.write("<br><i>" + response + "</i><hr>", unsafe_allow_html=True )

Now the entire application is ready, and let's take it for a spin next.

Ask few questions

Let's try few questions

The range of questions encompasses diverse facets of stock analysis, encompassing price forecasts, analyst recommendations, and recent rating changes. The chat system excels in delivering precise answers by leveraging the information contained within the financial documents.

The system extends beyond individual stock inquiries and accommodates comparative queries across multiple stocks. For instance, one can ask about the stock with the highest price increase, prompting the system to compare price increases across all stocks and provide a comprehensive response. This versatility allows users to gain insights and make informed decisions across a broader spectrum of stock analysis.

Conclusion

The development of a Q&A bot over private documents using OpenAI and LangChain represents a remarkable achievement in unlocking the invaluable knowledge hidden within private document repositories. This web-based Q&A bot has the potential to empower users from various industries, enabling efficient access and analysis of critical information and ultimately enhancing productivity and decision-making capabilities. While we showcased a finance example to illustrate the concept, the bot’s functionality extends to any domain. Simply by providing a folder with the relevant privacy documents, users can engage in natural language conversations with the bot. Once the data is ingested into a vector database, users can seamlessly query and retrieve information, propelling the capabilities of intelligent document analysis to new heights.

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.