Are Your Human Labels of Good Quality?

Last Updated on June 15, 2023 by Editorial Team

Author(s): Deepanjan Kundu

Originally published on Towards AI.

Measuring and improving the quality of human labels for ML tasks.

What are labels?

In machine learning, a label is a categorical or numerical value assigned to a data point that serves as the target variable for a predictive model. Labeling is the process of assigning these values to the training data, manually or automatically. A machine learning algorithm is then trained on this data to predict the labels of unseen data. Labels can be binary, multi-class, or continuous values, depending on the type of problem and the nature of the data. Most content-based end tasks in applied ML use human labeling programs to collect their labels, and this article focuses on these human labels.

Why is label quality important?

Labels are the bread and butter of supervised machine learning. When working on a supervised problem, one looks at the dataset and assumes that the labels are the ground truth. But how do you ensure that human labels actually reflect the ground truth? These ML models are delivered to clients or end users, so the stakes are high: data scientists need to be sure that the labels are of good quality and reflect the problem they are solving. Accurate labeling is a crucial step in the machine learning pipeline because it directly impacts the performance and generalization ability of the model. In this article, we cover some commonly used definitions of label quality, how to measure it, and best practices to ensure it.

How can we measure label quality?

Inter-annotator disagreement / Labeler disagreement

It is considered best practice to have multiple human labelers label each data point: different labelers provide labels for the same data point, and the final label is aggregated from their individual responses. The extent to which these labelers give different answers is called labeler disagreement or inter-annotator disagreement. We will use the term “labeler disagreement” in this article. If all the labelers provide the same response for a given data point, confidence in that data point's label is high. In most tasks, however, a significant portion of the data points have labeler disagreement, and this also serves as an indicator of label quality: if labeler disagreement is high for a large number of data points, there is a higher chance that the collected labels are of lower quality. Here are a few ways to measure the labeler disagreement rate:

a. % of data points with disagreement: For each data point, check whether all the label responses from the human raters are exactly the same. The metric is the ratio of the number of data points with any disagreement to the total number of data points. The following sample code checks a single data point:

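```python
def has_disagreement(labels):
    """Return True if the labelers did not all give the same label."""
    first = labels[0]
    # Compare every remaining label (not its index) against the first one.
    for label in labels[1:]:
        if label != first:
            return True
    return False
```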
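The check above handles one data point; the metric itself is the ratio over a whole batch. A minimal sketch, assuming the collected labels are stored as a dict from a data point id to the list of labels that different labelers gave it (this input format and the `disagreement_rate` name are illustrative, not from a specific tool):

```python
def disagreement_rate(dataset):
    """Fraction of data points whose labels are not all identical.

    dataset: dict mapping a data point id to the list of labels that
    different labelers gave it (an assumed input format).
    """
    if not dataset:
        return 0.0
    # A data point has disagreement if it received more than one distinct label.
    n_disagree = sum(len(set(labels)) > 1 for labels in dataset.values())
    return n_disagree / len(dataset)


# Hypothetical example: four data points, three labelers each.
dataset = {
    "item_1": [1, 1, 1],
    "item_2": [1, 0, 1],
    "item_3": [0, 0, 0],
    "item_4": [0, 1, 1],
}
print(disagreement_rate(dataset))  # 0.5
```

Using `set` assumes labels are hashable (ints or strings), which is the usual case for categorical labels.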

b. Fleiss’ kappa is a statistical measure for assessing the reliability of agreement between a fixed number of raters when assigning categorical ratings to a number of items or classifying items.[1] Unlike the percentage of data points with disagreement, it gives credit for partial agreement (for example, four of five labelers agreeing on a data point) and corrects for the agreement that would be expected by chance. If the raters are in complete agreement, kappa is 1. If there is no agreement among the raters beyond what would be expected by chance, kappa ≤ 0.
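For reference, the standard definition [1] is written out below, with N items, n raters per item, k categories, and n_{ij} the number of raters who assigned item i to category j. The fleiss_kappa function that follows computes these same quantities; because each item's counts sum to n, the sum of n_{ij}(n_{ij} - 1) equals the sum of n_{ij}^2 minus n, which is the form the code uses.

```latex
p_j = \frac{1}{N n} \sum_{i=1}^{N} n_{ij}, \qquad
P_i = \frac{1}{n(n-1)} \sum_{j=1}^{k} n_{ij}\,(n_{ij} - 1)

\bar{P} = \frac{1}{N} \sum_{i=1}^{N} P_i, \qquad
\bar{P}_e = \sum_{j=1}^{k} p_j^2, \qquad
\kappa = \frac{\bar{P} - \bar{P}_e}{1 - \bar{P}_e}
```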

```python
import numpy as np


def fleiss_kappa(A: np.ndarray) -> float:
    """
    Computes Fleiss' kappa score for a group of labelers.

    A is an (n_items, n_categories) count matrix: A[i, j] is the number of
    labelers who assigned item i to category j. Every row must sum to the
    same number of labelers.
    """
    n_items, n_categories = A.shape
    n_annotators = float(np.sum(A[0]))
    total_annotations = n_items * n_annotators
    category_sums = np.sum(A, axis=0)

    # compute chance agreement: sum of squared category proportions
    p_j = category_sums / total_annotations
    p_e = np.sum(p_j * p_j)

    # compute observed agreement per item, then average over items
    P_i = (np.sum(A * A, axis=1) - n_annotators) / (n_annotators * (n_annotators - 1))
    p_mean = np.sum(P_i) / n_items

    # compute Fleiss' kappa score
    kappa = (p_mean - p_e) / (1 - p_e)
    return round(float(kappa), 4)
```
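fleiss_kappa expects a count matrix rather than raw labels. A minimal sketch of building one, assuming each item's labels arrive as a list of category names (`to_count_matrix` is a hypothetical helper, not part of any library):

```python
import numpy as np


def to_count_matrix(item_labels, categories):
    """Build the count matrix used by Fleiss' kappa.

    item_labels: list with one entry per item, each a list of the labels
    that the labelers gave that item.
    categories: list of all possible labels; defines the column order.
    Returns A where A[i, j] = number of labelers who gave item i category j.
    """
    index = {category: j for j, category in enumerate(categories)}
    A = np.zeros((len(item_labels), len(categories)), dtype=int)
    for i, labels in enumerate(item_labels):
        for label in labels:
            A[i, index[label]] += 1
    # Fleiss' kappa assumes the same number of labelers for every item.
    if len(set(A.sum(axis=1).tolist())) > 1:
        raise ValueError("every item needs the same number of labels")
    return A


# Hypothetical example data: three labelers per item, binary task.
item_labels = [
    ["spam", "spam", "spam"],
    ["spam", "ham", "spam"],
    ["ham", "ham", "ham"],
    ["ham", "spam", "ham"],
]
A = to_count_matrix(item_labels, categories=["spam", "ham"])
print(A)
```

For this example, passing A to the fleiss_kappa function above gives 0.3333: two of the four items are unanimous and two have a 2-to-1 split, while both categories are equally common overall, so chance agreement is 0.5.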

Golden Data

Golden Data is a collection of data points whose labels act as a definitive reference for the labeling task at hand. For content-based tasks, golden data is usually a manually curated collection of data points with highly precise labels. It aims to cover the different dimensions of the problem and multiple edge cases: the goal is not to reflect the true data distribution but to cover both obvious and borderline cases across all the labels for the task. These datasets usually contain a few hundred data points at most and are fairly static.

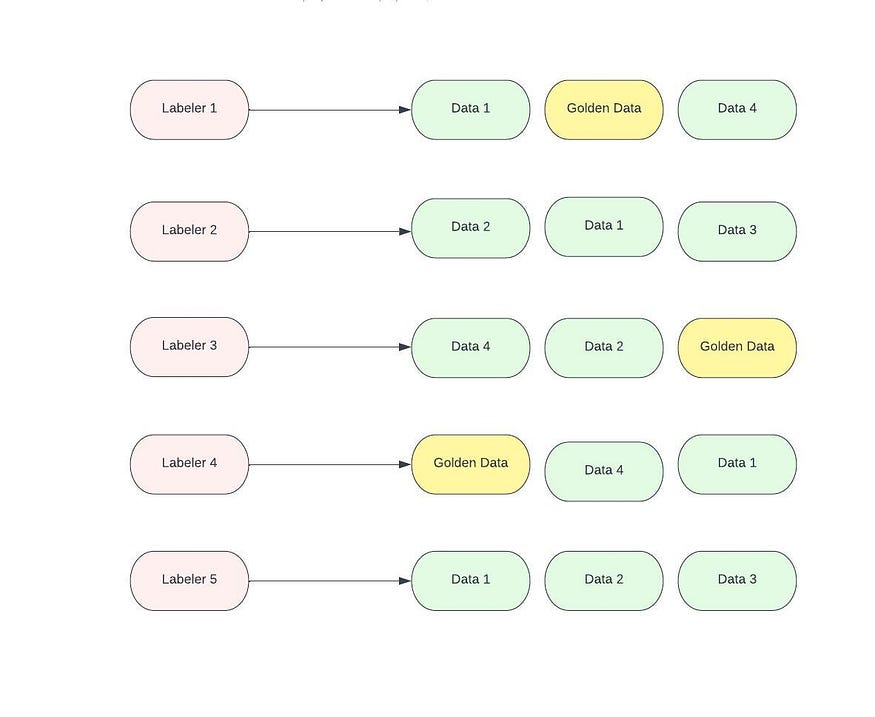

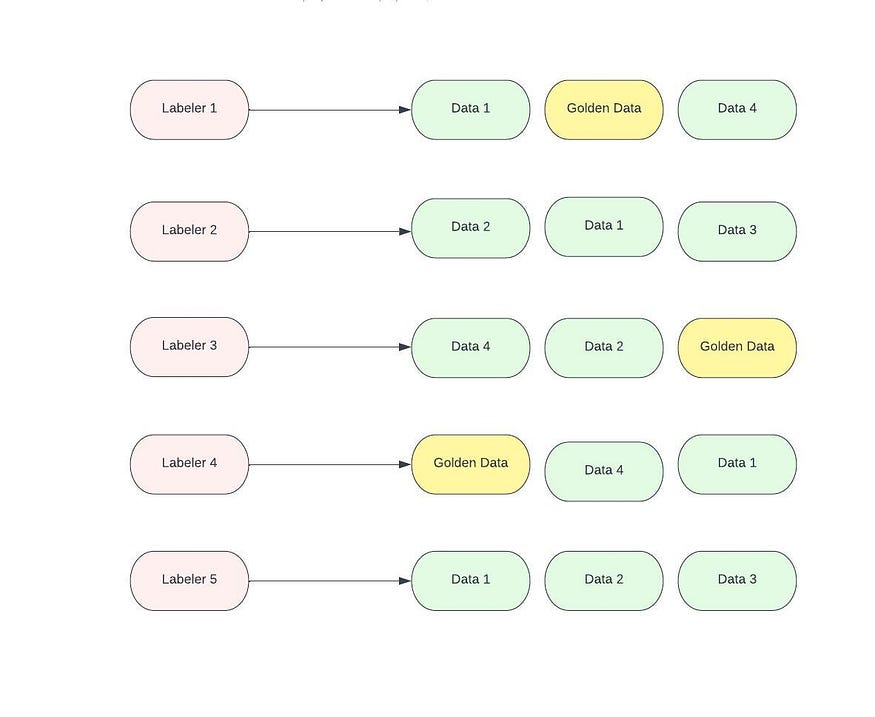

To measure label quality, periodically mix a subset of the golden dataset into a batch of labeling tasks, aiming for about 50 golden data points in that batch. For example, if labels are collected daily, golden data could be mixed in once a week. Keeping this frequency low is important to ensure golden data points are not leaked to the raters. The diagram above demonstrates the mixing process.
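The mixing step can be sketched as follows. This is a minimal illustration, assuming tasks are (item_id, payload) tuples and that golden items look exactly like regular items to the raters; the function names and the weekly default are hypothetical choices that mirror the example in the text:

```python
import random


def should_mix_golden(day_index, every_n_days=7):
    """Mix golden data into one daily batch every `every_n_days` days (weekly by default)."""
    return day_index % every_n_days == 0


def mix_golden(batch, golden, n_golden=50, seed=None):
    """Return a shuffled copy of `batch` with up to `n_golden` golden items added.

    batch: list of (item_id, payload) tuples to be labeled.
    golden: list of (item_id, payload) tuples from the golden dataset.
    The payload is what raters see, so golden items must not be marked in it.
    """
    rng = random.Random(seed)
    sample = rng.sample(golden, min(n_golden, len(golden)))
    mixed = list(batch) + sample
    # Shuffle so golden items are not clustered together at the end of the batch.
    rng.shuffle(mixed)
    return mixed
```

Keeping the golden item ids on your side (and out of the payload) is what later lets you join rater responses back to the golden labels.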

The accuracy, precision, and recall of the labels from the human raters vs. the golden labels are the key metrics to track label quality.

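```python
from sklearn.metrics import recall_score, precision_score, accuracy_score


def labels(H, G):
    """
    H: A dict containing the ids as the key and aggregated human label as the value.
    G: A dict containing the ids as the key and golden label as the value for all of Golden Data.
    returns a tuple of golden label list and human label list for the human-labeled Golden Data.
    """
    golden_labels = []
    human_labels = []
    # Keep only the items that were human-labeled and are part of the golden data.
    for item_id, label in H.items():
        if item_id in G:
            golden_labels.append(G[item_id])
            human_labels.append(label)
    return golden_labels, human_labels


# Hypothetical example data with binary labels (1 = positive class).
H = {"a": 1, "b": 0, "c": 1, "d": 1, "x": 0}
G = {"a": 1, "b": 0, "c": 0, "d": 1}

golden_labels, human_labels = labels(H, G)
# precision_score and recall_score default to average="binary" with pos_label=1;
# for multi-class labels, pass an averaging mode such as average="macro".
print(precision_score(golden_labels, human_labels))
print(recall_score(golden_labels, human_labels))
print(accuracy_score(golden_labels, human_labels))
```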

Computed against the golden labels, these metrics also help you understand labeler quality.
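To assess individual labelers, the same comparison can be run on raw, unaggregated responses. A minimal sketch, assuming responses are logged as (labeler_id, item_id, label) tuples; this record format and the `labeler_accuracy` name are illustrative assumptions:

```python
from collections import defaultdict


def labeler_accuracy(raw_labels, golden):
    """Accuracy of each labeler on the golden items they labeled.

    raw_labels: list of (labeler_id, item_id, label) tuples (assumed format).
    golden: dict mapping item_id -> golden label.
    Returns a dict labeler_id -> (accuracy, number of golden items labeled).
    """
    correct = defaultdict(int)
    seen = defaultdict(int)
    for labeler_id, item_id, label in raw_labels:
        if item_id in golden:  # non-golden items carry no reference label
            seen[labeler_id] += 1
            correct[labeler_id] += int(label == golden[item_id])
    return {lid: (correct[lid] / seen[lid], seen[lid]) for lid in seen}
```

The count is returned alongside the accuracy because a labeler who has seen only a handful of golden items has a very noisy accuracy estimate.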

How can we increase label quality?

- Track the quality: It is important to track label quality and be alerted when it drops below a certain threshold. Each of the measures described above has its own pros and cons, so it is advisable to track all of them.

- Iterate on labeling instructions: Labeling instructions should be the first suspect when irregularities in label quality are detected, so check whether the rating template has any gaps. Two key methods help identify and fill those gaps. First, labels collected using the instructions should achieve high accuracy (>95%) on golden data. To ensure this, run pilot programs with the initial versions of the rating template on Golden Data and refine the template until human labeling reaches the desired accuracy. Second, for batches with high labeler disagreement, go through the data points where labelers disagreed and manually identify gaps in the instructions that might be confusing labelers.

- Follow best practices: There are a few recommended practices for collecting labels for your data:

a. Replication: A standard practice is to collect multiple labels for the same data point to ensure better-quality labels, and to use an aggregate of these labels as the final label. For classification, a majority vote (the most common label) can be used; the median of the labels is only meaningful when the classes are ordered, such as ratings on a 1 to 5 scale. For regression, the average of the labels can be used.

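```python
import statistics
from collections import Counter


def aggregated_label_classification(labels):
    # Majority vote: the most common label (ties go to the label seen first).
    return Counter(labels).most_common(1)[0][0]


def aggregated_label_regression(labels):
    # Mean of the numeric labels.
    return statistics.mean(labels)
```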

b. Limit the number of tasks per labeler: It is important to minimize human bias in the labels being collected. If most of the tasks are completed by the same labeler, that labeler's individual biases are more likely to dominate the data, so capping the number of tasks per labeler is important. A simple way to achieve this is to increase the number of distinct labelers. You can also use Golden Data to distinguish good labelers from bad ones, then assign more tasks to good labelers and fewer tasks to bad labelers.
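Both ideas, a per-labeler cap and more work for labelers who do well on golden data, can be combined in a simple greedy assignment. This is a minimal sketch under stated assumptions, not a standard algorithm from any labeling platform: `caps_from_accuracy` is a hypothetical policy with made-up default numbers, and `assign_tasks` gives each item to k distinct labelers, favoring those with the most remaining capacity:

```python
def caps_from_accuracy(accuracy, base_cap=100, low_cap=20, threshold=0.9):
    """Hypothetical policy: full cap for labelers at or above `threshold` golden accuracy."""
    return {lid: (base_cap if acc >= threshold else low_cap) for lid, acc in accuracy.items()}


def assign_tasks(item_ids, labeler_caps, k=3):
    """Assign each item to k distinct labelers without exceeding any labeler's cap.

    labeler_caps: dict labeler_id -> maximum number of tasks for that labeler.
    Returns a dict item_id -> list of labeler ids.
    Raises ValueError when fewer than k labelers have capacity left.
    """
    load = {lid: 0 for lid in labeler_caps}
    assignment = {}
    for item_id in item_ids:
        available = [lid for lid in labeler_caps if load[lid] < labeler_caps[lid]]
        if len(available) < k:
            raise ValueError(f"not enough labeler capacity for item {item_id!r}")
        # Prefer labelers with the most remaining capacity, which spreads work out.
        available.sort(key=lambda lid: labeler_caps[lid] - load[lid], reverse=True)
        chosen = available[:k]
        for lid in chosen:
            load[lid] += 1
        assignment[item_id] = chosen
    return assignment


caps = caps_from_accuracy({"ann": 0.97, "bob": 0.95, "cy": 0.70, "dee": 0.92},
                          base_cap=3, low_cap=1)
print(assign_tasks(["item_1", "item_2", "item_3"], caps, k=3))
```

Running out of capacity raises an error rather than silently relaxing the cap, which is the signal to recruit more distinct labelers.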

c. Appropriate amount of time for the labelers: It is important to measure how long labelers take, in order to balance label accuracy against cost. Run multiple experiments to ensure labelers have enough time to read the rules and label the data. The only guidance here is the quality of the labels: the goal should be the minimum amount of time that achieves the desired level of accuracy and labeler disagreement. If the average time labelers take is close to the total time provided, that does not necessarily mean they need all of it; labelers may simply be using up whatever time they are given. This is why it is important to track accuracy and labeler disagreement alongside time.
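The tracking recommended throughout this section (golden-data accuracy, labeler disagreement, and labeling time) can be rolled into a per-batch check that raises alerts. A minimal sketch; the `batch_report` name and both default thresholds are assumptions (0.95 roughly mirrors the >95% golden-data accuracy target above, and 0.30 is an arbitrary placeholder to tune per task):

```python
from statistics import median


def batch_report(golden_accuracy, disagreement_rate, task_seconds, time_limit_seconds,
                 min_accuracy=0.95, max_disagreement=0.30):
    """Summarize one labeling batch and flag metrics that cross alert thresholds.

    golden_accuracy: accuracy of the batch's labels on the mixed-in golden items.
    disagreement_rate: fraction of data points with any labeler disagreement.
    task_seconds: time taken per task; time_limit_seconds: time allotted per task.
    """
    time_ratio = median(task_seconds) / time_limit_seconds
    alerts = []
    if golden_accuracy < min_accuracy:
        alerts.append(f"golden accuracy {golden_accuracy:.1%} is below {min_accuracy:.1%}")
    if disagreement_rate > max_disagreement:
        alerts.append(f"disagreement rate {disagreement_rate:.1%} is above {max_disagreement:.1%}")
    # Time alone is not a quality signal: a ratio near 1.0 may only mean that
    # labelers fill the time they are given, so it is reported but never alerts.
    return {
        "golden_accuracy": golden_accuracy,
        "disagreement_rate": disagreement_rate,
        "median_time_ratio": round(time_ratio, 2),
        "alerts": alerts,
    }
```

Any non-empty "alerts" list is the cue to revisit the labeling instructions, as described in the second point of this list.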

Conclusion

Data labeling may seem like an insignificant part of machine learning, but it is essential for success. In this article, we listed some methods to measure label quality and some methods to improve it that have worked well in practice. These principles will help ensure that you can spend more time designing and building cool machine learning models while labels, the fuel powering these models, continue to be of the best quality.

References:

- https://en.wikipedia.org/wiki/Fleiss%27_kappa

- https://labelyourdata.com/articles/data-labeling-quality-and-how-to-measure-it

- https://scikit-learn.org/stable/modules/classes.html#module-sklearn.metrics


Published via Towards AI

