AI Drift In Retrieval Augmented Generation — AND — How To Control It!

Last Updated on January 5, 2024 by Editorial Team

Author(s): Alden Do Rosario

Originally published on Towards AI.

This week, I got a strange email from a customer.

Hey guys — I’m noticing that the quality of the responses has been degrading in the AI over the last few days. How is this possible?

Everything was fine a few weeks ago. But now the same questions I was asking before are giving bad responses.

To explain what he means:

He is suggesting that the responses from the Retrieval Augmented Generation (RAG) pipeline have been drifting over the last couple of weeks.

In other words, things were perfectly fine a while back, with responses that made him happy, and then they progressively started getting worse.

This surprised me, because one of the strengths of retrieval augmented generation (RAG) is that its responses are fairly stable compared with other AI systems, such as predictive systems.

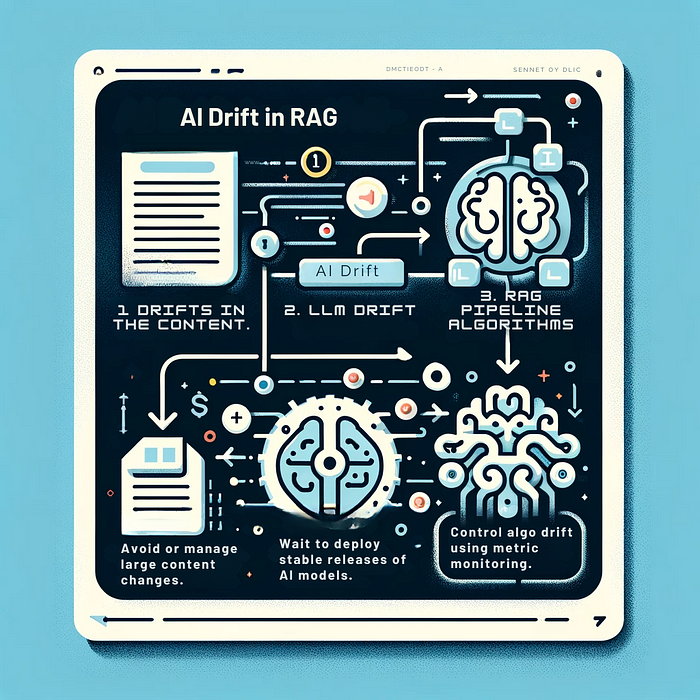

So in this blog post, I will walk through AI drift, how it shows up in RAG pipelines, and, more importantly, how to control it.

What Is AI Drift?

AI drift, as our friends at IBM describe it, is when the performance of AI responses slowly degrades over time:

Model drift is a phenomenon in machine learning when the statistical properties (distribution) of data change over time. This shift can lead to reduced accuracy, degraded model performance, and unexpected outcomes.

Historically, drift was associated mostly with predictive AI, such as the e-commerce systems you are familiar with that predict how likely you are to do something.

IBM’s classic example describes a predictive AI that is trained on one set of data but, once deployed in production, sees a very different set of signals, learns from that data over time, and starts drifting.

Just for discussion purposes, let’s say your predictive AI is trained on men, and then slowly, over time, as you deploy it in production, it gets used more and more by women.

So now, suddenly, the predictive behavior is drastically different because, as you know, Men are from Mars, and Women are from Venus. (Sorry — I don’t know why I had to throw that in there!)
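
For readers who want to see what “the distribution changed” looks like in numbers, here is a minimal sketch of one common drift statistic, the Population Stability Index (PSI), applied to the men/women example. The counts are made up, and the 0.2 alert threshold is a widely used rule of thumb, not a standard.

```python
import math


def psi(expected: dict[str, int], actual: dict[str, int], eps: float = 1e-6) -> float:
    """Population Stability Index between two categorical distributions.

    PSI = sum over categories of (a - e) * ln(a / e), where e and a are the
    category shares in the baseline (training) and current (production) data.
    """
    categories = set(expected) | set(actual)
    e_total = sum(expected.values())
    a_total = sum(actual.values())
    score = 0.0
    for c in categories:
        # Clamp shares away from zero so the logarithm stays finite.
        e = max(expected.get(c, 0) / e_total, eps)
        a = max(actual.get(c, 0) / a_total, eps)
        score += (a - e) * math.log(a / e)
    return score


# Hypothetical counts: trained mostly on men, later used mostly by women.
training = {"men": 900, "women": 100}
production = {"men": 300, "women": 700}

score = psi(training, production)
print(f"PSI = {score:.2f}", "drift!" if score > 0.2 else "stable")
```

Identical distributions score 0; the further production data moves from training data, the larger the index.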

What Is AI Drift In RAG?

In RAG, no machine learning happens at runtime in production. The pipeline simply retrieves snippets from your “ground truth” knowledge and passes them to an LLM like ChatGPT to generate the response.
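
To make “no runtime learning” concrete, here is a toy sketch of the retrieve-then-generate flow. It assumes a bag-of-words cosine similarity in place of a real embedding model and vector database, and it stops at building the prompt that would be sent to the LLM. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    # Toy "embedding": lowercase word counts (stand-in for a real embedding model).
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    # Rank every chunk by similarity to the query and keep the top k.
    q = vectorize(query)
    return sorted(chunks, key=lambda c: cosine(q, vectorize(c)), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    # The LLM only sees these snippets; nothing is learned at runtime.
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


knowledge_base = [
    "The Pro plan costs $49 per month.",
    "Support is available Monday to Friday.",
    "The Basic plan costs $19 per month.",
]
question = "How much is the Pro plan?"
print(build_prompt(question, retrieve(question, knowledge_base)))
```

Because the model itself never changes, the answer can only move if the knowledge base, the LLM, or the retrieval logic changes, which are exactly the three sources discussed next.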

So why would there be AI drift in a retrieval augmented generation pipeline?

To get to the bottom of this question, let’s look at the three main sources of AI drift, including the one from the formerly unhappy customer this week.

Source № 1: Drifts In The Content.

In the case of our unhappy customer, it turned out that he had been continuously adding content to and deleting content from his knowledge base.

As you know, this content gets added to the vector database (like Pinecone) and is used to generate the response.

So his content, and therefore his vector database, changed dramatically from day to day.

And due to this, his responses started drifting from what they were at the start of the week.

When we dug into it, it turned out that that was the root cause. As soon as that was identified and he fixed his content, his answers became excellent again, and he went from being an unhappy customer to being a happy customer.
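
A quick way to spot content drift like this is to replay the same “golden” question against two snapshots of the knowledge base and compare what gets retrieved. This sketch assumes a toy keyword-overlap retriever and made-up documents; a real pipeline would query its vector database instead.

```python
import re


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_k(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    # Score each document by how many query words it shares (toy retriever).
    q = tokens(query)
    ranked = sorted(docs, key=lambda doc_id: len(q & tokens(docs[doc_id])), reverse=True)
    return ranked[:k]


def jaccard(a: list[str], b: list[str]) -> float:
    # 1.0 means the same documents were retrieved; 0.0 means none in common.
    sa, sb = set(a), set(b)
    return len(sa & sb) / len(sa | sb) if sa | sb else 1.0


monday = {
    "pricing": "Pro plan pricing is $49 per month",
    "faq": "Frequently asked questions about the Pro plan",
    "careers": "We are hiring engineers",
}
# By Friday the pricing page was deleted and a blog post was added.
friday = {
    "faq": "Frequently asked questions about the Pro plan",
    "careers": "We are hiring engineers",
    "blog": "Our Pro plan launch party recap",
}

golden_question = "What is the Pro plan pricing?"
before, after = top_k(golden_question, monday), top_k(golden_question, friday)
print(before, after, f"overlap={jaccard(before, after):.2f}")
```

Run over a few dozen golden questions, a falling overlap score flags that the knowledge base, not the model, is what moved.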

How To Control Content Drift?

Controlling content drift is no different from classic CMS (content management system) and knowledge change management.

Think of it almost like a GitHub repo that keeps changing.

Hopefully, whether you built your RAG pipeline yourself (please stop and read this!) or are using a good RAG SaaS platform, it lets you control content changes and the associated CDC (change data capture).

Believe it or not, this is a very tricky aspect of RAG pipelines. And if you built your own RAG pipeline using something like LangChain (I hope not!), you will run painfully into the content-management and change-data-capture problems that come with your knowledge base.
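
Treating the knowledge base like a repo can start as simply as fingerprinting each document and diffing snapshots, so you know exactly which documents to re-embed or delete from the vector index. This is a minimal sketch, assuming documents are keyed by a stable ID such as a URL; the function names are hypothetical and this is not any particular platform’s CDC implementation.

```python
import hashlib


def snapshot(docs: dict[str, str]) -> dict[str, str]:
    # Map each document ID to a SHA-256 fingerprint of its content.
    return {doc_id: hashlib.sha256(text.encode("utf-8")).hexdigest() for doc_id, text in docs.items()}


def diff(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    # Classify IDs the way a change-data-capture step would.
    return {
        "added": sorted(new.keys() - old.keys()),
        "deleted": sorted(old.keys() - new.keys()),
        "modified": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }


v1 = snapshot({"/pricing": "Pro: $49/month", "/faq": "Q: Refunds? A: 30 days"})
v2 = snapshot({"/pricing": "Pro: $59/month", "/manual-v2": "Product manual, version 2"})

changes = diff(v1, v2)
print(changes)
```

In this setup, “added” and “modified” IDs would be re-chunked and re-embedded, “deleted” IDs removed from the index, and each snapshot kept so you can compare or roll back, much like a commit history.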

Just think about it:

- What happens if the pricing on your website changes?

- Or if there is an update to your FAQs on your website?

- Or if there is a new version of your product manuals?

Is your RAG chatbot keeping pace with these changes, and is it in sync with your company’s knowledge base?

If not, you have a potential compliance and customer experience problem on your hands. (Just imagine your chatbot giving one price to the customer while the website says something totally different!)
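
One way to answer “is it in sync?” is a freshness check that compares when each source page last changed with when the pipeline last indexed it. This sketch assumes you can obtain a last-modified time per source (for example from your CMS) and that your pipeline records an indexing time per document; the data and function name are hypothetical.

```python
from datetime import datetime, timezone


def stale_documents(source_modified: dict[str, datetime], indexed_at: dict[str, datetime]) -> list[str]:
    """Return IDs whose source changed after they were last indexed, or that were never indexed."""
    stale = []
    for doc_id, modified in source_modified.items():
        last_indexed = indexed_at.get(doc_id)
        if last_indexed is None or modified > last_indexed:
            stale.append(doc_id)
    return sorted(stale)


def ts(day: int) -> datetime:
    return datetime(2024, 1, day, tzinfo=timezone.utc)


source = {"/pricing": ts(4), "/faq": ts(1), "/manual-v3": ts(3)}
index = {"/pricing": ts(2), "/faq": ts(2)}
print(stale_documents(source, index))
```

Here the pricing page changed after it was indexed and the new manual was never indexed, which is exactly the “chatbot quotes the old price” situation.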

Source № 2: LLM Drift.

Whenever the ChatGPT API or whatever LLM API you are using is upgraded, you can expect AI drift to happen.

For example, when OpenAI released the June 13th version of GPT-4 (gpt-4-0613) and we immediately upgraded to it, the AI responses started going nuts, with English questions being answered in Spanish.

This led to all sorts of craziness and 3 days of firefighting to figure out what was wrong with the RAG pipelines.

In our experience, every time OpenAI releases a new version (including the recent November 6th version), the API and LLM go a little wild, and it takes OpenAI a couple of days to stabilize the release.
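
A regression like “English question, Spanish answer” can be caught automatically by replaying a fixed set of test questions after any model change and asserting basic invariants, such as answering in the question’s language. This sketch uses a deliberately crude stopword heuristic and made-up strings to illustrate the idea; a production check would use a proper language-identification model.

```python
import re

# Tiny, illustrative stopword lists (an assumption, not a real language detector).
STOPWORDS = {
    "en": {"the", "is", "and", "to", "of", "what", "how", "you", "your", "in"},
    "es": {"el", "la", "es", "y", "de", "que", "los", "las", "en", "su", "para"},
}


def guess_language(text: str) -> str:
    words = re.findall(r"[a-záéíóúñü]+", text.lower())
    # Count how many words hit each language's stopword list; highest count wins.
    scores = {lang: sum(w in sw for w in words) for lang, sw in STOPWORDS.items()}
    return max(scores, key=scores.get)


def language_mismatch(question: str, answer: str) -> bool:
    # Flag responses that come back in a different language than the question.
    return guess_language(question) != guess_language(answer)


q = "What is the refund policy for your product?"
a = "La política de reembolso es de 30 días para todos los productos."
print(language_mismatch(q, a))
```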

How To Control LLM Drift?

Do NOT immediately upgrade to the latest API.

If AI drift and stability are important to you, let OpenAI take a few days to stabilize the latest versions.

Depending on your production use cases, you can decide whether to upgrade within the next few days or to wait for a fully stable version.

For old-timers in the DevOps world, this is very familiar territory. We wait for stable versions of Debian OS before we upgrade production workloads. Upgrading AI APIs and models is no different.
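
In practice, not upgrading immediately usually means pinning a dated model snapshot rather than a floating alias, the same way you would pin an OS release. This is a minimal sketch of a config guard, assuming OpenAI-style names where dated snapshots end in a four-digit date code (e.g. `gpt-4-0613`, `gpt-4-1106-preview`) while aliases such as `gpt-4` can be repointed by the provider to a newer snapshot. The regex and function names are hypothetical.

```python
import re

# Dated snapshots stay fixed; bare aliases may move to a newer snapshot.
SNAPSHOT_PATTERN = re.compile(r"-\d{4}(-preview)?$")


def is_pinned(model: str) -> bool:
    return bool(SNAPSHOT_PATTERN.search(model))


def check_model_config(config: dict) -> str:
    # Refuse to start with a floating alias, so upgrades happen only on purpose.
    model = config["model"]
    if not is_pinned(model):
        raise ValueError(f"{model!r} is a floating alias; pin a dated snapshot instead")
    return model


production_config = {"model": "gpt-4-0613", "temperature": 0}
print(check_model_config(production_config))
```

Upgrading then becomes an explicit config change you can test and roll back, rather than something that happens to you overnight.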

Case in point: We waited a MONTH to upgrade to GPT-4-turbo, even though it is much cheaper and better.

We used that time to test how the new API release affected every part of the system, because features like anti-hallucination, citations, and query intent are very important to our business customers.
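
That kind of testing can end in a simple go/no-go gate: replay the same golden question set on the current and the candidate model, score both, and hold the upgrade if any metric regresses beyond a tolerance. The metric names and scores below are hypothetical, and how you compute each score is up to your own evaluation setup.

```python
def regressions(current: dict[str, float], candidate: dict[str, float], tolerance: float = 0.02) -> list[str]:
    """Return metrics where the candidate is worse than current by more than `tolerance`.

    Assumes every metric is "higher is better" and scored on a 0-1 scale.
    """
    return sorted(m for m in current if candidate.get(m, 0.0) < current[m] - tolerance)


# Hypothetical scores from replaying the same golden question set on both models.
current_model = {"anti_hallucination": 0.96, "citation_accuracy": 0.91, "intent_accuracy": 0.88}
candidate_model = {"anti_hallucination": 0.97, "citation_accuracy": 0.84, "intent_accuracy": 0.89}

failed = regressions(current_model, candidate_model)
print("hold upgrade:" if failed else "safe to upgrade", failed)
```

A candidate that is better overall but worse on one critical metric (here, citations) still gets held, which matches the priorities described above.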

Source № 3: RAG Pipeline Algorithms.

Your RAG pipeline will have many internal algorithms that affect the AI responses.

At a minimum:

- the way the data is being parsed

- the way the data is being chunked

- the way the query intent is being detected

- the algorithm used for the vector database search

- the algorithm for the chunk re-ranking

- the algorithm for the prompt engineering

All of these are internal sources of potential drift in a RAG pipeline.

Just think about it: if the way the vector database is queried or the way chunks are re-ranked changes, you can expect significant changes in the responses.
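
One way to make these internal sources of drift traceable is to fingerprint the pipeline configuration and log the fingerprint with every response, so a change in answers can be matched to a change in settings. The configuration keys and values below are hypothetical placeholders for whatever your pipeline actually exposes.

```python
import hashlib
import json


def pipeline_fingerprint(config: dict) -> str:
    # Canonical JSON (sorted keys, fixed separators) so equal configs hash identically.
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


config_v1 = {
    "parser": "html-to-text",
    "chunk_size": 512,
    "chunk_overlap": 64,
    "query_intent_model": "intent-v3",
    "vector_search": {"metric": "cosine", "top_k": 8},
    "reranker": "cross-encoder-v1",
    "prompt_template": "answer-with-citations-v7",
}
# Someone "just" bumps the chunk size.
config_v2 = {**config_v1, "chunk_size": 1024}

print(pipeline_fingerprint(config_v1), pipeline_fingerprint(config_v2))
```

When answers start drifting, grouping logged responses by fingerprint shows immediately whether the drift began with a pipeline change.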

How To Control RAG Pipeline Drift?

By tracking internal evaluation metrics that continuously measure the efficacy of your RAG pipeline and the factors that matter most to it.

Recently, several frameworks have emerged, such as Tonic Validate, Giskard, and Ragas, that aim to compute internal efficacy metrics on an ongoing basis.

These metrics could be about context efficacy, anti-hallucination scores, answer relevancy, racial bias scores, and so on.
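
Frameworks like these typically score answers with LLM judges or embeddings; the sketch below is not their API. It is a deliberately crude, hypothetical proxy for an anti-hallucination score: the share of an answer’s content words that also appear in the retrieved context. The stopword list and example strings are assumptions.

```python
import re

STOPWORDS = {"the", "a", "an", "is", "are", "of", "to", "and", "for", "per", "it", "in"}


def content_words(text: str) -> set[str]:
    # Keep "$" so prices like "$49" survive as single tokens.
    return {w for w in re.findall(r"[a-z0-9$]+", text.lower()) if w not in STOPWORDS}


def groundedness(answer: str, contexts: list[str]) -> float:
    """Share of the answer's content words found in the retrieved context (0 to 1)."""
    words = content_words(answer)
    if not words:
        return 1.0
    supported = set().union(*(content_words(c) for c in contexts))
    return len(words & supported) / len(words)


context = ["The Pro plan costs $49 per month and includes priority support."]
print(groundedness("The Pro plan costs $49 per month.", context))
print(groundedness("The Pro plan costs $99 per month with a free trial.", context))
```

The invented price and free trial pull the second answer’s score down; a real metric would be far more robust, but the point is that every answer gets a number you can track.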

Then, just like any other DevOps metric, you would monitor these RAG pipeline metrics to see whether they drift over time.
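
Here is a minimal sketch of that monitoring, assuming you already compute one score per day (for example, average answer relevancy over a fixed set of test questions). The baseline length, window, and 0.05 threshold are arbitrary illustrative choices, and the scores are made up.

```python
from statistics import mean


def drift_alerts(daily_scores: list[float], baseline_days: int = 7, window: int = 3, max_drop: float = 0.05) -> list[int]:
    """Return day indices where the rolling mean falls more than `max_drop` below baseline.

    The baseline is the mean of the first `baseline_days` scores; rolling windows
    start only after the baseline period so the two never overlap.
    """
    baseline = mean(daily_scores[:baseline_days])
    alerts = []
    for day in range(baseline_days + window - 1, len(daily_scores)):
        rolling = mean(daily_scores[day - window + 1 : day + 1])
        if rolling < baseline - max_drop:
            alerts.append(day)
    return alerts


# Hypothetical daily answer-relevancy scores slowly sliding downward.
scores = [0.90, 0.91, 0.89, 0.90, 0.92, 0.90, 0.91, 0.90, 0.88, 0.86, 0.84, 0.82, 0.80, 0.79]
print(drift_alerts(scores))
```

A rolling mean smooths out single bad days, so the alert fires on a sustained slide, which is what the customer in this story was experiencing.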

Conclusion

Yes, AI drift in retrieval augmented generation pipelines can be a real issue, so monitoring the stability and resiliency metrics of the pipeline is critical.

And as I indicated, if you built your own RAG pipeline, tough luck: you will have to build this into your system yourself.

Hopefully, you took the smart route, and whatever RAG SaaS you are using will deal with this for you.

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.