Inside MemGPT: An LLM Framework for Autonomous Agents Inspired by Operating Systems Architectures

Last Updated on January 10, 2024 by Editorial Team

Author(s): Jesus Rodriguez

Originally published on Towards AI.

I recently started an AI-focused educational newsletter, that already has over 160,000 subscribers. TheSequence is a no-BS (meaning no hype, no news, etc) ML-oriented newsletter that takes 5 minutes to read. The goal is to keep you up to date with machine learning projects, research papers, and concepts. Please give it a try by subscribing below:

TheSequence U+007C Jesus Rodriguez U+007C Substack

The best source to stay up-to-date with the developments in the machine learning, artificial intelligence, and data…

thesequence.substack.com

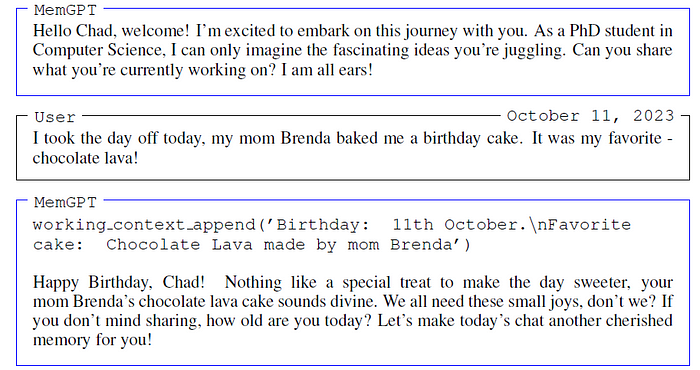

Autonomous agents are the automation paradigm of this AI era. In the last couple of years, we have seen a rapid evolution in terms of the concepts and frameworks for LLM-based autonomous agents. Among the recent frameworks/reference architectures in the autonomous agents space, MemGPT has rapidly become one of my favorites. The thing is that MemGPT is not even an agent framework at its core, but the design is so elegant that it encompasses most of the key capabilities of agent-based architectures.

When I say that MemGPT is not an agent-first framework, I am referring to the fact that MemGPT was designed to address some of the long context limitations in LLM interactions. The key issue with extending the context length in transformers is the significant increase in computational time and memory requirements. This challenge arises from the inherent design of the transformer architecture’s self-attention mechanism, which scales poorly with longer contexts. Consequently, designing architectures that can effectively manage longer contexts has become a crucial area of research.

However, there are obstacles beyond just the computational aspects. Even if these challenges were overcome, recent findings suggest that models with extended contexts do not always make effective use of the additional information provided. This inefficiency, coupled with the substantial resources required to train advanced language models, highlights the necessity for alternative methods to support extended contexts.

Addressing this need, MemGPT presents a unique solution by creating an environment that simulates an infinite context while still operating within the confines of fixed-context models. The inspiration for MemGPT comes from the concept of virtual memory paging, a technique that allows applications to handle data sets much larger than the available memory. This method has been adapted to enable large language models (LLMs) to manage extensive data effectively.

The Architecture

The architecture of MemGPT is reminiscent of an operating system, teaching LLMs to autonomously manage memory for seemingly boundless context handling. It incorporates a multi-level memory structure, distinguishing between two main types of memory: the main context and the external context. The main context, similar to a computer’s main memory or RAM, represents the standard fixed-context window in current language models. This is the immediate, accessible memory that the LLM processor uses during inference.

On the other hand, the external context, analogous to a computer’s disk storage, encompasses information outside the LLM’s fixed context window. This out-of-context data is not directly accessible to the LLM processor. To use this information during inference, it must be explicitly transferred into the main context.

MemGPT enables this efficient management of memory through specific function calls. These calls allow the LLM processor to handle its memory autonomously, seamlessly integrating external context into the main context as needed, without requiring direct user intervention. This system represents a significant step forward in the development of language models capable of handling extensive contexts more effectively and efficiently.

The following figure illustrates the core MemGPT architecture:

Let’s dive deeper into the key architecture components of MemGPT.

1) Main Context

The main context in MemGPT refers to the immediate input scope that the Large Language Model (LLM) handles, constrained by a maximum number of input tokens. This segment primarily encompasses two types of information. First, there’s the ‘system message’ or ‘preprompt’, which sets the interaction’s nature and guidelines for the system. The second portion of the main context is reserved for holding the data relevant to the ongoing conversation. This dual structure ensures that MemGPT maintains a clear understanding of the interaction’s purpose while dynamically managing the conversational content.

2) External Context

The external context in MemGPT functions similarly to disk storage in operating systems. It is an out-of-context storage area that lies beyond the immediate reach of the LLM processor. The information stored here isn’t directly visible to the LLM but can be brought into the main context through specific function calls. The external context’s storage can be configured for various tasks. For instance, in conversational agents, it could store complete chat logs for future reference. In document analysis scenarios, it might contain large document collections that MemGPT can access via paginated function calls, effectively managing large volumes of data outside the main context.

3) Self-Directed Editing and Retrieval

MemGPT has the capability to autonomously manage data movement between the main and external contexts. This process is facilitated through function calls generated by the LLM processor. MemGPT is designed to update and search its memory autonomously, tailoring its actions to the current context. It can decide when to transfer items between contexts and modify its main context to align with its evolving understanding of its objectives and responsibilities.

4) Control Flow and Function Chaining

In MemGPT, various events trigger LLM inference. These events can be user messages in chat applications, system messages like main context capacity warnings, user interactions (such as login alerts or document upload notifications), and timed events for regular operations without user input. MemGPT processes these events with a parser, converting them into plain text messages. These messages are then appended to the main context, forming the input for the LLM processor.

To illustrate these components in a real-world scenario, consider a user asking a question that requires information from a previous session, which is no longer in the main context. Although the answer is not immediately available in the in-context information, MemGPT can search its recall storage, containing past conversations, to retrieve the needed answer. This ability demonstrates MemGPT’s powerful context management and retrieval capabilities, enabling it to effectively handle queries that extend beyond the immediate conversational context.

Getting started with MemGPT is a matter of a few lines of code:

from memgpt import MemGPT

# Create a MemGPT client object (sets up the persistent state)

client = MemGPT(

quickstart="openai",

config={

"openai_api_key": "YOUR_API_KEY"

}

)

# You can set many more parameters, this is just a basic example

agent_id = client.create_agent(

agent_config={

"persona": "sam_pov",

"human": "cs_phd",

}

)

# Now that we have an agent_name identifier, we can send it a message!

# The response will have data from the MemGPT agent

my_message = "Hi MemGPT! How's it going?"

response = client.user_message(agent_id=agent_id, message=my_message)

MemGPT is one of the most elegant designs in autonomous agent architecture. Even if you don’t want to use the framework itself, most of its core ideas are incredibly relevant for agent architectures.

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.