Advanced Concepts in Python — I

Last Updated on January 6, 2023 by Editorial Team

Author(s): Ankan Sharma

Originally published on Towards AI the World’s Leading AI and Technology News and Media Company.

A detailed look into Iterators, Generators, Coroutines, and Iterator Protocol

Python has been my go-to language for over two years now. Generally, it's a pretty straightforward language, but some concepts can get mind-boggling at times.

So I started this blog series to simplify those concepts and code them up, to make them easier to follow.

Topics to be Covered

- Iterators

- Iterables

- Generators

- Coroutines

- Iterator Protocol

Iterators

Iterators in Python are objects that emit a stream of values one at a time. To get the next value in the stream, we call the object's “__next__()” method, usually through the built-in next() function.

You can think of an iterator in Python as a gun. The bullets are the values and the “__next__” method is the trigger. You pull the trigger (__next__), and it fires a bullet (a value).

Key points to remember about iterators

a. The iterator object remembers its state during the iteration.

b. Iterators can only move forward. Once the elements in the sequence are exhausted, calling __next__ raises a StopIteration exception.

c. An iterator cannot be reused once its elements are exhausted.

d. Loops can iterate over the iterator object.
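All four key points can be seen with the iterator that Python builds for a plain list. This minimal sketch uses only built-ins. The names `numbers`, `it` and `remaining` are illustrative.

```python
# A list is iterable; iter() returns an iterator over it
numbers = [10, 20, 30]
it = iter(numbers)

# (a) The iterator remembers its position between calls
print(next(it))  # 10
print(next(it))  # 20

# (d) A loop can consume the same iterator; it picks up after 20
remaining = []
for n in it:
    remaining.append(n)
print(remaining)  # [30]

# (b) It only moves forward: asking for more raises StopIteration
try:
    next(it)
except StopIteration:
    print("StopIteration: no more values")

# (c) An exhausted iterator cannot be reused; it yields nothing
print(list(it))  # []
```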

In cell 1, I create a class “CustomIterator” and add the “__iter__” and “__next__” methods to it. __iter__ returns the instance itself, and __next__ keeps track of the state and returns the next element.

In cell 6, I iterate over the first four elements. When I later run a for loop on the same object in cell 7, it starts from the 5th element and iterates to the last. So iterator objects can’t be reused: they never rewind.
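The notebook cells are not reproduced here, so the following is a hypothetical reconstruction of “CustomIterator” and not the original code. It assumes the iterator counts from 1 up to a `limit`. The attribute names `limit` and `current` are illustrative. It repeats the cell 6 / cell 7 experiment: take four elements by hand, then loop over the same object.

```python
class CustomIterator:
    """Hypothetical iterator that counts from 1 up to `limit`."""

    def __init__(self, limit):
        self.limit = limit
        self.current = 0  # state kept between calls to __next__

    def __iter__(self):
        # An iterator returns itself from __iter__
        return self

    def __next__(self):
        if self.current >= self.limit:
            raise StopIteration  # signal that the stream is exhausted
        self.current += 1
        return self.current


counter = CustomIterator(7)

# Take the first four elements by hand
first_four = [next(counter) for _ in range(4)]
print(first_four)  # [1, 2, 3, 4]

# A for loop on the same object resumes at the 5th element
rest = []
for value in counter:
    rest.append(value)
print(rest)  # [5, 6, 7]

# A second pass finds nothing: the iterator is used up
print(list(counter))  # []
```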

Built-in functions like zip and enumerate return iterator objects, and so does open(): a file object is an iterator over its lines.

z = zip(["asd", "sdf"], [1, 2])
next(z)

## Output
('asd', 1)

Iterables

Iterables are objects in Python whose elements can be iterated over by loops. Python's built-in container types are iterables.

Lists, tuples, sets, and dictionaries (as well as strings) are all iterables.

lst = [1, 2, 3, 4, 5]
for i in lst:
    print(i)

## Output
1
2
3
4
5

How to check if an Object is Iterable or Not?

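An iterable class typically defines an “__iter__()” method that creates and returns an iterator object. A quick way to check whether an object is iterable is to call its “__dir__()” method (or the built-in dir()) and look for “__iter__” in the returned list. This check can miss objects that only define __getitem__, which are iterable too. The most reliable test is to call iter() on the object and see whether it raises a TypeError.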

lst = [1, 2, 3, 4, 5]
print(lst.__dir__())  # '__iter__' appears in this list

** All iterators are iterables, but not all iterables are iterators. For example, a list has “__iter__” but no “__next__”.
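The distinctions above can be checked in code. This is a minimal sketch. It uses the abstract base classes in `collections.abc` and an `is_iterable` helper based on iter(). Both the helper and the `OnlyGetItem` class are hypothetical names. `OnlyGetItem` shows why the dir() check is only a heuristic: it has no `__iter__`, yet iter() accepts it through the older sequence protocol.

```python
from collections.abc import Iterable, Iterator


def is_iterable(obj):
    """Return True if iter() accepts obj (the most reliable check)."""
    try:
        iter(obj)
    except TypeError:
        return False
    return True


class OnlyGetItem:
    """Hypothetical class with no __iter__, iterable via __getitem__."""

    def __getitem__(self, index):
        if index >= 3:
            raise IndexError  # tells iter() the sequence has ended
        return index * 10


lst = [1, 2, 3, 4, 5]
print(isinstance(lst, Iterable), isinstance(lst, Iterator))  # True False
print(isinstance(iter(lst), Iterator))                       # True

seq = OnlyGetItem()
print("__iter__" in dir(seq))  # False, yet...
print(is_iterable(seq))        # True
print(list(seq))               # [0, 10, 20]
print(is_iterable(42))         # False
```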

Generators

A generator function in Python is a function that produces a stream of values through the “yield” keyword. Calling it does not run the function body. It returns a generator iterator object, whose “__next__” method you call to get the next value. Each call runs the body until it reaches the next “yield”.

In the GIF above, you can think of the machine as a generator and the pieces of mail as values. Whenever “__next__” is called, it generates the next value.

Like iterators, generators are lazy: they produce values on demand instead of loading all the data into memory at once. That makes generators useful for handling large data, such as massive image datasets in deep learning.

In cell 1, I create a generator function “custom_generator” that squares each value in a range and yields the results one by one.

From cell 4 onward, I use a generator expression to get a stream of cubes.
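Since the cells themselves are not shown, here is a minimal sketch of what they describe. It assumes “custom_generator” takes the range size `n` as its only argument. That parameter is a hypothetical choice, and the original signature may differ.

```python
def custom_generator(n):
    """Hypothetical version: yields the square of each value in range(n)."""
    for i in range(n):
        yield i ** 2  # pause here and hand one value to the caller


squares = custom_generator(5)
print(type(squares).__name__)  # generator
print(next(squares))           # 0
print(next(squares))           # 1
print(list(squares))           # [4, 9, 16]  (the rest of the stream)

# Generator expression producing a stream of cubes
cubes = (x ** 3 for x in range(5))
print(next(cubes), next(cubes), next(cubes))  # 0 1 8
```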

Generator Expression

This is another way to implement a generator, in a single line. It looks like a list comprehension but uses parentheses instead of square brackets, and it evaluates lazily, just like a generator function.

# List comprehension
lst_comp = [x**3 for x in range(10)]
print(lst_comp)

# Generator expression
gen = (x**3 for x in range(10))
print(gen.__next__())
print(gen.__next__())
print(gen.__next__())

## Output
[0, 1, 8, ..., 729]
0
1
8

Without a generator function, the same squares could be computed like this:

def not_generator_function(n):
    square_list = []
    for i in range(n):
        square_list.append(i**2)  # store every square in the list
    return square_list

lst = not_generator_function(10)
print(lst)

## Output
[0, 1, 4, ..., 81]

Now imagine the range runs into the trillions. This function would try to build the whole list in memory at once and could exhaust the available memory. A generator function, which produces one value at a time, is the better choice.
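The memory argument is easy to see with sys.getsizeof. This sketch compares an eager list builder with a lazy generator. The names `squares_list` and `squares_gen` are hypothetical, and `squares_list` is a compact rewrite of not_generator_function. The exact byte counts depend on the Python version and platform, so only their relative sizes matter here.

```python
import sys


def squares_list(n):
    """Eager: builds every square in memory before returning."""
    return [i ** 2 for i in range(n)]


def squares_gen(n):
    """Lazy: produces one square at a time, on demand."""
    for i in range(n):
        yield i ** 2


n = 1_000_000
as_list = squares_list(n)
as_gen = squares_gen(n)

# The list's size grows with n; the generator object's size does not
print(sys.getsizeof(as_list))  # several megabytes on a 64-bit build
print(sys.getsizeof(as_gen))   # a small, fixed number of bytes

# Consuming either one gives the same total
print(sum(as_list) == sum(as_gen))  # True
```

sys.getsizeof counts only the list's own array of references, not the integer objects it points to, so the real gap is even larger than the printout suggests.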

Coroutines

Coroutines are functions whose execution can be paused midway and resumed later from the same point.

There are several use cases for coroutines, such as network calls, data preprocessing pipelines, and cooperative multitasking.

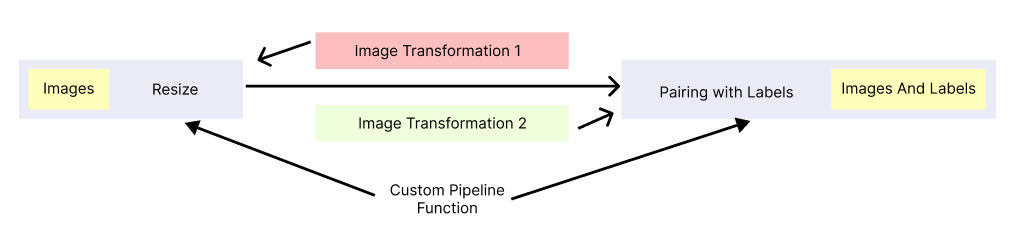

Let's take an example. You have a massive image dataset, and you want to apply several pre-processing steps to it.

The Custom Pipeline function takes the images and resizes them. It then pauses, and control moves to the “Image Transformation 1” and “Image Transformation 2” functions, which apply their transformations. The Custom Pipeline function then resumes and pairs the transformed images with their labels.

This is a practical scenario where coroutines can be used.

A generator-based coroutine uses “yield” to pause the function and “next” to resume its execution.

In the code above, when we call “next” on the coroutine object, execution starts and then pauses when it reaches a “yield”. Each further “next” call resumes execution until the following “yield”. When there is no “yield” left in the function, StopIteration is raised.
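Because the original code is not reproduced, this hypothetical `simple_coroutine` shows the same behavior. Nothing runs at creation, each next() runs up to the following yield, and StopIteration is raised once no yield is left.

```python
def simple_coroutine():
    """Hypothetical coroutine that pauses at each yield."""
    print("started")
    yield "first pause"
    print("resumed once")
    yield "second pause"
    print("resumed twice, no yields left")


co = simple_coroutine()  # creating it runs nothing yet
print(next(co))          # prints "started", then "first pause"
print(next(co))          # prints "resumed once", then "second pause"
try:
    next(co)             # prints "resumed twice, no yields left"
except StopIteration:
    print("StopIteration raised")
```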

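Coroutines also let us send values to the point where the function last paused. To pass a value, we call the coroutine's “send()” method (it is a method, not a keyword). The sent value becomes the result of the paused “yield” expression. “send()” with a value other than None only works once execution is paused at a “yield”, so a fresh coroutine must first be primed with “next()” (or “send(None)”).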
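Here is a minimal sketch of send() and priming, using a hypothetical `echo_adder` coroutine that adds a fixed offset to every value it receives. Sending a real value to a coroutine that has not reached its first yield raises a TypeError, which is why the next() priming call comes first.

```python
def echo_adder(offset):
    """Hypothetical coroutine: adds `offset` to every value sent to it."""
    result = None
    while True:
        value = yield result  # pause here; send() resumes with `value`
        result = value + offset


co = echo_adder(100)

# Sending a non-None value before the first yield is an error
try:
    co.send(1)
except TypeError as exc:
    print("TypeError:", exc)

next(co)           # prime: run up to the first yield (same as co.send(None))
print(co.send(1))  # 101
print(co.send(5))  # 105
co.close()         # finish the coroutine
```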

Now that we have a basic understanding of coroutines, let's create a pipeline with multiple coroutines working together. I created three coroutines and modified values throughout the pipeline by switching between them as required. The coroutines do not run simultaneously: control passes from one to another, which is what makes this cooperative multitasking.

“cor_main” is the main coroutine of the pipeline, and it takes the argument “a”. The coroutine pauses at “x = yield”. I then send it a value, and it yields “x+a”. This value is then passed through two other coroutines, “transformation1” and “transformation2”.

The transformed value is then sent back to the main coroutine at “z = yield”, and the final value yielded by the main coroutine is “x+a+z”.
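This is a sketch of the pipeline under stated assumptions. “cor_main” follows the structure described above: pause at x = yield, yield x+a, pause at z = yield, yield x+a+z. The bodies of “transformation1” (doubling) and “transformation2” (subtracting 3) are hypothetical, because the original transformations are not shown.

```python
def transformation1():
    """Hypothetical transformation: doubles the value it receives."""
    value = yield
    yield value * 2


def transformation2():
    """Hypothetical transformation: subtracts 3 from the value it receives."""
    value = yield
    yield value - 3


def cor_main(a):
    x = yield          # pause until a starting value is sent
    yield x + a        # hand x + a out for transformation
    z = yield          # pause until the transformed value comes back
    yield x + a + z    # final result


main = cor_main(5)
next(main)                # run to "x = yield"
partial = main.send(10)   # x = 10, yields x + a = 15

t1 = transformation1()
next(t1)                  # prime t1
step1 = t1.send(partial)  # 15 * 2 = 30

t2 = transformation2()
next(t2)                  # prime t2
step2 = t2.send(step1)    # 30 - 3 = 27

next(main)                # move main on to "z = yield"
final = main.send(step2)  # z = 27, yields 10 + 5 + 27
print(final)              # 42
```

Control hops from main to t1 to t2 and back to main. At any moment only one coroutine is running.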

yield from

Earlier, we discussed the “yield” keyword in detail in the context of generators. “yield from” is like “yield” on steroids: in a single statement, it delegates to another generator (or any iterable) and yields all of its values without an explicit loop.

In cell 2, you can see that instead of looping over the “yield_main” generator, I just use “yield from” to unpack it. “yield from” can also expand any iterable, as shown in cell 5.
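The sketch below contrasts the explicit loop with “yield from”. The body of “yield_main” is hypothetical, since the original cell is not shown. `with_loop`, `with_yield_from` and `expand_iterable` are illustrative names.

```python
def yield_main():
    """Hypothetical inner generator."""
    yield 1
    yield 2
    yield 3


def with_loop():
    # Re-yield every value by hand
    for value in yield_main():
        yield value


def with_yield_from():
    # Same result in one statement
    yield from yield_main()


def expand_iterable():
    # yield from works with any iterable, not just generators
    yield from ["a", "b"]
    yield from range(2)


print(list(with_loop()))        # [1, 2, 3]
print(list(with_yield_from()))  # [1, 2, 3]
print(list(expand_iterable()))  # ['a', 'b', 0, 1]
```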

In cell 7, I define a “data_sink” generator that receives values and prints them. The values come from a “data_source” function. “data_sink” is wrapped by a function that uses “yield from” to delegate to it, so “data_sink” acts as a subgenerator of that wrapper: values sent to the wrapper are forwarded straight to “data_sink”.
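This hypothetical sketch shows the “data_sink” setup. The wrapper name `delegator`, the values produced by `data_source`, and the `received` list are assumptions. `received` only exists so the result can be checked. Values sent to the wrapper pass through “yield from” to the subgenerator, and closing the wrapper also closes “data_sink”.

```python
received = []  # record of what the sink saw, so the result can be checked


def data_sink():
    """Subgenerator: receives values via send() and prints them."""
    while True:
        value = yield
        received.append(value)
        print(f"sink got: {value}")


def delegator():
    """Hypothetical wrapper: yield from forwards send() calls to data_sink."""
    yield from data_sink()


def data_source():
    """Hypothetical source of values."""
    yield from range(3)


pipe = delegator()
next(pipe)              # prime: runs into data_sink up to its first yield
for item in data_source():
    pipe.send(item)     # forwarded to data_sink's "value = yield"
pipe.close()            # also closes the subgenerator
```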

** “yield from” was added in Python 3.3 (PEP 380).

Iterator Protocol

Disclaimer: This section is optional. I found this topic interesting, so I thought I would share it with you.

The iterator protocol dictates how iterables and iterators work. “__iter__()” returns an iterator, “__next__()” returns the succeeding value, and “__next__()” raises StopIteration once the iterator is exhausted. For loops, comprehensions, and the unpacking of iterables all follow this protocol under the hood.
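To see that comprehensions and unpacking go through this protocol, the hypothetical classes below record every protocol call made on them. `LoggingIterable` and `LoggingIterator` are illustrative names. The iterable hands out a fresh iterator on each “__iter__” call, which also shows the iterable/iterator split in practice.

```python
class LoggingIterable:
    """Hypothetical iterable that records every protocol call made on it."""

    def __init__(self, items):
        self.items = list(items)
        self.calls = []

    def __iter__(self):
        self.calls.append("__iter__")
        return LoggingIterator(self)  # a fresh iterator each time


class LoggingIterator:
    """Iterator over a LoggingIterable; logs each __next__ call on its source."""

    def __init__(self, source):
        self.source = source
        self.index = 0

    def __iter__(self):
        return self

    def __next__(self):
        self.source.calls.append("__next__")
        if self.index >= len(self.source.items):
            raise StopIteration
        value = self.source.items[self.index]
        self.index += 1
        return value


data = LoggingIterable([1, 2])

doubled = [x * 2 for x in data]  # a comprehension drives the protocol
print(doubled)     # [2, 4]
print(data.calls)  # ['__iter__', '__next__', '__next__', '__next__']

data.calls.clear()
first, second = data  # unpacking drives it too
print(first, second)  # 1 2
print(data.calls)     # starts with '__iter__', followed by __next__ calls
```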

Fun fact: Python's for-in loop doesn’t use indexes to loop over iterables. To understand the concept better, let’s recreate a for loop without using a for statement, relying only on the iterator protocol.

In cell 1, I create a function “custom_loop” that takes an iterable as an argument and converts it to an iterator with “__iter__()”. Inside an infinite while loop, a try block calls “__next__()” to get the succeeding value. The loop breaks when StopIteration is raised.
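Here is a minimal sketch of “custom_loop” as described. The `action` parameter, which stands in for the loop body and defaults to print, is a hypothetical addition that makes the function reusable and testable.

```python
def custom_loop(iterable, action=print):
    """Hypothetical re-creation of a for loop using the iterator protocol."""
    iterator = iterable.__iter__()      # same as iter(iterable)
    while True:
        try:
            value = iterator.__next__()  # same as next(iterator)
        except StopIteration:
            break                       # the iterator is exhausted
        action(value)                   # the "loop body"


custom_loop([1, 2, 3])  # prints 1, 2 and 3 on separate lines

collected = []
custom_loop("abc", collected.append)
print(collected)  # ['a', 'b', 'c']
```

Only the __next__ call sits inside the try block. A StopIteration raised by the loop body itself is therefore not mistaken for the end of the iterator.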

The next cell is mostly the same, except that it uses “yield from” to iterate.
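The original cell is not shown, so this is only one plausible version of the “yield from” variant. `custom_loop_gen` is a hypothetical name. “yield from” performs the iter()/next()/StopIteration steps internally, and the caller drives the resulting generator with the same while/try pattern.

```python
def custom_loop_gen(iterable):
    """Hypothetical generator variant: yield from drives the iterator protocol."""
    yield from iterable  # calls iter() and next() internally, stops on StopIteration


gen = custom_loop_gen([10, 20, 30])
while True:
    try:
        value = next(gen)
    except StopIteration:
        break
    print(value)  # 10, then 20, then 30
```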

So, to drive the point home: the iterator protocol is the black magic that rules the iterator world of Python.

That’s the end of the first part of this series. In the next part, I will discuss first-class functions, higher-order functions, decorators, and context managers.


Life of a Programmer in a nutshell:

google_it() if stuck else continue

Advanced Concepts in Python — I was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.


Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.