Active Learning builds a valuable dataset from scratch

Last Updated on December 4, 2021 by Editorial Team

Author(s): Edward Ma

Originally published on Towards AI.

Introduction to Active Learning

Active Learning is a teaching strategy that engages learners (e.g. students) to participate actively in the learning process. Unlike in the traditional learning process, learners do not just sit and listen but work interactively with teachers. The pace of learning can be adjusted according to feedback from learners, which is why the feedback cycle is central to active learning.

If you are already familiar with Active Learning, you can jump to the last section on how to use the NLPatl (NLP Active Learning) Python package to achieve it.

Objective

Can we apply this framework to machine learning? We can, but we first need to clarify what the goal of active learning is. When training classification models, we usually lack labeled data at the very beginning. Of course, we can randomly pick records and label them, but labeling enough randomly chosen records is expensive.

The assumption of active learning is that we can estimate, in some way, which records are most representative and have subject matter experts (SMEs) label them. Since we only need the most representative records, we do not need a very large amount of labeled data. Machine learning models should be able to find patterns from those valuable records. This saves the money and time needed to acquire enough data to train your models.

So the key points are the strategy for estimating representative records and the input from SMEs.
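The cycle described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how NLPatl implements it: `active_learning_loop`, `score_fn` (how valuable a record looks for labeling) and `ask_expert` (standing in for the SME) are hypothetical names introduced for this example, and the toy scoring rule is an assumption.

```python
from typing import Callable, Dict, List


def active_learning_loop(
    pool: List[str],
    score_fn: Callable[[str, Dict[str, str]], float],
    ask_expert: Callable[[str], str],
    batch_size: int = 2,
    rounds: int = 2,
) -> Dict[str, str]:
    """Repeatedly pick the highest-scoring unlabeled records and have them labeled."""
    unlabeled = list(pool)
    labeled: Dict[str, str] = {}
    for _ in range(rounds):
        if not unlabeled:
            break
        # Rank the remaining records by how valuable they look right now.
        ranked = sorted(unlabeled, key=lambda r: score_fn(r, labeled), reverse=True)
        for record in ranked[:batch_size]:
            labeled[record] = ask_expert(record)  # the SME provides the label
            unlabeled.remove(record)
        # In a real setup, the model would be retrained on `labeled` here.
    return labeled


# Toy stand-ins (hypothetical): longer texts count as more valuable,
# and the "expert" labels a text positive if it contains "good".
texts = ["good", "bad movie", "a good long review", "meh"]
result = active_learning_loop(
    texts,
    score_fn=lambda text, labeled: len(text),
    ask_expert=lambda text: "positive" if "good" in text else "negative",
    batch_size=1,
    rounds=2,
)
print(result)
```

In practice, `score_fn` would be one of the sampling strategies discussed in the next section, and `ask_expert` would be a person labeling records interactively.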

Strategies

There are many strategies for estimating the value of records. I will introduce active learning with three of them, walking through the idea first and then giving examples one by one. Margin Sampling calculates the difference between the highest and second-highest probabilities. Entropy Sampling uses all output probabilities (Margin Sampling uses only the two largest) to identify the most uncertain records. The last method differs from the other two in that it uses unsupervised learning to identify the most valuable records. You may call it Clustering Sampling.

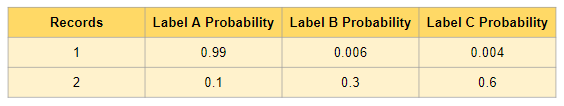

To start the explanation, take the sample records shown here, and suppose a classification model has predicted class probabilities for each of them.

Margin Sampling

Margin Sampling calculates the difference between the highest and second-highest probabilities. The assumption is that if the difference between the two highest probabilities is small, the classification model has higher uncertainty. From the above example, Record 2 has a smaller margin (0.3 = 0.6 - 0.3) than Record 1 (0.984 = 0.99 - 0.006). Therefore, the margin sampling approach picks Record 2 rather than Record 1 for labeling.
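Margin sampling can be sketched as follows. The two largest probabilities of each record come from the example above; the third value in each row is an assumption, chosen so that the row sums to 1. The record with the smallest margin is the one the model is least sure about, so it is selected first. The function names are made up for this illustration.

```python
from typing import List


def margin(probabilities: List[float]) -> float:
    """Difference between the highest and second-highest class probability."""
    top, second = sorted(probabilities, reverse=True)[:2]
    return top - second


def pick_by_margin(records: List[List[float]], num_sample: int = 1) -> List[int]:
    """Return indices of the records with the smallest margins (most uncertain first)."""
    order = sorted(range(len(records)), key=lambda i: margin(records[i]))
    return order[:num_sample]


# Assumed class probabilities for the two example records.
predictions = [
    [0.99, 0.006, 0.004],  # Record 1: the model is confident
    [0.6, 0.3, 0.1],       # Record 2: the model is unsure
]
for i, probs in enumerate(predictions, start=1):
    print(f"Record {i}: margin = {margin(probs):.3f}")
print("Selected for labeling: Record", pick_by_margin(predictions)[0] + 1)
```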

Entropy Sampling

Entropy Sampling uses all output probabilities to identify the most uncertain records. Rather than just calculating the difference between the two highest probabilities, this approach calculates the entropy over all of them. From the above example, Record 2 has a higher entropy (0.898) than Record 1 (0.063). Therefore, the entropy sampling approach picks Record 2 rather than Record 1 for labeling.
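Here is a small sketch of the entropy calculation. It assumes three classes with probabilities (0.99, 0.006, 0.004) and (0.6, 0.3, 0.1), and the natural logarithm; under those assumptions it reproduces the values 0.063 and 0.898 quoted above. The function names are made up for this illustration.

```python
import math
from typing import List


def entropy(probabilities: List[float]) -> float:
    """Shannon entropy in nats; zero probabilities contribute nothing."""
    return -sum(p * math.log(p) for p in probabilities if p > 0)


def pick_by_entropy(records: List[List[float]], num_sample: int = 1) -> List[int]:
    """Return indices of the records with the highest entropy (most uncertain first)."""
    order = sorted(range(len(records)), key=lambda i: entropy(records[i]), reverse=True)
    return order[:num_sample]


# Assumed class probabilities for the two example records.
predictions = [
    [0.99, 0.006, 0.004],  # Record 1: the model is confident
    [0.6, 0.3, 0.1],       # Record 2: the model is unsure
]
for i, probs in enumerate(predictions, start=1):
    print(f"Record {i}: entropy = {entropy(probs):.3f}")
print("Selected for labeling: Record", pick_by_entropy(predictions)[0] + 1)
```

Unlike the margin, the entropy changes when probability mass moves among the lower-ranked classes, which is why it uses more of the model output.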

Clustering Sampling

Clustering Sampling is different from the above strategies. Margin Sampling and Entropy Sampling both require an initial labeled dataset and a simple classification model trained on it. If you do not have any labeled data at the beginning, you may consider using this approach. Basically, it leverages transfer learning to convert texts to embeddings (or vectors) and feeds them into an unsupervised algorithm to find the most representative records. For example, you may apply KMeans to find clusters and label records from each of them.
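To make the idea concrete, here is a dependency-free sketch. It is not NLPatl's implementation: the 2-D vectors are toy stand-ins for real text embeddings, the tiny k-means is a simplified version seeded with the first k points, and treating "the record closest to each centroid" as the most representative one is an assumption made for this example.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def distance(a: Vector, b: Vector) -> float:
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def kmeans(points: List[Vector], k: int, iterations: int = 10) -> List[List[float]]:
    """Tiny k-means; the first k points seed the centroids to keep the run deterministic."""
    centroids = [list(p) for p in points[:k]]
    for _ in range(iterations):
        clusters: List[List[Vector]] = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: distance(p, centroids[c]))
            clusters[nearest].append(p)
        for c, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids


def pick_representatives(points: List[Vector], k: int) -> List[int]:
    """For each cluster, return the index of the point closest to its centroid."""
    centroids = kmeans(points, k)
    return [min(range(len(points)), key=lambda i: distance(points[i], c)) for c in centroids]


# Toy 2-D "embeddings" (assumed values) forming two obvious groups.
embeddings = [
    (0.0, 0.0), (0.2, 0.1), (0.1, 0.3),   # group A
    (5.0, 5.0), (5.2, 4.9), (4.9, 5.3),   # group B
]
print(pick_representatives(embeddings, k=2))
```

The returned indices point at one record per cluster, which would then be handed to an SME for labeling. No labels or classifier are needed at any point.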

Python code by NLPatl

Luckily, you do not need to implement the framework yourself: the NLPatl Python package is ready to use. Now that you have an idea of active learning, it is time to get your hands dirty. I will walk through how you can apply active learning in NLP with a few lines of code. You can visit this notebook for the full version of the code.

The following sample code shows how to use Entropy Sampling to estimate the most valuable records.

# Import path is an assumption; check it against your installed NLPatl version
from nlpatl.learning import EntropyLearning

# train_texts and train_labels are your own lists of texts and their labels

# Initialize the entropy sampling approach to estimate the most valuable data for labeling
learning = EntropyLearning()

# Initialize a BERT model for converting text to vectors
learning.init_embeddings_model(
    'bert-base-uncased', return_tensors='pt', padding=True)

# Initialize Logistic Regression for classification
learning.init_classification_model(
    'logistic_regression')

# Train a simple classification model first
learning.learn(train_texts, train_labels)

# Label data in the notebook interactively
learning.explore_educate_in_notebook(train_texts, num_sample=2)

The following sample code shows how to use Clustering Sampling to estimate the most valuable records.

# Import path is an assumption; check it against your installed NLPatl version
from nlpatl.learning import ClusteringLearning

# train_texts is your own list of unlabeled texts

# Initialize the clustering sampling approach to estimate the most valuable data for labeling
learning = ClusteringLearning()

# Initialize a BERT model for converting text to vectors
learning.init_embeddings_model(
    'bert-base-uncased', return_tensors='pt', padding=True)

# Initialize KMeans for clustering
learning.init_clustering_model(
    'kmeans', model_config={'n_clusters': 3})

# Label data in the notebook interactively
learning.explore_educate_in_notebook(train_texts, num_sample=2)

Like to learn?

I am a Data Scientist in the Bay Area, focusing on the state of the art in Data Science and Artificial Intelligence, especially NLP and platform-related topics. Feel free to connect with me on LinkedIn or GitHub.

Active Learning builds a valuable dataset from scratch was originally published in Towards AI on Medium.
