A Practical Guide to Building GPT-2 with PyTorch (Part 2)

Last Updated on July 9, 2024 by Editorial Team

Author(s): Amit Kharel

Originally published on Towards AI.

This is the second part of the GPT-2 from scratch project. If you haven’t read the first part yet, I highly recommend getting familiar with the language model basics before continuing.

Build and Train GPT-2 (Part 1)

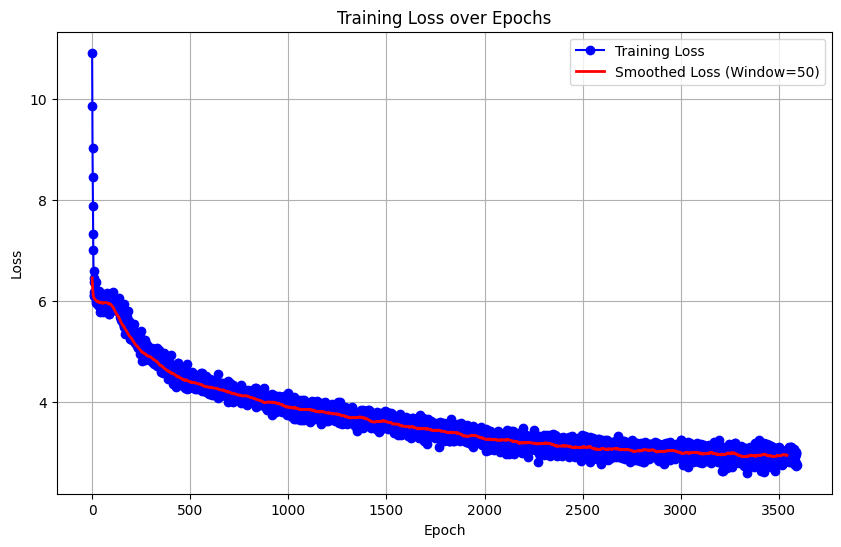

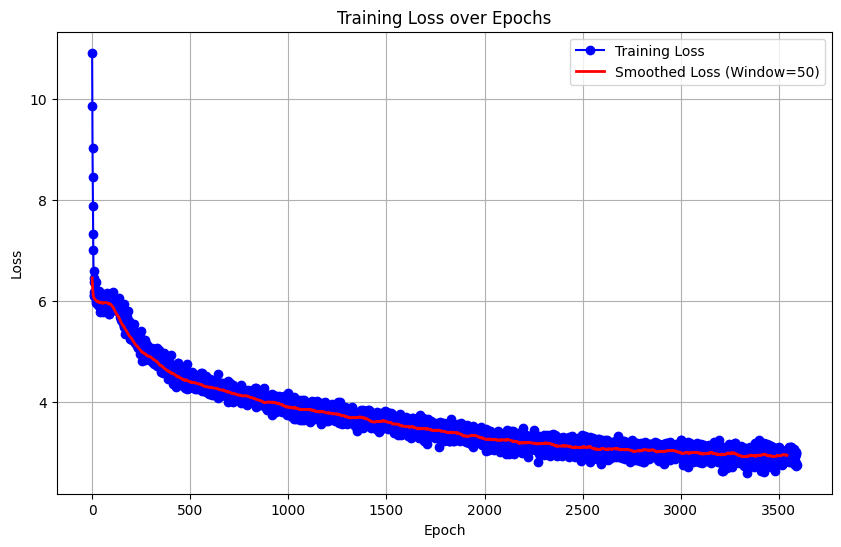

Final Loss:

In this section, we will add the GPT-2 parts one by one, then train and evaluate the model at each stage. Here’s how it goes:

a. Positional Encoding + Fully Connected Layer (NN)

b. (Masked) Self-Attention + Normalization

c. (Masked) Multi-Head Attention

d. Multiple GPT Decoder Blocks

e. Improving Tokenizer

f. Final GPT-2 Training
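To make the "(Masked)" in steps b and c concrete, here is a minimal sketch of single-head masked self-attention. It is not the author's implementation: the function name `causal_self_attention`, the plain weight matrices `w_q`, `w_k`, `w_v`, and the demo size `d_model = 16` are all assumptions made for illustration.

```python
import math
import torch
import torch.nn.functional as F


def causal_self_attention(x, w_q, w_k, w_v):
    """Single-head masked self-attention over x of shape (batch, seq_len, d_model)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    # Scaled dot-product scores, shape (batch, seq_len, seq_len)
    scores = q @ k.transpose(-2, -1) / math.sqrt(k.size(-1))
    seq_len = x.size(1)
    # Lower-triangular mask: position i may only attend to positions <= i
    mask = torch.tril(torch.ones(seq_len, seq_len, dtype=torch.bool))
    scores = scores.masked_fill(~mask, float("-inf"))
    weights = F.softmax(scores, dim=-1)  # masked positions get weight 0
    return weights @ v


torch.manual_seed(0)
d_model = 16  # hypothetical embedding size, chosen only for this demo
x = torch.randn(2, 5, d_model)
w_q, w_k, w_v = (torch.randn(d_model, d_model) for _ in range(3))

out = causal_self_attention(x, w_q, w_k, w_v)

# Replace the last token and recompute: earlier positions should be unaffected
x_changed = x.clone()
x_changed[:, -1, :] = torch.randn(2, d_model)
out_changed = causal_self_attention(x_changed, w_q, w_k, w_v)
print(torch.allclose(out[:, :-1], out_changed[:, :-1], atol=1e-5))
```

The final check is the point of the mask: since no position can look at tokens to its right, changing the last token leaves the outputs for all earlier positions unchanged, which is what lets the model be trained to predict the next token.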

To recap from the previous part, our model looks like this:

Simple Bi-Gram Model

Code:

    import torch.nn as nn
    import torch.nn.functional as F

    # used to define size of embeddings
    # (vocab_size is assumed to be defined already, e.g. by the tokenizer from Part 1)
    d_model = vocab_size

    class GPT(nn.Module):
        def __init__(self, vocab_size, d_model):
            super().__init__()
            self.wte = nn.Embedding(vocab_size, d_model)  # word token embeddings

        def forward(self, inputs, targets=None):
            logits = self.wte(inputs)  # dim -> batch_size, sequence_length, d_model
            loss = None
            if targets is not None:
                batch_size, sequence_length, d_model = logits.shape
                # to calculate loss for all token embeddings in a batch
                # kind of a requirement for cross_entropy
                logits = logits.view(batch_size * sequence_length, d_model)
                targets = targets.view(batch_size * sequence_length)
                loss = F.cross_entropy(logits, targets)
            return logits, loss

        def generate(self, inputs, max_new_tokens):
            # this will store…

Read the full blog for free on Medium.
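As a quick sanity check of the bigram model, the sketch below runs a forward pass on random token IDs and then samples a few new tokens. It makes several assumptions: `vocab_size = 65` is an arbitrary demo value, `BigramGPT` repeats the structure of the `GPT` class so the block runs on its own, and `sample_tokens` is a hypothetical sampling loop, not the author's truncated `generate` method.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

vocab_size = 65  # hypothetical vocabulary size, chosen only for this demo
d_model = vocab_size  # the bigram model emits one logit per vocabulary entry


class BigramGPT(nn.Module):
    """Same structure as the GPT class above, repeated so this block is self-contained."""

    def __init__(self, vocab_size, d_model):
        super().__init__()
        self.wte = nn.Embedding(vocab_size, d_model)  # word token embeddings

    def forward(self, inputs, targets=None):
        logits = self.wte(inputs)  # (batch_size, sequence_length, d_model)
        loss = None
        if targets is not None:
            b, t, c = logits.shape
            # cross_entropy expects (N, C) logits and (N,) targets
            loss = F.cross_entropy(logits.view(b * t, c), targets.view(b * t))
        return logits, loss


@torch.no_grad()
def sample_tokens(model, inputs, max_new_tokens):
    """Hypothetical sampling loop: append one sampled token per step."""
    for _ in range(max_new_tokens):
        logits, _ = model(inputs)
        probs = F.softmax(logits[:, -1, :], dim=-1)  # distribution at the last position
        next_token = torch.multinomial(probs, num_samples=1)  # (batch_size, 1)
        inputs = torch.cat([inputs, next_token], dim=1)
    return inputs


torch.manual_seed(0)
model = BigramGPT(vocab_size, d_model)
inputs = torch.randint(0, vocab_size, (4, 8))   # 4 sequences of 8 token IDs
targets = torch.randint(0, vocab_size, (4, 8))

logits, loss = model(inputs, targets)
generated = sample_tokens(model, inputs[:1], max_new_tokens=5)
print(logits.shape, loss.item(), generated.shape)
```

Because the model is untrained and the inputs are random, the loss value itself is not meaningful here; the useful part is confirming the tensor shapes and that generation extends the sequence by `max_new_tokens` tokens.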

