A Great Overview of Machine Learning Operations and How the MLFlow Project Made It Easy (Step By Step)

Last Updated on July 17, 2023 by Editorial Team

Author(s): Ashbab khan

Originally published on Towards AI.

development is a most important concept whether we talk about human evolution from hunter-gatherer to the modern human we see today. The same concept applies to the technologies such as Artificial Intelligence.

In the past, our focus is how to use Machine Learning to solve a problem, and almost a single person is handling all of the things, but today we see that the work is now divided. We now need a Data Scientist, Machine Learning Engineer, and Data Engineer due to the advancement in Technology.

Today making a production model is a challenging task, and we need to understand how we made it possible through Machine Learning Operations skills so in this article we are getting familiar with MLOps and MlFlow let’s get started.

What is MLOps in simple terms

???????????????????? in simple terms, is the operation skills on how to make your model into production, which will be retrained and effectively tracked

such as which experiment generates the highest accuracy model or the Lowest accuracy model is based on summary metrics upload, and we can easily see the type of hyperparameters used in the highest and lowest score model.

We can easily trace the experiment author and when it is created what is the output artifact of the component such as a pre-process component generating preprocessed data and what are the summary metrics created such as the accuracy or roc_score, etc.

How an end-to-end Machine Learning Pipeline in MLOps works.

Developing an ML model for production is a challenging task it requires a lot of different skill sets other than making a machine learning model because a model is useless if it can’t make it to production.

Machine Learning Operations Engineering is one of them that helps us do this tough job in less time and with fewer resources.

According to experts, almost 50 to 90% of the model doesn’t make it into production, and that’s the scariest part.

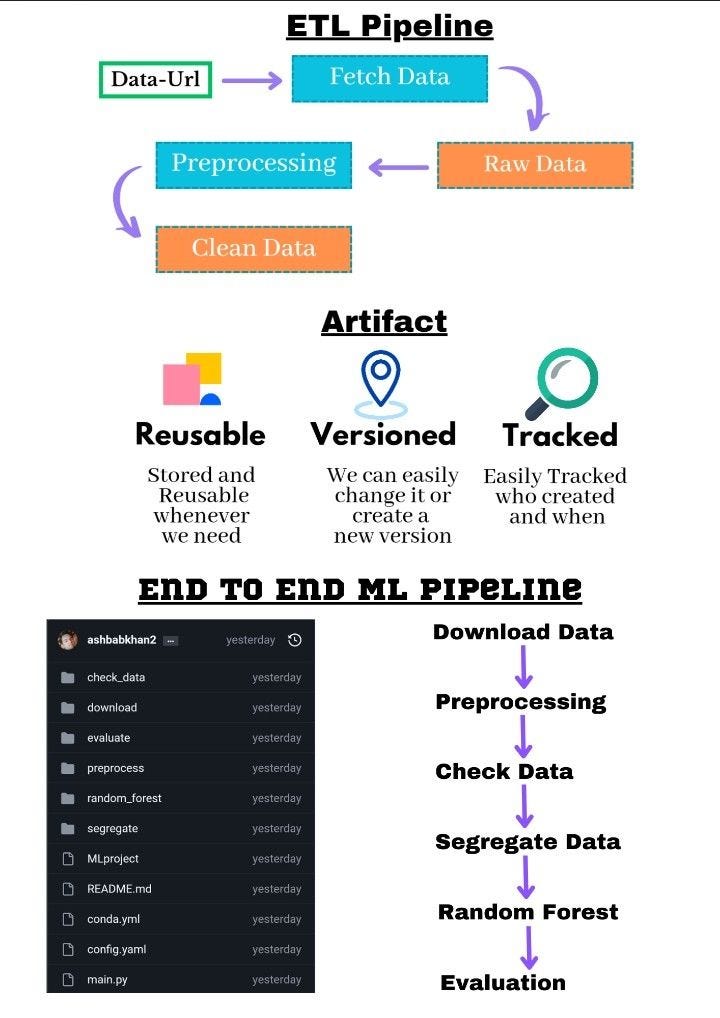

Let’s see a simple ETL Pipeline

In the image, the blue one is a component, and the orange one is an artifact.

A component is a group of programming files that perform some task, whereas the artifact is the file or a directory produced by the component

for example:- see the above image. We have 6 components from download data to evaluation, and every component at least has three important files. After the component finishes running it will generate some files.

For example, the download component will take the Url of the data and give us a raw data file which we upload as an artifact to the platform, such as Weights and Biases.

the same process is done in the preprocessing component where it takes the previously created raw data as input and then performs preprocessing and again generates new data which is preprocessed data, and we again save it back to Weights and Biases and the same things happen with other components.

the one thing you might notice is that we are generating lots of data because every component in a pipeline needs different data so our data must be stored at the location so that any of your other teams who is residing anywhere also get access to the data.

As we discussed earlier artifact is a file or a directory of files generated by the different components, but most importantly artifact can be versioned. It means whenever we are making some changes in the component, or maybe new data is added to the source and running the component again creates a new version of the artifact.

as we see in the below image sample.csv is our artifact, and below there is a version of that artifact.

various tools and packages are used to store and fetch those artifacts one of the tools is Weights and Biases we can also use some other tools like Neptune, Comet, Paperspace, etc.

Let’s take a brief introduction on how these tools or packages like Weights and Biases, also known as wandb used in MlOps:

- This will be used to store and fetch artifacts

- We can store many configurations to it, like the hyperparameters used in the model, and also store the images like feature importance, roc curve or correlation, etc.

- Every time we fetch or store artifacts or, in simple terms, when we initialize the wandb we are creating a run. A run is an experiment that stores our configuration file, images, and basics info like who created this run and when and what is the input artifact it used and what is the output artifact it produced in the above image we see our run and artifacts.

See the ETL pipeline image. We have two components above:

- download data

- preprocessing

When we run the ETL pipeline, what happens is that it passed the URL to our download data component, and it generates a file called raw_data (artifact) which we store in the weight and biases.

Then in the preprocessing component, we fetch this raw_data file from weight and biases and then preprocess this data and then store it as new data, which is clean_data.csv and upload it to weight and biases as an artifact, this is how we store and fetch different files and directories from anywhere using weights and biases.

See the image of End to End ML pipeline. We see a lot of components such as download_data, preprocessing, checking_data, etc. the work of this pipeline is the same as we see in ETL the download_data generates an artifact, and then that artifact is used by preprocessing component, and so on

in the end, the random_forest component will generate our model, which is an inference artifact that is ready to predict the target value of unknown observations.

So now our inference artifact is ready for production, and as we discussed earlier, that artifact can be versioned, so let’s say we generated 2 versions from v0 and v1 and we decided to use the v1 for production then we marked this artifact as prod means ready for production.

then we upload our project to GitHub so that other developers have access to this code, and the rest of the process is handled by software engineers and DevOps engineers that how it will be integrating the model with the software.

Problem due to model to drift and how to solve it

As we are all familiar with that a model needs updated data for the best performance otherwise it will cause model drift.

It is very important to continuously update the data and re-train our model to avoid model drift, so there might be questions about how we will do this in MlOps, and the answer is simple.

let’s say our source data is updated with new data, and we want to re-train our model with that new data, so we have to run our pipeline again this will run all the components again with the new data passed in the download data component, and so on.

when our inference pipeline run again with the newly added data, it will generate a new version v2 inference artifact and then we have to unmark the v1 from prod that we marked previously and mark the latest version v2 as a prod, and that’s it our new inference artifact is ready for production again.

But this is not a simple task we have to do a lot of experiments and create a lot of versions of the artifact, and the best-performing artifact is marked as production so that any person easily identifies that this version of the artifact is going to be used in production.

What is MLFlow and how MLFlow used in MLOps to run our end-to-end machine learning pipeline in production?

MLFlow is a Framework that is used to run our Machine Learning Pipeline through the effective workflow using a simple command.

mlflow run .

mlflow run src/download_data

through MlFlow we can chain all the components that we have seen previously so that it can execute all the components in the right flow.

The MlFlow project is divided into three parts:

- ???????????????????????????????????????????? (????????????????????????????????????????????????)

- ????????????????

- ???????????????????????????? ????????????????????????????????????????

Let’s understand them one by one

????????????????????????????????????????????: the first one is the environment file means the packages that are used in a code, such as pandas, MlFlow, and, seaborn these all are packages required in a data science project so below is our environment file.

we see that component is the name of this environment file, and then we have the dependencies section these are the packages that we are downloading from the two channels the first one is conda forge if it is can’t able to find any packages in conda forge.

then it will look in the defaults channel to download and install the file, and we can also download and install packages from pip as we do for wandb.

Important note

if we are downloading a specific version of packages from the channel as we do below, like mlflow=2.0.1 we have to use only one = sign, and if we are downloading specific version packages from pip we have to use == sign like we do wandb==0.13.9.

name: components

channels:

- conda-forge

- defaults

dependencies:

- mlflow=2.0.1

- python=3.10.9

- hydra-core

- pip

- pip:

- wandb==0.13.9

????????????????: Code is the actual logic which is our programming file.

???????????????????????????? ????????????????????????????????????????: MlFlow doesn’t run the coding file directly it first runs the project definition file which is an MLproject file in this file we write a code that how the code file is run and what arguments our code file needs.

Below we see our MLProject file the first thing that we understand is that in the conda_env we are using our environment file name as we see above in the environment section this will run our conda.yml file (the above environment file we see is saved as a conda.yml file).

this will install the necessary packages from channels and pip, then it runs the parameters section, and at the last, the command section will run our python file run.py with the necessary arguments that our python file needs.

This is how the MlProject file looks like

name: download_file

conda_env: conda.yml

entry_points:

main:

parameters:

artifact_name:

description: Name for the output artifact

type: str

artifact_type:

description: Type of the output artifact. This will be used to categorize the artifact in the W&B

interface

type: str

artifact_description:

description: A brief description of the output artifact

type: str

command: >-

python run.py --artifact_name {artifact_name} \

--artifact_type {artifact_type} \

--artifact_description {artifact_description}

So what are the above parameters, and where it is getting value from

Let’s understand our first question what is this above parameter is

To create an artifact, we need these three parameters, and we store and fetch artifacts from the code file. That’s why we are passing these parameters to our code file from the MlProject file to avoid hard coding:

- artifact name

- artifact type

- artifact description

Passing value to a python file using cmd

To receive an argument through cmd or MlProject to a python file, we have to use a package called argparse below is a demonstration of how Argparse works.

below is the code that we need to add in the python file __main__ section to receive arguments from cmd in our case, we are receiving 3 arguments from MlProject to our code file or python file.

- —artifact_name

- — artifact_type

- — artifact_description

Code file

if __name__ == "__main__":

parser = argparse.ArgumentParser(description="Download URL to a local destination")

parser.add_argument("--artifact_name",

type=str,

help="Name for the output artifact",

required=True)

parser.add_argument("--artifact_type",

type=str,

help="Output artifact type.",

required=True)

parser.add_argument("--artifact_description",

type=str,

help="A brief description of this artifact",

required=True

)

args = parser.parse_args()

see the MLproject file again that’s the same three arguments we are passing to the run.py file in the command section and that’s how we receive the same 3 arguments in python.

Our second question is where the MlProject file getting value from

As we discussed earlier that, whenever we run a MlFlow project, the first file will run is the MlProject file, and we use the above command in the terminal, which is mlflow run {file_path}. This command is used when we are passing nothing to our Mlproject file, but in our case, there are 3 parameters that this file needs, so we use a little bit different command.

The below code is the practical demonstration of how we pass value to the MlProject file through cmd.

mlflow run . -P artifact_name=clean_data.csv \

-P artifact_type=clean_data \

-P artifact_description="Clean and preprocessed data"

here in the above code, to pass a value to MlProject parameters through MlFlow we used the -P command and the parameters name as we did in the above code.

So that’s how MlFlow runs the components and how these three files code, environment, and MlProject work together thank you for reading this article.

you can connect with me on LinkedIn

till then if you have any questions regarding this post, feel free to ask in the comment section

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.