A Gentle Introduction To Genetic Algorithms

Last Updated on July 19, 2023 by Editorial Team

Author(s): Dasaradh S K

Originally published on Towards AI.

Programming

Genetic Algorithms are based on Charles Darwin’s theory of natural selection and are often used to solve problems in research and machine learning.

In this article, we’ll be looking at the fundamentals of Genetic Algorithms (GA) and how to solve optimization problems using them.

What are Genetic Algorithms?

Genetic algorithms were developed by John Henry Holland and his students and collaborators at the University of Michigan in the 1970s and 1980s.

It is a subset of evolutionary algorithms, and it mimics the process of natural selection in which the fittest individuals survive and are chosen for cross-over to reproduce offsprings of the next-generation.

The natural selection process also involves the addition of small randomness to the offsprings in the form of mutation. This will result in a new population of individuals with mixed fitness.

But only the fittest individuals are chosen for reproduction, and the fitness is improved consistently over generations.

Genetic Algorithms for optimization problems

When Genetic Algorithms are used for solving an optimization problem or a search problem, the process of natural selection is repeated continuously until termination.

Termination generally occurs at reaching a point where the newly generated individuals are not significantly different from the previous generation.

This means that the algorithm has reached an optimal or near-optimal solution to the problem.

In practice, Genetic Algorithms works as follows.

Step 1. Generate a set of random individuals

Step 2. Find the best ones from the given population of individuals

Step 3. Cross them over

Step 4. Slightly mutate the new generation offsprings

Step 5. Repeat steps 2 to 4 until termination

Let’s have a look at each of these steps and the terminologies used in brief. We shall also try to implement a genetic algorithm that solves the problem of generating the given optimum string starting from a random string.

Initial Population

The process starts with creating individuals of the first generation, and they are known as the Initial Population.

Each individual is a solution to the given problem and is characterized by a set of parameters known as the Genes. These genes are held together like a chain to form the Chromosomes.

The two primary methods to create the initial population are,

- Random Initialization — where the individuals (solutions) are generated randomly

- Heuristic initialization — where the individuals are generated based on a known heuristic for the problem

In this tutorial, we shall stick to random initialization and proceed further.

Fitness function

In order to find how good or how ‘fit’ is an individual in comparison to others, we use a fitness function.

The fitness function takes the individual as an input and returns a fitness score based on how good is the individual in finding an optimum solution to the problem.

Only the individuals with the highest fitness score will be selected for a crossover (reproduction). In this tutorial, let’s select only the top two fittest individuals for crossover.

The fitness function must be sufficiently fast enough as the fitness score is calculated for all the individuals for every generation.

In cases where fitness can not be calculated directly due to the complexity of the problem, we can use fitness approximation.

Selection

Parent Selection, or simply selection, is the process in which the best individuals are chosen for crossover and is very crucial for the convergence of the genetic algorithm.

The objective of the selection process is that the fittest individuals should be selected for crossover while keeping the next generation diverse enough.

The individuals are generally selected using one of the following selection strategies.

- Fitness Proportionate Selection — where the probability of an individual being selected for the crossover is directly proportional to its fitness score. However, it doesn’t work with a negative fitness score.

- Tournament Selection — where n individuals are randomly selected from the population, and only the fittest individual proceeds to the next round of the tournament.

- Ranked Selection — where the individuals are ranked based on their fitness, and the individuals with the highest ranks are selected. Simply put, individuals with the highest fitness score are selected for crossover.

In this tutorial, we shall use the ranked selection process to select the individuals for crossover.

Crossover

Crossover in genetic algorithms is similar to the biological crossover is the process where the individuals of the current generation pass on their genes to the individuals of the next generation.

Once the crossover process is over, the newly generated individuals replace the old individuals, and the process of selection and crossover is repeated.

The two generally used crossover methods are,

- Point-based crossover — where the offspring chromosome is randomly split at one or many points and alternating regions are filled with alternating parent genes.

- Gene-based crossover — where each parent has an equal probability of passing over their gene to their offspring. We will be using this crossover method in this tutorial.

Mutation

A mutation is a process of slightly altering or changing the genes of the offsprings to keep the population diverse enough. A mutation is generally applied with low probability.

A mutation is essential to introduce and maintain diversity in the population and hence prevents premature-convergence of the genetic algorithm.

The mutation probability shouldn’t be high as it will make the genetic algorithm more like a random search algorithm.

Termination

Termination of the genetic algorithm occurs when the fittest individual of the newly generated population is an optimum solution or a near-optimum solution.

In cases where the computation time is higher, termination can also be called after a certain generation.

In our case, we shall call for termination only when the genetic algorithm completely reaches the optimum solution.

Genetic Algorithm Code

Here is our genetic algorithm that solves the problem of generating the given optimum string “Hello, Genetic Algorithms!”, starting from a random string.

Finals thoughts

Thanks for reading. I hope that you’ve found this article useful in understanding the basics of genetic algorithms.

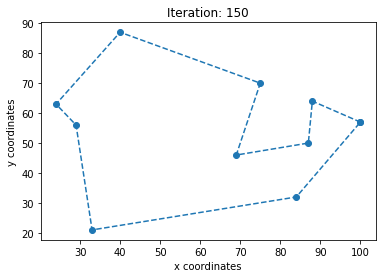

In continuation of this article, I’ll hopefully be writing a tutorial to solve the famous Traveling Salesman Problem using genetic algorithms.

Dasaradh S K

- If you enjoyed the article, follow me on Medium for more

- Let’s connect on LinkedIn

References

[1] Vijini Mallawaarachchi, Introduction to Genetic Algorithms — Including Example Code (2017)

[2] Genetic algorithm — Wikipedia, https://en.wikipedia.org/wiki/Genetic_algorithm

[3] Genetic Algorithms — GeeksforGeeks, https://www.geeksforgeeks.org/genetic-algorithms/

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.