Efficient Biomedical Segmentation When Only a Few Label Images Are Available @MICCAI2020

Last Updated on July 24, 2023 by Editorial Team

Author(s): Makoto TAKAMATSU

Originally published on Towards AI.

Computer Vision

A Proposal for State-of-the-Art Unsupervised Segmentation Using Contrastive Learning

In this story, Label-Efficient Multi-Task Segmentation using Contrastive Learning, by the University of Tokyo and Preferred Networks, is presented. It was published as a technical paper at the MICCAI BrainLes 2020 workshop. The paper proposes a multi-task segmentation model for medical imaging tasks where only a small amount of labeled data is available, and uses contrastive learning to train it. The authors show experimentally, for the first time, the effectiveness of contrastive predictive coding [Oord et al., 2018 and Hénaff et al., 2019] as a regularization task for image segmentation using both labeled and unlabeled images, which opens a new direction for label-efficient segmentation. When annotated data is limited, the proposed method outperforms other multi-task methods, including a state-of-the-art fully supervised model, and the experiments suggest that using unlabeled data can yield state-of-the-art performance in that regime.

Let’s see how they achieved that. I will explain only the essence of ssCPCseg, so if you are interested in the details, please see the ssCPCseg paper and GitHub repository.

What does this paper say?

Obtaining annotations for 3D medical images, such as clinical data, is generally costly. On the other hand, there is often a large number of unlabeled images. Therefore, semi-supervised learning methods have a wide range of applications in medical image segmentation tasks.

Self-supervised learning is a representation learning method that uses unlabeled data by predicting a missing part of the input from the remaining part. Contrastive predictive coding (CPC) has been proposed as one such self-supervised method. On ImageNet classification with only a small number of labels, it has been reported to outperform fully supervised learning [Hénaff et al., 2019], but its effectiveness for segmentation had not yet been investigated.

Since the risk of overfitting can be reduced by sharing parameters for both the main segmentation task and the regularization subtask, multi-task learning has been considered an efficient method for small data.

For these reasons, the paper integrates a contrastive learning (CPC)-based branch into a multi-task segmentation model. The semi-supervised version, ssCPCseg, outperformed all other regularization methods, including VAEseg, a state-of-the-art fully supervised model, when the amount of labeled data was small (Table 1).
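To make the multi-task, semi-supervised idea concrete, here is a minimal sketch of how a segmentation loss and a label-free CPC loss might be combined. It assumes that unlabeled images contribute only the CPC term. The function name `multitask_loss`, the weight `cpc_weight=0.1`, and the loss values are hypothetical and are not taken from the paper.

```python
def multitask_loss(seg_loss, cpc_loss, cpc_weight=0.1):
    """Combine the main and auxiliary losses for one image.

    seg_loss is None for an unlabeled image, in which case only the
    label-free CPC term contributes. cpc_weight is a hypothetical value.
    """
    total = cpc_weight * cpc_loss
    if seg_loss is not None:
        total += seg_loss
    return total

# Hypothetical per-image losses: (segmentation loss or None, CPC loss).
batch = [(0.8, 1.2), (None, 1.5), (0.5, 0.9)]
batch_loss = sum(multitask_loss(s, c) for s, c in batch) / len(batch)
print(round(batch_loss, 3))
```

Because both terms are computed from the same shared encoder, the unlabeled image in the middle of the batch still influences the encoder weights even though it has no segmentation label.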

Methodology

The medical image segmentation network in this paper, shown in Figure 1, has an encoder-decoder structure in which a contrastive predictive coding (CPC) branch splits off at the end of the encoder, in parallel with the decoder. The encoder and decoder are composed of ResNet-like blocks (ResBlocks), with skip connections between them. Besides the CPC branch, the paper also implements other regularization branches, a VAE branch and a boundary attention branch, but this post focuses on the CPC branch only.

Contrastive predictive coding (CPC) branch

First, the input image is divided into 16 overlapping patches of 32 x 32 x 32 voxels. Each patch is encoded separately using the encoder of the encoder-decoder architecture, and the resulting feature map is spatially averaged into a single feature vector z_i,j,k.
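The patch-and-pool step can be sketched in NumPy as follows. This is only an illustration under stated assumptions: the stride of 16 voxels, the 64 x 64 x 64 toy volume (which yields 27 patches rather than the 16 mentioned above), and `toy_encoder` are all hypothetical stand-ins, since the exact patch layout and the real encoder are not described in this post.

```python
import numpy as np

def extract_patches(volume, patch=32, stride=16):
    """Return overlapping cubic patches, indexed by their grid position (i, j, k)."""
    starts = [range(0, size - patch + 1, stride) for size in volume.shape]
    return {
        (i, j, k): volume[x:x + patch, y:y + patch, z:z + patch]
        for i, x in enumerate(starts[0])
        for j, y in enumerate(starts[1])
        for k, z in enumerate(starts[2])
    }

def toy_encoder(patch_voxels):
    """Hypothetical stand-in for the shared encoder: returns a (C, d, h, w) feature map."""
    # Two hand-made "channels", each downsampled 8x by block averaging.
    channels = np.stack([patch_voxels, patch_voxels ** 2])
    c, d, h, w = channels.shape
    return channels.reshape(c, d // 8, 8, h // 8, 8, w // 8, 8).mean(axis=(2, 4, 6))

volume = np.random.default_rng(0).normal(size=(64, 64, 64))
patches = extract_patches(volume)
# Encode each patch separately, then average over the spatial axes
# so that every patch is summarized by one feature vector z[i, j, k].
features = {idx: toy_encoder(p).mean(axis=(1, 2, 3)) for idx, p in patches.items()}
print(len(features), features[(0, 0, 0)].shape)
```

Keeping the grid index (i, j, k) as the dictionary key matters for the next step, where features at some grid positions are used to predict features at others.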

Then, an eighth ResBlock f_res8 and a linear layer W are applied to the upper half of the feature vectors to predict the lower half of the feature vectors, z_i,j,k_low. In addition, negative samples z_l are randomly taken from other feature vectors encoded from different patches of the same image.

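The prediction ẑ is expressed by the following equation. Here the subscripts up and low mark the upper half (the input to the prediction) and the lower half (the target), respectively.

$$\hat{z}_{i,j,k_{low}} = W \, f_{res8}\left(z_{i,j,k_{up}}\right)$$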

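From the above, the CPC loss can be expressed in the InfoNCE form of [Oord et al., 2018] as follows, where the sum over l runs over the negative samples.

$$L_{CPC} = -\sum_{i,j,k_{low}} \log \frac{\exp\left(\hat{z}_{i,j,k_{low}}^{\top} z_{i,j,k_{low}}\right)}{\exp\left(\hat{z}_{i,j,k_{low}}^{\top} z_{i,j,k_{low}}\right) + \sum_{l} \exp\left(\hat{z}_{i,j,k_{low}}^{\top} z_{l}\right)}$$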
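As a rough illustration of a contrastive loss of this kind, the sketch below scores one prediction against its true target and a handful of negatives with a softmax over dot products, in the spirit of the InfoNCE loss from [Oord et al., 2018]. The function name `cpc_loss`, the toy vectors, and the use of plain dot products as the similarity score are assumptions made for illustration, not the authors' implementation.

```python
import numpy as np

def cpc_loss(z_hat, z_pos, z_negs):
    """InfoNCE-style loss for one predicted feature vector.

    z_hat:  (D,)   predicted feature vector
    z_pos:  (D,)   true feature vector at the predicted position
    z_negs: (N, D) negative samples from other patches of the same image
    """
    # Similarity scores: the positive first, then the N negatives.
    logits = np.concatenate(([z_hat @ z_pos], z_negs @ z_hat))
    # Numerically stable log-sum-exp over all candidates.
    m = logits.max()
    log_denominator = m + np.log(np.exp(logits - m).sum())
    # Negative log-probability of picking the positive among all candidates.
    return float(log_denominator - logits[0])

# Toy vectors (hypothetical): one target and two negatives orthogonal to it.
z_pos = np.array([2.0, 0.0, 0.0])
z_negs = np.array([[0.0, 2.0, 0.0], [0.0, 0.0, 2.0]])

good = cpc_loss(z_pos, z_pos, z_negs)    # prediction matches the target
bad = cpc_loss(-z_pos, z_pos, z_negs)    # prediction points the opposite way
print(good < bad)
```

The loss is small when the prediction is more similar to the true target than to any negative, and large otherwise, which is what pushes the encoder toward features that are predictable across neighboring patches of the same image.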

Results

In the experiments, the segmentation performance of four models was measured in order to compare the different regularization effects: encoder-decoder alone (EncDec), EncDec with VAE branch (VAEseg), EncDec with boundary attention branch (Boundseg), and EncDec with CPC branch (CPCseg).

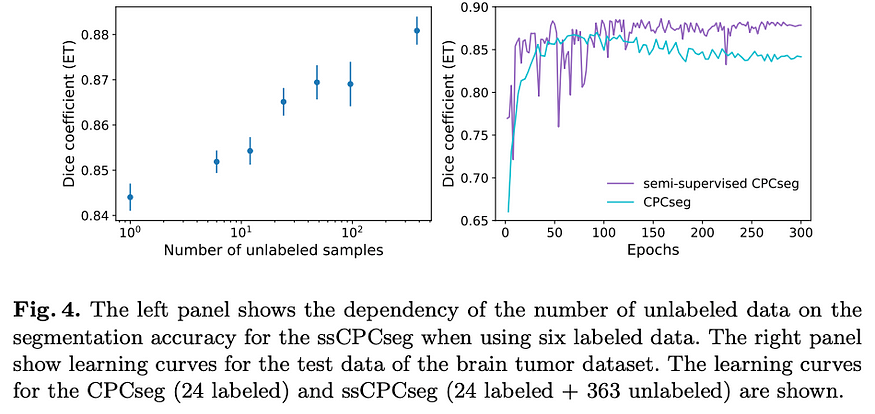

The results in Table 4 suggest that when the amount of labeled data is small, a regularization branch trained only on labeled data has a limited impact on segmentation performance. By contrast, semi-supervised CPCseg (ssCPCseg), which learns representations from both labeled and unlabeled data, outperforms the fully supervised methods (Fig. 4). Furthermore, ssCPCseg outperformed all other regularization methods, including the fully supervised state-of-the-art model, VAEseg, in the regime where labeled data is scarce.

These results suggest that semi-supervised methods using CPC branches can provide state-of-the-art performance when the amount of annotated data is limited.

References

[Oord et al., 2018] van den Oord, A., Li, Y., Vinyals, O.: “Representation Learning with Contrastive Predictive Coding,” arXiv e-prints arXiv:1807.03748 (2018)

[Hénaff et al., 2019] Hénaff, O.J., Srinivas, A., De Fauw, J., Razavi, A., Doersch, C., Eslami, S.M.A., van den Oord, A.: “Data-Efficient Image Recognition with Contrastive Predictive Coding,” arXiv e-prints arXiv:1905.09272 (2019)

[ssCPCseg][Github] Iwasawa, J., Hirano, Y., Sugawara, Y.: “Label-Efficient Multi-Task Segmentation using Contrastive Learning,” MICCAI BrainLes 2020 workshop

Past Paper Summary List

Deep Learning method

2020: [DCTNet]

Uncertainty Learning

2020: [DUL]

Anomaly Detection

2020: [FND]

One-Class Classification

2019: [DOC]

2020: [DROC]

Image Segmentation

2018: [UOLO]

2020: [ssCPCseg]

Image Clustering

2020: [DTC]
