5 Key Takeaways from Microsoft AI Summit (March 2024)

Last Updated on April 5, 2024 by Editorial Team

Author(s): Selina Li

Originally published on Towards AI.

· Overview

· Takeaway #1: Github Copilot vs. Microsoft 365 Copilot vs. Microsoft Copilot — What is the difference?

· Takeaway #2: Microsoft Copilot Studio vs. Azure AI Studio — What is the difference?

· Takeaway #3: Two ways to create Retrieval Augmented Generation (RAG) applications (i.e. custom Chatbot) using Microsoft

∘ Way #1: Microsoft Copilot Studio

∘ Way #2: Azure AI and Prompt Flow

· Takeaway #4: GPT-4 Vision and Azure AI Vision Enhancement can enable interesting use cases

· Takeaway #5: Microsoft Azure is taking one step further mitigating harms introduced by foundation models

· Summary

· Enjoyed This Story?

Overview

As a developer, I had the opportunity to attend the Microsoft AI Summit U+007C Microsoft Build AI Day in Melbourne on March 18th. The event was incredibly informative, offering deep insights into the evolution of Microsoft’s AI services and tools. I cannot wait to share the key takeaways from this enriching experience with you.

Whether you are already acquainted with Microsoft or Azure AI solutions or exploring them for the first time, I believe you will find valuable insights here.

Takeaway #1: Github Copilot vs. Microsoft 365 Copilot vs. Microsoft Copilot — What is the difference?

You might have heard about the keyword “Copilot” and can relate it to Microsoft and their AI offerings. But can you distinguish between “Github Copilot”, “Microsoft 365 Copilot” and “Microsoft Copilot”?

Here is the quick answer — the word “Copilot” in the Microsoft context is pretty much the same as “GPT” in the OpenAI context. It can represent any AI related feature, tool or enabler from Microsoft. It began as a specific tool (Github Copilot), and subsequently evolved into a suite / family (Microsoft Copilot) as the company rolled out AI offerings to its most used and beloved products and services.

In June 2021, Microsoft launched “Github Copilot” as an AI assistant to developers to help them write better code within a shorter time. Github Copilot is tightly integrated with VS Code, the popular code editor, and can help developers auto-generate code based on context. Announcement blog here if you want to discover more.

In March 2023, Microsoft rolled out the AI offerings to its most used product Microsoft 365 and announced “Microsoft 365 Copilot” — your copilot for work. It powers Microsoft suites — Word, Excel, PowerPoint, Outlook and Teams with large language models (LLMs), aiming to help customers improve work productivity. Announcement blog here if you want to discover more.

In Sep 2023, Microsoft further rolled out AI power to other core products and services including Windows apps and PC, web browser Edge and Bing as well as Surface devices. This is called “Microsoft Copilot” — your everyday AI companion. It is actually an ecosystem — wherever you are using Microsoft core products, you will be empowered with AI. Announcement blog here if you want to discover more.

Takeaway #2: Microsoft Copilot Studio vs. Azure AI Studio — What is the difference?

As mentioned above, Microsoft Copilot is indeed an AI-powered ecosystem covering core products and services of Microsoft.

Microsoft Copilot Studio is a part of the broader Microsoft Copilot ecosystem. It serves as an extension that empowers users to create custom AI-powered, low-code copilots within Microsoft 365 applications.

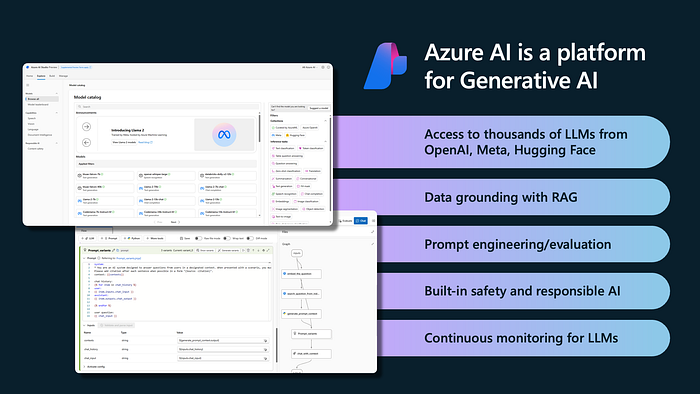

Azure AI Studio, by its name, sits on top of Microsoft Azure and is an all-in-one AI platform for building, evaluating, and deploying generative AI solutions and custom copilots.

- Azure AI Studio provides thousands of language models from OpenAI, Meta, Hugging Face, and more

- It offers data grounding with retrieval augmented generation (RAG), prompt engineering and evaluation, built-in safety, and continuous monitoring for language models.

- With Azure AI Studio, you can quickly and securely ground models on your structured, unstructured, and real-time data.

You will be able to build custom copilot on both. The difference is: Microsoft Copilot Studio sits with Microsoft 365 while Azure AI studio sits with Microsoft Azure (Microsoft cloud platform).

Takeaway #3: Two ways to create Retrieval Augmented Generation (RAG) applications (i.e. custom Chatbot) using Microsoft

There are at least two ways to create Retrieval Augmented Generation (RAG) applications / custom copilot using Microsoft products and services.

There could be other ways as well, but the below two ways were covered by the talks during the summit.

First, use Microsoft Copilot Studio.

For enterprises using Microsoft 365 and SharePoint, we can build custom chatbot on top of Share Point files using Microsoft Copilot Studio. This type of custom chatbot fits more into enterprise internal use cases.

For example, the HR department might place an employee policy on SharePoint and create a custom copilot. Employees can raise questions to the copilot and it will provide the answers and links to the relevant documents.

This custom copilot can also integrate with Teams:

Great news for security team is that the restrictions on user access to different files will apply to Chat as well.

More details about this can be found in the two Youtube videos shared by Daniel Anderson from Microsoft.

- Getting started with Copilot Studio: Build your own Copilot in minutes

- Getting Started with Copilot Studio Part 2: How to connect your own Copilot to SharePoint

Second, use Azure AI and Prompt Flow.

For enterprises using Microsoft Azure, we can build custom chatbot using the Azure AI studio. This type of custom chatbot fits more into use cases where external interactions with customers is involved.

With Azure AI studio, there are also several approaches to building RAG applications:

- Code centric approaches like LangChain, Semantic Kernel, and the OpenAI Assistant API

- Low code/no code approaches like Prompt Flow

Prompt Flow in Azure AI Studio enables users to build RAG in a low code/no code approach.

Azure Prompt Flow aims to simplify prototyping, experimenting and deploying Large Language Models (LLMs)-powered AI applications throughout the lifecycle.

By leveraging Azure Prompt Flow, enterprise can progress through the LLM lifecycle stages including ideating & exploring, building & augmenting, and operationalising.

More details about Prompt Flow can be found here in Microsoft documentation.

Takeaway #4: GPT-4 Vision and Azure AI Vision Enhancement can enable interesting use cases

When we talk about Retrieval Augmented Generation (RAG) applications, we usually think of taking user questions and providing answers in text. For example, we can supply company’s COVID wok-from-home policy documents, and the chatbot can answer user’s questions as text and provide link to the text body.

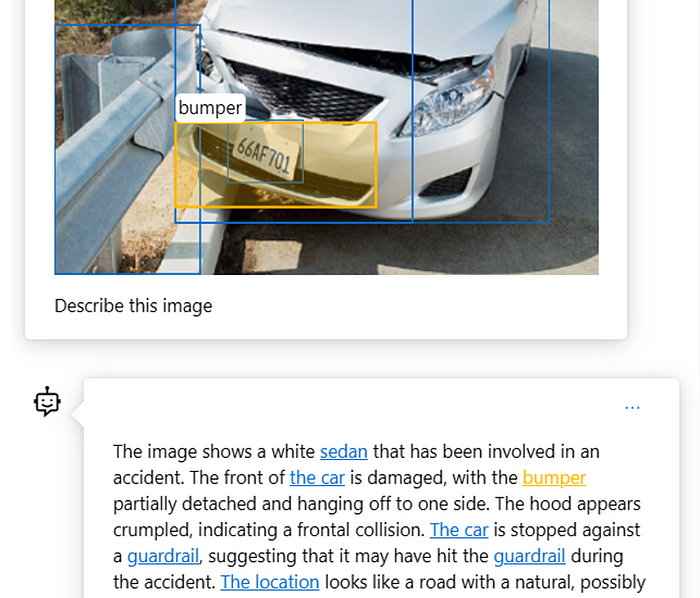

With the help of GPT4-Turbo with vision, user input could be images.

On top of it, Azure AI Vision Enhancement offers extra functionality including:

- Optical Character Recognition (OCR): Extracts text from images and combines it with the user’s prompt and image to expand the context.

- Object grounding: Complements the GPT-4 Turbo with Vision text response with object grounding and outlines salient objects in the input images.

- Video prompts: GPT-4 Turbo with Vision can answer questions by retrieving the video frames most relevant to the user’s prompt.

GPT4-Turbo with vision and Azure AI Vision Enhancement altogether can enable very interesting use cases:

- Car insurance companies can use the tools to analyse car damage pictures, and highlight the clues and evidence to the team responsible for assessing the claim

- Retailers can use the tools to understand any image input from customers and recommend product items similar to what the customer wants

For example, as shown below, the model can understand the damage to the car very well, and can highlight those points to the claim assessment team

Another example, as shown below, the retailer can recommend a tent similar to the one in the image from the customer.

By utilising the AI/LLMs that understands / reasons across multi-modal input, companies can expedite business insights / decisions and actions using real-time pattern.

Takeaway #5: Microsoft Azure is taking one step further mitigating harms introduced by foundation models

One big obstacle for companies to adopt generative AI and LLM technologies is security. People are concerned about the harms and issues introduced by LLMs such as:

- Ungrounded outputs & errors (i.e. confidently inaccurate)

- Jailbreaks & Prompt injection attacks

- Harmful content & code

- Copyright infringement

- Manipulation and human-like behaviour

Microsoft Azure has been introducing strategies to mitigate the harms, and they fall into 4 different layers:

- Model layer

- Safety System layer

- Metaprompt & Grounding layer

- User Experience layer

Descriptions for the layers can be found here: Harms mitigation strategies with Azure AI.

Safety System Layer

At this layer, Microsoft introduced Azure AI Content Safety (now in Public Preview), an AI-based safety system to provide an independent layer of protection, helping users to block the output of harmful content.

Some of the highlighted features of Azure AI Content Safety:

- Content Filtering. Powered by Azure AI Content Safety, it can detect and filter harmful content across four categories (hate, sexual, violence, and self-harm) and four severity levels (safe, low, medium, and high)

- Prompt Shields. A unified API that analyzes LLM inputs and detects User Prompt attacks (i.e. jailbreak user input attacks) and Document attacks, which are two common types of adversarial inputs.

Metaprompt & Grounding layer

At this layer, Microsoft recommends:

- Retrieval augmented generation (RAG) pattern to ground the model in relevant data, rather than store the data within the model itself

- Implementing metaprompt mitigations. A useful references here: System message framework and template recommendations for Large Language Models (LLMs)

User Experience layer

At this layer, Microsoft recommends:

- Being transparent about Al’s role and limitations

- Ensuring humans stay in the loop

- Mitigating misuse and overreliance on Al

More readings here User Experience Layer.

Summary

This concludes my all 5 takeaways!

To summarise my feelings:

- Microsoft is innovating hard to drive AI to “land” into real world use cases. The adoption of Generative AI and LLMs is no longer purely a hype; challenges are being addressed and progress is being matured into production solutions

- Microsoft is doing great in integrating some of the most advanced technologies into their offerings, for example, OpenAI, GPT4 Turbo with Vision, LangChain, etc.

- The huge customer base of existing Microsoft products and services especially Microsoft 365 is a great “soil” for the company to grow up Generative AI applications

- Looking forward to trying things out!

Enjoyed This Story?

Selina Li (Selina Li, LinkedIn) is a Principal Data Engineer working at Officeworks in Melbourne Australia. Selina is passionate about AI/ML, data engineering and investment.

Jason Li (Tianyi Li, LinkedIn) is a Full-stack Developer working at Mindset Health in Melbourne Australia. Jason is passionate about AI, front-end development and space related technologies.

Selina and Jason would love to explore technologies to help people achieve their goals.

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.