31 Questions that Shape Fortune 500 ML Strategy

Last Updated on June 14, 2023 by Editorial Team

Author(s): Anirudh Mehta

Originally published on Towards AI.

In May 2021, Khalid Salama, Jarek Kazmierczak, and Donna Schut from Google published a white paper titled "Practitioners Guide to MLOps". The white paper covers the concept of MLOps, its lifecycle, capabilities, and practices in great depth, and hundreds of blog posts have been written on the same topic. My intention with this post is therefore not to duplicate those definitions but to encourage you to question and evaluate your current ML strategy.

I have listed a few critical questions that I often pose to myself and to the stakeholders involved in the modernization journey. While ML algorithms & code play a crucial role in success, they are just a small piece of a much larger puzzle.

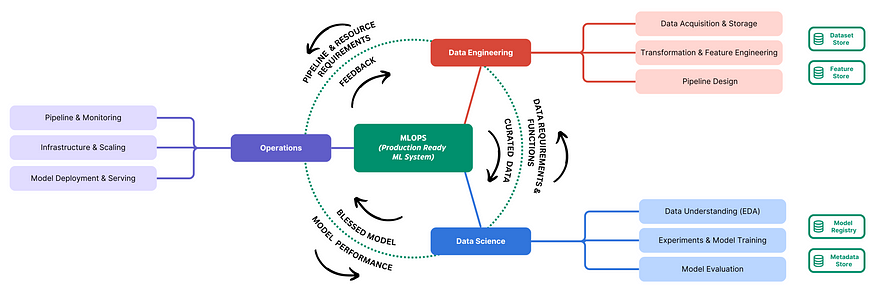

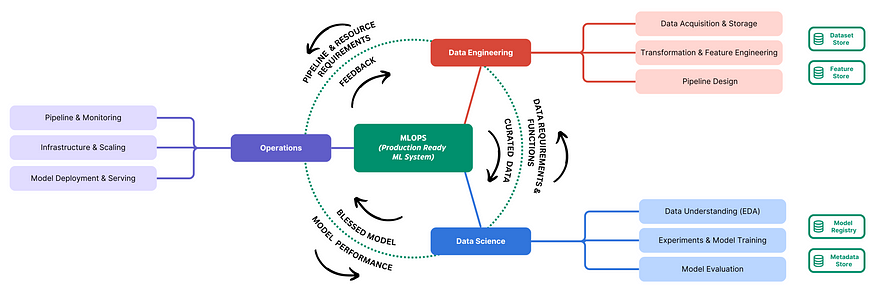

To achieve that success consistently, a vast array of cross-cutting concerns need to be addressed. I have therefore grouped the questions by the stages of an ML delivery pipeline. The questions are in no way targeted at the particular role that owns a stage; they apply to everyone involved in the process.

Key objectives:

Before diving into the questions, it’s important to understand the evaluation lens through which they are written. If you have additional objectives, you may want to add more questions to the list.

Automation

✓ The system must emphasize automation.

✓ The goal should be to automate all aspects, from data acquisition and processing to training, deployment, and monitoring.

Collaboration

✓ The system should promote collaboration between data scientists, engineers, and the operations team.

✓ It should allow data scientists to effectively share the artifacts and lineage created during the model-building process.

Reproducibility

✓ The system should allow for easy replication of the current state and progress.

Governance & Compliance

✓ The system must ensure data privacy, security, and compliance with relevant regulations and policies.

Critical Questions:

Now that we have defined the objectives, let's look at the key questions to ask when evaluating the effectiveness of your current AI strategy.

Data Acquisition & Exploration (EDA)

Data is a fundamental building block of any ML system. Data scientists explore it to understand it and to identify and address common issues such as duplication, missing data, class imbalance, and outliers. A significant share of a data scientist's time goes into this exploration, so our strategy should focus on supporting and accelerating these activities by answering the following questions:

▢ [Automation] Does the existing platform help data scientists quickly analyze and visualize the data and automatically detect common issues?

▢ [Automation] Does the existing platform allow integrating data from multiple sources and visualizing the relationships between datasets?

▢ [Collaboration] How can multiple data scientists collaborate in real-time on the same dataset?

▢ [Reproducibility] How do you track and manage different versions of acquired datasets?

▢ [Governance & Compliance] How do you ensure that data privacy and security considerations have been addressed during acquisition?
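
To make the first [Automation] question concrete, here is a minimal sketch of the kind of automatic issue detection a platform might run on a freshly acquired dataset. It uses only the Python standard library and assumes the data arrives as a list of row dictionaries; the helper name `profile_records` and the 0.2 imbalance ratio are hypothetical choices for illustration, not the behavior of any particular platform.

```python
from collections import Counter
from typing import Any


def profile_records(records: list[dict[str, Any]], label_key: str,
                    imbalance_ratio: float = 0.2) -> dict[str, Any]:
    """Flag duplicates, missing values, and class imbalance (hypothetical helper)."""
    # Exact duplicates: rows whose (field, value) pairs are identical.
    # repr() keeps the key hashable even if a value is a list or dict.
    row_keys = Counter(tuple(sorted((k, repr(v)) for k, v in r.items())) for r in records)
    duplicate_rows = sum(n - 1 for n in row_keys.values() if n > 1)

    # Missing values: a field that is absent from a row or set to None counts as missing.
    fields = sorted({k for r in records for k in r})
    missing = {f: sum(1 for r in records if r.get(f) is None) for f in fields}
    missing = {f: n for f, n in missing.items() if n > 0}

    # Class imbalance: smallest class is below `imbalance_ratio` times the largest class.
    labels = Counter(r[label_key] for r in records if r.get(label_key) is not None)
    imbalanced = len(labels) > 1 and min(labels.values()) / max(labels.values()) < imbalance_ratio

    return {"rows": len(records), "duplicate_rows": duplicate_rows,
            "missing": missing, "label_counts": dict(labels), "imbalanced": imbalanced}


rows = [
    {"id": 1, "age": 34, "label": "ok"},
    {"id": 1, "age": 34, "label": "ok"},      # exact duplicate of the row above
    {"id": 2, "age": None, "label": "ok"},    # missing age
    {"id": 3, "age": 51, "label": "ok"},
    {"id": 4, "age": 29, "label": "ok"},
    {"id": 5, "age": 40, "label": "ok"},
    {"id": 6, "age": 22, "label": "fraud"},   # rare class
]
report = profile_records(rows, label_key="label")
print(report)
```

A real platform would add outlier checks, type inference, and visual summaries; the point here is only that such checks can run automatically on every new batch instead of being rediscovered by hand in each notebook.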

Data Transformation & Feature Engineering

After gaining an understanding of the data, the next step is to build and scale the transformations across the dataset. Here are some key questions to consider during this phase:

▢ [Automation] How can the transformation steps be effectively scaled to the entire dataset?

▢ [Automation] How can the transformation steps be applied in real time to live data before inference?

▢ [Collaboration] How can data scientists share and discover engineered features to avoid duplicating effort?

▢ [Reproducibility] How do you track and manage different versions of transformed datasets?

▢ [Reproducibility] Where are the transformation steps and associated code stored?

▢ [Governance & Compliance] How do you track the lineage of data as it moves through transformation stages to ensure reproducibility and auditability?
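
One way to approach the real-time question and the versioning and lineage questions together is to fit a transformation once, persist its parameters, and reuse the exact same function both offline and online. The sketch below assumes a simple z-score standardization of a single numeric column; the helper names (`fit_scaler`, `transform`, `params_version`) and the short SHA-256 version id are hypothetical illustrations, not the API of any feature store.

```python
import hashlib
import json
import statistics
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ScalerParams:
    """Fitted parameters for standardizing one numeric feature (hypothetical schema)."""
    feature: str
    mean: float
    stdev: float


def fit_scaler(rows: list[dict], feature: str) -> ScalerParams:
    values = [float(r[feature]) for r in rows]
    # Population standard deviation; fall back to 1.0 for a constant column.
    stdev = statistics.pstdev(values) or 1.0
    return ScalerParams(feature, statistics.fmean(values), stdev)


def transform(row: dict, params: ScalerParams) -> dict:
    # The same function serves the batch job and single live requests,
    # so training-time and serving-time features cannot silently diverge.
    out = dict(row)
    out[params.feature + "_z"] = (float(row[params.feature]) - params.mean) / params.stdev
    return out


def params_version(params: ScalerParams) -> str:
    # A content hash of the fitted parameters acts as a stable version id for lineage records.
    payload = json.dumps(asdict(params), sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


train = [{"amount": 10.0}, {"amount": 20.0}, {"amount": 30.0}]
params = fit_scaler(train, "amount")
batch = [transform(r, params) for r in train]   # offline: the whole dataset
live = transform({"amount": 25.0}, params)      # online: one incoming request
print(params_version(params), batch, live)
```

Storing `params_version(...)` alongside every transformed dataset and every trained model is a lightweight way to answer "which transformation produced this?" without a dedicated lineage tool; dedicated tools do the same thing more systematically.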

Experiments, Model Training & Evaluation

Model training is an iterative process in which data scientists explore and experiment with different combinations of settings and algorithms to find the best possible model. Here are some key questions to consider during this phase:

▢ [Automation] How can data scientists automatically partition the data for training, validation, and testing purposes?

▢ [Automation] Does the existing platform help accelerate the evaluation of multiple standard algorithms and the tuning of hyperparameters?

▢ [Collaboration] How can data scientists share experiments, configurations & trained models?

▢ [Reproducibility] How can you ensure the reproducibility of the experiment outputs?

▢ [Reproducibility] How do you track and manage different versions of trained models?

▢ [Governance & Compliance] How do you track the model's decision boundaries so that you can explain its decisions?
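
For the partitioning and reproducibility questions, one common technique is to assign each record to a split by hashing a stable identifier instead of shuffling with an unseeded random generator. The sketch below assumes every record has a stable, unique id; the helper name `assign_split` and the 80/10/10 ratios are hypothetical, illustrative choices.

```python
import hashlib


def assign_split(record_id: str, train: float = 0.8, validation: float = 0.1) -> str:
    """Deterministically map a record id to train/validation/test (hypothetical helper)."""
    # Hash the id into a bucket in [0, 1). The same id always lands in the same split,
    # even when the dataset grows or the job is re-run on a different machine.
    digest = hashlib.sha256(record_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    if bucket < train:
        return "train"
    if bucket < train + validation:
        return "validation"
    return "test"


ids = [f"customer-{i}" for i in range(10_000)]
splits = {i: assign_split(i) for i in ids}
counts = {name: sum(1 for s in splits.values() if s == name)
          for name in ("train", "validation", "test")}
print(counts)  # roughly 80% / 10% / 10%
```

Because the split depends only on the id, new records never move existing ones between train and test, which keeps evaluation results comparable across experiments and re-runs.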

Deployment & Serving

To realize the business value of a model, you need to deploy it. Depending on the nature of your business, it may be distributed, deployed in-house, on the cloud, or at the edge. Effective management of the deployment is crucial to ensure uptime and optimal performance. Here are some key questions to consider during this phase:

▢ [Automation] How do you ensure that the deployed models can scale with increasing workloads?

▢ [Automation] How are new versions rolled out, and how are they compared against the running version? (A/B testing, canary, shadow, etc.)

▢ [Automation] Are there mechanisms to roll back or revert deployments if issues arise?

▢ [Collaboration] How can multiple data scientists understand the impact of their versions before releasing them? (A/B testing, canary, shadow, etc.)

▢ [Reproducibility] How do you package your ML models for serving in the cloud or at the edge?

▢ [Governance & Compliance] How do you track the model's predictions for auditability and accountability?
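
The rollout, rollback, and audit questions can be made concrete with a small in-process router. This is a minimal sketch, assuming models are plain callables and users have stable ids; the class `CanaryRouter`, the 5% canary share, and the JSON audit record format are hypothetical. In practice this logic often lives in a serving platform or load balancer rather than in application code.

```python
import hashlib
import json
import time
from typing import Callable

Model = Callable[[dict], float]


class CanaryRouter:
    """Route a fixed share of users to a candidate model (hypothetical sketch)."""

    def __init__(self, stable: tuple[str, Model], candidate: tuple[str, Model],
                 canary_share: float) -> None:
        self.stable, self.candidate = stable, candidate
        self.canary_share = canary_share  # setting this to 0.0 acts as an instant rollback
        self.audit_log: list[str] = []

    def _use_candidate(self, user_id: str) -> bool:
        # Hash the user id so each user consistently sees the same version across requests.
        h = int.from_bytes(hashlib.sha256(user_id.encode("utf-8")).digest()[:8], "big")
        return h / 2**64 < self.canary_share

    def predict(self, user_id: str, features: dict) -> float:
        version, model = self.candidate if self._use_candidate(user_id) else self.stable
        score = model(features)
        # Append-only record of which version produced which prediction, for later audits.
        self.audit_log.append(json.dumps({"ts": time.time(), "user": user_id,
                                          "version": version, "score": score}))
        return score


router = CanaryRouter(("v1", lambda f: 0.1), ("v2", lambda f: 0.9), canary_share=0.05)
scores = [router.predict(f"user-{i}", {}) for i in range(2_000)]
share_v2 = scores.count(0.9) / len(scores)
router.canary_share = 0.0  # roll back: all traffic returns to v1
print(share_v2, router.predict("user-1", {}))
```

The audit log here is an in-memory list only to keep the sketch self-contained; a production system would write these records to durable storage.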

Model Pipeline, Monitoring & Continuous Improvement

As we have seen, going from raw data to actionable insights involves a complex series of steps. By orchestrating, monitoring, and reacting throughout the workflow, we can scale and adapt the process more easily and make it more efficient. Here are some key questions to consider during this phase:

▢ [Automation] How is the end-to-end process of training and deploying the models managed currently?

▢ [Automation] How can you detect data or concept drift relative to the historical baseline?

▢ [Automation] How do you determine when a model needs to be retrained or updated?

▢ [Collaboration] What are the agreed metrics to measure the effectiveness of each stage and new deployments?

▢ [Reproducibility] Are there automated pipelines to handle the end-to-end process of retraining and updating models to incorporate feedback and make enhancements?

▢ [Governance & Compliance] How do you ensure data quality and integrity throughout the process?

▢ [Governance & Compliance] How do you budget and plan for the infrastructure required to build your models?
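
For the drift and retraining questions, one widely used statistic is the Population Stability Index (PSI), which compares the binned distribution of a feature in live data against a training-time baseline. The sketch below assumes a single numeric feature, equal-width bins derived from the baseline range, and a 0.2 threshold that is often quoted as a rule of thumb rather than a universal constant; the helper names are hypothetical. It only detects data drift on inputs; concept drift additionally requires ground-truth labels.

```python
import math
import random


def psi(baseline: list[float], current: list[float], bins: int = 10) -> float:
    """Population Stability Index of `current` against `baseline` (equal-width bins)."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp values outside the baseline range into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    b, c = shares(baseline), shares(current)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))


def needs_retraining(baseline: list[float], current: list[float],
                     threshold: float = 0.2) -> bool:
    # Hypothetical trigger: flag the model for retraining when PSI exceeds the threshold.
    return psi(baseline, current) > threshold


rng = random.Random(0)
baseline = [rng.gauss(0.0, 1.0) for _ in range(5_000)]
same = [rng.gauss(0.0, 1.0) for _ in range(5_000)]      # no drift
shifted = [rng.gauss(1.0, 1.0) for _ in range(5_000)]   # mean shifted by one std dev
print(round(psi(baseline, same), 3), round(psi(baseline, shifted), 3))
print(needs_retraining(baseline, same), needs_retraining(baseline, shifted))
```

A single global threshold is a simplification; teams typically tune thresholds per feature and combine drift signals with model performance metrics before triggering an automated retraining pipeline.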

An MLOps system streamlines & brings structure to your strategy, allowing you to answer these questions. It provides the capability to version and track various artifacts through dataset, feature, metadata, and model repositories.

In upcoming posts, I will demonstrate how to implement these MLOps best practices through a simple case study on AWS, GCP, or Azure, or with open-source technologies.


Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.