Prompt Engineering Guide for Open LLM: Take Your Open LLM Application to the Next Level

Last Updated on January 29, 2024 by Editorial Team

Author(s): Timothy Lim

Originally published on Towards AI.

Introduction: Why do we need another guide?

Numerous prompt engineering guides have already been written. However, the majority of them focus on closed-source models characterized by their immense capacity, robust reasoning capabilities, and comprehensive language understanding.

The purpose of this blog is to address Prompt Engineering for open-source Large Language Models (Open LLMs), specifically those in the 3 to 70 billion parameter range. Contrary to the prevailing notion in various posts, these Open LLMs are not on par with their closed-source counterparts.

You may have read misleading articles such as “ChatGPT Clone for Just $300” or “An Open-Source Chatbot Impressing GPT-4 with 90%* ChatGPT Quality”. However, once you build an application, the gap in response quality between open-source and closed-source LLMs becomes very obvious, especially for tasks that need stronger reasoning. Open LLMs are also noticeably worse than closed-source models at following general instructions.

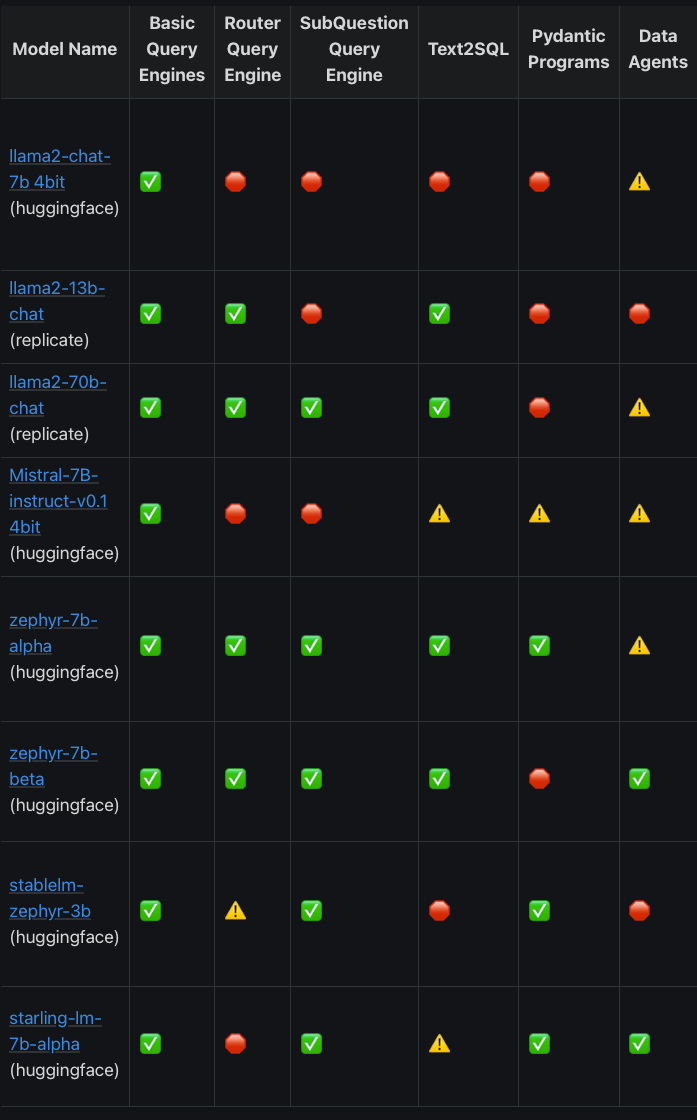

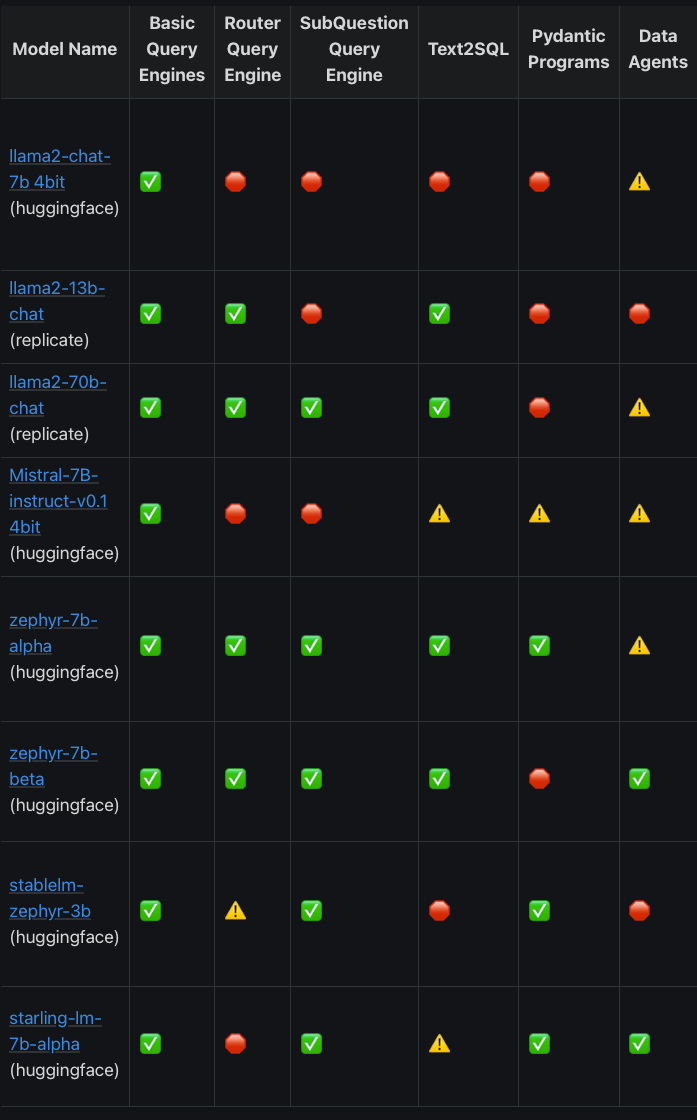

For example, Retrieval Augmented Generation (RAG) LLM applications involve many demanding tasks that require strong reasoning abilities. The team at LlamaIndex has done a commendable job of scoping out the various tasks needed in a RAG application. However, their prompt engineering efforts have focused primarily on closed-source models, such as OpenAI GPT-4 and GPT-3.5.

The LlamaIndex team was generous enough to…
