Converting Textual data to Tabular form using NLP

Author(s): Danish Javed

Originally published on Towards AI.

Introduction: Larger textual files may be more difficult to manage than tabular data because tabular data facilitates understanding by visualizing information in an organized manner.

This article will show how to use NLP (Natural Language Processing) techniques to convert large text files to tabular data frames in Python. This study makes use of a document that contains information about financial data. Assume we want to create a table from this document that includes columns named Title, Person, Product, Condition, Actions, and Price.

Methodology: The proposed methodology includes reading a text file, tokenizing details, writing multiple functions to extract information, and finally creating a data frame with this information.

Libraries Used:

1. Pandas

2. re

3. nltk

4. Spacy

Sample Data:

Real Estate Investment: John is considering buying a rental property in a bustling neighborhood. After researching various listings, he finds a cozy two-bedroom apartment listed for $200,000. He evaluates its potential rental income and compares it with similar properties in the area before making his purchase decision.

Reading Data: Data was stored in .txt and to import it in python following Python code is used.

# Load the text data from the file

with open('/kaggle/input/financial-raw-data/data.txt', 'r') as file:

text_data = file.read()

As mentioned in the sample data, our data has a title that ends with a colon (:), so it is divided into a data frame with two columns: title and Details, using the following code.

# Split the text into Title and details

data = [title.split(":", 1) for title in text_data.split("\n") if title]

# Create a dataframe~

df = pd.DataFrame(data, columns=["Title", "Details"])

Tokenize details: Tokenization is the process of dividing larger text into smaller parts, such as paragraphs into sentences. To perform tokenization, the column of data frame named Details created in the previous step is passed to the sent_tokenize function which is defined in the nltk library as shown in code below.

from nltk.tokenize import sent_tokenize

# Tokenize the details

tokenized_details = df['Details'].apply(lambda x: sent_tokenize(x))

df['Tokenized Details'] = tokenized_details

Functions for extracting required information from the tokenized details column: For each required detail, a function is created to extract the necessary information from a given token or sentence.

In the function to extract names of people from sentences, the given sentence is tokenized into words before being tagged as Part of Speech using the pos_tag function defined in the nltk.tag library. Because names are proper nouns, they were separated using parts of speech as shown in code below.

from nltk.tokenize import word_tokenize

from nltk.tag import pos_tag

def find_proper_nouns(text):

tokens = word_tokenize(text)

tagged_words = pos_tag(tokens)

#NNP and NNPS are used for proper nouns

proper_nouns = [word for word, pos in tagged_words if pos == 'NNP' or pos == 'NNPS']

return proper_nouns

To extract products from sentences, a separate function was created with the goal of separating singular or plural nouns, which are common in product names. The same functionalities as for person names are used in this function, as shown in the code below, with the exception of part of speech tagging for singular and plural nouns.

def find_product_types(text):

tokens = word_tokenize(text)

tagged_words = pos_tag(tokens)

# Define POS patterns for identifying potential product types

product_type_patterns = [

('NN', 'NNS'), # Singular and plural nouns

]

# Find words matching the specified POS patterns

product_types = [word for word, pos in tagged_words if any((pos == pos1 or pos == pos2) for pos1, pos2 in product_type_patterns)]

return product_types

Adjectives and adverbs are extracted in the function to extract product conditions because they most often present conditions, as shown in the following code.

def find_product_conditions(text):

tokens = word_tokenize(text)

tagged_words = pos_tag(tokens)

# Define POS patterns for identifying potential product conditions

condition_patterns = [

('JJ',), # Adjectives

('RB', 'JJ'), # Adverbs + adjectives

]

# Find words matching the specified POS patterns

product_conditions = [word for word, pos in tagged_words if any(all((pos == p) for p in pattern) for pattern in condition_patterns)]

return product_conditions

Verbs represent actions, so we use them to extract actions from sentences. spaCy processing pipeline is used to accomplish this, as shown below.

import spacy

nlp = spacy.load("en_core_web_sm")

def find_actions(text):

doc = nlp(text)

actions = [token.text for token in doc if token.pos_ == 'VERB' or token.pos_ == 'VB']

return actions

Prices tend to be denoted by numbers and currency symbols, so regular expressions are used to match patterns in sentences to find prices, as demonstrated in the code below.

def find_prices(text):

# Regular expression to match common price patterns

price_pattern = re.compile(r'\$\s?\d+(?:,\d{3})*(?:\.\d{1,2})?U+007C\d+(?:,\d{3})*(?:\.\d{1,2})?\s?USDU+007C\d+(?:,\d{3})*(?:\.\d{1,2})?\s?dollars')

# Find all matches in the text

matches = re.finditer(price_pattern, text)

# Extract and return the matched prices

prices = [match.group(0) for match in matches]

return prices

Organize information in Data Frame: As all functions are ready to provide all necessary information, it is time to organize this information into a data frame that will be displayed as a tabular format. To accomplish this, a new data frame is created with column names “Title”, “Person”, “Product”, “Condition”, “Actions”, and “Price”, and a loop is iterated for the old data frame to send each tokenized row to functions, which then fill rows of the new data frame with relevant information from each column, as shown in the code below.

title = ""

for index, row in df.iterrows():

new_title = row['Title']

for sentence in row['Tokenized Details']:

Person = " ,".join(find_proper_nouns(sentence))

Product = " ,".join(find_product_types(sentence))

Condition = " ,".join(find_product_conditions(sentence))

Action = " ,".join(find_actions(sentence))

Price = " ,".join(find_prices(sentence))

if new_title != title:

new_df = pd.concat([new_df, pd.DataFrame({"Title": [new_title], "Person": [Person], "Product": [Product], "Condition": [Condition], "Actions": [Action], "Price": Price})], ignore_index=True)

else:

new_df = pd.concat([new_df, pd.DataFrame({"Title": " ", "Person": [Person], "Product": [Product], "Condition": [Condition], "Actions": [Action], "Price": Price})], ignore_index=True)

title = new_title

new_df

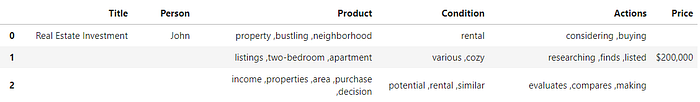

Results: For the sample data shown above, the following results were generated using the proposed methodology, which are very impressive.

For complete code visit following Kaggle notebook

https://www.kaggle.com/code/danishjavedcodes/unstructured-financial-text-into-tables

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.