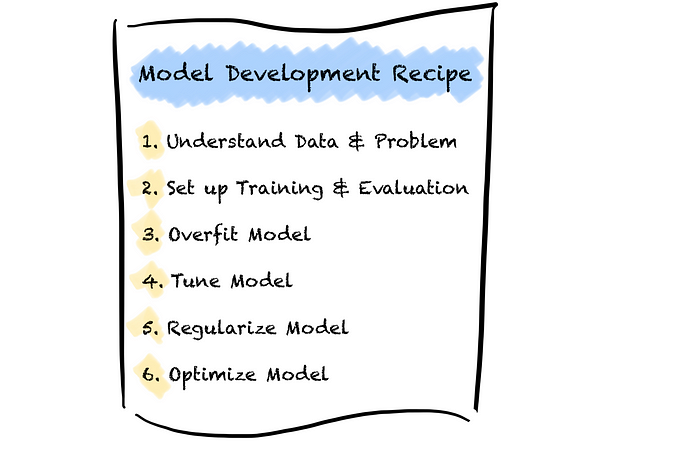

A Recipe For a Robust Model Development Process

Author(s): Jonte Dancker

Originally published on Towards AI.

ML models fail silently.

A model might fail but still produce output, and we might not even get a signal of why or where it fails. There are many reasons for a model to fail: bad data, bugs in the code, wrong hyperparameters, or a lack of predictive power.

But how can we ensure that the model does not fail silently? Well, we cannot. But we can reduce the probability that it does.

First, we must be aware that ML development differs from traditional software development. It is more iterative and involves more debugging than developing. We cannot plan everything in detail, as we do not know what we will find in the data along the way. We must build a prototype and iterate to identify issues and improvements.

Second, there is no off-the-shelf solution to our problem. Libraries may show snippets that give the impression they will magically solve it, but they will not.

Whether we get good results often depends less on the model than on the data we feed it and on a reliable pipeline. Getting there usually takes a lot of effort.

Overall, we need high confidence in our pipeline, model, and understanding of the problem and data.

However, we cannot test many of the above points with unit tests as in traditional software development. Instead, we need to be patient and pay attention to details. A fast and furious approach to training ML models does not work.

To gain high confidence in my model development process, I follow three guidelines:

- Build from simple to complex

- Add complexity in small, verifiable pieces

- Debug first, optimize later

Following these guidelines helps me to find bugs and misconfigurations more easily.

We must understand what we change and how it will affect the model. For this, we can make concrete hypotheses about what will happen and test them with experiments. If the results differ from what we expected, we should dig deeper and find the cause. We need to catch such discrepancies as early as possible.

To follow these guidelines, I usually take six steps.

1. Understand the data and problem

Understanding the data is a critical step, as it guides our decisions on models, features, and approaches. I rely heavily on visualizations and do whatever gives me a good feel for distributions, outliers, and so on.

I try lots of different things and spend most of my model development time on the data.
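
Plots are the main tool here, but a quick numeric profile is a useful complement. The sketch below is a minimal example, assuming the data is a NumPy array with NaN marking missing values; `profile_columns` is a hypothetical helper, and the 1.5 × IQR rule is just one common outlier heuristic, not a universal definition.

```python
import numpy as np


def profile_columns(X, names):
    """Summarize each column: basic statistics, missing values, and IQR outliers.

    X is a 2-D float array (rows = samples, columns = features); NaN marks a
    missing value. Assumes every column has at least one non-missing value.
    """
    report = {}
    for j, name in enumerate(names):
        col = X[:, j]
        present = col[~np.isnan(col)]  # ignore missing values for the statistics
        q1, q3 = np.percentile(present, [25, 75])
        iqr = q3 - q1
        lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
        report[name] = {
            "mean": float(present.mean()),
            "std": float(present.std()),
            "min": float(present.min()),
            "max": float(present.max()),
            "n_missing": int(np.isnan(col).sum()),
            "n_outliers": int(((present < lower) | (present > upper)).sum()),
        }
    return report


# Tiny illustrative data set (made up): column "a" has an extreme value,
# column "b" has a missing value.
X = np.array([
    [1.0, 10.0],
    [2.0, np.nan],
    [3.0, 12.0],
    [4.0, 11.0],
    [100.0, 13.0],
])
report = profile_columns(X, ["a", "b"])
for name, stats in report.items():
    print(name, stats)
```

Here the value 100 in column `a` is flagged as an outlier and column `b` reports one missing value. Whether such points are errors or genuine extremes is exactly what the visualizations should help us decide.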

2. Set up training and evaluation process

Once I have a good idea of the data, it is time to build the pipeline and a baseline model.

The goal is to gain trust in my training and evaluation pipeline through experiments. If we cannot trust this pipeline, we will later be unable to tell why our model underperforms. Is it the model, or is there a bug in the pipeline?

In the beginning, problems often come from bugs rather than from a wrong model design or badly chosen hyperparameters.

Hence, in this step, we should not do anything fancy. We should choose a simple model that we cannot mess up, use only a few features, and skip any fancy feature engineering. Scaling the data should be enough at this point. Our first goal is not good model performance but a pipeline that works. We will improve the model later.
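
A minimal sketch of such a first pipeline, assuming a synthetic regression problem and NumPy only (all names and data here are illustrative). The scaler is fitted on the training split only, because fitting it on all data is a classic way to leak test information without any error message. A plain least-squares model plays the role of the simple model, and a predict-the-mean baseline tells us whether the pipeline beats the trivial answer at all.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic regression data (an assumption for illustration): three features on
# very different scales, a linear signal, and Gaussian noise.
n = 200
X = rng.normal(loc=5.0, scale=[1.0, 10.0, 0.1], size=(n, 3))
y = X @ np.array([2.0, -0.3, 5.0]) + rng.normal(scale=1.0, size=n)

# Split first, then fit the scaler on the training split only, so that no
# statistics from the test split leak into training.
X_train, X_test = X[:150], X[150:]
y_train, y_test = y[:150], y[150:]
mean, std = X_train.mean(axis=0), X_train.std(axis=0)
X_train_s = (X_train - mean) / std
X_test_s = (X_test - mean) / std


def add_intercept(A):
    """Prepend a column of ones so the linear model can learn an offset."""
    return np.column_stack([np.ones(len(A)), A])


# Simple model: ordinary least squares with an intercept.
w, *_ = np.linalg.lstsq(add_intercept(X_train_s), y_train, rcond=None)
model_mse = float(np.mean((add_intercept(X_test_s) @ w - y_test) ** 2))

# Trivial baseline: always predict the training mean.
baseline_mse = float(np.mean((y_train.mean() - y_test) ** 2))

print(f"baseline MSE {baseline_mse:.2f}, model MSE {model_mse:.2f}")
```

If a simple model cannot beat the trivial baseline on data with a clear signal, the pipeline is the first suspect.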

We can gain trust in our pipeline through visualizations and unit tests. We can visualize losses, metrics, predictions, and features before they are passed into the model.

We can use unit tests for our feature engineering pipeline so that we catch bugs early.

A good trick is to write specific functions first. Once they work, generalize them.
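
A minimal sketch of this workflow, using a lag feature for a time series as an assumed example: a hypothetical `lag_one` is written and tested first, then generalized into `lag(values, k)`, and a test checks that the general version reproduces the specific one. The tests are plain `test_*` functions, so a runner such as pytest can collect them.

```python
def lag_one(values):
    """Specific version: shift a series by one step, padding the start with None."""
    if not values:
        return []
    return [None] + values[:-1]


def lag(values, k):
    """General version: shift a series by k steps, padding the first k entries with None."""
    if k < 0:
        raise ValueError("k must be non-negative")
    k = min(k, len(values))
    return [None] * k + values[: len(values) - k]


# Unit tests in plain-assert style; a runner such as pytest collects test_* functions.
def test_lag_one():
    assert lag_one([1, 2, 3]) == [None, 1, 2]
    assert lag_one([]) == []


def test_general_matches_specific():
    # The generalized function must reproduce the specific one we already trust.
    for series in ([], [7], [1, 2, 3, 4]):
        assert lag(series, 1) == lag_one(series)


def test_length_is_preserved():
    # A lag feature must stay aligned with the target, so its length cannot change.
    for k in range(6):
        assert len(lag([1, 2, 3, 4], k)) == 4
```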

3. Overfit the model

Only after we have a good understanding of our data, a robust training and evaluation pipeline, and trusted metrics should we look into models.

In this step, we want to verify that the model works and learns well from the data.

Again, do not be a hero and do not pick a fancy model. Stay as simple as possible: choose a simple model with standard settings, and do not add any regularization or dropout.

We train this model on a small subset of the training data with the goal of making it overfit. If it does not overfit, something is wrong. Is there a bug in our code? Does the data have predictive power? Are the features good enough? Did we choose the right model for the problem?
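
A minimal sketch of this overfitting check, assuming a linear model trained with plain gradient descent in NumPy as a stand-in for whatever model and training loop we actually use (`train_linear_gd` is hypothetical). On a tiny subset with more features than samples, a correct training loop has enough capacity to drive the training loss close to zero. The step size is set to 1/L, where L is the largest eigenvalue of the Hessian of the mean squared error, so gradient descent is guaranteed not to diverge on this quadratic loss.

```python
import numpy as np


def train_linear_gd(X, y, lr, steps):
    """Plain gradient descent on mean squared error, no regularization.

    Returns the weights and the training loss recorded before each update.
    """
    w = np.zeros(X.shape[1])
    history = []
    for _ in range(steps):
        residual = X @ w - y
        history.append(float(np.mean(residual ** 2)))
        grad = 2.0 / len(y) * X.T @ residual
        w -= lr * grad
    return w, history


rng = np.random.default_rng(42)
# A deliberately tiny training subset: 10 samples, 20 features.
# With more features than samples, a working linear model can fit the targets exactly.
X_small = rng.normal(size=(10, 20))
y_small = rng.normal(size=10)

# Step size 1/L, where L = 2 * s_max**2 / n and s_max is the largest singular value of X.
s_max = np.linalg.norm(X_small, ord=2)
lr = len(y_small) / (2.0 * s_max ** 2)

w, history = train_linear_gd(X_small, y_small, lr=lr, steps=5000)
print(f"initial loss {history[0]:.3f}, final loss {history[-1]:.2e}")
```

If the final loss stays far from zero in a setup like this, the problem is almost certainly in the code (for example, a wrong gradient sign or shape), not in the data.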

Again, add complexity step by step and check the performance after each change. Verify that the loss decreases with every added piece of complexity.
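
One way to make this check concrete, sketched under the assumption of a least-squares model on synthetic data: when features are added one at a time to a nested model, the training loss can only stay the same or go down, so any increase signals a bug. Validation loss has no such guarantee, which is why generalization needs its own step. `train_mse` is a hypothetical helper.

```python
import numpy as np


def train_mse(X, y):
    """Training MSE of an ordinary least-squares fit with an intercept."""
    A = np.column_stack([np.ones(len(y)), X])
    w, *_ = np.linalg.lstsq(A, y, rcond=None)
    return float(np.mean((A @ w - y) ** 2))


rng = np.random.default_rng(1)
X = rng.normal(size=(100, 5))
y = X @ np.array([3.0, -2.0, 1.0, 0.5, 0.0]) + rng.normal(scale=0.5, size=100)

# Add one feature at a time and record the training loss after each step.
losses = [train_mse(X[:, :k], y) for k in range(1, X.shape[1] + 1)]
for k, loss in enumerate(losses, start=1):
    print(f"{k} feature(s): train MSE = {loss:.4f}")

# Nested least-squares models cannot fit the training data worse when a feature
# is added, so an increase here points to a bug rather than to the model.
assert all(b <= a + 1e-9 for a, b in zip(losses, losses[1:]))
```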

4. Regularize the model

Now we have verified that the model can learn and has predictive power. The next step is to ensure that it generalizes well. We have several options: we can get more data if the initial data set is small, remove features, try a smaller model, add dropout or other regularization, or use early stopping.

But keep in mind that we want to increase complexity slowly. Do not try all approaches at the same time.
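
As an example of one of these options added in isolation, here is a minimal, framework-agnostic sketch of early stopping, assuming we log one validation loss per epoch. `early_stopping_epoch` and the loss values are hypothetical; in practice the same check runs after every epoch during training rather than on a finished list.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of per-epoch validation losses.

    Training stops once the loss has not improved by more than min_delta for
    `patience` consecutive epochs. Epochs are 0-indexed.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Patience never ran out: training used every epoch.
    return len(val_losses) - 1, best_epoch


# Hypothetical validation curve: improves, then starts to overfit after epoch 3.
val_losses = [1.00, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
stop, best = early_stopping_epoch(val_losses, patience=3)
print(f"stop after epoch {stop}, restore weights from epoch {best}")
```

Frameworks ship this logic ready-made, for example Keras' `EarlyStopping` callback with its `patience`, `min_delta`, and `restore_best_weights` arguments.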

5. Tune the model

Once we have a model that learns and generalizes well, we can tune its hyperparameters. We don’t need to tune all of them; usually only a few have a large impact. Hence, a random search over the hyperparameter space often works better than a full grid search. Usually, we can start with the Adam optimizer and its default settings. Those settings might not be optimal, but Adam is fast, efficient, and fairly robust to a poor choice of settings.

No matter how we tune our model, we must always check our metrics after each change. Only by checking the metrics frequently can we ensure that we are continuously improving the model.
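
A minimal sketch of random search, assuming we can wrap "train and validate" in a function that returns a validation loss. `random_search`, the search space, and `toy_objective` are hypothetical stand-ins. Sampling the learning rate on a log scale is a common choice, and keeping the full trial history makes it easy to check the metric after every trial.

```python
import math
import random


def random_search(objective, space, n_trials, seed=0):
    """Evaluate n_trials random configurations; return (best_config, best_score, history).

    `space` maps each hyperparameter name to a function that draws a value from
    a random.Random instance. Lower scores are better.
    """
    rng = random.Random(seed)
    history = []
    for _ in range(n_trials):
        config = {name: sample(rng) for name, sample in space.items()}
        score = objective(config)
        history.append((config, score))  # log every trial, not only the best one
    best_config, best_score = min(history, key=lambda trial: trial[1])
    return best_config, best_score, history


# Hypothetical search space: learning rate on a log scale, depth from a small grid.
space = {
    "learning_rate": lambda rng: 10 ** rng.uniform(-5, -1),
    "depth": lambda rng: rng.choice([2, 4, 6, 8]),
}


def toy_objective(config):
    # Stand-in for "train the model and return its validation loss".
    return (math.log10(config["learning_rate"]) + 3) ** 2 + 0.1 * (config["depth"] - 4) ** 2


best_config, best_score, history = random_search(toy_objective, space, n_trials=50)
print(best_config, round(best_score, 3))
```

Because every trial draws a fresh value for each hyperparameter, 50 trials try 50 distinct learning rates, whereas a grid with the same budget over two hyperparameters tests fewer distinct values of each one. That is the intuition for why random search helps when only a few hyperparameters matter.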

6. Optimize the model

After we have found a good architecture and hyperparameters, we are not done yet. We can still improve model performance, for example by using an ensemble of different models or by training the model for longer.
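
The simplest ensemble averages the predictions of several models. A minimal sketch, assuming a regression task and synthetic predictions in place of real models: for squared error, the averaged prediction is never worse than the average of the individual models' errors (a consequence of the loss being convex), and when the models' errors are not strongly correlated, it can beat each of them.

```python
import numpy as np

rng = np.random.default_rng(7)
y_true = rng.normal(size=500)

# Hypothetical predictions from three different models: each tracks the target
# but makes its own independent errors of different sizes.
predictions = [y_true + rng.normal(scale=s, size=500) for s in (0.5, 0.6, 0.8)]


def mse(pred, target):
    return float(np.mean((pred - target) ** 2))


individual = [mse(p, y_true) for p in predictions]
ensemble = mse(np.mean(predictions, axis=0), y_true)
print("individual MSEs:", [round(m, 3) for m in individual])
print("ensemble MSE:   ", round(ensemble, 3))
```

With real models the errors are usually correlated, so the gain is smaller, which is why ensembles of genuinely different models tend to help more than copies of the same one.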

Although the steps suggest a linear development process, the process is not linear. We need to go back and forth between steps, and we will often return to step 1. But every time we try a new model, we should follow steps 3 to 5 in order.

Note: Content contains the views of the contributing authors and not Towards AI.