What is inductive bias?

Last Updated on March 24, 2022 by Editorial Team

Author(s): Ampatishan Sivalingam

Originally published on Towards AI.

Is making assumptions good when training a model?

The inductive bias (also known as learning bias) of a learning algorithm is a set of assumptions that the learner uses to predict outputs of given inputs that it has not encountered — Wikipedia

In machine learning and artificial intelligence there are many kinds of bias, such as selection bias, overgeneralization bias, and sampling bias. In this article we are going to talk about inductive bias, without which learning would not be possible. To derive insights or predict outputs from a dataset, we need to know what we are looking for. We have to make some assumptions about the data itself to make learning possible; these assumptions are known as inductive bias.

We will go through an example first to understand the intuition behind the inductive bias.

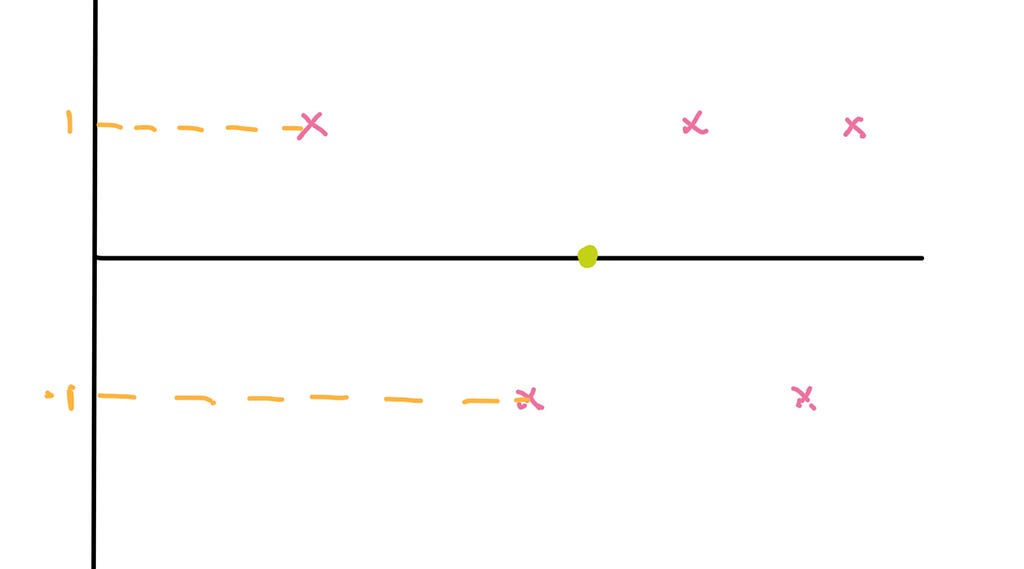

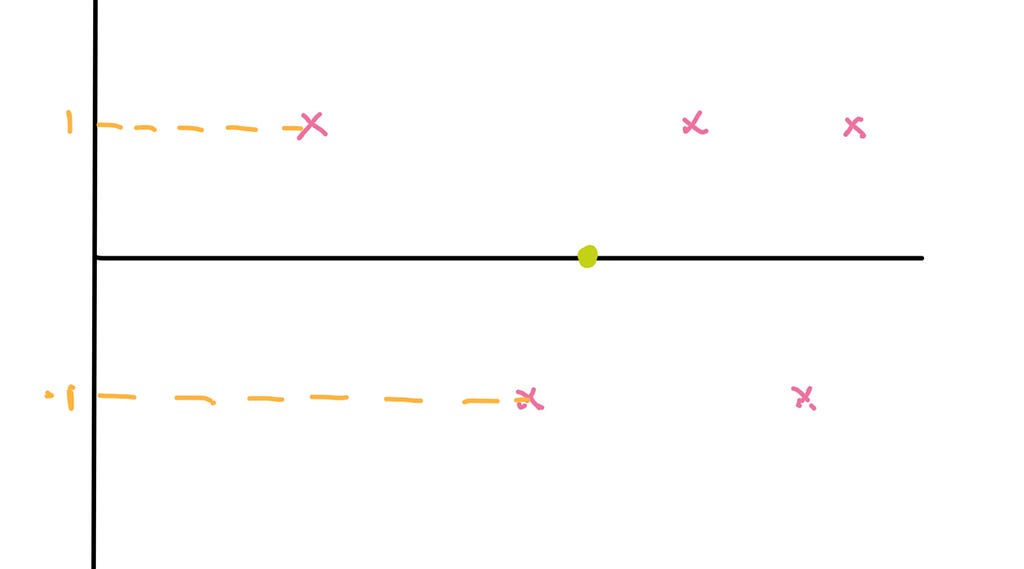

The figure above illustrates the data points of a dataset, where the red points belong to the training set and the green point is the test point. The task is a binary classification: decide whether a point belongs to class “-1” or class “1” based on the feature shown on the x-axis. Assume that our data space consists of 100 data points.

Now, to find the label for the green point without any information about the labels and without making any assumptions, we are left with 2¹⁰⁰ candidate functions, one for every possible way of labeling the 100 points. Even if we remove the functions that disagree with our training set, we are still left with 2⁹¹ functions (a count which implies that the training set fixes 9 of the 100 labels), half of which predict “-1” for the green point and half of which predict “1”. If we instead assume that the target function must be constant, either always “1” or always “-1”, then after looking at the training samples we can say that the target function is the constant 1.
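The counting argument above can be checked by brute force on a scaled-down version of the problem. The sketch below is only an illustration under stated assumptions: it uses 10 points instead of 100 so that every function can be enumerated, and the training indices, the test index, and the names `train`, `test_point`, and `consistent` are hypothetical choices, not taken from the figure.

```python
from itertools import product

N_POINTS = 10        # scaled down from the article's 100 so enumeration is feasible
LABELS = (-1, 1)

# Hypothetical training set: point index -> observed label (all labelled 1).
train = {0: 1, 2: 1, 5: 1, 7: 1}
test_point = 4       # stands in for the green point; not part of the training set


def consistent(hypothesis, train):
    """Return True if the hypothesis reproduces every training label."""
    return all(hypothesis[i] == y for i, y in train.items())


# No inductive bias: every possible labelling of the points is a candidate.
all_functions = list(product(LABELS, repeat=N_POINTS))
survivors = [h for h in all_functions if consistent(h, train)]
votes_pos = sum(1 for h in survivors if h[test_point] == 1)
votes_neg = len(survivors) - votes_pos

# Strong inductive bias: only the two constant functions are candidates.
constant_functions = [tuple([c] * N_POINTS) for c in LABELS]
constant_survivors = [h for h in constant_functions if consistent(h, train)]

print(len(all_functions))                            # 2**10 = 1024
print(len(survivors))                                # 2**(10 - 4) = 64
print(votes_pos, votes_neg)                          # 32 32
print([h[test_point] for h in constant_survivors])   # [1]
```

Without any assumption the surviving functions split evenly on the test point, so the training data tells us nothing about it. Once only constant functions are allowed, a single candidate survives and the prediction is determined.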

In the scenario above we have seen two extremes: one with no inductive bias, where learning is not possible, and one with a strong inductive bias, where we could reach a conclusion from very little training data. In real-world scenarios we will be somewhere in the middle, with some inductive bias and a larger training dataset.

In real-world machine learning scenarios, we have to find a good function space for the hypothesis for a particular application. For example, when we are given a dataset for regression or classification, we should use our understanding of the training data to select models that will correctly model it. The assumptions behind that choice are the inductive bias.
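To make the role of the function space concrete, here is a minimal sketch that fits the same training data with two different hypothesis spaces. Everything in it is an assumption made for illustration: the toy data follows y = 2x + 1, and `fit_line` (least-squares straight line) and `fit_nearest` (copy the label of the nearest training point) are hypothetical helpers rather than models discussed in the article.

```python
def fit_line(xs, ys):
    """Least-squares straight line: assumes the target is linear in x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def fit_nearest(xs, ys):
    """Nearest neighbour: assumes nearby inputs share the same output."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


# Toy training data generated from y = 2x + 1 (an assumption for this sketch).
train_x = [0, 1, 2, 3, 4, 5]
train_y = [1, 3, 5, 7, 9, 11]

line = fit_line(train_x, train_y)
nearest = fit_nearest(train_x, train_y)

# Both models reproduce the training set exactly ...
print(all(abs(line(x) - y) < 1e-9 for x, y in zip(train_x, train_y)))  # True
print(all(nearest(x) == y for x, y in zip(train_x, train_y)))          # True

# ... but they disagree on an unseen input far from the training data.
print(line(10))     # 21.0
print(nearest(10))  # 11
```

Both models agree with every training example, so the data alone cannot tell them apart. Which prediction at x = 10 is better depends on whether the assumption built into each model matches the process that actually generated the data.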

To give a more familiar example: an engineer, a scientist, and a mathematician are on a train to Scotland when they see a black sheep. The engineer immediately says that all sheep in Scotland are black. The scientist disagrees: no, some sheep in Scotland are black. Annoyed by this conversation, the mathematician says that there is at least one sheep in Scotland that is black on at least one side. Each of them represents one of the levels of inductive bias from the first example: the engineer has a strong inductive bias, the scientist has some inductive bias, and the mathematician has none. The question is who you think is right if you know nothing about sheep in Scotland.

“even after the observation of the frequent or constant conjunction of objects, we have no reason to draw any inference concerning any object beyond those of which we have had experience.” -Hume

In machine learning we go against Hume's statement, because we generalize the patterns that we observed in the training data to unseen test data as well. The assumptions that justify this generalization are the inductive bias, so without inductive bias learning would not be possible.

Some of you may have already heard of the No Free Lunch theorem, which states:

“if all true functions are equally likely then no learning algorithm is better than any other”

That means that if we make no assumptions about the data we have to model, we have no basis for selecting one target function over another.
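The statement can be illustrated, though not proved, on a tiny domain. The sketch below assumes 5 input points, of which 3 are used for training and 2 for testing, and treats every possible target function as equally likely by averaging over all of them. The three learners (`majority_learner`, `always_positive`, `anti_majority`) are hypothetical examples chosen to be as different from each other as possible.

```python
from itertools import product

DOMAIN = range(5)
TRAIN_IDX = [0, 1, 2]   # points whose labels the learner gets to see
TEST_IDX = [3, 4]       # unseen points used for evaluation


def majority_learner(train_labels):
    """Predict the most common training label everywhere."""
    guess = 1 if sum(train_labels) > 0 else -1
    return lambda x: guess


def always_positive(train_labels):
    """Ignore the data and always predict 1."""
    return lambda x: 1


def anti_majority(train_labels):
    """Predict the opposite of the most common training label."""
    guess = -1 if sum(train_labels) > 0 else 1
    return lambda x: guess


def average_off_training_accuracy(learner):
    """Mean test accuracy when every target function is equally likely."""
    targets = list(product((-1, 1), repeat=len(DOMAIN)))
    total = 0.0
    for target in targets:
        predict = learner([target[i] for i in TRAIN_IDX])
        correct = sum(predict(i) == target[i] for i in TEST_IDX)
        total += correct / len(TEST_IDX)
    return total / len(targets)


for learner in (majority_learner, always_positive, anti_majority):
    print(learner.__name__, average_off_training_accuracy(learner))
# Each learner prints 0.5
```

Averaged over all 32 target functions, each learner scores exactly 0.5 on the unseen points, no matter how sensible or perverse its strategy looks. A learner can only do better than chance on average when some target functions are considered more likely than others, which is exactly what an inductive bias encodes.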

What is inductive bias? was originally published in Towards AI on Medium.

Note: Content contains the views of the contributing authors and not Towards AI.