REACH: Enterprise RAG Framework

Last Updated on April 22, 2024 by Editorial Team

Author(s): Ketan Bhavsar

Originally published on Towards AI.

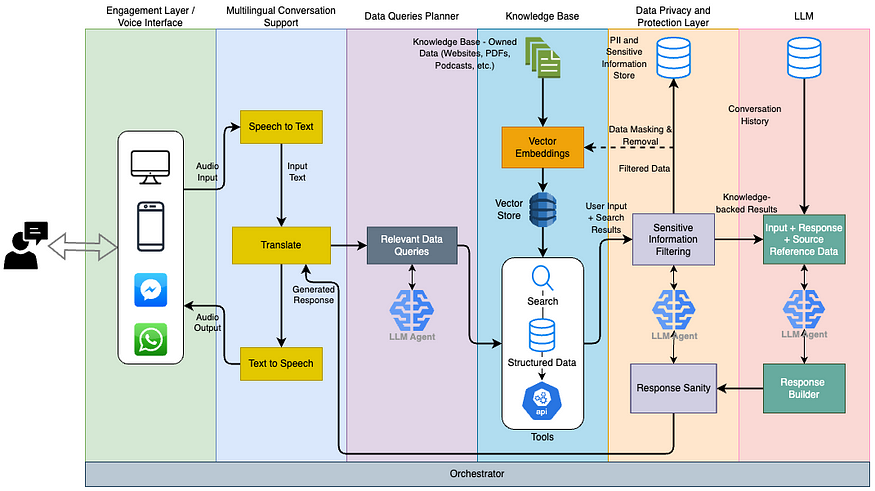

REACH: Responsible Enterprise AI for Conversational Harmony — A Unified RAG Framework for Enhanced User Experiences in ChatBots

I have been working on marketing technology solutions for more than a year now, and one of the most amazing parts of it is the speed at which technology adoption happens within the domain. Customer engagement has changed faster than ever over the last decade, a journey that started with call centres and moved through IVRs and rule-based ChatBots to WhatsApp Business. The next big thing in this area is AI-enabled engagement assistants, which help users search, navigate and understand a company's products and/or services with ease. Although the benefits of this modern L3 support are clear, it is still in a nascent stage and struggling to go mainstream for two reasons: data protection & privacy, and response correctness (brand language, tonality, non-offensiveness, inclusivity, hallucination, etc.). Based on my experience over the past year and after studying some excellent architectures, here I present a framework that can be used to mitigate these issues and build a dependable AI-powered conversational experience. I have christened it REACH, an acronym for Responsible Enterprise AI for Conversational Harmony. It can be used to either build or buy conversational AI ChatBots that help with marketing, customer engagement, servicing and a lot more.

As part of their digital transformation, enterprises are increasingly introducing conversational AI to engage with their customers. However, almost all of them label it an early adoption or a beta, and the reported user experiences are strikingly similar. Feedback on the responses is mixed, and in most cases the engagement is found to lack the human touch of empathy, trust and transparency, which ultimately erodes confidence in the brand and frustrates users. Much of this could be avoided by implementing systems that prioritise privacy, fairness and transparency in their interactions. One way to do this is by calling out knowledge limitations, adopting the right persona and providing clear answers.

REACH addresses these concerns by building on the concepts of enterprise RAG to reduce hallucinations, combined with an orchestrated layer of LLMs fine-tuned to ensure an inclusive, fair and engaging human-like conversation experience.

Building Blocks for the REACH Framework —

1. Engagement/UI Layer

The Engagement Layer serves as the interface between users and solutions built with the REACH framework. It hosts the options available to engage with customers through chat or voice interfaces, whether on owned platforms like mobile apps and websites or on social media platforms like WhatsApp and Messenger.

Components of the engagement interface –

a) Natural Language Understanding (NLU): interpreting the intent and key details of free-form user input makes interactions feel more intuitive and conversational.

b) Multi-Modal Support: with the growth of voice interfaces, it has become important for any customer support system to support both text and voice. This improves accessibility and accommodates the growing user preference for voice.

c) Context Aware: one of the reasons IVRs and dialog-based ChatBots lose their appeal is their inability to maintain context. Building on conversation history keeps the chat context-aware and the engagement cohesive.
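Context awareness through conversation history can be illustrated with a small sliding-window memory. This is a minimal sketch, not part of the REACH specification: `ConversationMemory`, `Turn` and `max_turns` are hypothetical names, and a production system might summarise older turns rather than simply dropping them.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class Turn:
    role: str  # "user" or "assistant"
    text: str


class ConversationMemory:
    """Hypothetical helper: keeps recent turns so each new message carries its context."""

    def __init__(self, max_turns: int = 6) -> None:
        # A deque with maxlen drops the oldest turn automatically once full
        self.turns: deque[Turn] = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        self.turns.append(Turn(role, text))

    def as_prompt(self, new_message: str) -> str:
        # Render the retained history followed by the new user message
        lines = [f"{turn.role}: {turn.text}" for turn in self.turns]
        lines.append(f"user: {new_message}")
        return "\n".join(lines)


memory = ConversationMemory(max_turns=2)
memory.add("user", "Hi")
memory.add("assistant", "Hello! How can I help?")
memory.add("user", "Do you deliver to Pune?")
memory.add("assistant", "Yes, in 3 to 5 days.")
print(memory.as_prompt("What about Chennai?"))
```

The follow-up "What about Chennai?" is only answerable because the retained turns travel with it, while the greeting has been evicted to keep the prompt short.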

2. Multilingual Conversation Module

Building for the world truly means localising for multiple languages. This is especially true when building for a linguistically diverse country like India. To support this diversity of language interactions while ensuring responsible and inclusive communication, REACH recommends the Multilingual Conversation Module as the second layer of engagement in the system.

Key considerations for this module are —

a) Language Diversity Adaptation: supporting multiple languages means navigating the diversity of syntaxes, semantics and cultural nuances. The conversation system needs to understand and respond appropriately in varying linguistic contexts.

b) Improving Inclusivity in Language Processing: to be inclusive across this diversity, REACH recommends techniques like language detection, language-specific models and cross-lingual knowledge transfer to improve accuracy and cultural sensitivity.

c) Seamless Integration for Cohesive Conversations: the Multilingual Conversation Module enables effortless communication across languages to uphold a consistent UX. It adapts responses to cultural nuances, promoting understanding and empathy in cross-cultural interactions.
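Language detection followed by routing to a language-specific model can be sketched as below. This is a simplified assumption, not the article's method: it detects the dominant Unicode script rather than the language, so Devanagari text (used for Hindi, Marathi, Nepali and others) or romanised Hindi would need a proper language-identification model in practice. The routing table and model identifiers are hypothetical placeholders.

```python
import unicodedata
from collections import Counter

# Hypothetical routing table: Unicode script -> placeholder model identifier
MODEL_BY_SCRIPT = {
    "LATIN": "model-en",
    "DEVANAGARI": "model-hi",
    "TAMIL": "model-ta",
    "BENGALI": "model-bn",
}
FALLBACK_MODEL = "model-multilingual"


def dominant_script(text: str) -> str:
    """Return the most frequent script among the letters in `text`."""
    counts: Counter[str] = Counter()
    for ch in text:
        if ch.isalpha():
            # Character names begin with the script, e.g. "DEVANAGARI LETTER KA"
            counts[unicodedata.name(ch, "UNKNOWN").split()[0]] += 1
    return counts.most_common(1)[0][0] if counts else "UNKNOWN"


def route(text: str) -> str:
    """Pick a language-specific model, falling back to a multilingual one."""
    return MODEL_BY_SCRIPT.get(dominant_script(text), FALLBACK_MODEL)


for message in ["Where is my order?", "मेरा ऑर्डर कहाँ है?", "என் ஆர்டர் எங்கே?"]:
    print(route(message))
```

Keeping a multilingual fallback means an unrecognised script still gets an answer instead of an error.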

3. Data Queries Planner

Another major part of a REACH-powered conversational experience is its ability to answer any query to the extent of its knowledge. This module breaks the input message down into actionable data requests for the querying system, which in turn helps retrieve relevant and accurate information.

The query planner has three main aspects: accuracy, efficiency, and privacy & fairness. Accuracy ensures reliable information, efficiency reduces latency, and privacy considerations safeguard user data and promote fairness.
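Breaking an input message into actionable data requests can be pictured with a rule-based planner. This is a minimal sketch under stated assumptions: `DataRequest`, the `ROUTES` keyword table and the source names are hypothetical, and a real planner would more likely use an LLM or intent classifier instead of keyword rules.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class DataRequest:
    source: str  # which data store should answer this part
    query: str   # the sub-question sent to that store


# Hypothetical keyword -> data source rules; an LLM-based planner could replace these
ROUTES = {
    "order": "order_db",
    "refund": "policy_docs",
    "return": "policy_docs",
    "price": "product_catalog",
}
DEFAULT_SOURCE = "knowledge_base"


def plan(message: str) -> list[DataRequest]:
    """Split a compound message into sub-questions and route each one."""
    parts = re.split(r"\?|\band\b", message, flags=re.IGNORECASE)
    requests = []
    for part in (p.strip() for p in parts):
        if not part:
            continue
        lowered = part.lower()
        # First matching keyword wins; otherwise fall back to the general knowledge base
        source = next((src for kw, src in ROUTES.items() if kw in lowered), DEFAULT_SOURCE)
        requests.append(DataRequest(source, part))
    return requests


for request in plan("Where is my order and what is your return policy?"):
    print(request)
```

The word-boundary split keeps words such as "brand" intact, and each resulting request can then be executed against its own store with its own access rules.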

Strategies to optimize data retrieval while building queries for privacy and fairness —

a) Query Optimisation: Techniques such as query rewriting, indexing, and caching enhance the efficiency of data retrieval by minimizing redundant operations and maximizing the use of pre-computed results.

b) Privacy-preserving Data Retrieval: Methods such as differential privacy, data anonymization and encryption can be employed to protect sensitive information during data retrieval. These techniques help preserve user privacy while retaining as much of the utility of the retrieved data as possible.
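Query rewriting and caching can be combined so that differently phrased questions reuse one pre-computed result. This is a sketch assuming a toy normaliser; the `SYNONYMS` map is hypothetical and `search_backend` is a placeholder for the real vector or database lookup.

```python
import re
from functools import lru_cache

# Hypothetical synonym map: rewriting maps equivalent phrasings to one canonical form
SYNONYMS = {"cost": "price", "charges": "price", "delivery": "shipping"}
backend_calls = 0


def rewrite_query(raw: str) -> str:
    """Lowercase, drop punctuation and map synonyms to canonical terms."""
    tokens = re.findall(r"[a-z0-9]+", raw.lower())
    return " ".join(SYNONYMS.get(token, token) for token in tokens)


@lru_cache(maxsize=1024)
def search_backend(normalized_query: str) -> tuple[str, ...]:
    """Placeholder for an expensive retrieval call; counts how often it really runs."""
    global backend_calls
    backend_calls += 1
    return (f"results for '{normalized_query}'",)


def retrieve(raw: str) -> tuple[str, ...]:
    # Rewriting first means differently phrased questions share one cache entry
    return search_backend(rewrite_query(raw))


print(retrieve("Delivery cost?"))
print(retrieve("delivery   COST"))
print(backend_calls)
```

Both calls normalise to "shipping price", so the backend runs once; returning an immutable tuple keeps cached results safe from accidental mutation by callers.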

Example scenarios that illustrate the value of the Data Queries Planner:

a) Personalized Recommendations: By optimizing data retrieval and incorporating user preferences, the system can deliver recommendations tailored to each user's interests, which enhances user satisfaction and engagement with the AI system.

b) Contextual Information Retrieval: The planner facilitates the retrieval of contextually relevant information based on the ongoing conversation, ensuring cohesiveness in conversations.

c) Privacy-preserving Data Access: In any public-facing interface, it is important to use privacy-preserving techniques to retrieve information from sensitive data sources, such as personal user data or proprietary company information, without compromising confidentiality or data security. This lets users interact with the AI system confidently, knowing that their privacy is protected.

The Data Queries Planner helps uphold the principles of responsibility and conversational harmony by prioritizing user privacy, ensuring data integrity and promoting fairness in the retrieval system.

4. Knowledge Base (Vector Store) built for RAG

This is the heart of the REACH framework: this is where the magic happens and a harmonious conversational AI solution comes together. The knowledge base is essentially a vector store that serves as a repository for both structured and unstructured data. It can hold data ranging from text articles and pages to audio podcasts and YouTube videos (typically indexed through their transcripts). The possibilities are endless.

The knowledge base enhances the overall effectiveness of the conversational AI system by applying Retrieval Augmented Generation (RAG). The vector store enables efficient data retrieval, so that responses and actions are grounded in verified data sources, which greatly limits the chances of hallucination.

An efficient knowledge base can be designed by adhering to a few helpful principles. First, a dual retrieval mechanism, which blends traditional keyword-based search with context-aware retrieval using pre-trained language models like BERT or GPT to ensure comprehensive coverage and relevance. Second, data representation using contextual embeddings, which capture rich semantic information and support accurate matching. Third, semantic similarity computation, which enables nuanced, contextually relevant retrieval by considering relationships between words and phrases. This is especially important for keeping the conversation relevant.

Overall, implementing the knowledge base revolves around efficient indexing and storage for effective retrieval. These practices optimize data access, enable on-the-fly response generation and surface the most informative passages to users.
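A dual retrieval mechanism that blends keyword matching with semantic similarity can be sketched as a weighted score. This is an illustrative assumption rather than a prescribed formula: the keyword score is simple term overlap (production systems often use BM25), the 3-dimensional vectors are toy stand-ins for embeddings from an encoder model, and `alpha` is a hypothetical tuning weight.

```python
import math

Vector = list[float]


def keyword_score(query: str, doc: str) -> float:
    """Fraction of query terms that also appear in the document (exact match)."""
    q_terms, d_terms = set(query.lower().split()), set(doc.lower().split())
    return len(q_terms & d_terms) / len(q_terms) if q_terms else 0.0


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def hybrid_rank(query: str, query_vec: Vector, docs: list[tuple[str, Vector]],
                alpha: float = 0.6) -> list[tuple[float, str]]:
    """Blend semantic and keyword scores; alpha is the weight on the semantic part."""
    scored = [
        (alpha * cosine(query_vec, vec) + (1 - alpha) * keyword_score(query, text), text)
        for text, vec in docs
    ]
    return sorted(scored, reverse=True)


# Toy 3-dimensional vectors standing in for embeddings from a real encoder model
docs = [
    ("how to reset your router", [0.9, 0.1, 0.0]),
    ("router warranty terms", [0.2, 0.8, 0.1]),
    ("restart the modem to restore internet", [0.85, 0.15, 0.05]),
]
for score, text in hybrid_rank("reset router", [0.88, 0.12, 0.02], docs):
    print(f"{score:.2f}  {text}")
```

The modem article shares no keywords with the query yet ranks second on semantic similarity, while the warranty page, which matches "router" literally, drops to last; this is the complementary coverage the dual mechanism aims for.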
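Efficient indexing and storage can be illustrated with a tiny in-memory vector index that normalises vectors once at insertion, so each search is a plain dot product. This is a brute-force sketch with a hypothetical `VectorIndex` class; real deployments typically rely on approximate nearest-neighbour structures (for example HNSW in FAISS or a vector database) to avoid scanning every document.

```python
import heapq
import math


class VectorIndex:
    """Hypothetical in-memory index: vectors are normalised once, at insertion time."""

    def __init__(self) -> None:
        self._ids: list[str] = []
        self._texts: list[str] = []
        self._vectors: list[list[float]] = []

    @staticmethod
    def _normalise(vector: list[float]) -> list[float]:
        norm = math.sqrt(sum(x * x for x in vector))
        if norm == 0:
            raise ValueError("cannot index a zero vector")
        return [x / norm for x in vector]

    def add(self, doc_id: str, text: str, vector: list[float]) -> None:
        self._ids.append(doc_id)
        self._texts.append(text)
        self._vectors.append(self._normalise(vector))

    def search(self, query_vector: list[float], k: int = 3) -> list[tuple[str, str, float]]:
        # With unit vectors, the dot product equals cosine similarity
        q = self._normalise(query_vector)
        scores = ((sum(a * b for a, b in zip(q, v)), i) for i, v in enumerate(self._vectors))
        # nlargest keeps only the top k instead of sorting every score
        return [(self._ids[i], self._texts[i], score) for score, i in heapq.nlargest(k, scores)]


index = VectorIndex()
index.add("faq-1", "How do I reset my password?", [1.0, 0.0])
index.add("faq-2", "What are your store hours?", [0.0, 1.0])
index.add("faq-3", "Can I change my login email?", [1.0, 1.0])
for doc_id, text, score in index.search([1.0, 0.1], k=2):
    print(doc_id, round(score, 3), text)
```

Paying the normalisation cost at write time is the same pre-computation idea that makes retrieval cheap at query time.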

Enterprise use cases for building with RAG —

a) Information Retrieval in Customer Support: In a customer support scenario, the Knowledge Base with RAG helps retrieve relevant troubleshooting guides, product manuals, and support articles based on user queries. By leveraging semantic similarity and context-aware retrieval, the system delivers accurate and timely assistance to users, enhancing their overall support experience.

b) Knowledge Sharing in Enterprise Collaboration: In an enterprise collaboration setting, a RAG system facilitates knowledge sharing and information dissemination among employees. By indexing and retrieving relevant documents, presentations and training materials, this framework enhances productivity and fosters a culture of continuous learning within the organization.

c) Content Recommendations in E-commerce: Within an e-commerce platform, the Knowledge Base with RAG powers personalized product recommendations and informative content suggestions. By analyzing user preferences and browsing history, a RAG-based solution retrieves product descriptions, reviews and related articles that match the user's interests, leading to increased engagement and sales conversion rates.

5. Data Privacy and Protection Layer

Data privacy and protection are the most important thresholds any conversational AI solution must clear to become generally available. In today's world, enterprises need to navigate a complex web of laws, regulations and ethical guidelines to ensure responsible use of data and protect user privacy, and many are holding back until these concerns are addressed. In the REACH framework, we propose mitigating the risks involved, to a certain extent, by incorporating a dedicated data protection layer powered by specially fine-tuned LLMs that enforce the enterprise brand's code of conduct.
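The code-of-conduct enforcement described above can be pictured as a gate between the LLM's draft response and the user. In this sketch the fine-tuned policy LLM is replaced by a simple phrase blocklist, purely as an assumption for illustration; `PolicyCheck`, `BLOCKED_PHRASES` and the fallback message are hypothetical, and any callable with the same signature (such as a wrapper around a moderation model) could be plugged in.

```python
from typing import Callable

# A policy check returns the names of violated rules; an empty list means compliant.
PolicyCheck = Callable[[str], list[str]]

# Hypothetical brand rules standing in for a fine-tuned policy model
BLOCKED_PHRASES = ("guaranteed returns", "stupid question")
FALLBACK = "Sorry, I can't help with that here. Let me connect you with our support team."


def blocklist_check(text: str) -> list[str]:
    """Toy policy check: flag any blocked phrase, case-insensitively."""
    lowered = text.lower()
    return [phrase for phrase in BLOCKED_PHRASES if phrase in lowered]


def guard_response(draft: str, check: PolicyCheck = blocklist_check) -> tuple[str, list[str]]:
    """Release the draft only if it passes the policy check; otherwise use a safe fallback."""
    violations = check(draft)
    return (FALLBACK if violations else draft), violations


print(guard_response("Your order ships tomorrow."))
print(guard_response("This fund offers Guaranteed Returns!"))
```

Returning the violations alongside the final text keeps an audit trail, which supports the transparency and accountability goals listed in the compliance considerations.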

Regulatory compliance in Enterprise AI:

a) Data Protection Regulations: Compliance with data protection regulations such as GDPR (General Data Protection Regulation) and CCPA (California Consumer Privacy Act) is essential for enterprises operating in global markets. These regulations impose strict requirements on data collection, processing, and storage, with hefty penalties for non-compliance.

b) Fairness and Bias: Ensuring fairness and mitigating bias in AI systems is crucial for promoting equitable outcomes and avoiding discriminatory practices. Ethical AI frameworks emphasize the need to identify and address biases in data and algorithms to uphold principles of fairness and social justice.

c) Transparency and Accountability: Transparency and accountability are foundational principles of responsible AI. Enterprises must be transparent about their data practices and accountable for the decisions made by AI systems. This includes providing clear explanations of how data is collected, used, and protected, as well as mechanisms for addressing user concerns and grievances.

Ways to ensure responsible use of data —

a) Data Minimization: Only ask for what is absolutely necessary, and minimize the collection and retention of personal data. This reduces the risk of unauthorized access to, or misuse of, sensitive information.

b) Data Anonymisation: Employ techniques like data masking and tokenization to ensure sensitive information is de-identified before storage or processing. This protects user privacy while still allowing for meaningful analysis and utilisation of data.

c) Granular Consents: Granular consent management for data collection, processing and sharing gives users explicit control over their data. It builds trust with users and supports legal compliance. Third-party consent management tools can be employed to further strengthen trust in the system.
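The three practices above (minimisation, de-identification and granular consent) can be chained before any user record reaches an LLM. This is a minimal sketch, not a compliance-grade implementation: the field allowlist, the in-memory consent store and the secret key are hypothetical, the regular expressions catch only common e-mail and phone formats, and the keyed hash (HMAC) is one simple form of pseudonymous tokenization rather than vault-based reversible tokenization.

```python
import hashlib
import hmac
import re

ALLOWED_FIELDS = {"user_id", "city", "query"}  # hypothetical data-minimisation allowlist
CONSENTS = {"u-42": {"support"}}               # hypothetical consent store: user -> purposes
SECRET_KEY = b"replace-with-a-managed-secret"  # hypothetical; load from a key manager
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{8,}\d")


def mask_pii(text: str) -> str:
    """Replace e-mail addresses and phone numbers with placeholders."""
    return PHONE_RE.sub("[PHONE]", EMAIL_RE.sub("[EMAIL]", text))


def pseudonymise(user_id: str) -> str:
    """Keyed hash: a stable token that cannot be linked to the raw ID without the key."""
    return hmac.new(SECRET_KEY, user_id.encode(), hashlib.sha256).hexdigest()[:16]


def prepare_for_llm(record: dict, purpose: str) -> dict:
    # 1. Granular consent: refuse processing for purposes the user did not approve
    if purpose not in CONSENTS.get(record.get("user_id"), set()):
        raise PermissionError(f"no consent for purpose '{purpose}'")
    # 2. Minimisation: keep only the fields this use case needs
    slim = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    # 3. De-identification: pseudonymise the ID and mask PII in free text
    slim["user_id"] = pseudonymise(slim["user_id"])
    slim["query"] = mask_pii(slim.get("query", ""))
    return slim


record = {"user_id": "u-42", "name": "Priya", "city": "Pune",
          "query": "Refund to priya@example.com, call +91 98765 43210"}
print(prepare_for_llm(record, "support"))
```

Checking consent first means a record processed for an unapproved purpose is never read further, and the stable pseudonym still lets the system link a user's conversations without storing the raw ID.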

Enterprise scenarios where having the privacy layer could bolster trust and adoption with users —

a) Healthcare Assistant: Confidentiality of patient data is of utmost importance for enabling any type of conversation in a healthcare setting. The privacy layer prescribed in the REACH framework can uphold this through robust privacy-preserving mechanisms. This fosters trust, which is paramount for an open dialogue about sensitive health issues, thereby improving patient outcomes.

b) Financial Advisory: Finance is another highly private area for users and demands additional privacy. A defined privacy layer enables users to share sensitive financial data with confidence, knowing that their data is protected, while the assistant provides personalized recommendations and guidance.

6. Language Model (LLM) Integration

Finally, the magic potion enabling natural conversations in enterprise systems: LLM integrations. LLMs make it possible to build on the following key principles for reliable conversational systems:

i) Understanding Context: Language models are trained to understand the context of user queries and generate responses that are contextually relevant and coherent. We leverage these language models to interpret user intents accurately and provide informative and engaging responses.

ii) Promoting Inclusivity: Language models can be fine-tuned to promote inclusivity and diversity in communication by recognizing and accommodating linguistic variations and cultural nuances. Enterprise AI frameworks should ensure that language models are trained on diverse datasets to capture a broad spectrum of language usage.

iii) Mitigating Bias: Language models should be rigorously evaluated and audited to identify and mitigate biases in language generation. It is imperative to employ techniques such as debiasing algorithms and fairness-aware training to help ensure that language models produce unbiased and equitable responses.

To summarise, here are the key techniques to consider for enhancing the framework's conversational abilities. First, streamline processing by tidying up and breaking down user input for easier comprehension. Then, integrate advanced language models like BERT and GPT to elevate the AI's understanding. Lastly, fine-tune the responses using methods such as response ranking and paraphrasing so that they resonate with users.
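Tidying user input and ranking candidate responses can be sketched in a few small functions. These are illustrative assumptions, not the framework's actual components: `grounding_score` is a simple lexical heuristic standing in for a trained response-ranking model, the sentence splitter is deliberately naive, and paraphrasing is not shown.

```python
import re
import unicodedata


def tidy(text: str) -> str:
    """Normalise Unicode compatibility forms (e.g. full-width letters) and collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


def split_sentences(text: str) -> list[str]:
    """Naive sentence split on ., ? or ! followed by whitespace."""
    return [part for part in re.split(r"(?<=[.?!])\s+", text) if part]


def _tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def grounding_score(candidate: str, context: str) -> float:
    """Hypothetical heuristic: share of candidate tokens that also occur in the retrieved context."""
    cand = _tokens(candidate)
    return len(cand & _tokens(context)) / len(cand) if cand else 0.0


def rank_responses(candidates: list[str], context: str) -> list[str]:
    """Order candidate replies from best to worst grounded."""
    return sorted(candidates, key=lambda c: grounding_score(c, context), reverse=True)


message = tidy("  ＷＨＥＲＥ is my\u00a0order?\n\nAlso,   can I return it? ")
print(split_sentences(message))

context = "Orders ship within 3 business days. Returns are free for 30 days."
candidates = ["We ship instantly to every country on Earth!", "Orders ship within 3 business days."]
print(rank_responses(candidates, context)[0])
```

Favouring replies that stay close to the retrieved context is one way to keep the final answer consistent with the knowledge base rather than with the model's guesses.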

These enhancements could transform the ChatBot's conversational prowess. Interactions will feel more natural and seamless, akin to chatting with a friend. Moreover, the system could continually learn from these interactions, refining its understanding and responses over time. It is an exciting journey towards creating more engaging and intuitive conversations for our users.

Experiments for assessing the Effectiveness of LLM Integration in conversational AI interfaces —

a) Usability Testing: User studies are one of the best ways to evaluate the usability and effectiveness of conversational AI. Participants perform tasks and engage in conversations with the system, and their experiences and feedback are analyzed to identify areas for improvement.

b) Performance Evaluation: Technical experiments measure the performance of conversational AI systems in terms of language understanding, generation accuracy, and response coherence. Furthermore, metrics such as intent classification accuracy, language model perplexity, and user satisfaction scores are used to evaluate performance.

c) A/B Testing: Randomly assigning users to different versions of the system, recording their interactions and collating their feedback helps assess user satisfaction and engagement. This strategy also helps evaluate the integrity and efficiency of the conversational system.

These testing strategies provide valuable insights into the effectiveness of conversational AI systems and their implications for data privacy and conversational harmony.
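The performance metrics and A/B assignment described above can be computed with a few helpers. This is a sketch under assumptions: the function names are hypothetical, perplexity assumes natural-log token probabilities from whichever model is being evaluated, and hash-based bucketing is one common way to randomise users while keeping each user in the same variant across sessions.

```python
import hashlib
import math
from collections import defaultdict


def intent_accuracy(predicted: list[str], gold: list[str]) -> float:
    """Share of utterances whose predicted intent matches the labelled intent."""
    if not gold or len(predicted) != len(gold):
        raise ValueError("need equal-length, non-empty label lists")
    return sum(p == g for p, g in zip(predicted, gold)) / len(gold)


def perplexity(token_logprobs: list[float]) -> float:
    """exp of the mean negative log-likelihood (natural-log probabilities)."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def assign_variant(user_id: str, experiment: str, variants: tuple[str, ...] = ("A", "B")) -> str:
    """Hash-based assignment: effectively random across users, stable per user."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def mean_satisfaction_by_variant(ratings: list[tuple[str, float]]) -> dict[str, float]:
    """Average the (variant, score) feedback pairs per variant."""
    buckets: dict[str, list[float]] = defaultdict(list)
    for variant, score in ratings:
        buckets[variant].append(score)
    return {variant: sum(scores) / len(scores) for variant, scores in buckets.items()}


print(intent_accuracy(["refund", "track", "refund"], ["refund", "track", "cancel"]))
print(perplexity([math.log(0.5)] * 4))
print(assign_variant("user-123", "reach-bot-v2"))
print(mean_satisfaction_by_variant([("A", 4), ("A", 5), ("B", 3)]))
```

Salting the hash with the experiment name means a user's bucket in one experiment does not determine their bucket in the next.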

Conclusion:

The REACH framework is a solid step towards building responsible enterprise conversational AI systems within the marketing technology space to enhance the customer engagement experience. By ensuring data privacy and protection, and by being transparent about it, the framework helps build trustworthy interactions, which are the basis of any human-like conversation. The fine-tuned language models enforce inclusivity and a code of conduct in the responses, further pushing the boundaries of human-machine interfaces. Conversational AI is a complex space in a nascent stage, and frameworks like REACH help early adopters stay aligned with enterprise expectations. From enhancing conversational experiences to championing ethical AI practices, I believe frameworks like REACH stand as a beacon for responsible innovation and harmony in digital communication.

So, how are you building your enterprise conversational AI systems? 🙂

Let’s talk.

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.