NN#4 — Neural Networks Decoded: Concepts Over Code

Last Updated on February 11, 2025 by Editorial Team

Author(s): RSD Studio.ai

Originally published on Towards AI.

In our ongoing journey to decode the inner workings of neural networks, we’ve explored the fundamental building blocks, the perceptron and multilayer perceptrons (MLPs), and seen how these models use activation functions to tackle non-linear problems. But even with cleverly designed architectures and activation functions, a neural network starts out like a ship without a compass, drifting aimlessly on an ocean of data. How do we guide such a complex system towards our desired destination: accurate and reliable predictions?

The answer lies in loss functions.

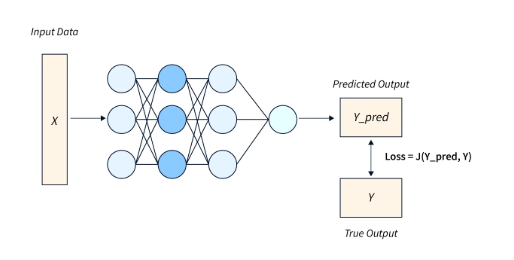

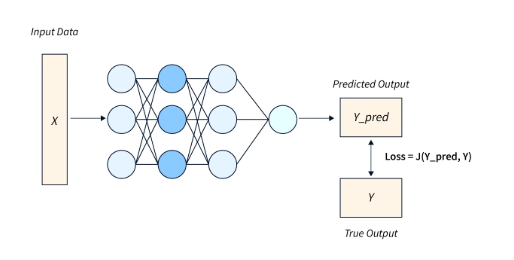

Loss functions are the “guiding stars” of neural network training. They provide a mathematical measure of how well (or how poorly) our model is performing by quantifying the difference between the network’s predictions and the actual, desired outputs, known as the ground truth. In essence, the loss is how the training algorithm knows whether learning is heading in the right direction: the lower the loss, the more closely the model’s outputs match the ground truth.
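To make “quantifying the difference” concrete, here is a minimal sketch of two widely used loss functions, assuming plain Python lists as inputs: mean squared error (MSE) for regression targets and binary cross-entropy for 0/1 labels with predicted probabilities. The function names and the small clipping constant `eps` are illustrative choices, not something taken from the article.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average of squared differences between ground truth and predictions."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_prob, eps=1e-12):
    """Average negative log-likelihood for 0/1 labels and predicted probabilities."""
    if len(y_true) != len(y_prob):
        raise ValueError("y_true and y_prob must have the same length")
    total = 0.0
    for t, p in zip(y_true, y_prob):
        # Clip the probability so log() never receives exactly 0
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)

# Regression: each prediction is compared with its ground-truth value
targets = [3.0, -0.5, 2.0]
preds = [2.5, 0.0, 2.0]
print(mean_squared_error(targets, preds))  # about 0.1667

# Classification: confident, correct probabilities give a small loss...
print(binary_cross_entropy([1, 0], [0.9, 0.1]))  # about 0.105
# ...while confident, wrong probabilities are penalized heavily
print(binary_cross_entropy([1, 0], [0.1, 0.9]))  # about 2.303
```

In both cases a perfect prediction drives the loss towards zero, which is what lets the number act as a “compass” during training.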

By analyzing the loss, neural networks can “learn from their mistakes,” adjusting their internal parameters (weights and biases) to gradually improve their performance and…
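One common way a network “learns from its mistakes” is gradient descent: compute how the loss changes with respect to each weight and bias, then nudge each parameter in the direction that lowers the loss. The sketch below assumes a single linear neuron (no activation), the MSE loss, and plain gradient descent with a hand-picked learning rate; `train_linear_neuron`, `lr`, and `epochs` are hypothetical names chosen for illustration, not the article’s method.

```python
def train_linear_neuron(xs, ys, lr=0.05, epochs=500):
    """Fit y ~ w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # start from an uninformed guess
    n = len(xs)
    history = []  # loss recorded before each update
    for _ in range(epochs):
        preds = [w * x + b for x in xs]
        errors = [p - y for p, y in zip(preds, ys)]
        loss = sum(e * e for e in errors) / n
        history.append(loss)
        # Partial derivatives of MSE = (1/n) * sum((w*x + b - y)^2)
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient so the loss goes down
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, history

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # toy data generated by y = 2x + 1
w, b, history = train_linear_neuron(xs, ys)
print(f"w={w:.3f}, b={b:.3f}")
print(f"loss: first={history[0]:.3f}, last={history[-1]:.6f}")
```

The recorded `history` shrinks from its starting value as `w` and `b` approach the values that generated the data, which is the loss-driven feedback loop described above in its simplest form. Real networks apply the same idea to many layers of parameters, with the gradients computed by backpropagation.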

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.