Inside SIMA: Google DeepMind’s New Agent that Can Follow Language Instructions to Interact with Any 3D Virtual Environment

Last Updated on March 25, 2024 by Editorial Team

Author(s): Jesus Rodriguez

Originally published on Towards AI.

I recently started an AI-focused educational newsletter that already has over 165,000 subscribers. TheSequence is a no-BS (meaning no hype, no news, etc.) ML-oriented newsletter that takes 5 minutes to read. The goal is to keep you up to date with machine learning projects, research papers, and concepts. Please give it a try by subscribing below:

TheSequence | Jesus Rodriguez | Substack (thesequence.substack.com)

Video games have long served as some of the best environments for training AI agents. Over the years, AI labs such as OpenAI and DeepMind have built agents that master games such as Atari classics, Dota 2, and StarCraft. The principles behind many of these agents have been applied in areas such as embodied AI, self-driving cars, and other domains that require taking actions in an environment. However, most AI breakthroughs in 3D game environments have been confined to one game or a small number of games. Building that type of AI is hard enough, but imagine if we could build agents that understand many game worlds at once and follow instructions like a human player.

Last week, Google DeepMind unveiled its work on the Scalable, Instructable, Multiworld Agent (SIMA). The goal of the project is to develop instructable agents that can interact with any 3D environment much as a human does, by following simple language instructions. This might not seem like a big deal until we consider that the standard way to tell a game-playing agent what to do has been to train it with reinforcement learning against a task-specific reward, which is very expensive. Language is the most powerful and yet simplest abstraction for communicating instructions about the world or, in this case, a 3D virtual world. The magic of SIMA is its ability to translate those abstract instructions into the mouse and keyboard actions used to navigate and act in an environment.

SIMA’s goal is to develop an agent capable of understanding and executing any language instruction across a wide variety of virtual 3D worlds, ranging from purpose-built research platforms to popular commercial video games. The project builds on a long history of efforts to create agents that navigate and interact with video games and 3D simulations. Yet SIMA distinguishes itself by aiming for a general approach that learns from broad and varied experience, much as humans do, rather than focusing on a narrow set of environments. Start with specialization and aim for generalization! Ambitious!

The SIMA team has built an agent that can carry out short tasks based on language instructions, written either by humans or generated by AI. They have tested it in over ten different 3D environments, including both academic research environments and commercial games. Because commercial video games do not expose a signal telling whether an arbitrary instruction was completed, the team developed alternative evaluation methods, such as using optical character recognition (OCR) to detect task-related text on screen and having humans review recordings of the agent’s gameplay. The work describes these initial results and the overall methodology, with the long-term aim of building an agent that can do anything a human can in any virtual setting.
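To make the OCR idea concrete, here is a minimal Python sketch of how on-screen text could be turned into a success signal. It is not SIMA’s evaluation code. It assumes the OCR step has already produced a list of recognized text lines per frame (the `ocr_frames` input is hypothetical, and no particular OCR engine is implied) and simply checks whether any frame contains, exactly or approximately, the completion message expected for the instruction. The function names and the similarity threshold are illustrative assumptions.

```python
import difflib
import re


def normalize(text: str) -> str:
    """Lowercase, replace punctuation with spaces, and collapse whitespace to reduce OCR noise."""
    text = re.sub(r"[^a-z0-9 ]", " ", text.lower())
    return " ".join(text.split())


def task_succeeded(ocr_frames: list[list[str]], expected: str, threshold: float = 0.85) -> bool:
    """Return True if any OCR'd line in any frame matches `expected`.

    `ocr_frames` is a hypothetical input: one list of recognized text lines per frame.
    A line counts as a match if it contains the normalized target, or if
    difflib's similarity ratio reaches the (illustrative) `threshold`.
    """
    target = normalize(expected)
    for lines in ocr_frames:
        for line in lines:
            candidate = normalize(line)
            if target in candidate:
                return True
            if difflib.SequenceMatcher(None, candidate, target).ratio() >= threshold:
                return True
    return False


frames = [["Health 100"], ["Crafted: Wooden Pickaxe"], ["Inventory full"]]
print(task_succeeded(frames, "crafted wooden pickaxe"))  # True
print(task_succeeded(frames, "mined copper ore"))        # False
```

The fuzzy match is there because OCR output often drops or misreads a character; a real system would need game-specific messages and thresholds, which the article does not describe.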

In designing SIMA, DeepMind made several critical choices to keep the agent adaptable across environments. They selected complex video games featuring many objects and possible interactions, and ran the games asynchronously, in real time, to mimic real-world conditions where the game does not pause while the agent decides what to do. Because of hardware limitations, only a limited number of game instances can run on a single GPU, in contrast to some research settings where many simulations can run in parallel. The agent relies on the same inputs and controls a human player would use, namely the screen image plus a keyboard and mouse, without privileged access to the game’s internal state. Furthermore, DeepMind focuses on the agent’s ability to follow complex language instructions rather than on achieving high scores or mimicking human gameplay. Training emphasizes open-ended natural language instructions to maximize the agent’s understanding and responsiveness.
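The human-like interface described above can be pictured as a pair of small data structures: the agent receives only pixels plus the instruction text, and it emits keyboard and mouse events. The Python sketch below is hypothetical; the class and field names are illustrative and do not come from SIMA’s code. The helper `frames_skipped` is likewise an illustrative assumption that shows one consequence of asynchronous play: since the game never pauses, frames rendered while the agent is still deciding are simply never seen.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """What the agent receives: screen pixels and the instruction, no game internals."""
    frame: bytes      # encoded screenshot; placeholder type for illustration
    instruction: str  # natural-language instruction, e.g. "walk forward"


@dataclass(frozen=True)
class Action:
    """What the agent emits: the same controls a human player has."""
    keys_down: frozenset[str] = frozenset()  # keys held this step, e.g. {"w", "shift"}
    mouse_dx: float = 0.0                    # horizontal mouse movement
    mouse_dy: float = 0.0                    # vertical mouse movement
    left_click: bool = False


def frames_skipped(game_fps: float, agent_latency_s: float) -> int:
    """Frames rendered, and never seen by the agent, while it is still deciding.

    The game keeps running in real time, so after `agent_latency_s` seconds the
    agent observes the newest frame and everything rendered in between is lost.
    """
    rendered = int(game_fps * agent_latency_s)
    return max(0, rendered - 1)


obs = Observation(frame=b"", instruction="walk forward")
act = Action(keys_down=frozenset({"w"}))
print(obs.instruction, sorted(act.keys_down), frames_skipped(30, 0.1))
```

Keeping the action space to keys and mouse movement is what lets one agent, in principle, drive any game a person can play, at the cost of a very large and low-level output space.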

The Dataset

SIMA is designed to understand and act on language within a multitude of detailed 3D environments. These environments were chosen because they support a wide variety of interactions, which is essential for building rich language-based tasks. The project uses over ten distinct 3D environments, including both commercial video games and academic research platforms. This diversity provides a broad spectrum of visual experiences and interaction opportunities, while preserving the core elements of 3D navigation and interaction common to all of them.

1) Commercial Games

SIMA uses commercial games for their complex interactions and high visual quality. The selection avoids games with extreme violence or biased content and spans genres from space exploration to resource management. These games challenge the agent with a variety of tasks, including flying, mining, and crafting.

2) Research Environments

AI research environments offer a more controlled setting for testing and honing specific abilities, and they make task completion easier to assess. These platforms typically model real-world physical interactions more closely, albeit in simplified form, than the broader and more unpredictable worlds of commercial video games.

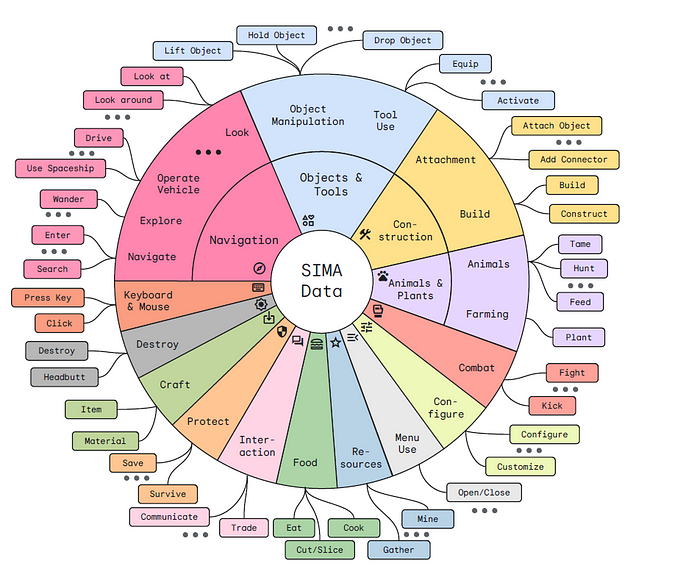

3) Instruction Dataset

For training and evaluating its agents, SIMA uses a dataset with a wide array of text instructions. Because all of the environments share a three-dimensional structure, many instructions cover general tasks common across settings, such as navigation and object manipulation. The instructions are organized into categories with a data-driven approach: the human-generated instructions are clustered hierarchically according to their similarity in a pretrained word-embedding space. This structured dataset supports the development of an agent that can understand and perform a diverse range of tasks across different virtual worlds.
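The clustering step can be illustrated with a toy example. This sketch is not DeepMind’s pipeline: it assumes tiny hand-made word vectors (the `WORD_VECTORS` table is hypothetical; a real system would use a pretrained embedding model), embeds each instruction as the mean of its known word vectors, and then applies average-linkage agglomerative clustering with cosine distance, repeatedly merging the two closest groups. Stopping the merges at different cluster counts gives the levels of a hierarchy.

```python
import math
from itertools import combinations

# Hypothetical 3-d word vectors. Axis 0 ~ "movement", axis 1 ~ "grasping", axis 2 ~ "objects".
WORD_VECTORS = {
    "go": (1.0, 0.0, 0.0), "walk": (0.9, 0.1, 0.0), "move": (0.95, 0.05, 0.0),
    "pick": (0.0, 1.0, 0.0), "grab": (0.1, 0.9, 0.0), "take": (0.0, 0.95, 0.05),
    "tree": (0.0, 0.0, 0.6), "rock": (0.0, 0.0, 0.6), "door": (0.0, 0.0, 0.6),
    "key": (0.0, 0.0, 0.6),
}


def embed(instruction):
    """Mean of the known word vectors; unknown words such as "to" or "the" are skipped."""
    vecs = [WORD_VECTORS[w] for w in instruction.lower().split() if w in WORD_VECTORS]
    if not vecs:
        raise ValueError(f"no known words in {instruction!r}")
    return tuple(sum(dim) / len(vecs) for dim in zip(*vecs))


def cosine_distance(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norms


def agglomerate(instructions, n_clusters):
    """Average-linkage agglomerative clustering, merging until `n_clusters` groups remain."""
    emb = {s: embed(s) for s in instructions}

    def linkage(a, b):
        # Mean pairwise cosine distance between members of the two clusters.
        return sum(cosine_distance(emb[x], emb[y]) for x in a for y in b) / (len(a) * len(b))

    clusters = [[s] for s in instructions]
    while len(clusters) > n_clusters:
        i, j = min(combinations(range(len(clusters)), 2),
                   key=lambda p: linkage(clusters[p[0]], clusters[p[1]]))
        merged = clusters[i] + clusters[j]
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)] + [merged]
    return clusters


instructions = ["go to the tree", "walk to the rock", "move to the door",
                "pick up the rock", "grab the tree", "take the key"]
for n in (3, 2):
    print(n, agglomerate(instructions, n))
```

With these toy vectors, cutting at two clusters separates the navigation instructions from the object-manipulation ones; finer cuts correspond to lower levels of the hierarchy.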

The Architecture

Google DeepMind’s SIMA agent is designed to translate visual observations and language instructions into keyboard and mouse actions. This is challenging because of the diversity of instructions to interpret and act on, combined with the enormous range of possible inputs and outputs. To manage this, DeepMind focused the agent’s training on tasks that can be completed quickly, within about 10 seconds. Such short tasks act as simple building blocks: with the right sequence of instructions, they can be chained into longer behaviors across many scenarios and environments.
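The idea of chaining short, roughly 10-second instructions into a longer behavior can be sketched as a simple loop. This is a hypothetical illustration rather than SIMA’s control code: `run_instruction` stands in for the trained agent plus some success detector, `budget_s` just reuses the roughly 10-second task length mentioned above, and the plan is hand-written. The loop stops at the first step that fails or overruns, because later steps usually depend on earlier ones.

```python
from typing import Callable

# Hypothetical signature: run one instruction with a time budget, return (succeeded, seconds_used).
RunFn = Callable[[str, float], tuple[bool, float]]


def execute_plan(steps: list[str], run_instruction: RunFn, budget_s: float = 10.0) -> list[str]:
    """Run short instructions in order and return the ones that completed in time.

    Stops at the first step that fails or exceeds its budget, since later steps
    usually depend on earlier ones (e.g. you cannot craft before mining).
    """
    completed = []
    for step in steps:
        ok, elapsed = run_instruction(step, budget_s)
        if not ok or elapsed > budget_s:
            break
        completed.append(step)
    return completed


def fake_agent(instruction: str, budget_s: float) -> tuple[bool, float]:
    """Toy stand-in for the agent: every step takes 6 s, and any crafting step fails."""
    return ("craft" not in instruction, 6.0)


plan = ["walk to the rock", "mine the rock", "open the inventory",
        "craft a pickaxe", "equip the pickaxe"]
print(execute_plan(plan, fake_agent))
# ['walk to the rock', 'mine the rock', 'open the inventory']
```

Because the toy agent fails any crafting step, the plan stops after the first three instructions; who produces the instruction sequence (a human or another model) is outside this sketch.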

The architecture of the SIMA agent builds on previous work but has been tailored to SIMA’s broader objectives. It combines newly trained components with several pretrained models adapted for this purpose. Among these are models trained for fine-grained image-text matching and for video prediction, which were further fine-tuned on the agent’s data to improve its performance. According to the team, combining these models improves the agent’s ability to understand and interact with its environment.
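Image-text matching models are commonly trained contrastively: embeddings of an image and its own caption are pulled together, while mismatched pairs in the same batch are pushed apart. The pure-Python sketch below computes a CLIP-style symmetric InfoNCE loss over a tiny batch to illustrate that general technique. The article does not say which loss SIMA’s pretrained encoders used, so treat the loss choice, the `temperature` value, and the toy vectors as assumptions.

```python
import math


def l2_normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def log_softmax(row):
    m = max(row)  # subtract the max for numerical stability
    lse = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - lse for x in row]


def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE: image i should match text i and no other text in the batch."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine similarity of image i and text j, divided by the temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature for j in range(n)]
              for i in range(n)]
    img_to_txt = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    txt_to_img = -sum(log_softmax(columns[j])[j] for j in range(n)) / n
    return (img_to_txt + txt_to_img) / 2


aligned = [[1.0, 0.0], [0.0, 1.0]]
swapped = [[0.0, 1.0], [1.0, 0.0]]
print(contrastive_loss(aligned, aligned))  # near 0: each image matches its own caption
print(contrastive_loss(aligned, swapped))  # large: captions are paired with the wrong images
```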

More technically, the agent uses newly trained transformers that fuse the outputs of the pretrained visual models, the language instruction, and a Transformer-XL that provides memory over past observations. This fusion produces a state representation, which a policy network uses to generate sequences of keyboard and mouse actions. Training combines behavioral cloning (imitating the actions in human gameplay data) with an auxiliary objective of predicting when a task has been completed successfully. This setup lets the SIMA agent benefit from large amounts of pre-existing data while adapting to the specific challenges of the simulated environments and control tasks it encounters.
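The two training signals described above can be combined into a single objective. This is a minimal sketch, assuming a discrete action vocabulary and a per-step binary "task completed" label; the weighting `aux_weight` and the tuple layout of `steps` are hypothetical, since the article does not specify them. Behavioral cloning is written as the negative log-likelihood of the human’s action under a softmax policy, and the auxiliary term as binary cross-entropy on a completion logit.

```python
import math


def behavioral_cloning_nll(action_logits, expert_action):
    """Negative log-likelihood of the expert's (human's) action under a softmax policy."""
    m = max(action_logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(x - m) for x in action_logits))
    return log_z - action_logits[expert_action]


def completion_bce(success_logit, completed):
    """Binary cross-entropy for the auxiliary 'has the task been completed?' head.

    Uses the numerically stable form max(x, 0) - x*y + log(1 + exp(-|x|)).
    """
    x, y = success_logit, 1.0 if completed else 0.0
    return max(x, 0.0) - x * y + math.log1p(math.exp(-abs(x)))


def total_loss(steps, aux_weight=0.5):
    """Mean BC loss plus weighted mean auxiliary loss over one trajectory.

    `steps` is a hypothetical list of (action_logits, expert_action, success_logit, completed).
    """
    bc = sum(behavioral_cloning_nll(l, a) for l, a, _, _ in steps) / len(steps)
    aux = sum(completion_bce(s, c) for _, _, s, c in steps) / len(steps)
    return bc + aux_weight * aux


trajectory = [
    ([2.0, 0.1, -1.0], 0, -3.0, False),  # mid-task step: human chose action 0, not done yet
    ([0.2, 1.5, 0.0], 1, 4.0, True),     # final step: human chose action 1, task completed
]
print(total_loss(trajectory))
```

The auxiliary head gives the model an explicit notion of "done", which is useful both as a training signal and for deciding when to move on to the next instruction.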

The Results

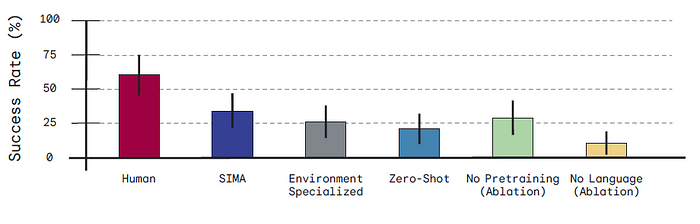

After showcasing the SIMA agent’s abilities through various examples, Google DeepMind turns to a detailed analysis of its performance, broken down by environment and by skill type. The results are also compared against several baselines and prior models to show how well the agent adapts and how much the design decisions contribute. Finally, a comparison with human performance provides another reference point for its capabilities.

1) Environment Performance

Across environments, the agent shows promising results, though it is far from perfect. Success rates vary significantly from one environment to another, and the results are color-coded by the method used to assess success. Interestingly, even when a particular environment was held out of training, the agent performs competently there, often outperforming baseline models without language input and, in some cases, matching or beating agents trained specifically for that environment.
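Per-environment success rates like these are simple aggregates over evaluation episodes. The sketch below groups episode records by any key, so the same function also covers a per-skill-category or per-evaluation-method breakdown. The `Episode` fields and the toy records are invented for illustration and are not results from the paper.

```python
from collections import defaultdict
from typing import NamedTuple


class Episode(NamedTuple):
    environment: str
    skill_category: str
    eval_method: str  # e.g. "OCR" or "human" (illustrative labels)
    success: bool


def success_rates(episodes, key):
    """Fraction of successful episodes per group, where `key` picks the grouping field."""
    totals = defaultdict(int)
    wins = defaultdict(int)
    for ep in episodes:
        group = key(ep)
        totals[group] += 1
        wins[group] += ep.success  # bool counts as 0 or 1
    return {g: wins[g] / totals[g] for g in totals}


# Toy records, invented for illustration only.
episodes = [
    Episode("env_a", "navigation", "OCR", True),
    Episode("env_a", "crafting", "OCR", False),
    Episode("env_b", "navigation", "human", True),
    Episode("env_b", "navigation", "human", True),
]
print(success_rates(episodes, key=lambda e: e.environment))     # {'env_a': 0.5, 'env_b': 1.0}
print(success_rates(episodes, key=lambda e: e.skill_category))  # {'navigation': 1.0, 'crafting': 0.0}
```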

2) Skill Performance

The agent’s proficiency varies considerably across the evaluated skills: some tasks are executed reliably, while others leave clear room for improvement. The skills are grouped into color-coded categories according to their evaluation tasks, giving a structured view of the agent’s performance across domains.

3) Human Benchmark

To round off the analysis, human-level performance is used as a benchmark, particularly in a series of tasks within the game No Man’s Sky. These tasks were chosen to challenge the agent with a range of skill requirements, from basic to complex. The human participants involved in this comparison were experienced players who had contributed to the data collection process, offering a credible standard for assessing the agent’s effectiveness in a realistic context.

SIMA represents a major milestone in the quest to develop agents that can interact with diverse environments, including ones they were not specifically trained on. The research could have profound implications for fields such as embodied AI, robotics, self-driving vehicles, and many others.


Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.