Critical Ideas Behind What Powers LLMs

Last Updated on May 1, 2024 by Editorial Team

Author(s): Vishnu Regimon Nair

Originally published on Towards AI.

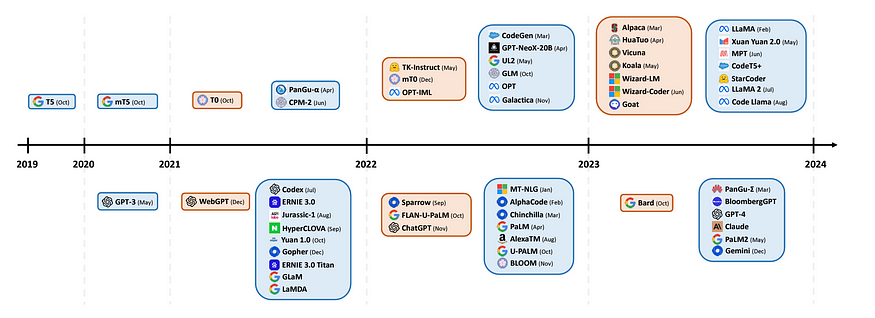

In recent years, the rise of Large Language Models (LLMs) such as GPT-4 and LLaMA has made it possible for the general public to use AI in their daily workflows. Uses range from programming and documentation to writing emails and staging rap battles between two famous fictional characters. It is safe to say that LLMs are versatile within the language space. In this article, I explore seven critical questions about LLMs, probing their societal impact, technical and ethical considerations, and future trajectories.

7 Vital Questions Surrounding LLMs

- Can LLMs plan long-term in various scenarios, or are they regurgitating a memorized template that mimics long-term planning in specific scenarios?

- How can we identify the elements of the system that contribute the most or least to an LLM’s responses, so that we can optimize them?

- How can we develop a structure that can extract the maximum value from LLMs?

- How do we navigate the tension between semantic and syntactic correctness that makes it difficult to pick training data?

- Is it right to evaluate based on factual accuracy?

- Why do we readily attribute sentience, intelligence, and even morality to humans, even on contested evidence, while often denying these attributes to LLMs?

- Are the outputs of LLMs a remix of the Internet, or are they producing original ideas?

But before that, how do LLMs work at a high level? After all, many people who use these tools don’t know programming or neural networks (the architecture that powers ChatGPT, loosely inspired by the human brain). So, I want to provide an intuitive way of thinking about large language models, the technology behind ChatGPT that allows it to do everything it can.

Imagine texting on your phone, but instead of suggesting the next word, your phone predicts whole sentences that make sense! That’s what Large Language Models (LLMs) do, but on a much bigger scale. A phone keyboard suggests the next word based on the last one or two. Pretty basic, right? LLMs, by contrast, are trained on massive amounts of text, including a large part of the Internet. This lets them produce not just single words but whole sentences that flow naturally and make sense in context. Think of it as a superpowered dictionary that understands how words work together. This is what tools like ChatGPT and Gemini do.

To clarify, ChatGPT is a web application that serves large language models. The models that power it include GPT-3.5 and GPT-4, and will include whatever models OpenAI develops next. LLMs work on an approach that can essentially be called “predict the next word”: they predict the next word (strictly, the next token, which may be a whole word or part of one) based on the input prompt.

LLMs are trained on substantial text corpora, including a massive part of the Internet. They find patterns within the text, such as grammar or specific phrases that often appear together. For example, in the series Rick and Morty, Rick’s catchphrase is “Wubba Lubba Dub Dub.” So if the input text is “Wubba Lubba” and you ask the model to complete it, there is an excellent chance it says “Dub Dub” if it was trained on text about the series. Similarly, if an LLM was trained on text from Harry Potter, or even Reddit pages about Harry Potter, you can ask it about the spells used in the books, and it will list many of them.
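To make “predict the next word” concrete, here is a minimal sketch of the simplest version of the idea, close to what a phone keyboard does: a bigram model that counts which word follows which in a tiny corpus and turns those counts into probabilities. The corpus and the function names (`train_bigrams`, `next_word_probs`, `complete`) are hypothetical illustrations; real LLMs use neural networks over much longer contexts, not raw count tables.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts: dict[str, Counter], prev: str) -> dict[str, float]:
    """Turn the follow-up counts for `prev` into probabilities."""
    followers = counts.get(prev.lower(), Counter())
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()} if total else {}


def complete(counts: dict[str, Counter], prompt: str, n_words: int) -> str:
    """Greedily append the most likely next word, one word at a time."""
    words = prompt.lower().split()
    for _ in range(n_words):
        probs = next_word_probs(counts, words[-1])
        if not probs:
            break  # no known continuation for this word
        words.append(max(probs, key=probs.get))
    return " ".join(words)


# A tiny, made-up "training set" in the spirit of the Rick and Morty example.
corpus = [
    "wubba lubba dub dub",
    "rick says wubba lubba dub dub",
    "morty says oh geez",
]
model = train_bigrams(corpus)
print(next_word_probs(model, "says"))     # {'wubba': 0.5, 'oh': 0.5}
print(complete(model, "Wubba Lubba", 2))  # wubba lubba dub dub
```

Asked for more words, this model would keep repeating “dub,” because the only word it has ever seen after “dub” is “dub”: it looks exactly one word back and nothing more.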

There are patterns in language and in the context in which text is written, and LLMs learn these patterns to predict the next word. The larger the corpus of high-quality data a model learns from, the more examples and patterns it has to learn from, and the more effectively it can do this. That is why LLMs are trained on massive volumes of data.

This allows LLMs to keep generating output from a prompt, but it also means they can produce incorrect text, or “hallucinate.” Hallucinations have various causes. Because LLMs are trained on so much data, the datasets can be incomplete or even contain misinformation. And since LLMs learn patterns in the data rather than the factual accuracy of those texts, they can generate highly inaccurate, biased, and hurtful text.

Latent Space and Navigation

In the context of LLMs, the “latent space” refers to a high-dimensional space where the model represents language and its underlying structure. This space captures the relationships between words, phrases, and sentences based on semantic and syntactic similarities.

In the series Sherlock Holmes, Sherlock talks about a concept called the “Mind Palace,” which he uses to store vast amounts of information. With this technique, he constructs a mind palace, a virtual space resembling a house or mansion, and stores information in its various places, such as corridors and rooms. These corridors and rooms can represent specific or broad categories and topics.

Similarly, LLMs can be viewed as creating a space to store information. This is called the latent space (latent meaning hidden), and it lets LLMs organize information so that similar information sits in nearby areas. Caveat: this is just an intuitive explanation; in reality, the latent space can look quite different.

So, what makes two words similar?

There are three main reasons:

- Semantics (Meaning): Words with related meanings are closer together. This includes synonyms (big-large), closely related words (king-queen), and words linked by an “is-a” relationship, where “animal” is a hypernym of “dog” and “dog” is a hyponym of “animal.”

- Syntax (Structure): Words with similar grammatical roles or structures are closer together. For example, walking and strolling are placed closer together.

- Statistical Co-occurrence: Words frequently appearing together in the training data are likely to be positioned near each other. This captures the statistical relationships between words, even if they aren’t directly related in meaning or grammar.
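The statistical co-occurrence idea can be illustrated by counting how often word pairs appear within a few words of each other. In this sketch, the window size and the toy sentences are assumptions for illustration; learned embeddings capture statistics like these only indirectly, rather than storing a count table.

```python
from collections import Counter


def cooccurrence_counts(sentences: list[str], window: int = 3) -> Counter:
    """Count unordered word pairs that appear within `window` words of each other."""
    pairs: Counter = Counter()
    for sentence in sentences:
        words = sentence.lower().split()
        for i, word in enumerate(words):
            # Only look ahead, so each pair occurrence is counted once.
            for other in words[i + 1 : i + 1 + window]:
                if other != word:
                    pairs[tuple(sorted((word, other)))] += 1
    return pairs


sentences = [
    "the dog chased the cat",
    "the cat ignored the dog",
    "she turned on the tv",
]
counts = cooccurrence_counts(sentences, window=3)
print(counts[("cat", "dog")])  # 2: they keep showing up near each other
print(counts[("dog", "tv")])   # 0: never seen together
```

Pairs with high counts, like “cat” and “dog” here, are the kind of words a model tends to place near each other, even when no explicit rule links them.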

So, an LLM learns these similarities and distinguishing features and uses them to understand language and its intricacies. These features are stored in the latent space representation of the data. Forcing the model to learn such distinguishing features removes unimportant information. For example, “Go home” and “Please go home” convey the same core meaning (a request to go home) but differ in structure and tone. When compressing its input into a lower-dimensional representation, the LLM might downplay these variations and focus on the core meaning. In this way, LLMs prioritize core semantic features and statistically relevant information while potentially discarding less crucial details.

There is a view that compression is intelligence [Hutter Prize]. A model has a fixed number of parameters, far smaller than the data it aims to fit, so efficient compression is the only way for it to accomplish its task effectively. Compressing data requires identifying patterns and extracting meaningful relationships, just as acquiring knowledge involves grasping fundamental concepts and discerning underlying principles. Instead of memorizing answers to potential prompts, the model is pushed to learn the language and the context in which it is written, and to generalize effectively. So, proponents argue, intelligence emerges in the pursuit of compression.
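The link between patterns and compression is easy to see with an off-the-shelf compressor. In this sketch, Python’s standard `zlib` module shrinks highly patterned text far more than random bytes of the same length, because only the text has regularities to exploit. This only illustrates the intuition; `zlib` is a general-purpose compressor, not a language model.

```python
import os
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (lower means more compressible)."""
    return len(zlib.compress(data, 9)) / len(data)


# Text with strong, repeated patterns.
patterned = ("the cat sat on the mat. " * 200).encode("utf-8")
# Random bytes of the same length contain no patterns to exploit.
random_bytes = os.urandom(len(patterned))

print(f"patterned: {compression_ratio(patterned):.3f}")   # a small fraction of 1
print(f"random:    {compression_ratio(random_bytes):.3f}")  # about 1, no savings
```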

When recalling information, people using a mind palace mentally walk through it, retracing their steps through the rooms and areas where they stored the data, and retrieve the associated information at each location. Similarly, when prompted, LLMs navigate the latent space to retrieve the required information, finding what is associated with the words in the prompt. This positioning happens during training and reflects the statistical correlations in the training data. For example, words like dog, cat, and tiger are kept close together, and TV, computer, and phone are kept together.
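A toy version of “navigating” a latent space: give each word a small vector and retrieve the words whose vectors point in the most similar direction, measured by cosine similarity. The 3-dimensional vectors below are invented by hand so that the animals and devices from the example form two clusters; real LLM embeddings are learned and have hundreds or thousands of dimensions.

```python
import math

# Hypothetical hand-made "embeddings"; the three axes loosely mean
# (is an animal, is a device, is large). Real embeddings are learned.
EMBEDDINGS = {
    "dog": [0.90, 0.05, 0.30],
    "cat": [0.95, 0.05, 0.20],
    "tiger": [0.85, 0.00, 0.80],
    "tv": [0.05, 0.90, 0.50],
    "computer": [0.00, 0.95, 0.40],
    "phone": [0.05, 0.90, 0.10],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def nearest(word: str, k: int = 2) -> list[str]:
    """Return the k words whose vectors are most similar to `word`'s vector."""
    query = EMBEDDINGS[word]
    others = [w for w in EMBEDDINGS if w != word]
    others.sort(key=lambda w: cosine_similarity(query, EMBEDDINGS[w]), reverse=True)
    return others[:k]


print(nearest("dog"))    # ['cat', 'tiger']
print(nearest("phone"))  # ['computer', 'tv']
```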

A probabilistic approach to “predict the next word.”

The approach for predicting the next word is probabilistic. LLMs assign a probability score to each word that could follow a given phrase and then build on top of that. For example, imagine an LLM trained on a massive set of research papers. Given the phrase “The researchers observed a significant correlation between…”, the LLM wouldn’t just pick a single word like “temperature” or “mutation.” Instead, based on the patterns it learned from its training data, it would determine which words or phrases are most relevant to the given phrase and assign a probability score to each of them. For instance, it might assign high probabilities to words like “variables” or “factors” (say, 70% for “variables”), while words like “happiness” or “dogs” would receive low probabilities (e.g., 2% and 1%). This is because, in scientific writing, words like “variables” follow such phrases far more often than “happiness.” So, LLMs learn from the training data how words relate and how likely they are to occur together, and use that information to predict the next word or phrase in their outputs. Each chosen word is appended to the text and becomes part of the context for predicting the next one. This is also why LLMs generally keep producing output even in scenarios where there isn’t a logical continuation: they can keep picking words that, combined with the surrounding words and phrases, are neither logical nor factually accurate. In many cases, the model only sees the next step without considering the big picture. In essence, LLMs find the most relevant information in the prompt, connect it with what they learned from their training data, and generate the answer most appropriate to that combination.
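Under the hood, a neural LLM produces a raw score, called a logit, for every word (token) in its vocabulary, and the softmax function turns those scores into probabilities that sum to 1: each score is exponentiated and divided by the sum of all exponentiated scores. The four words and their scores below are invented so that the result roughly mirrors the percentages in the example above; a real vocabulary has tens of thousands of entries.

```python
import math


def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Convert raw scores into probabilities that sum to 1."""
    # Subtracting the max score avoids overflow in exp() without changing the result.
    top = max(logits.values())
    exps = {word: math.exp(score - top) for word, score in logits.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


# Hypothetical scores for words that could follow
# "The researchers observed a significant correlation between..."
logits = {"variables": 4.0, "factors": 3.0, "happiness": 0.5, "dogs": 0.0}

for word, p in softmax(logits).items():
    print(f"{word:>10}: {p:.2f}")
# One line per word: variables 0.71, factors 0.26, happiness 0.02, dogs 0.01
```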

The greedy strategy of sequentially picking the word with the highest probability optimizes each step locally; it does not guarantee the best overall sequence, let alone a global understanding. This myopia causes limitations, such as a lack of complex long-term planning and reasoning abilities.
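A tiny two-step example shows why greedy decoding is myopic. In the made-up table below, greedy decoding takes the locally best first word, but a different first word leads to a two-word sequence with a higher overall probability. Checking every sequence is only feasible because the example is tiny; practical systems use approximations such as beam search.

```python
# Hypothetical probabilities: P(first word), then P(second word | first word).
FIRST = {"the": 0.6, "a": 0.4}
SECOND = {
    "the": {"cat": 0.3, "dog": 0.3, "bird": 0.4},
    "a": {"cat": 0.9, "dog": 0.1},
}


def greedy() -> tuple[list[str], float]:
    """Pick the most probable word at each step, never looking ahead."""
    w1 = max(FIRST, key=FIRST.get)
    w2 = max(SECOND[w1], key=SECOND[w1].get)
    return [w1, w2], FIRST[w1] * SECOND[w1][w2]


def exhaustive() -> tuple[list[str], float]:
    """Score every two-word sequence and keep the most probable one."""
    best: list[str] = []
    best_p = 0.0
    for w1, p1 in FIRST.items():
        for w2, p2 in SECOND[w1].items():
            if p1 * p2 > best_p:
                best, best_p = [w1, w2], p1 * p2
    return best, best_p


words, p = greedy()
print("greedy:", words, f"{p:.2f}")      # ['the', 'bird'] with probability 0.24
words, p = exhaustive()
print("exhaustive:", words, f"{p:.2f}")  # ['a', 'cat'] with probability 0.36
```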

Applications like ChatGPT and Gemini can use these probabilities to create richer outputs. One critical parameter for controlling them is called ‘temperature.’ At a temperature of 0, the model always picks the next word with the highest probability; in the previous example, that was ‘variables.’ This makes the output more deterministic, but also more predictable and repetitive: someone asking the same question would, in principle, always get the same answer. Increasing the temperature introduces a controlled element of randomness, ensuring some variance in word choice. This makes the answers more varied and natural, like talking to a real person who might say different things in similar situations. This randomness is beneficial in most applications, as it helps generate text that flows naturally and avoids a monotonous style. The right amount of randomness depends on the use case. For example, if you want to create a chatbot based on a character like Han Solo, you could lower the temperature, since you want its output to stay close to the character rather than drift into variations.
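Temperature is commonly implemented by dividing each score (logit) by the temperature T before applying softmax and then sampling from the result: lower T sharpens the distribution toward the top word, and higher T flattens it. This sketch treats T = 0 as plain argmax, uses hypothetical scores for four candidate words, and seeds Python’s `random` module so that runs are reproducible. Production systems often combine temperature with other sampling controls that are not shown here.

```python
import math
import random


def apply_temperature(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Softmax of logits / temperature; temperature 0 means always pick the top word."""
    if temperature == 0:
        top = max(logits, key=logits.get)
        return {word: 1.0 if word == top else 0.0 for word in logits}
    scaled = {word: score / temperature for word, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - peak) for word, s in scaled.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def sample(logits: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Draw one word according to the temperature-adjusted probabilities."""
    probs = apply_temperature(logits, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


# Hypothetical scores for the next word after "...a significant correlation between".
logits = {"variables": 4.0, "factors": 3.0, "happiness": 0.5, "dogs": 0.0}

for t in (0, 0.5, 1.0, 2.0):
    probs = apply_temperature(logits, t)
    print(t, {w: round(p, 2) for w, p in probs.items()})

rng = random.Random(42)  # fixed seed: the same "random" choices on every run
print([sample(logits, 1.0, rng) for _ in range(10)])
```

In the printed table, the probability of “variables” falls and the rare words gain ground as T rises, which is the variety-versus-consistency trade-off described above.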

Application to Text Generation

In essence, LLMs function by transforming input text into representations within a latent space, which acts as a centralized repository for all of these representations. The representations are then manipulated to generate the outputs. This similarity-based positioning allows LLMs to navigate natural language, but it also causes issues like bias: for example, a model can learn to associate Black people with crime more strongly than white people. This happens because the model learns the statistical correlations between words, so if many texts in the training data associate Black people with crime, the LLM will learn that association too. In many cases, it not only propagates the bias but amplifies it. So, we must find ways to handle scenarios involving bias, fairness, and accountability.

The quality of LLMs depends on the quality of their latent space representation, their ability to navigate that space, and their capability to produce coherent language output.

What limits LLMs

- The opacity of LLMs’ internal representations creates a communication gap: it hinders our ability to specify input requirements effectively and to understand the underlying structures, which reduces output quality.

- The training data is a massive corpus of text from various sources, so we can’t identify which training data caused an LLM to learn unwanted behaviors like bias. We need clarity about the texts used for training, and we need to pick the best sources, with little to no bias or other unwanted content.

- The temperature setting and other parameters can induce randomness, which makes evaluation more challenging. Since responses are not deterministic, benchmarking and assessing them becomes difficult. How do we know whether the model responds correctly but in the wrong scenario, or wrongly in the correct scenario? The issue lies in benchmarking and evaluating without precise reproducibility. For example, a model may answer a prompt without bias during testing, yet the same question may elicit a biased answer afterward.

- Multiple benchmarks, like Massive Multitask Language Understanding (MMLU), GSM8K, and HumanEval, exist to evaluate LLMs, but there are risks in accepting these scores at face value. LLMs are trained on vast corpora of Wikipedia, blogs, books, scientific research, and custom datasets, so there is a chance that they have already seen the data used in these public benchmarks. This is often the case: there can be considerable overlap between training data and public benchmark data. This is called ‘contamination’ and is a known challenge. It makes benchmark scores unreliable, especially when different models have different levels of contamination, which makes comparing them accurately difficult. These benchmarks are a proxy for what we actually want, which is generalizability, so inflated performance won’t help us assess models accurately.

- LLMs produce factually wrong outputs and state them confidently. This is called “hallucination.” It is especially problematic when LLMs serve mission-critical, high-stakes workflows like diagnostic medicine, where it represents a considerable risk and limits real-world deployment in fields where such risk is unacceptable. LLMs can also contradict their own statements, which makes reproducibility a concern.

- There are ethical concerns about using LLMs to spread propaganda and fake news. LLMs could flood the Internet with AI-written text and pose as humans through fake profiles, and we wouldn’t be able to identify them at scale. This could be used to incite hate or mischaracterize a minority. Generated text can deceive people into believing that many in society hold a harmful opinion, and can be used to manipulate public opinion. This is called astroturfing.

- LLMs can generate toxic content that spreads historical biases and stereotypes. The training data might contain inappropriate language, and the model can pick up historical biases based on race, sexual orientation, and gender. The LLM might learn to associate certain characteristics with specific groups, leading to outputs that reinforce stereotypes. It can also generate disallowed content, such as instructions for making dangerous chemicals and bombs.
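One simple way to screen for the benchmark contamination described in the list above is to check whether a benchmark item shares a long word sequence (an n-gram) with the training corpus; some published contamination analyses use this kind of overlap test. In the sketch below, the value n = 5, the helper names, and the toy strings are assumptions for illustration; real analyses run over enormous corpora with more careful text normalization.

```python
import re


def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """All n-word sequences in `text`, lowercased with punctuation stripped."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i : i + n]) for i in range(len(words) - n + 1)}


def is_contaminated(item: str, training_text: str, n: int = 5) -> bool:
    """Flag a benchmark item if it shares any n-gram with the training text."""
    return bool(ngrams(item, n) & ngrams(training_text, n))


def contamination_rate(items: list[str], training_text: str, n: int = 5) -> float:
    """Fraction of benchmark items that overlap with the training text."""
    train = ngrams(training_text, n)
    flagged = sum(1 for item in items if ngrams(item, n) & train)
    return flagged / len(items)


# Toy stand-ins for a scraped training document and two benchmark questions.
training_text = "Blog post: a farmer has 12 apples and gives away 5 of them, so how many are left?"
benchmark = [
    "A farmer has 12 apples and gives away 5 of them to a neighbor. How many apples remain?",
    "A train travels 60 miles per hour for 3 hours. How far does it go?",
]
print(is_contaminated(benchmark[0], training_text))  # True
print(is_contaminated(benchmark[1], training_text))  # False
print(contamination_rate(benchmark, training_text))  # 0.5
```

An exact-match check like this misses paraphrased or translated copies, which is one reason contamination is hard to rule out.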

Food for thought

1) While large language models (LLMs) excel at various tasks, their capabilities in long-term planning remain a topic of debate. LLMs are very good in some respects, like the grammar of the paragraphs they generate. For example, they place the correct article (a/an) before a word. Since the article comes before the word it depends on, choosing correctly suggests that the model has, in some sense, anticipated what comes next. This shows a grasp of grammatical rules and a form of localized, syntactic planning within a sentence. On a semantic level, however, LLMs face difficulties. Tasks like solving puzzles, which require a broader understanding of cause and effect and multi-step strategies, expose limitations in their planning abilities. Some argue that seemingly planned outputs are simply the regurgitation of a memorized template: the models might have identified patterns in the training data that involve sequential steps, allowing them to mimic planning without genuine understanding. Planning often requires reasoning beyond directly observed patterns. Of course, the definition of “planning” itself can be subjective; one expert may see an action as planned, while another sees it as simple pattern recognition. Can LLMs plan long-term in various scenarios, or are they regurgitating a memorized template that mimics long-term planning in specific scenarios?

2) It is challenging to pinpoint which training data or computations contributed most to a given output, since many factors within the training data can combine. A seemingly insignificant variation in the input can lead to drastic changes in the network’s output. Neural networks consist of multiple layers: computations at earlier layers accumulate and can be magnified as data passes through the network, so small errors can build up into significant changes in the output. Some components also have delayed feedback, making it difficult to track which part caused a given output. This is the Credit Assignment Problem, introduced by Marvin Minsky in his 1961 paper “Steps Toward Artificial Intelligence,” and it is a core challenge in machine learning. It revolves around understanding how credit for an outcome should be allocated to the components of a complex system.

We can’t effectively adjust the system if we don’t understand which components contribute most to success or failure. To optimize, we can try two approaches: remove components that cause failure (in our case, wrong outputs like bias) or keep components that are more likely to lead to success. How can we identify the elements of the system that contribute the most or least to an LLM’s responses, so that we can optimize them?
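One common, if crude, way to approach credit assignment is an ablation study: disable one component at a time and measure how much a score drops. The sketch below uses an invented `score` function over three hypothetical components, including an interaction between two of them. It also shows why ablation is not a complete answer: when components interact, the leave-one-out credits do not add up to the total gain.

```python
# Hypothetical system: the score depends on which components are enabled.
# "retrieval" and "reranker" only help fully when used together (an interaction).
COMPONENTS = ["retrieval", "reranker", "filter"]


def score(enabled: set[str]) -> float:
    """Toy quality score for a given set of enabled components."""
    s = 0.50
    if "filter" in enabled:
        s += 0.10
    if "retrieval" in enabled:
        s += 0.05
    if {"retrieval", "reranker"} <= enabled:
        s += 0.20  # bonus that exists only when both are present
    return s


def ablation(components: list[str]) -> dict[str, float]:
    """Score drop when each component is removed while the others stay on."""
    full = score(set(components))
    return {c: full - score(set(components) - {c}) for c in components}


drops = ablation(COMPONENTS)
print({c: round(d, 2) for c, d in drops.items()})
# {'retrieval': 0.25, 'reranker': 0.2, 'filter': 0.1}
total_gain = score(set(COMPONENTS)) - score(set())
print(round(total_gain, 2))  # 0.35, less than the 0.55 sum of the individual drops
```

The shared 0.20 bonus is credited to both “retrieval” and “reranker,” so leave-one-out over-counts it; attribution methods that account for interactions exist, but they are more expensive.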

3) One crucial aspect is evaluating the prompts we feed LLMs. These models are sensitive to minor phrasing changes in the prompt, even when those changes don’t alter the question’s meaning. Prompting with emotional stimuli can also alter the outputs, and in some cases improves them [5]. This shows that seemingly minor variations in the input prompt can cause significant changes in the output. LLMs operate in a high-dimensional latent space where similar information is close together, and the prompt guides the model toward the most relevant region. Somehow, slight variations in the prompt lead to significant changes in the output.

These variations raise the question: are LLMs capable of much more if we ask the right questions in the right format? One current bottleneck is the lack of transparency surrounding LLMs’ internal processes. We need more insight into how these models structure and learn from information. This opacity makes it difficult to pinpoint the most effective ways to communicate with LLMs to elicit optimal results. How can we develop a structure that can extract the maximum value from LLMs?

4) One of the ideas behind training models on vast volumes of data is the principle of the “wisdom of the crowd”: the aggregate of many datasets from various sources should be able to solve the problems that LLMs claim to solve. However, it becomes problematic when we examine why we picked those datasets. Data is used to learn both syntax and semantics: syntax helps the model understand grammar, and semantics gives it an understanding of the world. So, a text can be correct in both respects, in either one, or in neither. If it is both syntactically and factually wrong, there isn’t any value in including it. However, many texts are syntactically correct but semantically wrong, such as “human beings are plants.” Should we include such data in the training set? Even though it is semantically incorrect, it is syntactically correct, so it could help LLMs understand the language better.

Suppose we decide to add only texts that are both factually and syntactically correct. In that case, we won’t be able to add many sources, like fiction and older scientific theories. For example, our understanding of the atom changed through various theories, like the Thomson model, the Rutherford model, and so on. Once a model is disproved, it is no longer accepted as correct, so should we remove such theories from our training data? Even theories accepted today can be proved wrong tomorrow. So, should we continuously update the training data to keep only the most valid theories and remove everything else? That would remove many historical attempts to understand the world. Is that what we want? After all, understanding history is essential for us to improve and learn from our mistakes. How do we navigate the tension between semantic and syntactic correctness that makes it difficult to pick training data?

5) Since LLMs work on next-word prediction, a few wrong words barely register as errors from the model’s perspective, yet they can significantly change the text’s factual accuracy. Perhaps a new method of evaluation should be implemented: once a sentence or passage is generated, the model stops to check whether the text is factually correct against the factual text it possesses. However, it is hard to determine whether a sentence or passage is factually accurate without understanding the world. This is difficult for humans, too. For example, if someone asked you, “What is the second-largest flower in the world?” it would be difficult to answer; without prior knowledge, you would probably guess wrong. Another example would be someone saying, “The sun rises in the West.”

Humans can recognize that this sentence is syntactically correct (the grammar is fine) but factually wrong. This type of fact-based checking isn’t inherently present in LLMs. There are attempts to mitigate errors using RLHF, but jailbreak prompts can still be used to generate factually wrong and biased text. But is it right to make factual accuracy the absolute evaluation criterion? After all, people believe and say things that aren’t factually correct. For example, some people still believe the Earth is flat, and many other theories (even conspiracy theories) exist. Is it right to evaluate based on factual accuracy?
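The check-after-generating idea from item 5 can be sketched as a post-processing step that compares simple claims against a trusted fact store. Everything here is hypothetical: the tiny `FACTS` table, the single sentence pattern the parser understands, and the three-way verdict. Real fact verification remains an open problem precisely because claims rarely fit a fixed pattern and the fact store itself must be correct and current.

```python
import re

# Hypothetical trusted fact store: (subject, relation) -> value.
FACTS = {
    ("sun", "rises in"): "east",
    ("sun", "sets in"): "west",
}

# This toy parser only understands claims shaped like "The sun rises in the West."
PATTERN = re.compile(r"^the (\w+) (rises in|sets in) the (\w+)\.?$")


def check_claim(sentence: str) -> str:
    """Return 'supported', 'contradicted', or 'unknown' for a generated sentence."""
    match = PATTERN.match(sentence.strip().lower())
    if not match:
        return "unknown"  # the checker cannot parse this claim at all
    subject, relation, value = match.groups()
    expected = FACTS.get((subject, relation))
    if expected is None:
        return "unknown"  # no trusted fact to compare against
    return "supported" if value == expected else "contradicted"


claims = [
    "The sun rises in the East.",
    "The sun rises in the West.",
    "The moon rises in the East.",
]
for claim in claims:
    print(claim, "->", check_claim(claim))
```

Note the frequent “unknown” verdicts: a checker is only as good as its coverage of the world, which is the same limitation the paragraph above describes for humans.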

6) The rise of LLMs has led many to question whether their outputs are original. I think it depends on how we define “originality.” After all, much human thinking is, ironically, derivative of past thinking and ideas. We build ideas upon previous knowledge iteratively, so if we can do that and call it “original,” why shouldn’t we allow the same for LLMs? I don’t think there is a concrete definition of what constitutes originality. We have a rough notion based on what has worked before, such as research papers that get published and labeled “novel” or “original,” but this notion no longer seems sufficient to decide whether the outputs of LLMs are original. It would be interesting to see a concrete definition of “originality” and whether LLMs possess it. Are the outputs of LLMs a remix of the Internet, or are they producing original ideas?

7) The nature of intelligence remains a topic of debate, lacking a single, universally accepted definition. One aspect agreed upon in many circles is that intelligence should enable good performance across multiple tasks, i.e., it should be general. When AI first emerged, people were skeptical about its ability to handle complex strategic games like chess and Go. AI eventually transcended those limitations and surpassed the best human players. Its long-term planning and sometimes seemingly haphazard moves turned out to be outstanding strategies, sacrificing short-term gains for long-term advantage. AI’s planning capabilities in specific scenarios are undeniable.

No matter how well AI performs in planning tasks, we tend to move the goalposts and introduce new metrics and benchmarks. People question the benchmarks and claim they are insufficient or flawed. This raises a fundamental question: is this entirely fair? Is it partly because no single, universally accepted definition of intelligence exists? We acknowledge that not all humans excel at every aspect of reasoning, yet we don’t question their sentience, intelligence, or morality based on such limited evidence. After all, a person who is good at chess need not be good at Go, and a person who can pass the bar exam may not pass a medical entrance exam. The same question arises with AI: why do we readily attribute sentience, intelligence, and even morality to humans, even on contested evidence, while often denying these attributes to LLMs?

Everyone is a genius. But if you judge a fish by its ability to climb a tree, it will live its whole life believing that it is stupid. — Source

Emerging Research Areas

- Multi-modal learning: Training LLMs on data types beyond text, like images and audio, opens new avenues for these models to learn. This could mean creating accurate text descriptions of input images, composing music in different styles, or remixing popular songs as if sung by a different person. If trained on lots of code and its documentation, a model could also translate between programming languages. For example, knowing just one language like Python could be enough, since the model could convert the code into R or C++ while maintaining the same logic.

- Mitigating Bias: Unfortunately, LLMs perpetuate the biases in their training data, which leads to biased outputs. Techniques such as fairness-aware pre-training, data augmentation, and RLHF are used to reduce the number of biased responses. This could lead to fairer, more equitable models.

- Few-Shot Learning: One goal is to enable LLMs to perform well on new tasks that they were not explicitly trained on. If they can achieve this with minimal fine-tuning data, it would increase the adaptability of these models and reduce reliance on large datasets, making them less computationally expensive and more eco-friendly.

- Explainability and Interpretability: Understanding why LLMs respond to a prompt in a certain way remains challenging; the models are still black boxes that we don’t fully understand. We need explainability and interpretability for wider adoption and for critical applications, like LLMs that help with legal filings or medical assistance. This will help foster transparency and trust in these systems.

- Improving Reinforcement Learning from Human Feedback (RLHF): RLHF has become a widely used technique for increasing the quality of LLM outputs and decreasing the chances of undesirable outputs, such as toxicity.

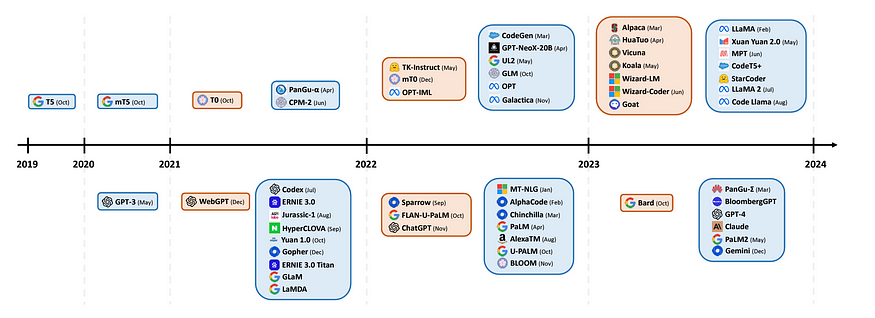

Labelers judge model outputs based on their own preferences, so how can we know whether they represent “human” preferences? What exactly is human preference? We don’t have a mathematical way to describe it, and it can vary across gender, ethnicity, age, education, nationality, religion, language, and more. The image above, from the authors of InstructGPT (Training Language Models to Follow Instructions with Human Feedback, Ouyang et al., 2022 [4]), shows that no labelers were aged 65 or older and that no Indigenous/Native American/Alaskan Native labelers were included. So, how do we define and measure human preference? How do we improve learning from human preference?
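For context on how preferences are turned into math in practice: the InstructGPT paper [4] trains a reward model on labelers’ comparisons with a pairwise loss, -log(sigmoid(r_preferred - r_rejected)), where r is the reward model’s score for a response. The sketch below computes that loss for a few hypothetical reward scores. It makes the concern above concrete: the loss only encodes whatever the particular labelers preferred, so who the labelers are shapes what the model learns.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def pairwise_preference_loss(r_preferred: float, r_rejected: float) -> float:
    """-log(sigmoid(r_preferred - r_rejected)): small when the labeler's preferred
    response already gets the higher reward, large when it gets the lower one."""
    return -math.log(sigmoid(r_preferred - r_rejected))


# Hypothetical reward-model scores for two responses to the same prompt.
print(round(pairwise_preference_loss(2.0, 0.0), 3))  # 0.127: agrees with the labeler
print(round(pairwise_preference_loss(0.0, 0.0), 3))  # 0.693: no preference yet (log 2)
print(round(pairwise_preference_loss(0.0, 2.0), 3))  # 2.127: disagrees with the labeler
```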

Conclusion

Beyond current applications, LLMs have profound long-term societal implications for education, employment, and governance. What is the future of human labor in an increasingly automated workforce? How will LLMs affect the economy and quality of life? How do we assign intellectual property rights over generated content? As we push the boundaries of LLM capabilities, we should acknowledge their inherent limitations and their impact on a society unprepared for such advancements, and we should not wait for the dust to settle before considering these impacts. AI systems have only begun to transform our workflows, and in a society increasingly dependent on them, it is imperative to frame a dialogue around this new paradigm.

How do we chart a course toward responsible innovation and equitable deployment of language technologies? Various safety measures are in place to reduce harmful content generation, but vulnerabilities remain. Mitigations like usage policies and monitoring are crucial, and emergent risks must be addressed through safety assessments and testing with diverse real-world data. Cross-functional research collaboration is needed to ensure the responsible adoption of increasingly powerful language models.

Bibliography and References

[1] Naveed, Humza, et al. A Comprehensive Overview of Large Language Models. arXiv:2307.06435, arXiv, 9 Apr. 2024. arXiv.org, https://doi.org/10.48550/arXiv.2307.06435.

[2] Touvron, Hugo, et al. Llama 2: Open Foundation and Fine-Tuned Chat Models. arXiv:2307.09288, arXiv, 19 July 2023. arXiv.org, https://doi.org/10.48550/arXiv.2307.09288.

[3] OpenAI, et al. GPT-4 Technical Report. arXiv:2303.08774, arXiv, 4 Mar. 2024. arXiv.org, https://doi.org/10.48550/arXiv.2303.08774.

[4] Ouyang, Long, et al. Training Language Models to Follow Instructions with Human Feedback. arXiv:2203.02155, arXiv, 4 Mar. 2022. arXiv.org, https://doi.org/10.48550/arXiv.2203.02155.

[5] Li, Cheng, et al. Large Language Models Understand and Can Be Enhanced by Emotional Stimuli. arXiv:2307.11760, arXiv, 12 Nov. 2023. arXiv.org, https://doi.org/10.48550/arXiv.2307.11760.


Note: Content contains the views of the contributing authors and not Towards AI.