Automated Rationale Generation: Moving Towards Explainable AI

Last Updated on November 4, 2020 by Editorial Team

Author(s): Shreyashee Sinha


As AI systems spread, a parallel field, Explainable AI (XAI), has become essential for fostering trust in these intelligent systems and making their workings interpretable to humans. Explanations help the human collaborator understand the circumstances that led to any unexpected behavior in the system and allow the operator to make an informed decision. This article summarizes a paper that demonstrates an approach to generating real-time natural language rationales from autonomous agents in sequential problems and evaluates how humanlike those rationales are.

Abstract

Using an agent that plays Frogger as the setting, a corpus of explanations is collected and fed to a neural rationale generator to produce rationales. These are then studied to measure user perceptions of confidence, understandability, humanlike-ness, and adequate justification. The generated rationales were found to align with the intended ones, and users preferred detailed rationales that accurately described the agent's mental model.

Introduction

Automated rationale generation is the process of producing a natural language explanation for an agent's behavior as if a human had performed it, verbalizing plausible motivations for the action. Since rationales are natural language explanations and don’t literally expose the inner workings of an intelligent system, they are much more accessible and intuitive to non-experts, which brings advantages such as higher degrees of satisfaction, confidence, rapport, and willingness to use autonomous systems.

Previous work showed that recurrent neural networks could translate internal state and action representations into natural language, but it relied on synthetic data. To explore whether plausible, human-like rationales can be generated, a corpus is collected with a remote think-aloud methodology and used to train a neural network, based on that earlier work, to translate an agent’s state into natural language rationales. Two studies are then conducted to assess the quality of the rationales and user preferences.

Methods

Data Collection

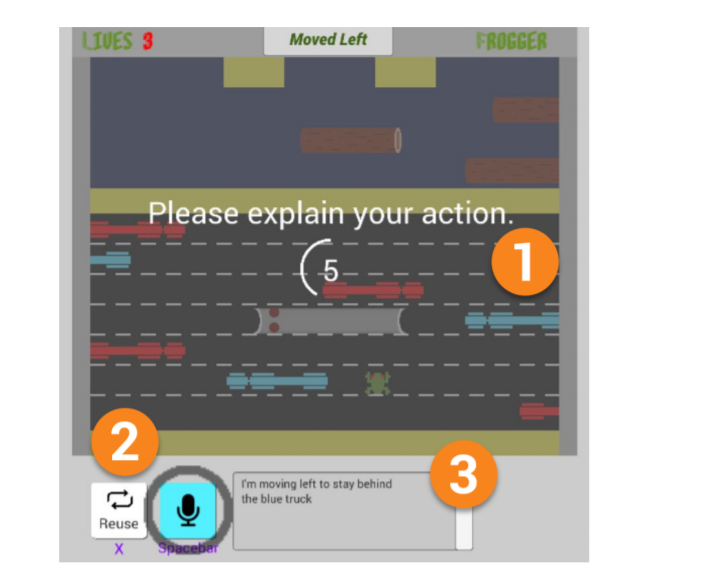

A modified version of Frogger is built that asks the player for a natural language explanation after every action, which they speak into a microphone. A speech-to-text library then converts the speech to text so that it can be confirmed. The game was deployed to a sample of 60 users, and the game state and the users’ explanations were captured.
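One way to picture the captured corpus is as a list of per-action records. The sketch below is hypothetical: the `RationaleRecord` class, its field names, the JSON Lines layout, and the sample explanation text are all assumptions made for illustration, not the storage format used in the paper.

```python
import json
from dataclasses import dataclass, asdict
from typing import List


@dataclass
class RationaleRecord:
    """One captured step: what the game looked like, what the player did, and why."""
    player_id: str      # hypothetical identifier for the participant
    step: int           # index of the action within the play session
    state: List[str]    # serialized game state at the time of the action
    action: str         # the action the player took, e.g. "up"
    explanation: str    # transcribed and confirmed spoken explanation


def to_jsonl(records: List[RationaleRecord]) -> str:
    """Serialize records as JSON Lines, one record per line."""
    return "\n".join(json.dumps(asdict(r)) for r in records)


def from_jsonl(text: str) -> List[RationaleRecord]:
    """Parse JSON Lines back into records, skipping blank lines."""
    return [RationaleRecord(**json.loads(line)) for line in text.splitlines() if line]


# Invented sample, for illustration only.
sample = RationaleRecord(
    player_id="p01",
    step=0,
    state=[".", "C", ".", "F"],
    action="up",
    explanation="I moved up because the lane ahead was clear.",
)
```

Pairing each explanation with the state and action it refers to is what lets the corpus later serve as input/output pairs for a sequence-to-sequence model.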

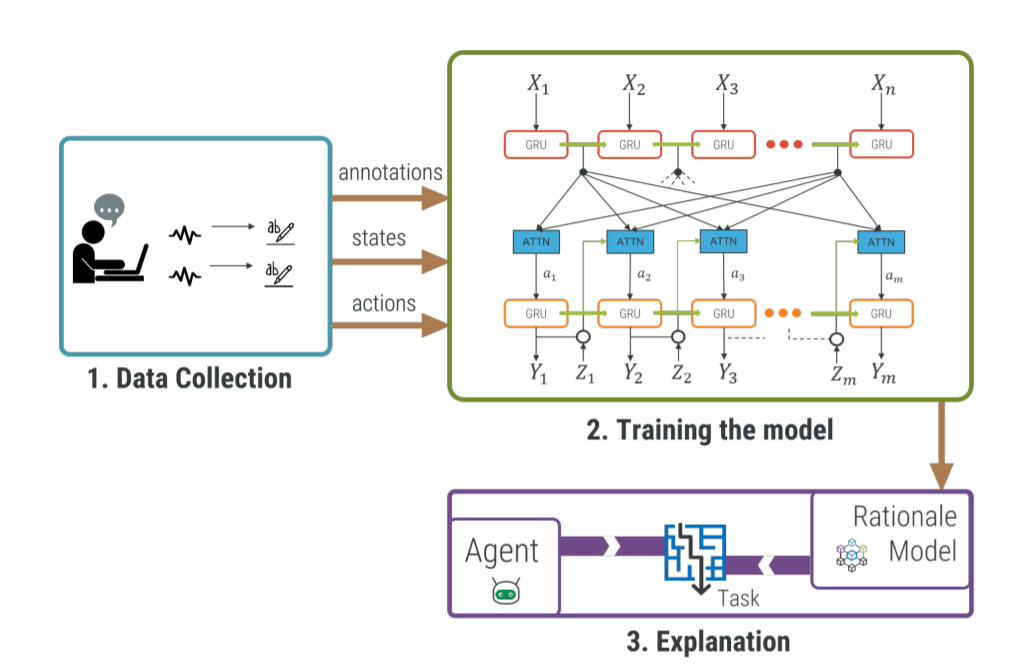

Training the Neural Model

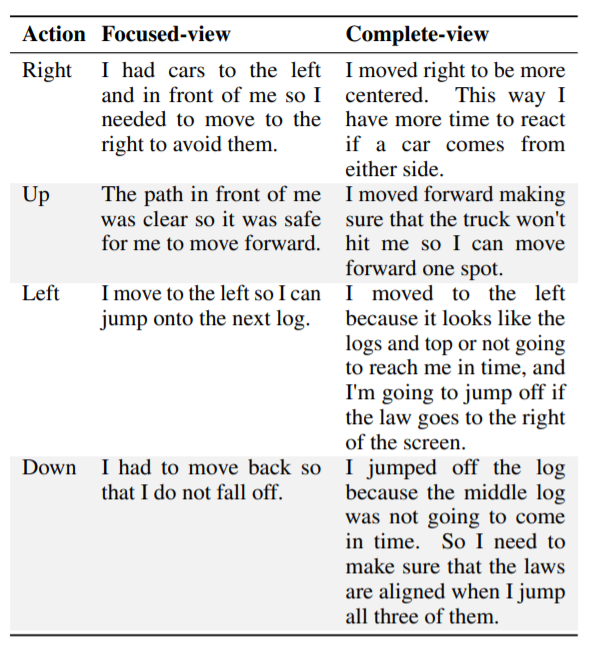

An encoder-decoder network is then used to generate relevant natural language explanations for any given action. Both the encoder and the decoder are RNNs composed of Gated Recurrent Unit (GRU) cells. The input to the network is the state of the game environment serialized into a sequence of symbols, where each symbol characterizes a sprite in the grid-based representation, along with information about Frogger’s position, the most recent action, the number of lives remaining, etc. It was found that a reinforcement learning agent using tabular Q-learning plays the game effectively when given a limited window for observation. Thus, two configurations were used: a focused-view, which limits the observation window to a 7×7 grid, and a complete-view, which uses the entire game grid.
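To make the two input configurations concrete, here is a minimal sketch of how a grid state might be serialized into a symbol sequence. The sprite symbols, the padding symbol, the token names such as `pos_8_4`, centering the 7×7 window on the frog, and the 9×10 demo grid are all assumptions for illustration; the article does not specify the actual vocabulary or layout.

```python
from typing import List, Tuple

# Hypothetical sprite symbols; the real vocabulary is not given in the article.
EMPTY, CAR, LOG, FROG = ".", "C", "L", "F"
PAD = "#"  # assumed marker for cells that fall outside the map


def crop_window(grid: List[List[str]], frog: Tuple[int, int], size: int = 7) -> List[List[str]]:
    """Return a size x size window centered on the frog, padding off-map cells."""
    half = size // 2
    fr, fc = frog
    rows, cols = len(grid), len(grid[0])
    window = []
    for r in range(fr - half, fr + half + 1):
        row = []
        for c in range(fc - half, fc + half + 1):
            inside = 0 <= r < rows and 0 <= c < cols
            row.append(grid[r][c] if inside else PAD)
        window.append(row)
    return window


def serialize_state(grid: List[List[str]], frog: Tuple[int, int],
                    last_action: str, lives: int, focused: bool) -> List[str]:
    """Flatten the chosen view row by row, then append the extra state tokens."""
    view = crop_window(grid, frog) if focused else grid
    tokens = [cell for row in view for cell in row]
    tokens += [f"pos_{frog[0]}_{frog[1]}", f"act_{last_action}", f"lives_{lives}"]
    return tokens


def demo_grid() -> List[List[str]]:
    """A small made-up 9x10 map with the frog on the bottom row and one car."""
    grid = [[EMPTY] * 10 for _ in range(9)]
    grid[8][4] = FROG
    grid[6][5] = CAR
    return grid


focused_tokens = serialize_state(demo_grid(), (8, 4), "up", 3, focused=True)
complete_tokens = serialize_state(demo_grid(), (8, 4), "up", 3, focused=False)
```

In this sketch the focused view always yields 49 grid symbols plus the extra tokens, while the complete view grows with the map. A sequence like this is what the encoder would consume, with the decoder emitting the rationale word by word.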

Perception and Preference Studies

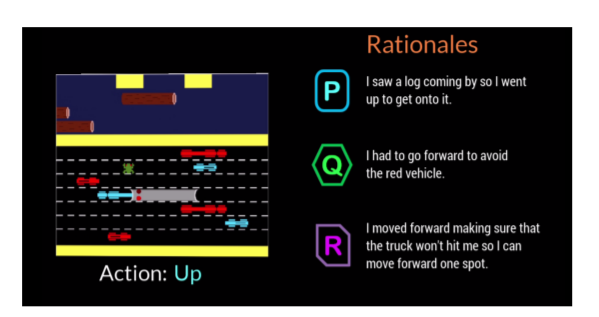

With the model trained and capable of generating rationales, as shown in the accompanying figure, a user perception study of the explanations is conducted, followed by a study of users' preference between the focused and complete views.

For the perception study, the generated rationale is evaluated against a randomly sampled baseline rationale and an “exemplar” rationale, which was decided unanimously by the participants of the study. A set of 128 researchers then evaluated each of the 3 rationales on a 5-point scale based on metrics of confidence, understandability, humanlike-ness, and adequate justification. The results made it clear that the researchers preferred the generated rationale much more than the “random” baseline. They also indicated a strong preference for the generated rationale, sometimes even over the exemplar one.

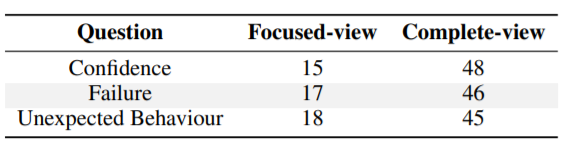

Further, the preference evaluation focused on the difference between the complete-view and the focused-view. A set of 65 researchers were asked to answer questions on the main difference, confidence, failure, and unexpected behavior between these two views. As in the perception study, the responses were converted to dimensions and analyzed quantitatively. The analysis showed a higher degree of confidence in the complete-view, with users preferring its representation of the complete mental state of the agent over the succinct but narrow-sighted rationale of the focused-view.

Conclusion

These insights can help us design better human-centered, rationale-generating autonomous agents. The rationale generation can also be tuned to the context to produce styles that meet the needs of the task, whether a succinct explanation (focused-view) or a more holistic insight (complete-view).

As our analysis reveals, context is king.

The preference study shows why detail is necessary, especially in tasks that need to communicate failure. Because this is a novel field of study, there are several limitations that can be improved upon, such as the ability of users to contest rationales and interactivity. Moreover, this idea can be extended to work with non-discrete actions as well, and future work can examine the feasibility of the system in larger state-action spaces. The results are promising, for sure!

References

Automated Rationale Generation: Moving Towards Explainable AI was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.


