From Raw to Refined: A Journey Through Data Preprocessing — Part 4: Data Encoding

Last Updated on December 21, 2023 by Editorial Team

Author(s): Shivamshinde

Originally published on Towards AI.

Why data encoding is necessary

Humans can understand textual information. However, this is not the case for machines and any algorithms that machines run. Machines and algorithms only understand the numbers and the mathematical operations of the numbers. Therefore, if one needs to communicate textual information with machines or if one needs to input textual information to the algorithms, then the said information should be first converted into the numerical format that could represent the information.

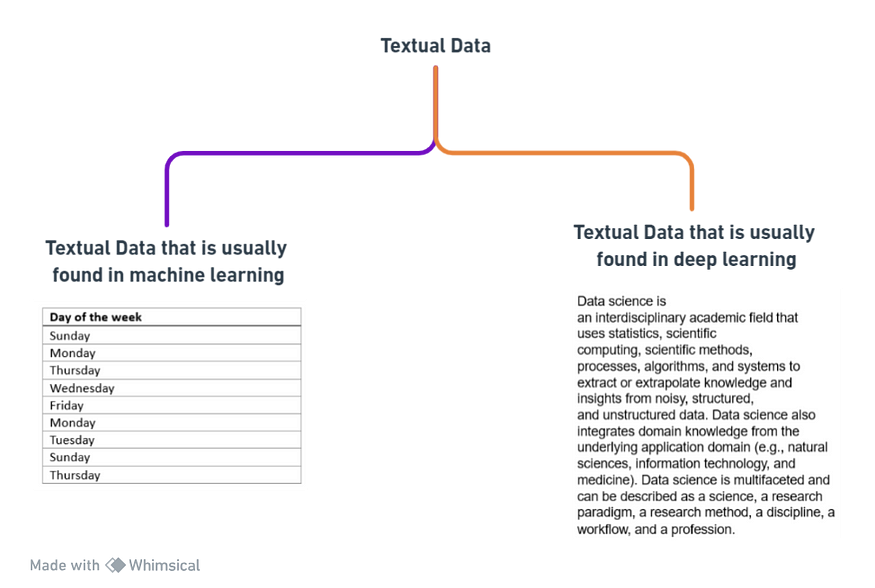

Data encountered in machine learning and deep learning tasks

Usually, the textual data we encounter in machine learning is discrete, such as columns with limited textual values. For example, the data could contain a column named ‘day of the week’ for which only seven values are possible. Another example could be the column ‘Seasons,’ for which only four values are possible. This kind of textual data can be encoded using ordinal or one-hot encoding methods. These methods are pretty easy to implement with the built-in classes present in the Scikit-Learn library.

On the other hand, in deep learning, especially in natural language processing, we will encounter textual data that is in the format of sentences or whole paragraphs. For this kind of textual data, we use a different approach. For this kind of data, we first clean the data to make it proper for encoding. After the cleaning, we use encoding methods such as CountVectorizer, TfidfVectorizer, and HashingVectorizer from the Scikit-Learn library. Another critical method that is more sophisticated is known as word embedding. We can use the methods present in the Python libraries, such as Tensorflow and gensim to encode the data using word embedding methods.

Methods used to encode the textual data that is usually found in machine learning tasks

Ordinal Encoding and One-hot Encoding are the two most popular techniques for dealing with this type of textual data. Of course, other methods can deal with such data, but people use them less frequently.

Let’s use the ‘tips’ dataset in the Seaborn library to demonstrate these methods.

- Ordinal Encoding

This encoding type is often used with the data that can be ranked. For example, if we take an example of days in the week, we can rank them from Sunday as 0 to Saturday as 6.

Let’s see how it is done.

## Importing required libraries

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import warnings

warnings.filterwarnings('ignore')

## Importing the tips dataset using seaborn

tips = sns.load_dataset('tips')

tips.head()

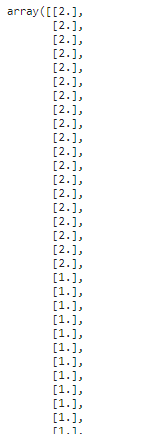

Let’s use the ordinal encoding method on the ‘day’ column.

from sklearn.preprocessing import OrdinalEncoder

enc = OrdinalEncoder()

transformed_day_column = enc.fit_transform(tips[['day']])

transformed_day_column

Many more rows are in the output, but I am just showing some of them to save space.

2. One-hot encoder

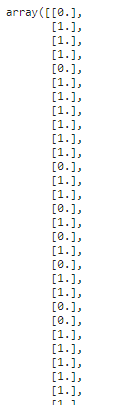

We use the one-hot encoding method when our textual data in columns doesn’t have any order. One example would be the column ‘sex’ in the tips dataset. Since the sex has no order or hierarchy, we can use one-hot encoding in this case.

Let’s see how to perform one-hot encoding on the ‘sex’ column of the tips dataset.

from sklearn.preprocessing import OneHotEncoder

ohe = OneHotEncoder()

transformed_sex_column = enc.fit_transform(tips[['sex']])

transformed_sex_column

Again, many more rows are in the output, but I am just showing some of them to save space.

Methods used to encode the textual data that is usually found in deep learning task

Scikit-Learn provides some classes for very basic encoding of textual data. Some of these classes are CountVectorizer, TfidfVectorizer, and HashingVectorizer. These classes will help us encode the textual data but they are far from efficient in representing the intent or nature of textual data.

The most efficient technique that is used for the encoding of textual data is called word embedding.

Word embeddings are a type of word representation that allows words with similar meanings to have a similar representation. Word embeddings are techniques where individual words are represented as real-valued vectors in a predefined vector space.

There are three algorithms that can be used to learn the word embedding from the text corpus.

- Embedding layer

An embedding layer is a word embedding learned jointly with a neural network model on specific natural language processing tasks, such as language modeling or document classification.

It requires that document text be cleaned and prepared such that each word is one-hot encoded. The size of vector space is specified as part of the model, such as 50, 100, or 300 dimensions. The vectors are initialized with small random numbers. The embedding layer is used on the front end of a neural network and is fit in a supervised way using the backpropagation algorithm.

The one-hot encoded words are mapped to the word vectors. If a multilayer Perceptron model is used, then the word vectors are concatenated before being fed as input to the model. If a recurrent neural network is used, each word may be taken as one input in a sequence.

This approach of learning an embedding layer requires a lot of training data and can be slow, but it will learn an embedding both targeted to the specific text data and the NLP task.

Check out the following jupyter notebook for implementing the embedding layer into the neural network.

IMDB sentiment analysis – keras TextVectorization

Explore and run machine learning code with Kaggle Notebooks U+007C Using data from [Private Datasource]

www.kaggle.com

2. Word2Vec

Word2Vec is a method for effectively learning a standalone word embedding from a text corpus.

These standalone word embeddings could be used to do the math of the representation of words.

For example,

subtracting the ‘man-ness’ from ‘king’ and adding ‘women-ness’ will give us the meaning of the word ‘queen.’ The analogy is here is

King → Queen => Man → Women

Two learning models that can be used as part of the word2vec approach to learning word embedding were introduced. They are:

a. continuous bag-of-words or CBOW model

b. continuous skip-gram model

The continuous bag of words model learns the embedding by predicting the current word based on its context (surrounding words). On the other hand, the continuous skip-gram model learns by predicting the contexts (surrounding words) given a current word.

Taken from “Efficient Estimation of Word Representations in Vector Space”, 2013

3. Global Vectors for Word Representation (GloVe)

This is another approach to learning the embedding of the textual data.

Ways to use the embedding methods

- Learning the embedding

In this approach, we learn the embedding for our problem using the textual data available to us. If we are to learn the accurate embedding that could represent the nature of words in our corpus from scratch, we will need a large amount of textual data, maybe even billions of words. There are two ways to learn the embedding:

learning the embedding without any other networks

This approach is used to learn the embedding when we need to same embedding for many tasks. Here, we will learn the embedding, save it, and then use it for as many tasks as we require.

learning the embedding along with the neural networks used for our specific task

This approach is used to learn embedding when we need not use the embedding for more than one task. Therefore, we will learn the embedding for our data while training the neural network.

2. Reusing the embedding

Everyone cannot afford to get a large amount of data to get their embedding vector. So, the solution, in this case, is to use freely available pre-trained embedding vectors from the internet. Once we obtain these freely available embeddings, we can make use of them in the following two ways.

Use the downloaded embeddings as they are

Once we download the embeddings from the internet, we can use them directly to train our neural network for our task. You can check out the following Kaggle notebook to know how to do this in code.

IMDB Sentiment analysis – pretrained embeddings

Explore and run machine learning code with Kaggle Notebooks U+007C Using data from [Private Datasource]

www.kaggle.com

Updating the downloaded embedding

We can update the downloaded embedding along with the neural network to tailor the embedding for our task at hand.

References:

User guide: contents

User Guide: Supervised learning- Linear Models- Ordinary Least Squares, Ridge regression and classification, Lasso…

scikit-learn.org

NLP Starter U+1F4CB Continuous Bag of Words (CBOW)

Explore and run machine learning code with Kaggle Notebooks U+007C Using data from U.S. Patent Phrase to Phrase Matching

www.kaggle.com

Implementing Deep Learning Methods and Feature Engineering for Text Data: The Continuous Bag of…

The CBOW model architecture tries to predict the current target word (the center word) based on the source context…

www.kdnuggets.com

Thanks for reading! If you have any thoughts on the article, then please let me know.

Are you struggling to choose what to read next? Don’t worry, I have got you covered.

From Raw to Refined: A Journey Through Data Preprocessing — Part 3: Duplicate Data

This article will explain how to identify duplicate records in the data and, the different ways to deal with the…

pub.towardsai.net

and one more…

From Raw to Refined: A Journey Through Data Preprocessing — Part 2 : Missing Values

Why deal with missing values?

pub.towardsai.net

Shivam Shinde

Have a great day!

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.