Practical Guide to Boosting Algorithms In Machine Learning

Author(s): Youssef Hosni. Originally published on Towards AI. Use weak learners to create a stronger one. Boosting (originally called hypothesis boosting) refers to any ensemble method that can combine several weak learners into a strong learner. The general idea of most boosting …
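To make the weak-to-strong idea concrete, here is a minimal sketch of AdaBoost, one specific boosting algorithm chosen here for illustration (the excerpt does not say which algorithms the guide covers). It assumes binary labels in {-1, +1}, uses one-feature threshold "stumps" as the weak learners, and runs on a hypothetical toy dataset. Each round fits the stump with the lowest weighted error, gives it a vote weight alpha, and then up-weights the samples it got wrong so the next stump focuses on them.

```python
import math

def fit_stump(X, y, w):
    """Return (weighted_error, feature, threshold, polarity) of the best one-feature stump."""
    best = None
    for j in range(len(X[0])):
        for thr in sorted({row[j] for row in X}):
            for polarity in (1, -1):
                # The stump predicts `polarity` when row[j] >= thr, else -polarity.
                preds = [polarity if row[j] >= thr else -polarity for row in X]
                err = sum(wi for wi, p, yi in zip(w, preds, y) if p != yi)
                if best is None or err < best[0]:
                    best = (err, j, thr, polarity)
    return best

def stump_predict(stump, row):
    _, j, thr, polarity = stump
    return polarity if row[j] >= thr else -polarity

def adaboost(X, y, n_rounds=10):
    """Fit AdaBoost on labels in {-1, +1}; return a list of (alpha, stump) pairs."""
    n = len(X)
    w = [1.0 / n] * n  # uniform sample weights to start
    ensemble = []
    for _ in range(n_rounds):
        stump = fit_stump(X, y, w)
        err = max(stump[0], 1e-10)  # guard against dividing by zero for a perfect stump
        if err >= 0.5:
            break  # no stump beats chance on the current weights
        alpha = 0.5 * math.log((1 - err) / err)  # vote weight of this weak learner
        ensemble.append((alpha, stump))
        # Boosting step: raise weights of misclassified samples, lower the rest.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, row))
             for wi, yi, row in zip(w, y, X)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def predict(ensemble, row):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * stump_predict(stump, row) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1

if __name__ == "__main__":
    # Toy 1-D data that no single threshold can separate (+, +, -, -, +, +).
    X = [[1], [2], [3], [4], [5], [6]]
    y = [1, 1, -1, -1, 1, 1]
    model = adaboost(X, y, n_rounds=3)
    print([predict(model, row) for row in X])  # → [1, 1, -1, -1, 1, 1]
```

On this toy data, three rounds are enough: a constant stump, a stump splitting at 3, and a stump splitting at 5 combine into an interval rule that no single stump can express, which is exactly the "several weak learners into a strong learner" effect.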

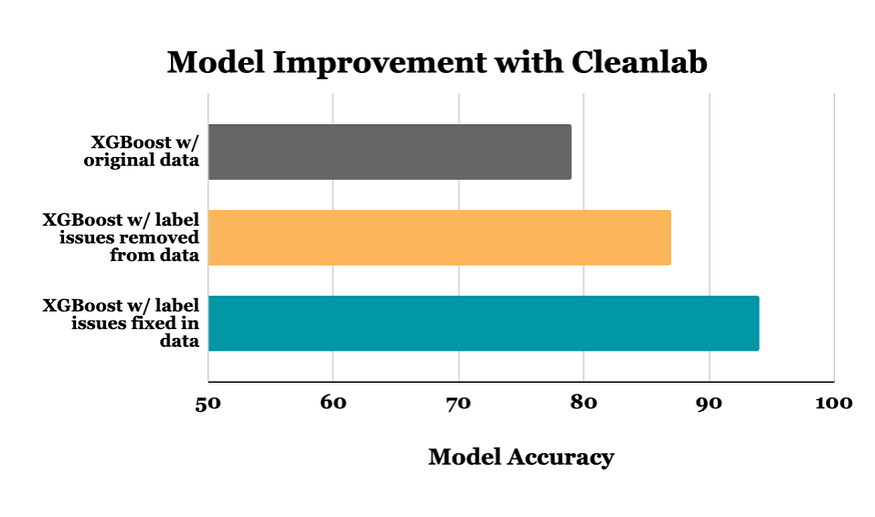

Handling Mislabeled Tabular Data to Improve Your XGBoost Model

Author(s): Chris Mauck. Originally published on Towards AI. Reduce prediction errors by 70% using data-centric techniques. “Instead of focusing on the code, companies should focus on developing systematic engineering practices for improving data in ways that are reliable, efficient, and systematic. In …

Do House Sale-Price Prediction Like a Professional Data Scientist

Author(s): John Adeojo. Originally published on Towards AI. Advanced Machine Learning Tactics. Join me as I work through a fun and challenging regression problem: predicting house-sale prices. I will walk you through everything from best …

Ensemble Learning: Maximizing Predictive Power through Collective Intelligence

Author(s): David Andres. Originally published on Towards AI. Ensemble Learning is a powerful method used in machine learning to improve model performance by combining multiple individual models. These individual models, also known as “base models” or …
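Ensembles can combine base models in several ways (voting, averaging, stacking, boosting), and the excerpt does not say which the article uses. As a hedged illustration of the simplest option, the sketch below applies hard majority voting to three hypothetical base classifiers. Their mistakes were deliberately chosen not to overlap, which is the best case for voting; real base models usually make correlated errors, so the gain is typically smaller.

```python
from collections import Counter

def make_noisy_model(wrong_on):
    """Hypothetical base model: predicts 'x is even' correctly except on inputs in `wrong_on`."""
    def model(x):
        label = x % 2 == 0
        return (not label) if x in wrong_on else label
    return model

def majority_vote(models, x):
    """Return the label predicted by the most base models (hard voting)."""
    votes = [m(x) for m in models]
    # most_common(1) returns [(label, count)]; ties go to the label encountered first.
    return Counter(votes).most_common(1)[0][0]

if __name__ == "__main__":
    models = [make_noisy_model({1}), make_noisy_model({2}), make_noisy_model({3})]
    xs = list(range(6))
    truth = [x % 2 == 0 for x in xs]
    for i, m in enumerate(models):
        acc = sum(m(x) == t for x, t in zip(xs, truth)) / len(xs)
        print(f"base model {i}: accuracy {acc:.2f}")
    ensemble_acc = sum(majority_vote(models, x) == t for x, t in zip(xs, truth)) / len(xs)
    print(f"majority vote: accuracy {ensemble_acc:.2f}")
```

Each base model is wrong on one of the six inputs, but on every input at least two of the three are right, so the vote recovers the correct label everywhere.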

How to Train XGBoost Model With PySpark

Author(s): Divy Shah. Originally published on Towards AI. Why XGBoost? XGBoost (eXtreme Gradient Boosting) is one of the most popular and widely used ML algorithms, applied by data scientists in every industry. This algorithm is also very efficient in terms of reducing computing …

Getting the data

Author(s): Avishek Nag. Originally published on Towards AI. A comparative study of different vector space models and text classification techniques, such as XGBoost. In this article, we will discuss different text classification techniques to solve the BBC news article categorization problem. We …
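The excerpt does not list which vector space models the article compares. As one common example, here is a minimal TF-IDF sketch that turns documents into sparse vectors and compares them with cosine similarity. It assumes whitespace tokenization, raw term counts, and the unsmoothed idf ln(N / df); library implementations such as scikit-learn's TfidfVectorizer use a smoothed idf by default. The three headlines are made up for illustration and are not from the BBC dataset.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """Return one sparse {term: weight} dict per document, weight = count * ln(N / df)."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears at least once.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        vectors.append({t: c * math.log(n_docs / df[t]) for t, c in tf.items()})
    return vectors

def cosine(u, v):
    """Cosine similarity of two sparse vectors; 0.0 if either has zero norm."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

if __name__ == "__main__":
    docs = [
        "the team won the football match",
        "the striker scored in the match",
        "the bank raised interest rates",
    ]
    vecs = tfidf_vectors(docs)
    print(round(cosine(vecs[0], vecs[1]), 3), round(cosine(vecs[0], vecs[2]), 3))
```

Because "the" appears in every document, its idf is ln(1) = 0 and it contributes nothing; the two sports headlines are similar only through the shared, rarer term "match". Vectors like these are the kind of features a downstream classifier (XGBoost or otherwise) could be trained on.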