Backpropagation and Vanishing Gradient Problem in RNN (Part 2)

Author(s): Alexey Kravets. Originally published on Towards AI. How it is reduced in LSTM (image: https://unsplash.com/photos/B22I8wnon34). In part 1 of this series, we went through back-propagation in an RNN model and both explained with formulas and showed numerically the vanishing gradient problem in …

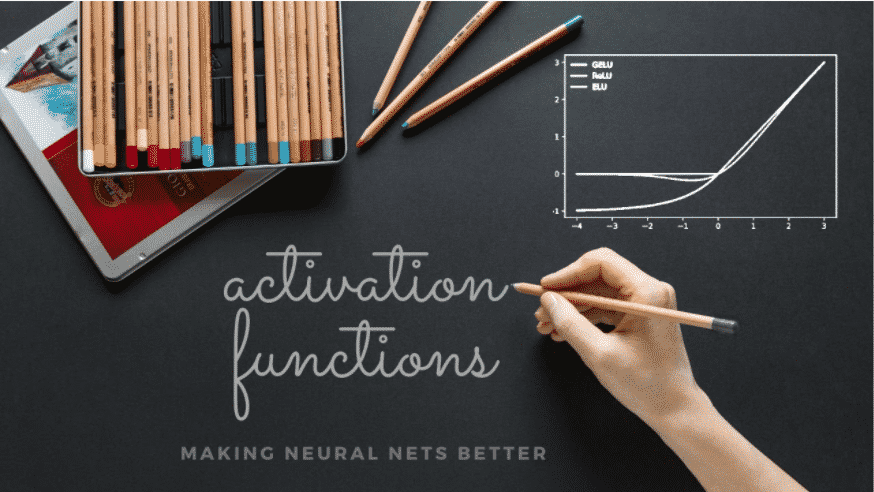

Parametric ReLU | SELU | Activation Functions Part 2

Author(s): Shubham Koli. Originally published on Towards AI. What is Parametric ReLU? Rectified Linear Unit (ReLU) is an activation function in neural networks. It is a popular choice among developers and researchers …

Backpropagation and Vanishing Gradient Problem in RNN (part 1)

Author(s): Alexey Kravets. Originally published on Towards AI. Theory and code (image: https://unsplash.com/@emilep). Introduction: In this article, I am not going to explain the applications of the RNN model or the intuition behind it; indeed, I expect the reader to already have some familiarity with …

Calculating the Backpropagation of a Network

Author(s): Javed Ashraf. Originally published on Towards AI. A beginner’s guide to the math behind backpropagation. Machine learning has become a trending topic for many programmers and companies to investigate and utilize. Though there are various frameworks and tools which help to …