Where are the Robots that Sci-Fi Movies and Books Promised?

Last Updated on May 22, 2020 by Editorial Team

Author(s): Padmaja Kulkarni

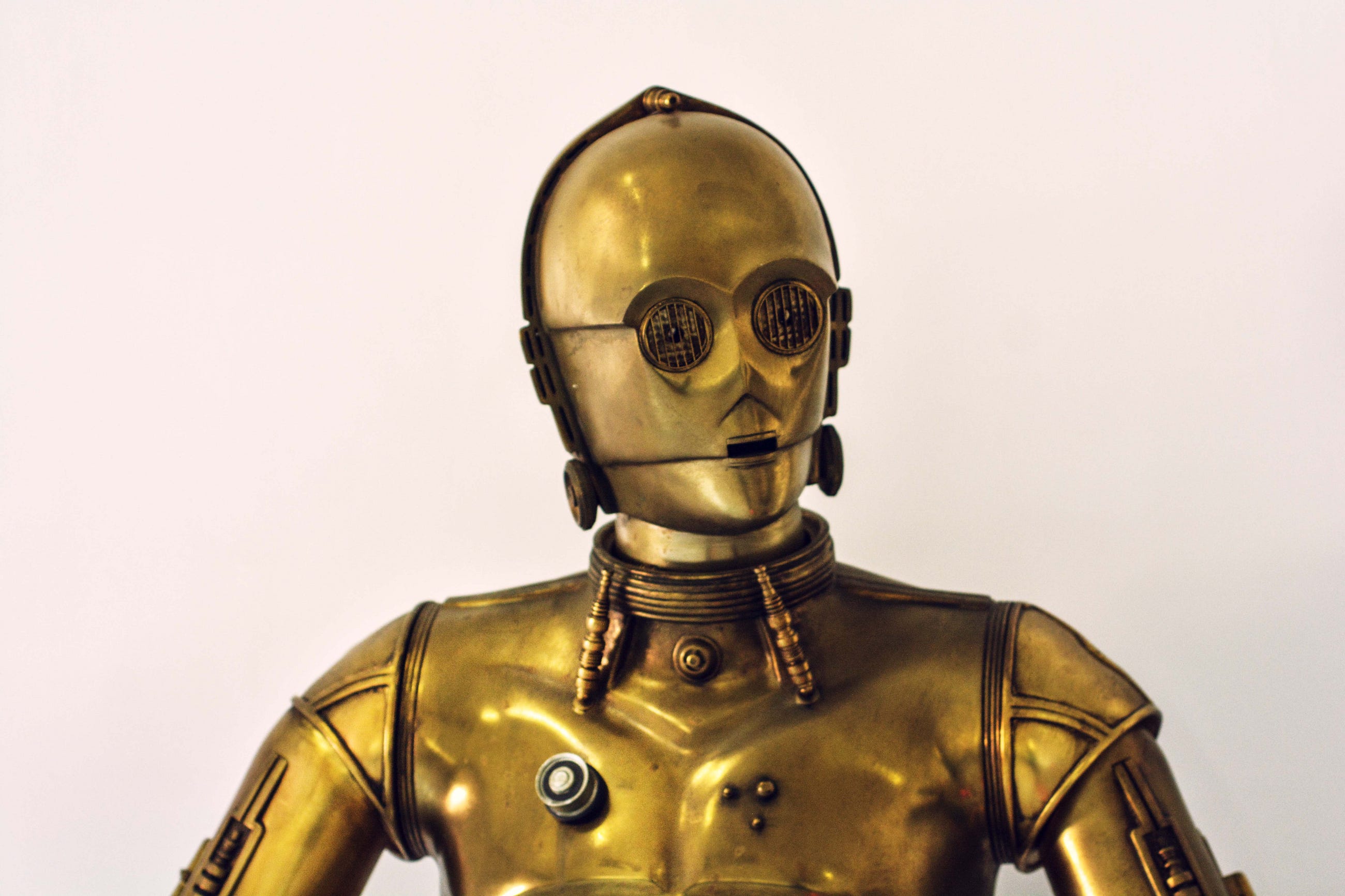

Sci-fi movies and books promised a future that looked rather different, filled with state-of-the-art robots. Star Wars gave us C-3PO and R2-D2. Back to the Future gave us dreams of time travel and ubiquitous flying cars. The Hitchhiker’s Guide to the Galaxy promised robots capable of human emotions, and The Jetsons promised robots that could look after the household: cooking, cleaning, and keeping us company. With modern technological advances and breakthroughs in the labs, all of this seemed possible, even likely. And yet, when we look around, we don’t seem to be even halfway there.

In reality, we have planes that come close to flying cars, and the capability to take a human to the moon, which is quite impressive. However, on the robotics front, we have the Roomba, a vacuum cleaner that is good at a single task; dishwashers with no adaptive intelligence; and programmable washing machines. We also have virtual assistants like Alexa and Google Home (which are not robots), and they often misunderstand my thick accent and can’t hold a conversation. So, where are the robots that sci-fi movies and books promised?

The last two years seemed promising with the introduction of new robotic platforms like Kuri, Nao, and Pepper, the friendly home robots, and TikTok, the tidying robot. However, Kuri and TikTok disappeared before they could even take off. Nao and Pepper’s applications remained restricted to a few scripted tasks. Spot Mini showed some promise as a robot dog capable of cleaning the house, but working with humans in an ever-changing environment is a great challenge for it, and for now, getting this robot to work in your house remains a distant prospect. Boston Dynamics created Atlas, a robot capable of hopping, running, and even backflipping. However, popular footage shows only the robot’s best attempts, not its general behavior.

So the question remains: where did we go wrong in creating the promised robots? One thing is for sure: creating these robots is not as easy as initially envisaged. We expect too much of robots. Our fantasies come from sci-fi movies, not from ongoing research and what can be extrapolated from it. We expect our robots to look like humans and have humanlike capabilities of sensing, grasping, and manipulation. Moreover, we expect them to reason about their surroundings and act autonomously. Let’s dive into these expectations and look at where the problems lie.

Robots that look like humans are really difficult to manufacture because of our complex body structure. We can’t even replicate a human hand because of its intricate structure: an adaptive skin layer, a core of bones for strength, nerves for sensory information, and muscles and tendons for flexible movement. The closest we have come is Sophia, but all she could do was recognize faces and give scripted answers.

Even if we replicate the joint structure of humans, adding the necessary adaptive capabilities without compromising strength remains a problem. Moreover, we can’t yet add sensing capabilities remotely close to those of the human body, which senses touch, vibration, pressure, and temperature at a microscopic level. This leaves humanlike robot bodies incapable of performing human-level tasks.

Grasping and manipulation are basic tasks that humans do effortlessly, even without visual aid. For robots, they require meticulous planning because of our inability to integrate sensing capabilities and an adaptive “skin.” Furthermore, we expect a robot to perform these tasks with 100% accuracy. Even 99% would mean that the robot drops a cup, on average, once for every 100 times it picks one up, and we certainly don’t want that.
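As a rough illustration of why even a high grasp success rate falls short, the short Python sketch below works through the arithmetic. It assumes every grasp is an independent attempt with the same success rate, which is a simplification, and the figure of 50 grasps per day is a hypothetical number chosen only for illustration.

```python
def expected_drops(success_rate: float, attempts: int) -> float:
    """Expected number of failed grasps over `attempts` independent attempts."""
    if not 0.0 <= success_rate <= 1.0:
        raise ValueError("success_rate must be between 0 and 1")
    return (1.0 - success_rate) * attempts


def prob_at_least_one_drop(success_rate: float, attempts: int) -> float:
    """Probability that at least one of `attempts` independent grasps fails."""
    if not 0.0 <= success_rate <= 1.0:
        raise ValueError("success_rate must be between 0 and 1")
    return 1.0 - success_rate ** attempts


if __name__ == "__main__":
    GRASPS_PER_DAY = 50  # hypothetical household workload, not from the article
    DAYS = 365

    for rate in (0.99, 0.999, 0.9999):
        yearly_attempts = GRASPS_PER_DAY * DAYS
        drops = expected_drops(rate, yearly_attempts)
        daily_risk = prob_at_least_one_drop(rate, GRASPS_PER_DAY)
        print(
            f"success rate {rate:.2%}: "
            f"~{drops:.0f} expected drops per year, "
            f"{daily_risk:.1%} chance of at least one drop per day"
        )
```

Under these assumptions, a 99% success rate works out to about one expected drop per 100 grasps, and the chance of getting through 100 grasps without a single drop is well under half.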

Cameras that help robots see, and the algorithms that use this sensory data to make sense of the surroundings, are still not accurate enough. Even when we use multiple cameras of different types, such as infrared, to expand a robot’s field of view and the richness of its information, processing such an enormous amount of data continuously is still a challenge. Just to put things in perspective, our brain sees an object a million times before it can identify it. The computational power and memory required to train a robot to know all the objects around it are still unattainable.

Reasoning about surroundings and acting autonomously are skills that humans develop over time. Sensory capabilities and reasoning skills are complementary. Moreover, our curiosity helps us learn and explore. How to code this is still a topic of ongoing research.

The lack of cheap robotic platforms with rich sensor modalities is one of the hurdles slowing down research. One might ask, why not use simulations if robots are not affordable? Well, we are not yet good at transferring skills from simulation to real robots, although current research treats this issue as immensely important. Moreover, creating a simulation environment capable of representing objects accurately, especially deformable ones, is challenging in itself.

Transferring algorithms from one robot to another is also a significant challenge because of differences in robot morphology, sensor interfaces, available modalities, and accuracy. Unlike the internet, where anyone can write their own webpage and customize pages from any website to a great extent, even on their own PC, robotics hardware does not provide such flexibility. The solutions offered for problems like manipulation, grasping, and planning remain platform dependent. Most of them cater to a specific lab scenario. Taking these skills into the dynamic and unpredictable outside world is a huge challenge.

We lack both the software and hardware capabilities to create the future generation of robots. But given the speed of innovation, robots capable of doing a single task autonomously and correctly seem within reach. The Roomba, a robot built to clean the floor autonomously, is already doing a great job. We have teleoperated robots that go into hazardous areas and rely on human reasoning skills to accomplish tasks, for example, bomb-disposal robots. We have robots like Da Vinci, which enable surgeons to manipulate surgical tools with submillimeter accuracy and improve the success of the surgery.

We might not have a robot from The Jetsons, but we can have a robot, similar to Kuri, that follows you around the house all day to remind you of your chores. We are testing and improving autonomous cars. Social presence robots and autonomous delivery robots have proved especially useful during the current COVID crisis and will continue to improve. It took three years, but we have a robotic hand that can solve a Rubik’s cube from scratch without any human help.

The sci-fi robotic dream might seem a bit far away, but we are walking, or rather sprinting, toward it with every passing day.

Where are the Robots that Sci-Fi Movies and Books Promised? was originally published in Towards AI — Multidisciplinary Science Journal on Medium, where people are continuing the conversation by highlighting and responding to this story.

Published by Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.