The NLP Cypher | 01.10.21

Last Updated on July 24, 2023 by Editorial Team

Author(s): Quantum Stat

NATURAL LANGUAGE PROCESSING (NLP) WEEKLY NEWSLETTER

Melting Clocks

Once in a while you discover a goodie in the dregs of research. A cipher-cracking paper recently emerged on using seq2seq models to crack 1:1 substitution ciphers.

(In a 1:1 substitution cipher, each ciphertext symbol always stands for the same fixed plaintext character. Read more here if you prefer to live dangerously.)

Several deciphering methods used today make a big assumption: that we know the target language of the cipher we need to crack. But when you dive into encrypted historical texts whose language is unknown or ambiguous, well, you tend to get a big headache.

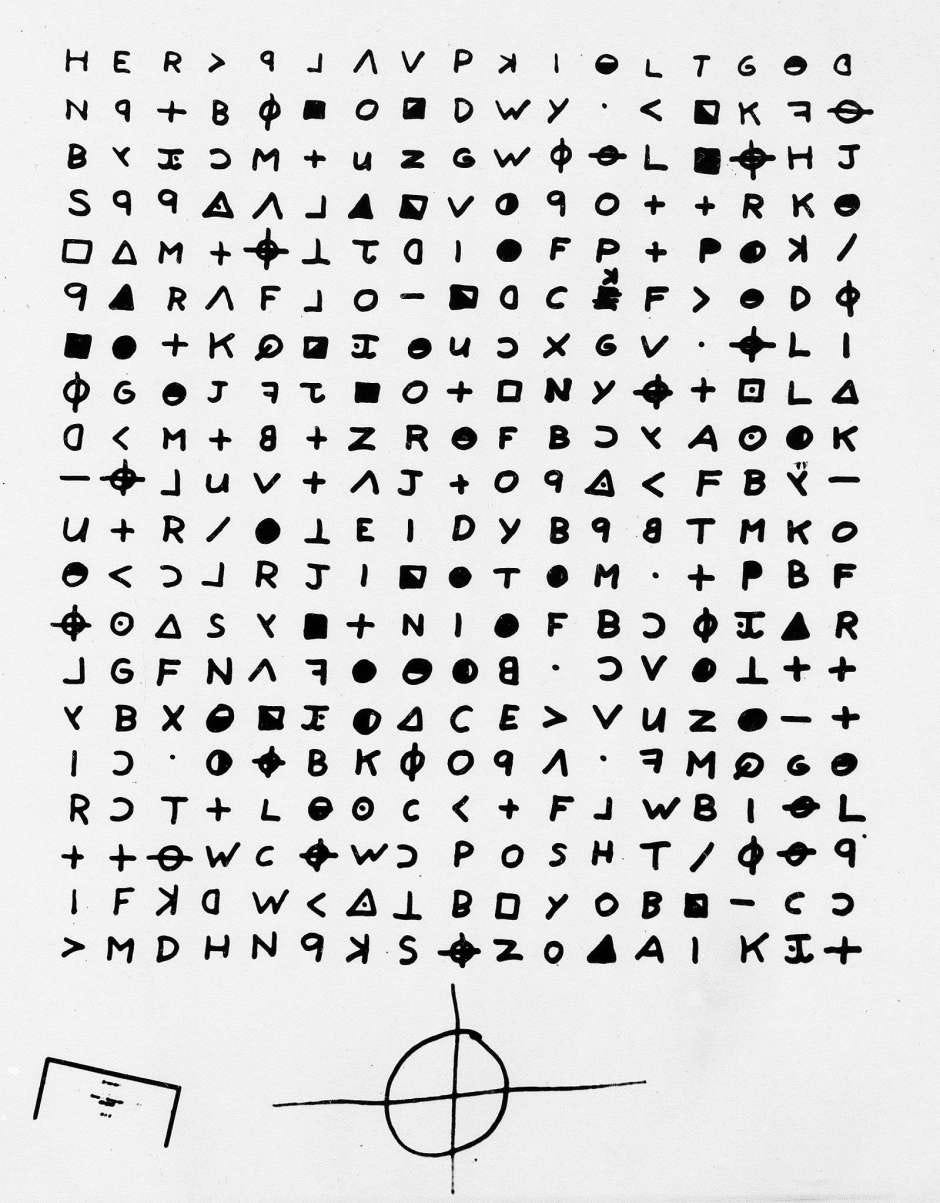

When you begin to attack encrypted text, the ciphertext can take various forms: alphanumeric (numbers/letters), symbolic, or a mix of both (like the Zodiac Killer’s ciphers).

However, IF we know ahead of time that the cipher’s plaintext language is… say, English (and not Latin or any other language), we are off to a good start with a healthy advantage. Why? Because we can leverage statistical features of English that differ from those of other languages. E.g., “e” is the most frequent letter in English, so the most frequent symbol in the ciphertext may well stand for “e”. By applying heuristics like this letter by letter, you slowly turn into Tom Hanks from The Da Vinci Code.

Letter Frequencies in the English Language
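To make the frequency heuristic concrete, here is a minimal Python sketch of naive frequency analysis for a 1:1 substitution cipher. It assumes lowercase English letters and a common textbook frequency ordering (`ENGLISH_ORDER`, an approximation that varies by corpus); the function names and the sample ciphertext are illustrative, not from the paper. Pairing ranks like this is only a starting guess: on short ciphertexts most letters come out wrong, which is why practical attacks refine the key with bigram statistics and search.

```python
from collections import Counter
import string

# Approximate ordering of English letters from most to least frequent.
# This is a common textbook ordering; exact rankings vary by corpus.
ENGLISH_ORDER = "etaoinshrdlcumwfgypbvkjxqz"


def letter_ranking(text: str) -> list[str]:
    """Return the distinct letters of `text` (lowercased), most frequent first."""
    counts = Counter(ch for ch in text.lower() if ch in string.ascii_lowercase)
    # Sort by descending count; break ties alphabetically so results are deterministic.
    return sorted(counts, key=lambda ch: (-counts[ch], ch))


def naive_frequency_key(ciphertext: str) -> dict[str, str]:
    """Guess a key by pairing the k-th most frequent cipher letter with the k-th English letter."""
    return dict(zip(letter_ranking(ciphertext), ENGLISH_ORDER))


def apply_key(ciphertext: str, key: dict[str, str]) -> str:
    """Substitute each cipher letter using `key`; leave other characters unchanged."""
    return "".join(key.get(ch, ch) for ch in ciphertext.lower())


if __name__ == "__main__":
    ciphertext = "Xlmw mw e wigvix qiwweki"  # hypothetical sample ciphertext
    key = naive_frequency_key(ciphertext)
    print(apply_key(ciphertext, key))  # a first, mostly wrong, guess to refine by hand
```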

What’s really interesting about this paper is that the authors wanted to test whether a multilingual seq2seq transformer could crack ciphers WITHOUT knowing the language of the plaintext. They framed decipherment as a sequence-to-sequence translation problem and trained the model at the character level.
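One way to picture the seq2seq framing: every training example is a (ciphertext, plaintext) pair, tokenized one character per token, produced by enciphering real text with a random 1:1 key. The sketch below assumes a fresh random key per example and English letters only; it illustrates the idea and is not the paper's actual data pipeline (a multilingual setup would draw plaintext from several languages). All function names are hypothetical.

```python
import random
import string


def random_substitution_key(rng: random.Random) -> dict[str, str]:
    """Build a random 1:1 (bijective) mapping from plaintext letters to cipher letters."""
    shuffled = list(string.ascii_lowercase)
    rng.shuffle(shuffled)
    return dict(zip(string.ascii_lowercase, shuffled))


def encipher(plaintext: str, key: dict[str, str]) -> str:
    """Apply the substitution key; non-letters such as spaces pass through unchanged."""
    return "".join(key.get(ch, ch) for ch in plaintext.lower())


def make_training_pair(plaintext: str, rng: random.Random) -> tuple[list[str], list[str]]:
    """Return (source, target) character-token sequences for a seq2seq model.

    Drawing a fresh key per example means the model cannot memorize a single key
    and has to infer the mapping from the ciphertext itself.
    """
    cipher = encipher(plaintext, random_substitution_key(rng))
    return list(cipher), list(plaintext.lower())


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed for reproducibility
    src, tgt = make_training_pair("attack at dawn", rng)
    print("source:", "".join(src))
    print("target:", "".join(tgt))
```

Because the key is bijective, repeated plaintext letters always produce repeated cipher letters in the same positions; that structural pattern is the signal a character-level model can learn from.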

What’s cool is that they tested the model on historical ciphers that had previously been cracked, such as the Borg cipher, and it deciphered the first 256 characters with very low error. According to the authors, this is the first application of sequence-to-sequence neural models to decipherment!
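For a 1:1 substitution cipher, the predicted plaintext is exactly as long as the ciphertext, so decipherment error can be counted position by position. The sketch below computes such a per-character error rate under that alignment assumption; it is an illustration, not necessarily the exact metric reported in the paper, and `symbol_error_rate` is a hypothetical name.

```python
def symbol_error_rate(predicted: str, reference: str) -> float:
    """Fraction of positions where the predicted plaintext differs from the reference.

    Assumes both strings are position-aligned and of equal length, which holds for
    a 1:1 substitution cipher (one plaintext character per cipher symbol).
    """
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must be the same length")
    if not reference:
        return 0.0
    errors = sum(p != r for p, r in zip(predicted, reference))
    return errors / len(reference)


if __name__ == "__main__":
    # One wrong character out of 14 -> roughly 0.071
    print(symbol_error_rate("attack at down", "attack at dawn"))
```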

NSA be like…

If you enjoy this read, please give it a clap and share it with your friends! It really helps us out!

Don’t Worry There’s a Stack Exchange for Crypto Nerds

OpenAI Dropping Jewels

You have probably already heard about this week’s model drops from OpenAI, so I’ll save you the recap. I’ve added their two blog posts in case you want to catch up. This week I also shared a Colab notebook for CLIP on LinkedIn, and it got a good reception, so I’m appending it here in case you’re interested:

Colab of the Week | CLIP

DALL-E Blog

DALL·E: Creating Images from Text

CLIP Blog

CLIP: Connecting Text and Images

DALL-E Replication Already on GitHub

Surprise! Someone has already replicated DALL-E in PyTorch.

pip install dalle-pytorch

Object Storage Search Engine

Thank your local hacker

Hey, you know how, when you set up an S3 bucket or other object storage, you can choose between a public and a private setting? Well, have you ever wondered what it would look like if someone harvested all the public bucket URLs so you could search them openly?

Inside the Rabbit Hole

The Ecco library lets you visualize why language models bust the moves they do. It is mostly focused on autoregressive models (e.g., GPT-2/3 models) and currently has two notebooks: one for visualizing neuron activations and one for input saliency.

It is built on top of PyTorch and Transformers.
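Input saliency scores how much each input token contributed to a model’s output. One common recipe is gradient × input: multiply each token embedding element-wise by the gradient of the output score with respect to that embedding, take the norm, and normalize across tokens. The toy sketch below uses a hypothetical linear scorer so the gradient can be written down by hand; it is not Ecco’s API, and a real tool would compute gradients through the actual model with PyTorch autograd.

```python
import math


def grad_times_input_saliency(
    embeddings: list[list[float]], weights: list[list[float]]
) -> list[float]:
    """Toy gradient x input saliency for a hypothetical linear scorer.

    The score is sum_t dot(weights[t], embeddings[t]), so the gradient of the
    score with respect to embeddings[t] is exactly weights[t]. Each token's
    saliency is the L2 norm of (gradient * embedding), normalized to sum to 1.
    """
    raw = []
    for grad, emb in zip(weights, embeddings):
        raw.append(math.sqrt(sum((g * x) ** 2 for g, x in zip(grad, emb))))
    total = sum(raw)
    # Guard against an all-zero attribution to avoid dividing by zero.
    return [r / total if total else 0.0 for r in raw]


if __name__ == "__main__":
    # Two hypothetical tokens with 2-dimensional embeddings.
    print(grad_times_input_saliency([[1.0, 0.0], [0.0, 2.0]], [[3.0, 0.0], [0.0, 0.5]]))
```

Normalizing to a distribution over tokens is what makes the scores easy to render as a heat map over the input text.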

Text-to-Speech with Swag

15.ai came on the scene in 2019 with its awesome text-to-speech demo, and it has been refining its models’ capabilities ever since. You can type in text and get deep-learning-generated speech in the voices of various characters, ranging from HAL 9000 from 2001: A Space Odyssey to Doctor Who.

15.ai: Natural TTS with minimal data

ML Metadata

Google released Machine Learning Metadata (MLMD), a library for keeping track of your entire ML workflow. It lets you version your models and datasets, so you can figure out why things go wrong when they do.

ML Metadata: Version Control for ML

El GitHub:

MLMD API Class:

mlmd.metadata_store.MetadataStore | TFX | TensorFlow
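MLMD’s data model is built around artifacts (e.g., datasets and models), executions (e.g., training runs), and events that link executions to their input and output artifacts. The hypothetical in-memory sketch below mimics that idea in plain Python to show how lineage lets you trace a model back to the data that produced it. It is NOT the MLMD API, which persists this information to a database through `MetadataStore`; every class and method name here is made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ToyLineageStore:
    """Hypothetical in-memory stand-in for a metadata store (NOT the MLMD API)."""

    artifacts: dict[int, dict] = field(default_factory=dict)   # id -> properties
    executions: dict[int, dict] = field(default_factory=dict)  # id -> properties
    # Each event is (execution_id, artifact_id, "input" or "output").
    events: list[tuple[int, int, str]] = field(default_factory=list)

    def add_artifact(self, **props) -> int:
        artifact_id = len(self.artifacts) + 1
        self.artifacts[artifact_id] = props
        return artifact_id

    def add_execution(self, inputs: list[int], outputs: list[int], **props) -> int:
        execution_id = len(self.executions) + 1
        self.executions[execution_id] = props
        self.events += [(execution_id, a, "input") for a in inputs]
        self.events += [(execution_id, a, "output") for a in outputs]
        return execution_id

    def inputs_of(self, artifact_id: int) -> list[int]:
        """Return ids of artifacts consumed by the execution(s) that produced `artifact_id`."""
        producers = {e for e, a, kind in self.events if a == artifact_id and kind == "output"}
        return [a for e, a, kind in self.events if e in producers and kind == "input"]


if __name__ == "__main__":
    store = ToyLineageStore()
    data_v1 = store.add_artifact(kind="dataset", uri="data/train_v1", version=1)
    model = store.add_artifact(kind="model", uri="models/run_42")
    store.add_execution(inputs=[data_v1], outputs=[model], kind="training")
    print(store.inputs_of(model))  # [1]: the model was trained on dataset artifact 1
```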

NNs for iOS with Wolfram Language

Wolfram came out of left field, and he brought a smartphone. A recent Wolfram blog post shows how to train an image classifier, export it to ONNX, and then convert it to Core ML so it can run on iOS devices. Includes code!

Deploy a Neural Network to Your iOS Device Using the Wolfram Language-Wolfram Blog

Machine Learning Index w/ Code

A huge index of several hundred projects with code covering all things machine learning, including computer vision and NLP. You can find the Super Duper NLP Repo on it.

ashishpatel26/500-AI-Machine-learning-Deep-learning-Computer-vision-NLP-Projects-with-code

Repo Cypher

A collection of recently released repos that caught our eye

Ask2Transformers

Ask2Transformers automatically annotates text data, aka zero-shot.

Subformer

A parameter-efficient Transformer-based model built on the newly proposed Sandwich-style parameter-sharing technique.

SF-QA

An open-domain QA evaluation library that enables efficient reader comparison, reproducible research, and knowledge sources for applications.

ARBERT & MARBERT

Arabic BERT returns for a second week in a row on the Cypher. This time it’s ARBERT and MARBERT. The release also includes ArBench, a benchmark for Arabic NLU based on 41 datasets across 5 different tasks.

CRSLab

CRSLab is an open-source toolkit for building Conversational Recommender Systems (CRS). It includes models and datasets.

Dataset of the Week: StrategyQA

What is it?

“StrategyQA is a question-answering benchmark focusing on open-domain questions where the required reasoning steps are implicit in the question and should be inferred using a strategy. StrategyQA includes 2,780 examples, each consisting of a strategy question, its decomposition, and evidence paragraphs.”

Sample

Example 1

“Is growing seedless cucumber good for a gardener with entomophobia?”

Answer: Yes

Explanation: Seedless cucumber fruit does not require pollination. Cucumber plants need insects to pollinate them. Entomophobia is a fear of insects.

Example 2

“Are chinchillas cold-blooded?”

Answer: No

Explanation: Chinchillas are rodents, which are mammals. All mammals are warm-blooded.

Example 3

“Would Janet Jackson avoid a dish with ham?”

Answer: Yes

Explanation: Janet Jackson follows an Islamic practice. Islamic culture avoids eating pork. Ham is made from pork.

Where is it?

StrategyQA Dataset – Allen Institute for AI

Every Sunday we do a weekly round-up of NLP news and code drops from researchers around the world.

For complete coverage, follow our Twitter: @Quantum_Stat

The NLP Cypher | 01.10.21 was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.

Published via Towards AI

