This AI newsletter is all you need #20

Last Updated on December 13, 2022 by Editorial Team

Author(s): Towards AI Editorial Team

Originally published on Towards AI.

What happened this week in AI by Louis

eDiffi, DALL·E, and Stable Diffusion. This is, once again, what happened this week in AI. There’s nothing new about Stable Diffusion, but is it here to stay? Will there be open-source competitors, or even worthy proprietary ones? eDiffi, as we cover below, is a new proprietary competitor by NVIDIA. NVIDIA claims that it achieves better results and higher fidelity, and gives the user more control. Yet, it is not open-source. Along the same lines, OpenAI just opened its DALL·E beta to the public. That means anyone can pay to use it or its API.

Are you interested in such models if you cannot access their code? What if they build an amazing and super user-friendly tool at a relatively cheap price? Isn’t it worth it? Personally, I think so. But I also think it slows down progress, and I’d much rather see code and checkpoints made available for other researchers (at least) to implement and build upon. I am convinced the next few conferences like CVPR will have tons of papers that build on diffusion models and improve them without retraining, or with only quick fine-tuning, simply thanks to Stable Diffusion. Still, I’d love to have your take on this, and this is what this week’s poll is about. See more below!

*Reminder* Activeloop is giving away two NeurIPS tickets for our community (in-person & virtual! Sorry for the error last week)! At the NeurIPS conference, you can attend amazing talks, workshops, and tutorials, meet the best researchers, and discover many interesting brands and tools in our industry. Just join our Discord community and send me a private message (@Louis B#1408) to have a chance to win a ticket to the in-person event!

Hottest News

- A weekly newsletter you should follow!

The newsletter I am referring to is AlphaSignal. If you like my daily newsletter, you’ll love Lior’s weekly recap of the top 1% of papers, tweets, GitHub repos, and more. He developed an algorithm to figure out the most interesting papers, repos, and tweets from the research community and manually curates the last few to ensure quality. I recently discovered it and it is awesome. You will love it and the quality is simply out of this world! Have a look at his newsletter and let me know what you think!

- Direct Data Access: a new way to interface with your data

DagsHub is launching Direct Data Access, a new and improved way to interact with your data that provides an intuitive interface to stream and upload data for any ML project. It doesn’t require any adaptation, and lets you keep all the benefits of a versioned and shareable dataset based on open-source tools. If you want to dive into the docs, you can go there now. Let me know if you find this useful!

- FastDup | A tool for gaining insights from a large image collection

FastDup is a tool for gaining insights from a large image collection. It can find anomalies, duplicate and near-duplicate images, and clusters of similar images, and it can learn the normal behavior of, and temporal interactions between, images. It can be used for smart subsampling to build a higher-quality dataset, for outlier removal, and for novelty detection, flagging new information to be sent for tagging.
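To make the idea of near-duplicate detection concrete, here is a minimal sketch in plain Python. It is not FastDup's API or method: it uses a simple average hash, and the function names (`average_hash`, `hamming`, `near_duplicates`) and the tiny 2x2 "images" are made up for illustration.

```python
from itertools import combinations


def average_hash(pixels):
    """Return one bit per pixel: 1 if the pixel is brighter than the image mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return tuple(1 if value > mean else 0 for value in flat)


def hamming(a, b):
    """Count the positions where two equal-length hashes differ."""
    return sum(x != y for x, y in zip(a, b))


def near_duplicates(images, max_distance=1):
    """Return name pairs whose hashes differ in at most max_distance bits."""
    hashes = {name: average_hash(pixels) for name, pixels in images.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(hashes), 2)
        if hamming(hashes[a], hashes[b]) <= max_distance
    ]


# Hypothetical 2x2 grayscale images (values 0-255), purely illustrative.
images = {
    "cat_a": [[10, 200], [220, 30]],
    "cat_b": [[12, 198], [215, 35]],  # slightly re-encoded copy of cat_a
    "dog": [[200, 10], [30, 220]],
}

print(near_duplicates(images))  # [('cat_a', 'cat_b')]
```

Comparing every pair like this only works for small collections. A tool built for large image sets would need a far more scalable similarity search.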

Most interesting papers of the week

- eDiffi: Text-to-Image Diffusion Models with Ensemble of Expert Denoisers

eDiffi is a new-generation generative AI content creation tool that offers unprecedented text-to-image synthesis, with instant style transfer and intuitive painting-with-words capabilities. Learn more in our article!

- UPainting: Unified Text-to-Image Diffusion Generation with Cross-modal Guidance

The authors propose an approach that unifies simple and complex scene image generation. It combines a large-scale Transformer language model, for understanding language, with an image-text matching model, for capturing cross-modal semantics and style. They report that this improves both sample fidelity and image-text alignment.

- Pop2Piano: Pop Audio-Based Piano Cover Generation

Pop2Piano is a Transformer network that generates piano covers from waveforms of pop music. Generate a piano cover of any pop song! Here is the code.

Enjoy these papers and news summaries? Get a daily recap in your inbox!

The Learn AI Together Community section!

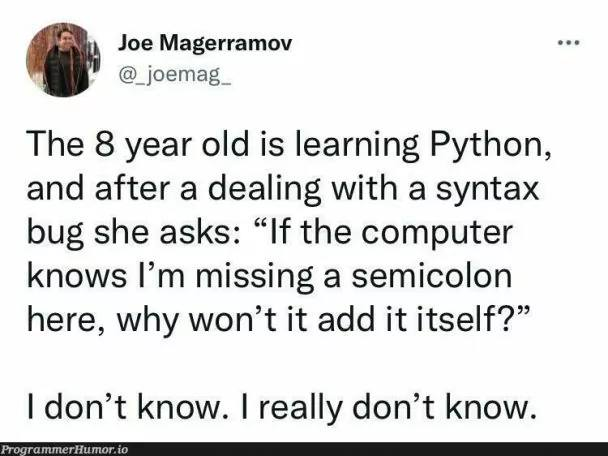

Meme of the week!

This is the first step to real AGI (and we are dreaming about it). Meme credit to AgressiveDisco#4516.

Featured Community post from the Discord

A member of the community, tomi.in.ai#8822, has made a Foundational Research Paper Summary video on the 2015 paper that changed a lot of things: U-Net.

It’s a popular algorithm widely used for image segmentation tasks.

Watch his video here and give him a like for us!

P.S.: the idea of his channel is to make high-level summaries of most of the popular computer vision algorithms out there.

AI poll of the week!

TAI Curated section

Article of the week

5 Types of ML Accelerators by Luhui Hu

Recent years have seen exponential growth in the scale of deep learning models. As a result, scalability and efficiency have become two major challenges for deep learning training and serving. Are deep learning systems stuck in a rut? No! The author summarizes five primary types of ML accelerators or accelerating areas.

Our must-read article

Jupyter Extensions to Improve your Data Workflow by Cornellius Yudha Wijaya

If you are interested in publishing with Towards AI, check our guidelines and sign up. We will publish your work to our network if it meets our editorial policies and standards.

Ethical Take on the Blueprint for an AI Bill of Rights by Lauren

I’m late to this analysis train, but the Blueprint for an AI Bill of Rights was (somewhat) recently released! It details a set of 5 guidelines that are intended to shape how AI is deployed, and I highly recommend reading through it. Many have taken it to be one of the greatest steps in moving toward applying AI ethics in a more practical and authoritative context, especially as we’ve seen failures of self-regulation like the recent firing of Twitter’s entire ethical AI team.

When the AI Bill of Rights was announced, I was really excited because I had hoped this set of guidelines would act as a precursor to more enforceable legislation, which has since been confirmed. The Blueprint for an AI Bill of Rights also serves as a statement that the U.S. is working to catch up on its AI ethics shortcomings, as we’re far behind other regions such as the E.U. in this regard.

However, we haven’t cracked the code. Others have pointed out that this set of rules fails to address important issues, including this piece from The Conversation about the AI Bill of Rights by Professor Christopher Dancy. I usually advocate for general approaches that can later be specialized for more detailed applications, but Dancy’s piece highlighted that by not addressing specific routes of oppression (namely systemic racism), the Blueprint fails to adequately address the issue. It falls short of where we need to land for an application of AI ethics to be effective against some of the worst cases of harm and bias. This is a clear case in which naming the issue specifically is necessary.

This may be rectified in future legislation, which I very much hope happens. As grateful as I am to have the U.S. government taking a stance and planning action to shape where AI needs to go, I can’t help but draw the parallel with other AI ethics issues that need to be explicitly addressed, such as neurodivergent users being exploited by attention-cultivating algorithms. Not every issue can be named, but the big ones ought to be, and a failure to do so may result in the generality being exploited beyond its intended protections. I will be keeping a very close eye on what comes of these guiding principles and how legislation advances in response!

Job offers

Data Scientist @ Alethea Group (Remote, US)

Machine Learning Engineer, Infrastructure @ Earnin (Remote)

AI Content Fellowship @ Deepgram (Remote)

Machine Learning Engineer, Copilot Model Improvements @ GitHub (Remote, US)

Machine Learning Engineering Manager @ Verana Health (Remote)

AI Implementation Manager (Healthcare) @ ClosedLoop (Remote)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net.

Note: Content contains the views of the contributing authors and not Towards AI.