This AI newsletter is all you need #13

Last Updated on January 6, 2023 by Editorial Team

Author(s): Towards AI Editorial Team

Originally published on Towards AI, the World’s Leading AI and Technology News and Media Company.

What happened this week in AI

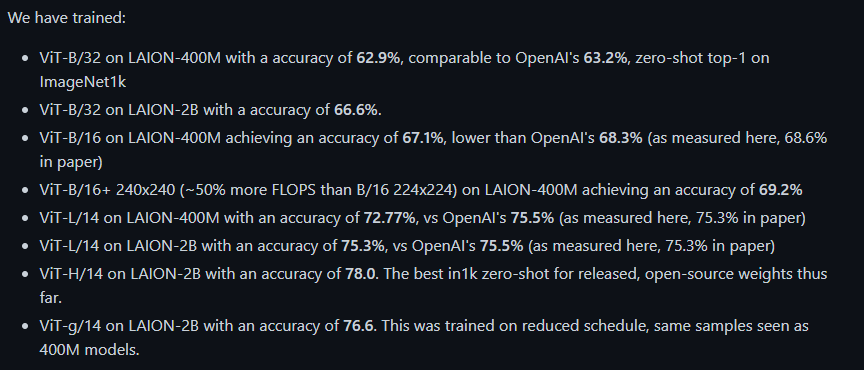

This week, my attention was on Emad and the amazing work he and his team (Stability.ai) have been involved with. Emad announced OpenCLIP, an open-source version of CLIP that beats state-of-the-art CLIP results by producing better paired text-image encodings. This should have a dramatic impact on current research: thousands of researchers will be able to use such a pre-trained, open-source model to create amazing new applications and models involving images and text.

What is OpenCLIP exactly? OpenCLIP is an open-source implementation of OpenAI’s CLIP (Contrastive Language-Image Pre-training). And what does it bring exactly? Why is this so cool? It’s cool because, as with Stable Diffusion, the goal is to bring CLIP to you, the regular researcher (in terms of computing resources, not in terms of skills; you are definitely better than a regular researcher if you are reading this! 😉). In other words, they want to make it easier to train and study models with contrastive image-text supervision. As they mention, “our starting point is an implementation of CLIP that matches the accuracy of the original CLIP models when trained on the same dataset.” The result is a set of new SOTA pre-trained models you can use, thanks to more efficient implementations and open-source code. Check out their repository to learn more and try their models!
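If “contrastive image-text supervision” sounds abstract, here is a minimal sketch of the idea in plain Python. It is not OpenCLIP’s code: the function names, the toy 2-D embeddings, and the fixed temperature of 0.07 are all assumptions made for illustration. The loss should be low when each image embedding is closest to its own caption’s embedding and high when the pairs are mixed up.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def cosine_similarity_matrix(image_embs, text_embs):
    """Entry [i][j] is the cosine similarity between image i and text j."""
    images = [l2_normalize(v) for v in image_embs]
    texts = [l2_normalize(v) for v in text_embs]
    return [[sum(a * b for a, b in zip(img, txt)) for txt in texts] for img in images]

def cross_entropy(logits, target_index):
    """Cross-entropy of one row of logits against the correct index (stable log-sum-exp)."""
    top = max(logits)
    log_sum = top + math.log(sum(math.exp(x - top) for x in logits))
    return log_sum - logits[target_index]

def clip_style_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss: image i should match text i, and vice versa.

    The temperature value is an illustrative assumption, kept fixed here.
    """
    sims = cosine_similarity_matrix(image_embs, text_embs)
    logits = [[s / temperature for s in row] for row in sims]
    n = len(logits)
    # Image-to-text direction: each row should peak on the diagonal.
    image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # Text-to-image direction: each column should peak on the diagonal.
    columns = [list(col) for col in zip(*logits)]
    text_to_image = sum(cross_entropy(columns[i], i) for i in range(n)) / n
    return (image_to_text + text_to_image) / 2

# Toy, hand-made embeddings (hypothetical values, not model outputs).
image_embs = [[1.0, 0.0], [0.0, 1.0]]
matched_text = [[0.9, 0.1], [0.1, 0.9]]   # caption i points the same way as image i
swapped_text = [[0.1, 0.9], [0.9, 0.1]]   # captions assigned to the wrong images

matched_loss = clip_style_loss(image_embs, matched_text)
swapped_loss = clip_style_loss(image_embs, swapped_text)
print(f"matched pairs: {matched_loss:.4f}, swapped pairs: {swapped_loss:.4f}")
```

In a real model, the embeddings come from an image encoder and a text encoder trained together, and training pushes this loss down over very large batches of image-caption pairs.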

Hottest News

- Diffusion Bee, a Stable Diffusion GUI App for M1 Macs!

“Diffusion Bee is the easiest way to run Stable Diffusion locally on your M1 Mac. Comes with a one-click installer. No dependencies or technical knowledge needed.”

- MIT researchers have made DALL-E more creative!

As the article highlights, the researchers developed a new method that uses multiple models to create more complex images with better understanding. The method, called Composable-Diffusion, achieves better results by generating an image using a set of diffusion models, with each responsible for modeling a specific component of it.

- I was nominated in the 2022 Noonies by Hackernoon!

I would love it if you could support me there and vote for my work if you like it! (both for tech YouTube and data science newsletter). Thank you in advance for this amazing community! 😊🙏

YT: https://www.noonies.tech/2022/emerging-tech/2022-top-tech-youtuber

Newsletter: https://www.noonies.tech/2022/emerging-tech/2022-best-data-science-newsletter

This issue is brought to you thanks to Delta Academy.

Most interesting papers of the week

- im2nerf: Image to Neural Radiance Field in the Wild

A learning framework that predicts a continuous neural object representation given a single input image in the wild, supervised by only segmentation output from off-the-shelf recognition methods.

- Test-Time Prompt Tuning for Zero-Shot Generalization in Vision-Language Models

Test-Time Prompt Tuning (TPT): A method that can learn adaptive prompts on the fly with a single test sample.

- StoryDALL-E: Adapting Pretrained Text-to-Image Transformers for Story Continuation

“We first propose the task of story continuation, where the generated visual story is conditioned on a source image, allowing for better generalization to narratives with new characters. […] Our work demonstrates that pretrained text-to-image synthesis models can be adapted for complex and low-resource tasks like story continuation.”

Enjoy these papers and news summaries? Get a daily recap in your inbox!

The Learn AI Together Community section!

Meme of the week!

Oh no…

EDA stands for Exploratory Data Analysis and is the process of investigating the data you will use in an ML model before thinking about which model to apply.

Meme shared by friedliver#0614.

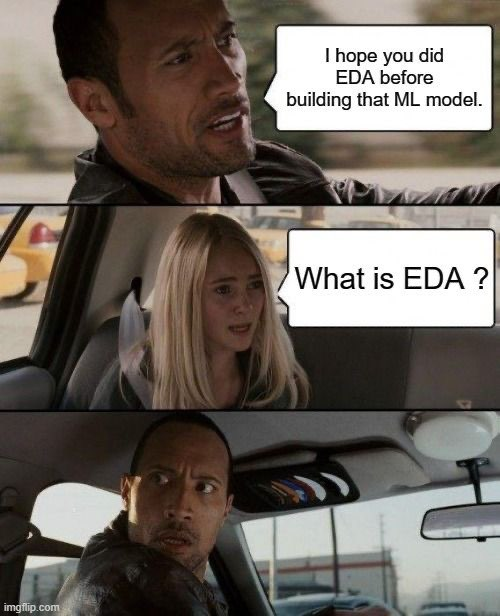

Featured Community post from the Discord

Such a cool project by deep2universe#6939 from the Learn AI community. The extension runs on any YouTube video and performs pose and motion detection on the people in it. Quite cool! Unfortunately, as he mentions, the author has to stop development of the extension, but he has open-sourced the code for further use. We strongly encourage anyone looking to tackle an interesting project to reach out to deep2universe on Discord to take on his work. It’s a fantastic project for people interested in computer vision, especially motion or pose detection.

Watch more in the demo video:

Download the Chrome extension.

Check out the code:

GitHub – deep2universe/YouTube-Motion-Tracking: YouTube™ AI extension for motion tracking

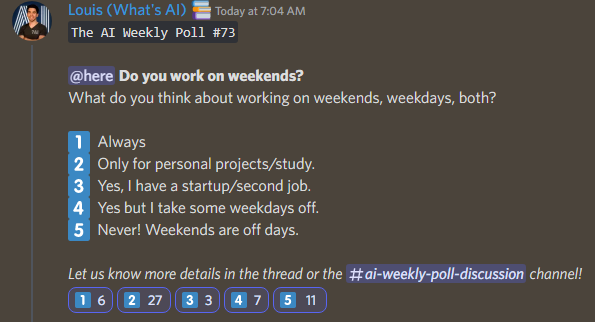

AI poll of the week!

What do you think about working on weekends, weekdays, or both? Join the discussion on Discord.

TAI Curated section

Article of the week

Difference Between Normalization and Standardization by Chetan Ambi.

One of the crucial processes in the machine learning pipeline is feature scaling. Normalization and standardization are the two most commonly used methods for feature scaling. But what is the difference between them, and when should you use each? This is a very common question among people who have just started their data science journey. The author beautifully explains the difference using formulas, visualizations, and code.
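For a quick feel of the difference before reading the article, here is a small sketch of our own (not the author’s code). It assumes the common definitions: normalization as min-max rescaling to the range [0, 1], and standardization as subtracting the mean and dividing by the standard deviation. The function names and the toy `ages` list are hypothetical, and using the population standard deviation is a choice made for this example; some tools use the sample standard deviation instead.

```python
import statistics

def min_max_normalize(values):
    """Rescale values linearly so the smallest becomes 0 and the largest becomes 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant feature carries no spread; map everything to 0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Shift and scale values to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]

# Toy feature column (made-up numbers).
ages = [18, 22, 30, 45, 60]

normalized = min_max_normalize(ages)
standardized = standardize(ages)

print("normalized:  ", [round(v, 3) for v in normalized])
print("standardized:", [round(v, 3) for v in standardized])
```

Normalized values are bounded, which makes them sensitive to a single extreme value, while standardized values are unbounded but centered on zero.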

Our must-read articles

Improve Your Classification Models With Threshold Tuning by Edoardo Bianchi

Imbalanced Data — Real-Time Bidding by Snehal Nair

If you are interested in publishing with Towards AI, check our guidelines and sign up. We will publish your work to our network if it meets our editorial policies and standards.

Lauren’s Ethical Take on increasing DALL-E’s creativity

I think it’s so incredible that this model is able to adhere to natural language prompts, thanks to MIT researchers! However, I don’t quite agree with the general claim that this ability increases the model’s creativity. This enhancement appears more to be focused on accuracy, which creativity as a concept does not always correlate with. Art often requires breaking the rules or working with accidents, and this “error” is part of the creative process and often makes art as amazing as it is. Increasing the model’s ability to color in the lines doesn’t follow this principle. Similarly, increased complexity does not necessarily correlate with increased creativity either — incredibly simple things can be incredibly creative.

A counterargument to my first stance might be that exercising abilities within the natural language constraints IS actually more of a creative exercise than being allowed to go off script. However, I will reassert that breaking parameters can be more creative than working within them. Part of what made the initial wave of DALL-E creations so impactful was this lack of regard for preconceived notions based on language (other than what it was trained on, of course!). We may lose some of that creative impression by optimizing for accuracy in this way. Whichever direction we choose will continue to shape how we attempt to cultivate creativity and the future of AI digital art tools!

Job offers

- Senior Software Engineer @ Captur (Remote, +/- 2 hours UK time)

- Senior ML Engineer @ Safe Security (Remote)

- ML/Algorithms Engineer @ Aurora Insight (Hybrid Remote)

- Data Scientist @ Electra (Remote)

- ML Research Intern @ Genesis Therapeutics (Burlingame, CA)

- Senior Staff Data Scientist @ One Concern (Remote)

- Research Scientist — Speech recognition @ Abridge (Remote)

- Computer Vision Scientist @ Percipient AI (Santa Clara, CA)

- Senior Data Scientist @ EvolutionIQ (Remote)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net or post the opportunity in our #hiring channel on Discord!

This AI newsletter is all you need #13 was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.

Note: Content contains the views of the contributing authors and not Towards AI.