Understanding LangChain 🦜️🔗: PART 1

Last Updated on July 25, 2023 by Editorial Team

Author(s): Chinmay Bhalerao

Originally published on Towards AI.


Theoretical understanding of chains, prompts, and other important modules in LangChain

In day-to-day work, we often build end-to-end applications. Many AutoML platforms and CI/CD pipelines are available to automate ML pipelines. There are also tools like Roboflow and Andrew Ng's Landing AI for automating or building end-to-end computer vision applications.

Previously, if we wanted to build an LLM application on top of OpenAI or Hugging Face models, we had to wire everything together manually. Now two well-known libraries, Haystack and LangChain, help us build end-to-end applications or pipelines for LLMs. Let's look at LangChain in more detail.

What is LangChain?

LangChain is a framework for developing applications powered by language models. It aims to go beyond what can be achieved by calling a language model through a plain API. LangChain applications can also be agentic, meaning the language model can interact with its environment and adapt to it.

LangChain consists of a few modules. As the name suggests, chaining different modules together is its main purpose: the idea is to link the modules into a single chain and then use that chain to call all of them at once.

The modules consist of the following:

- Model

- Prompt

- Memory

- Chain

- Agents

- Callback

- Indexes

Let's start with,

Model:

As discussed in the introduction, the Model module mostly covers large language models (LLMs). A large language model is a neural network with a very large number of parameters, trained on vast quantities of unlabeled text. Tech giants have released various LLMs, such as:

- BERT by Google

- GPT-3 by OpenAI

- LaMDA by Google

- PaLM by Google

- LLaMA by Meta AI

- GPT-4 by OpenAI

- and many more…

LangChain makes interacting with large language models easier. Its interface and functionality help you integrate the power of LLMs into your application.

LangChain also provides async support for LLMs by leveraging the asyncio library. Calls to an LLM are network-bound, so async support is particularly useful when you want to call multiple LLMs concurrently: releasing the thread that handles a request lets the server use it for other tasks until the response is ready, which maximizes resource utilization. Currently, OpenAI, PromptLayerOpenAI, ChatOpenAI and Anthropic are supported, but async support for other LLMs is on the roadmap. You can use the agenerate method to call an OpenAI LLM asynchronously. You can also write a custom LLM wrapper if you want to use a model other than the ones supported in LangChain.
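To illustrate why concurrency helps with network-bound calls, here is a minimal sketch that uses only Python's asyncio. It does not call LangChain or any real model: fake_llm_call is a hypothetical stand-in that simply sleeps to imitate network latency, and the function names are my own.

```python
import asyncio
import time


async def fake_llm_call(prompt: str, delay: float = 0.2) -> str:
    """Hypothetical stand-in for a network-bound LLM request (not a LangChain API)."""
    await asyncio.sleep(delay)  # simulates waiting on the network
    return f"response to: {prompt}"


async def run_concurrently(prompts: list[str]) -> list[str]:
    # gather() starts all calls at once and returns results in input order
    return await asyncio.gather(*(fake_llm_call(p) for p in prompts))


def timed_run(prompts: list[str]) -> tuple[list[str], float]:
    """Run all prompts concurrently and report the wall-clock time taken."""
    start = time.perf_counter()
    results = asyncio.run(run_concurrently(prompts))
    return results, time.perf_counter() - start
```

With five prompts and a 0.2 second delay each, the concurrent run should take roughly the time of one call rather than the sum of all five, because the waits overlap.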

I used OpenAI for my application and mostly used Davinci, Babbage, Curie, and Ada models for my problem statement. Each model has its own pros, token usage counts, and use cases. You can read more about these models here.

Example 1:

# Importing modules

from langchain.llms import OpenAI

# Here we are using text-ada-001, but you can change the model

llm = OpenAI(model_name="text-ada-001", n=2, best_of=2)

# Ask anything

llm("Tell me a joke")

Output 1:

'\n\nWhy did the chicken cross the road?\n\nTo get to the other side.'

Example 2:

llm_result = llm.generate(["Tell me a poem"]*15)

llm_result.generations[-1]  # the n=2 generations returned for the last prompt

Output 2:

[Generation(text="\n\nWhat if love neverspeech\n\nWhat if love never ended\n\nWhat if love was only a feeling\n\nI'll never know this love\n\nIt's not a feeling\n\nBut it's what we have for each other\n\nWe just know that love is something strong\n\nAnd we can't help but be happy\n\nWe just feel what love is for us\n\nAnd we love each other with all our heart\n\nWe just don't know how\n\nHow it will go\n\nBut we know that love is something strong\n\nAnd we'll always have each other\n\nIn our lives."),

Generation(text='\n\nOnce upon a time\n\nThere was a love so pure and true\n\nIt lasted for centuries\n\nAnd never became stale or dry\n\nIt was moving and alive\n\nAnd the heart of the love-ick\n\nIs still beating strong and true.')]

Prompts:

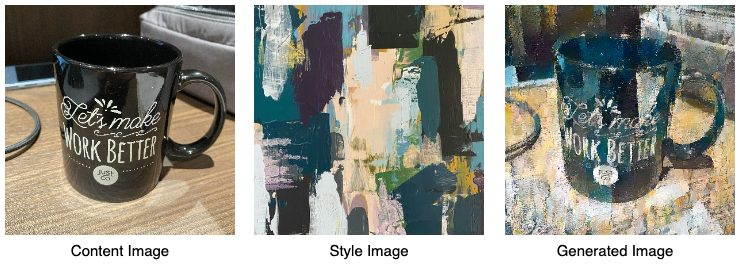

A prompt is the input we give to a system, and we refine it to make the answers more accurate or more specific to our use case. Often you may want more structured information back than just free text. Prompts are not limited to language models: many recent object detection and classification algorithms based on contrastive pretraining and zero-shot learning also accept prompts as input. For example, OpenAI’s CLIP and IDEA Research’s Grounding DINO use text prompts as input for predictions.

In LangChain, we can define a prompt template for the kind of answer we want and then plug it into the main chain for output prediction. Output parsers are also available to refine the results. An output parser is responsible for (1) instructing the model how the output should be formatted, and (2) parsing the output into the desired format (including retrying if necessary).
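The two responsibilities of an output parser can be sketched in plain Python. This is an illustrative sketch, not LangChain's implementation: CommaListParser and parse_with_retry are hypothetical names, and ask_model is assumed to be any function that takes a prompt string and returns the model's text.

```python
class CommaListParser:
    """Hypothetical parser: asks for a comma-separated list and parses it."""

    def get_format_instructions(self) -> str:
        # Responsibility (1): tell the model how to format its answer
        return "Answer with a comma-separated list only, e.g. `foo, bar, baz`."

    def parse(self, text: str) -> list[str]:
        # Responsibility (2): turn the raw text into a Python list
        items = [item.strip() for item in text.strip().split(",")]
        items = [item for item in items if item]
        if not items:
            raise ValueError(f"could not parse a list from {text!r}")
        return items


def parse_with_retry(parser, ask_model, prompt, max_attempts=2):
    """Call the model and parse its reply, asking again if parsing fails."""
    full_prompt = f"{prompt}\n{parser.get_format_instructions()}"
    last_error = ValueError("max_attempts must be at least 1")
    for _ in range(max_attempts):
        try:
            return parser.parse(ask_model(full_prompt))
        except ValueError as err:
            last_error = err
    raise last_error
```

The format instructions are appended to the prompt so the model knows the expected shape, and the retry loop covers the case where a reply still does not parse.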

In LangChain, prompts are provided as templates. A template is the blueprint of the prompt, or the specific format in which we want an answer. LangChain offers pre-designed prompt templates that can generate prompts for different types of tasks. In some situations, however, the default templates may not satisfy your requirements. In that case, we can create our own custom prompt template.

Example:

from langchain import PromptTemplate

# This template will act as a blueprint for the prompt

template = """

I want you to act as a naming consultant for new companies.

What is a good name for a company that makes {product}?

"""

prompt = PromptTemplate(

input_variables=["product"],

template=template,

)

prompt.format(product="colorful socks")

# -> I want you to act as a naming consultant for new companies.

# -> What is a good name for a company that makes colorful socks?
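To make the idea of a template as a blueprint more concrete, here is a rough standard-library sketch of what such a class might do: check that the declared input variables match the placeholders, then fill them in. SimplePromptTemplate is a hypothetical name and this is not how LangChain's PromptTemplate is implemented.

```python
from string import Formatter


class SimplePromptTemplate:
    """Hypothetical minimal template: validates variables, then formats."""

    def __init__(self, template: str, input_variables: list[str]):
        # Collect the {placeholder} names that actually appear in the template
        found = {name for _, name, _, _ in Formatter().parse(template) if name}
        if found != set(input_variables):
            raise ValueError(
                f"declared {sorted(input_variables)} but template uses {sorted(found)}"
            )
        self.template = template
        self.input_variables = list(input_variables)

    def format(self, **kwargs: str) -> str:
        missing = set(self.input_variables) - set(kwargs)
        if missing:
            raise KeyError(f"missing values for {sorted(missing)}")
        return self.template.format(**kwargs)


naming = SimplePromptTemplate(
    "What is a good name for a company that makes {product}?",
    input_variables=["product"],
)
```

Calling naming.format(product="colorful socks") fills the single placeholder, while a mismatch between the declared variables and the template text fails early, at construction time.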

Memory:

Chains and Agents in LangChain operate in a stateless mode by default, meaning that they handle each incoming query independently. However, there are certain applications, like chatbots, where it is of great importance to retain previous interactions, both over the short and long term. This is where the concept of “Memory” comes into play.

LangChain provides memory components in two forms. First, LangChain provides helper utilities for managing and manipulating previous chat messages, which are designed to be modular and useful regardless of their use case. Second, LangChain offers an easy way to integrate these utilities into chains. This makes them highly versatile and adaptable to any situation.

Example:

from langchain.memory import ChatMessageHistory

history = ChatMessageHistory()

history.add_user_message("hi!")

history.add_ai_message("whats up?")

history.messages

Output:

[HumanMessage(content='hi!', additional_kwargs={}),

AIMessage(content='whats up?', additional_kwargs={})]
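How a stored history makes a stateless model appear to remember can be sketched as follows. This is a simplified illustration under my own assumptions, not LangChain's memory classes: BufferMemory and chat are hypothetical names, and ask_model is any function that maps a prompt string to a reply.

```python
from dataclasses import dataclass, field


@dataclass
class BufferMemory:
    """Hypothetical buffer that stores (role, text) turns in order."""
    turns: list = field(default_factory=list)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def render(self) -> str:
        # One line per turn, e.g. "Human: hi!"
        return "\n".join(f"{role}: {text}" for role, text in self.turns)


def chat(memory: BufferMemory, ask_model, user_input: str) -> str:
    memory.add("Human", user_input)
    # The whole history goes into the prompt, so the model sees earlier turns
    prompt = f"{memory.render()}\nAI:"
    reply = ask_model(prompt)
    memory.add("AI", reply)
    return reply
```

Each call sends the full conversation so far, which is the simplest strategy; it also means the prompt grows with every turn.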

Chains:

Chains provide a way to combine various components into a unified application. For instance, a chain can be created that receives input from a user, formats it using a PromptTemplate, and then passes the formatted prompt to an LLM. More complex chains can be built by combining multiple chains with each other or with other components.

LLMChain is among the most widely used ways of querying an LLM object. It formats the prompt template using the provided input key values, along with memory key values (if they exist), sends the formatted string to the LLM, and returns the LLM's output.

After calling a language model once, the next step is often to make a series of calls to it. This is especially valuable when you want to use the output of one call as the input to another. In a simple sequential chain of this kind, each individual chain has a single input and a single output, and the output of one step is used as the input to the next.

# Here we are chaining everything

from langchain.chains import LLMChain

from langchain.chat_models import ChatOpenAI

from langchain.prompts import PromptTemplate

from langchain.prompts.chat import (

    ChatPromptTemplate,

    HumanMessagePromptTemplate,

)

human_message_prompt = HumanMessagePromptTemplate(

    prompt=PromptTemplate(

        template="What is a good name for a company that makes {product}?",

        input_variables=["product"],

    )

)

chat_prompt_template = ChatPromptTemplate.from_messages([human_message_prompt])

# Temperature controls randomness: the higher the temperature, the more random the answer

chat = ChatOpenAI(temperature=0.9)

# Final chain

chain = LLMChain(llm=chat, prompt=chat_prompt_template)

print(chain.run("colorful socks"))
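The sequential pattern described above, where each step has one input and one output and feeds the next, can be sketched without any library. The names make_step and run_sequential are hypothetical, and ask_model stands for any function that sends a prompt to a model and returns its text; this is an illustration of the idea, not LangChain's sequential chain classes.

```python
from typing import Callable, List

Step = Callable[[str], str]


def make_step(template: str, ask_model: Step) -> Step:
    """Build one step: fill the template's {input} slot, then call the model."""
    def step(text: str) -> str:
        return ask_model(template.format(input=text))
    return step


def run_sequential(steps: List[Step], first_input: str) -> str:
    value = first_input
    for step in steps:
        value = step(value)  # the output of one step is the input to the next
    return value
```

For example, one step could ask for a company name for a product and a second step could ask for a slogan for that name, with run_sequential passing the name from the first step into the second.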

Agents:

Some applications require not just a predetermined sequence of calls to LLMs and other tools, but a sequence that is not known in advance and depends on the user’s input. In these cases, an “agent” has access to a range of tools and, based on the user input, decides which of them, if any, to call.

According to the documentation, the high-level pseudocode of an agent looks something like this:

- Some user input is received.

- The agent decides which tool, if any, to use and what the input to that tool should be.

- That tool is then called with that tool input, and an observation is recorded (this is just the output of calling that tool with that tool input).

- That history of tool, tool input, and observation is passed back into the agent, and it decides what step to take next.

- This is repeated until the agent decides it no longer needs to use a tool, and then it responds directly to the user.
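The loop in the steps above can be written out as a small plain-Python sketch. Everything here is hypothetical and for illustration only: in a real agent the decide function would be an LLM call, whereas toy_decide is a hard-coded rule, and the power tool and max_steps limit are my own assumptions.

```python
def run_agent(decide, tools, user_input, max_steps=5):
    """decide(user_input, history) returns ("tool", name, tool_input) or ("final", answer)."""
    history = []  # list of (tool name, tool input, observation)
    for _ in range(max_steps):
        action = decide(user_input, history)
        if action[0] == "final":
            # The agent no longer needs a tool and answers the user directly
            return action[1], history
        _, name, tool_input = action
        observation = tools[name](tool_input)  # call the chosen tool
        history.append((name, tool_input, observation))
    raise RuntimeError("agent did not finish within max_steps")


def power_tool(expression: str) -> str:
    """Hypothetical tool: evaluates 'base^exponent' and returns a string."""
    base, exponent = expression.split("^")
    return str(round(float(base) ** float(exponent), 2))


def toy_decide(user_input, history):
    """Rule-based stand-in for the LLM's decision step."""
    if not history:
        return ("tool", "power", "25^0.5")
    return ("final", f"The answer is {history[-1][2]}")


tools = {"power": power_tool}
```

Running run_agent(toy_decide, tools, "What is 25 raised to the 0.5 power?") performs one tool call, records the observation in the history, and then returns the final answer on the second pass through the loop.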

Example:

from langchain.agents import load_tools

from langchain.agents import initialize_agent

from langchain.agents import AgentType

from langchain.llms import OpenAI

llm = OpenAI(temperature=0)

tools = load_tools(["serpapi", "llm-math"], llm=llm)

agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True)

agent.run("Who is Leo DiCaprio's girlfriend? What is her current age raised to the 0.43 power?")

Let's summarize everything in one snapshot attached below.

Understanding all the modules and how they chain together is important for building pipeline applications for large language models with LangChain. This was just a simple introduction to LangChain. In the next part of this series, we will work on real pipelines such as building pdfGPT, building conversational bots, answering questions over documents, and other applications. This application-based work will make these concepts clearer. LangChain’s documentation is easy to understand, but I have added my own thoughts to make it clearer. You can find LangChain’s documentation here.

[Edit: I added the next parts of LangChain series below so that you don't need to search for it in my profile]

Understanding LangChain 🦜️🔗: Part:2

Implementing LangChain practically for building custom data bots involves incorporating memory, prompt templates, and…

pub.towardsai.net

Tokens and Models: Understanding LangChain 🦜️🔗 Part:3

Understanding tokens and how to select OpenAI models for your use case, how API key pricing works

pub.towardsai.net


Signing off,

Chinmay
