GPT-3 from OpenAI is here and it’s a Monster

Last Updated on July 24, 2023 by Editorial Team

Author(s): Przemek Chojecki

Originally published on Towards AI.

Machine Learning

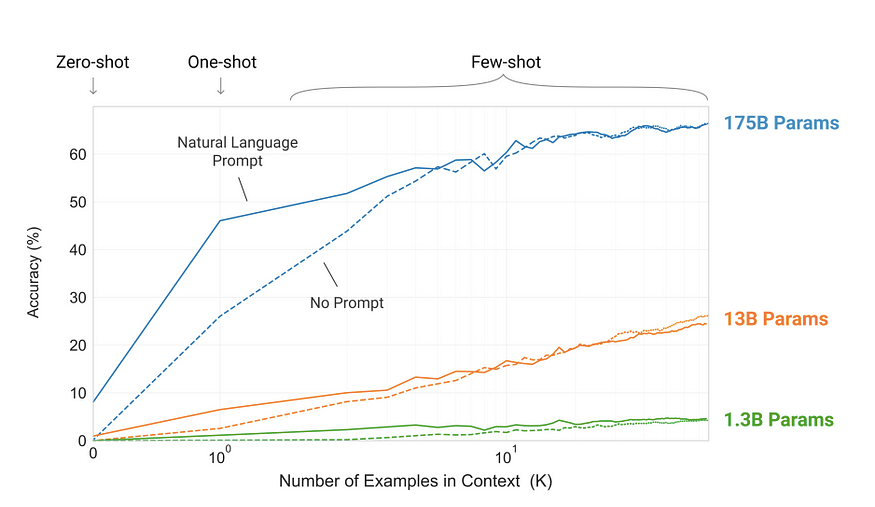

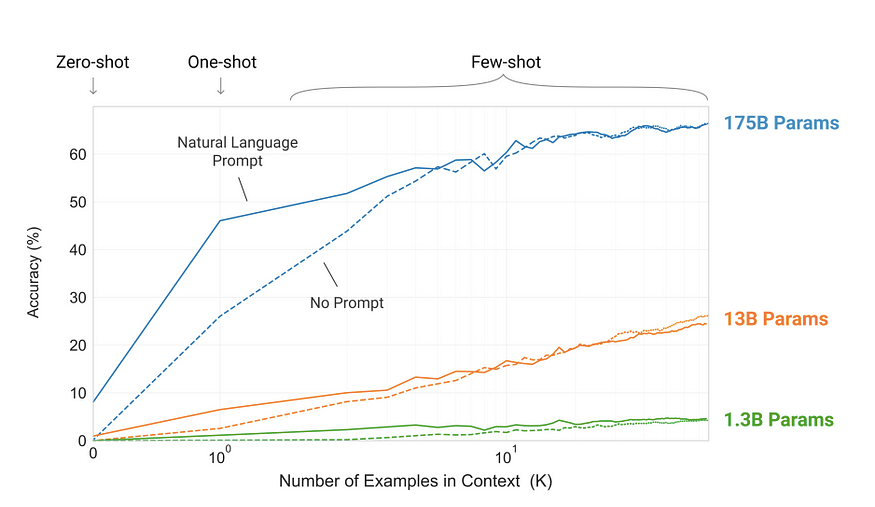

The newest GPT-3 from OpenAI has 175 billion parameters, making it about 10 times larger than the previous largest model, Microsoft's Turing-NLG (17 billion parameters). The behavior that emerges from a model this large is exciting: less fine-tuning is needed to perform specific NLP tasks like translation or question answering. What does that mean?

Language Models are Few-Shot Learners (GPT-3): the larger the model, the fewer examples and the less fine-tuning it needs to perform well on NLP tasks. Image from the OpenAI paper.
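To make "few-shot" concrete, here is a minimal sketch of how such a prompt might be assembled for English-to-French translation. The helper name `build_few_shot_prompt`, the `=>` separator, and the example word pairs are illustrative assumptions, not an official OpenAI interface. The sketch only builds the text; it does not call any model.

```python
# Minimal sketch: assemble a few-shot prompt as plain text.
# build_few_shot_prompt is a hypothetical helper, not part of any library.

def build_few_shot_prompt(task, examples, query):
    """Return a prompt with a task description, solved examples, and an open query."""
    lines = [task]
    for source, target in examples:
        # Each solved example shows the model the expected input/output pattern.
        lines.append(f"{source} => {target}")
    # The final line is left unfinished so the model can complete it.
    lines.append(f"{query} =>")
    return "\n".join(lines)


# Illustrative example pairs (assumed for this sketch).
examples = [
    ("sea otter", "loutre de mer"),
    ("peppermint", "menthe poivrée"),
]

prompt = build_few_shot_prompt("Translate English to French:", examples, "cheese")
print(prompt)
```

The point of the design is that the examples live in the input text rather than in a training set: no weights are updated, and changing the task means changing the prompt, not retraining the model.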

Imagine you want to build a model for translation from English to French. You’d take a pre-trained language model (say BERT) and then…


