Get Started With Google Gemini Pro Using Python in 5 Minutes

Last Updated on February 15, 2024 by Editorial Team

Author(s): Dipanjan (DJ) Sarkar

Originally published on Towards AI.

Introduction

Google Gemini Pro is part of Gemini, Google’s latest family of AI models, which Google announced as its most capable and general AI model to date. Gemini represents a significant step forward in Google’s AI development and is designed to handle a wide range of tasks with state-of-the-art performance across many leading benchmarks. Gemini Pro, along with Gemini Ultra and Gemini Nano, was introduced to mark the beginning of what Google DeepMind calls the Gemini era, aiming to unlock new opportunities for people everywhere by leveraging AI’s capabilities.

Gemini Pro was launched globally in January 2024, following a collaboration with Samsung to integrate Gemini Nano and Gemini Pro into the Galaxy S24 smartphone lineup. In fact, Google’s ChatGPT competitor, the Bard assistant app, was renamed Gemini on Feb 8, 2024, just last week as of this writing. We also saw the introduction of “Gemini Advanced with Ultra 1.0” through the AI Premium tier of the Google One subscription service.

One of Gemini Pro’s key features is its API, which lets developers quickly integrate AI-powered functionality into their applications. The API can be used from a variety of programming languages, including Python, which is what we will use here to show you how to get started with the Gemini Pro large language model (LLM) for free (as of Feb 2024)!

Gemini Essentials

Google’s Gemini is a suite of AI models designed to handle a wide array of tasks, including content generation and problem-solving with both text and image inputs. Here’s a brief overview of the different Gemini models you can access easily via APIs:

Gemini API Pricing

As of this writing (Feb 13, 2024), the Gemini Pro API is free to use. However, my gut tells me Google will soon introduce token-based pricing, as the pricing page on their website already suggests.
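If token-based pricing does arrive, estimating a request’s cost is simple arithmetic: tokens in and tokens out, each multiplied by a per-1,000-token rate. Below is a minimal sketch; the function name `estimate_cost` and the rates in the demo are hypothetical placeholders for illustration, not Google’s actual prices.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Estimate the cost of one request under per-1,000-token pricing.

    Prices are parameters because paid rates were not final at the time of
    writing; plug in whatever the official pricing page lists.
    """
    input_cost = input_tokens / 1000 * price_in_per_1k
    output_cost = output_tokens / 1000 * price_out_per_1k
    return input_cost + output_cost


# Hypothetical rates, for illustration only (not Google's actual prices)
cost = estimate_cost(input_tokens=2_000, output_tokens=500,
                     price_in_per_1k=0.001, price_out_per_1k=0.002)
print(f"Estimated cost: ${cost:.4f}")
```

To get real token counts for a prompt, the SDK version I used offered `model.count_tokens(...)`, whose result has a `total_tokens` field.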

Getting Started with Gemini Pro and Python

Let’s now build some basic LLM functionality using the Gemini Pro API and Python. We will show you how to get an API key and then use the relevant Gemini LLMs from Python.

Getting Your API Key from Google AI Studio

Google AI Studio is a free, web-based tool that allows you to quickly develop prompts and obtain an API key for app development. You can sign into Google AI Studio with your Google account and get your API key from here.

Remember to store the key somewhere safe and do NOT expose it on a public platform like GitHub.

Google Gemini Pro is not yet accessible in all countries. If you cannot access it yet, expect it to become available soon, or you could use a VPN. Check the available regions here.

Using Gemini Pro API with Python for Text Inputs

To start using the Gemini Pro API, we need to install the google-generativeai package from PyPI or GitHub:

pip install -q -U google-generativeai

I have saved my API key in a YAML file so that I can load it at runtime without exposing the key anywhere in my code. I load this file and read the API key into a variable as follows.

import yaml

# gemini_key.yml holds a top-level entry named gemini_key with your API key
with open('gemini_key.yml', 'r') as file:
    api_creds = yaml.safe_load(file)

GOOGLE_API_KEY = api_creds['gemini_key']
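If you would rather not keep a key file around, an environment variable is a common alternative. The sketch below assumes a variable named `GOOGLE_API_KEY` (my choice for illustration); `get_api_key` is a hypothetical helper, and it accepts an optional mapping so it can be tried without touching your real environment.

```python
import os
from typing import Mapping, Optional


def get_api_key(env: Optional[Mapping[str, str]] = None,
                var_name: str = "GOOGLE_API_KEY") -> str:
    """Read the Gemini API key from an environment variable.

    `env` defaults to the real process environment; passing a dict makes the
    function easy to test. Surrounding whitespace is stripped.
    """
    source = os.environ if env is None else env
    key = source.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable first.")
    return key


# Demo with an explicit mapping instead of the real environment
print(get_api_key({"GOOGLE_API_KEY": "  my-test-key  "}))
```

After running `export GOOGLE_API_KEY=...` in your shell, you could then call `genai.configure(api_key=get_api_key())`.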

The next step is to connect to the Gemini Pro model via the API: first configure the client with your API key, and then load the model (or rather, create a connection to the model running on Google’s servers).

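import google.generativeai as genai

# Authenticate all subsequent API calls with your key
genai.configure(api_key=GOOGLE_API_KEY)

# 'gemini-pro' is the text-only model; this creates a handle to the hosted model
model = genai.GenerativeModel('gemini-pro')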
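If you are unsure which model names your key can use, the SDK provides `genai.list_models()`, and each returned model reports its `name` and `supported_generation_methods`. The sketch below filters for models that support `generateContent`; `text_generation_models` is a hypothetical helper name, and the demo uses made-up stand-in objects so it runs without an API key.

```python
from types import SimpleNamespace
from typing import Iterable, List


def text_generation_models(models: Iterable) -> List[str]:
    """Return names of models that support the generateContent method.

    `models` is any iterable of objects exposing `.name` and
    `.supported_generation_methods`, such as the results of genai.list_models().
    """
    return [
        m.name
        for m in models
        if "generateContent" in m.supported_generation_methods
    ]


# Offline demo with hypothetical stand-in objects
fake_models = [
    SimpleNamespace(name="models/gemini-pro",
                    supported_generation_methods=["generateContent", "countTokens"]),
    SimpleNamespace(name="models/embedding-001",
                    supported_generation_methods=["embedContent"]),
]
print(text_generation_models(fake_models))
```

With a configured key, you would pass `genai.list_models()` instead of `fake_models`.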

We are now ready to start using Gemini Pro! Let’s do a basic task of getting some information.

response = model.generate_content("Explain Generative AI with 3 bullet points")

to_markdown(response.text)

The to_markdown(…) helper renders the text output as nicely formatted Markdown in a notebook; you can get it from the official docs or from my Colab notebook.
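For reference, the helper in the official quickstart looked roughly like the sketch below at the time of writing (reproduced from memory, so treat it as an approximation). I split the string formatting into a hypothetical `markdown_quote` function so that part can be tested without IPython; `to_markdown` only imports IPython when called.

```python
import textwrap


def markdown_quote(text: str) -> str:
    """Turn '•' bullets into Markdown list items and quote every line with '> '."""
    text = text.replace("•", "  *")
    # predicate=lambda _: True also prefixes blank lines, keeping one unbroken quote block
    return textwrap.indent(text, "> ", predicate=lambda _: True)


def to_markdown(text: str):
    """Render text as Markdown in a Jupyter/Colab notebook (requires IPython)."""
    from IPython.display import Markdown  # imported lazily so markdown_quote works anywhere
    return Markdown(markdown_quote(text))


print(markdown_quote("• First point\n\n• Second point"))
```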

Let’s try a more practical example now. Imagine you are automating IT support across multiple regions with different languages. We will have the LLM detect the source language of each customer issue, translate it to English, and reply in the customer’s original language.

it_support_queue = [

"I can't access my email. It keeps showing an error message. Please help.",

"Tengo problemas con la VPN. No puedo conectarme a la red de la empresa. ¿Pueden ayudarme, por favor?",

"Mon imprimante ne répond pas et n'imprime plus. J'ai besoin d'aide pour la réparer.",

"Eine wichtige Software stürzt ständig ab und beeinträchtigt meine Arbeit. Können Sie das Problem beheben?",

"我无法访问公司的网站。每次都显示错误信息。请帮忙解决。"

]

it_support_queue_msgs = ""

# Number each message so the model can refer to them individually
for i, msg in enumerate(it_support_queue):
    it_support_queue_msgs += "\nMessage " + str(i+1) + ": " + msg

prompt = f"""
Act as a customer support agent. Remember to ask for relevant information based on the customer issue to solve the problem.
Don't deny them help without asking for relevant information. For each support message mentioned below
in triple quotes, create a response as a table with the following columns:

orig_msg: The original customer message
orig_lang: Detected language of the customer message e.g. Spanish
trans_msg: Translated customer message in English
response: Response to the customer in orig_lang
trans_response: Response to the customer in English

Messages:
'''{it_support_queue_msgs}'''
"""

Now that we have a prompt ready for the LLM, let’s execute it!

response = model.generate_content(prompt)

to_markdown(response.text)
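A table is great for reading, but if you want to feed the replies into a ticketing system, one option is to ask the model to return a JSON list of objects with the same column names instead, and parse that. Models often wrap JSON in a Markdown code fence, so the hypothetical `parse_rows` helper below strips an optional fence before calling `json.loads`. This is a sketch: the model is not guaranteed to return valid JSON, so keep the error handling. The sample reply in the demo is made up.

```python
import json
from typing import Any, Dict, List

FENCE = "`" * 3  # a Markdown code fence, built this way to keep this snippet fence-safe
REQUIRED_COLUMNS = {"orig_msg", "orig_lang", "trans_msg", "response", "trans_response"}


def parse_rows(text: str) -> List[Dict[str, Any]]:
    """Parse model output that should be a JSON list of row objects.

    Strips an optional surrounding code fence (with or without a language tag
    such as "json") and checks that every row has the requested columns.
    """
    cleaned = text.strip()
    if cleaned.startswith(FENCE):
        # Drop the opening fence line, then the closing fence if present
        cleaned = cleaned.split("\n", 1)[1] if "\n" in cleaned else ""
        cleaned = cleaned.rstrip()
        if cleaned.endswith(FENCE):
            cleaned = cleaned[: -len(FENCE)]
    rows = json.loads(cleaned)
    if not isinstance(rows, list):
        raise ValueError("expected a JSON list of rows")
    for row in rows:
        if not isinstance(row, dict):
            raise ValueError("each row must be a JSON object")
        missing = REQUIRED_COLUMNS - set(row)
        if missing:
            raise ValueError(f"row is missing columns: {sorted(missing)}")
    return rows


# Made-up sample reply, shaped the way models often wrap JSON
sample = (
    FENCE + "json\n"
    '[{"orig_msg": "Hola", "orig_lang": "Spanish", "trans_msg": "Hello", '
    '"response": "Hola, ¿en qué puedo ayudarle?", '
    '"trans_response": "Hello, how can I help you?"}]\n'
    + FENCE
)
rows = parse_rows(sample)
print(rows[0]["orig_lang"], "->", rows[0]["trans_response"])
```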

Pretty neat! With more detailed information about each issue, or a retrieval-augmented generation (RAG) system that feeds in relevant internal documentation, the responses could be even more relevant and useful.
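To make the RAG idea concrete: for each message, retrieve the most relevant snippets from an internal knowledge base and include them in the prompt. Real systems usually use embeddings and a vector store; this sketch swaps in plain keyword overlap so it runs with the standard library only. The function names and knowledge-base entries are hypothetical placeholders.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lower-case word set; a crude stand-in for embeddings."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Return up to k docs sharing the most words with the query.

    Docs with no overlap are dropped; ties keep their original order.
    """
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), i, doc) for i, doc in enumerate(docs)]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [doc for _, _, doc in scored[:k]]


def build_prompt(message: str, docs: List[str]) -> str:
    """Prepend the retrieved notes to the customer message."""
    notes = "\n".join(f"- {doc}" for doc in retrieve(message, docs))
    return (
        "Use these internal IT notes if they are relevant:\n"
        f"{notes}\n\nCustomer message: {message}"
    )


# Placeholder knowledge base, made up for illustration
kb = [
    "VPN: reinstall the VPN client and sign in with your company credentials.",
    "Printer: power-cycle the printer and check the print queue.",
    "Email: clear the browser cache and reset your password if login fails.",
]
print(build_prompt("I can't access my email. Login fails every time.", kb))
```

Because keyword overlap only works within one language, you would retrieve on the English translation (the trans_msg column) rather than on the original message.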

Using Gemini Pro Vision API with Python for Text and Image Inputs

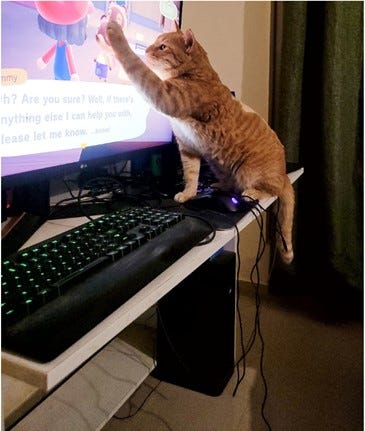

Google has also released Gemini Pro Vision, a multimodal LLM that can take both text and images as input and returns text as output. Remember, this is still an LLM that outputs text only. Let’s use it for a simple use case: understanding a picture and creating a short story from it!

We start by loading the image.

import PIL.Image

img = PIL.Image.open('cat_pc.jpg')

img

Next, we load the Gemini Pro Vision model and send it the following prompt, along with the image, to get a response.

model = genai.GenerativeModel('gemini-pro-vision')

prompt = """
Describe the given picture first based on what you see.
Then create a short story based on your understanding of the picture.
Output should have both the description and the short story as two separate items
with relevant headings.
"""

response = model.generate_content(contents=[prompt, img])

to_markdown(response.text)
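Any call can occasionally come back without usable text, for example when the safety filters block a reply. In the google-generativeai version I used, the `response.text` accessor raises a `ValueError` in that case; treat that as an assumption for your version. The hypothetical helper below returns `None` instead of crashing, and the demo uses made-up stand-in objects rather than real responses.

```python
from types import SimpleNamespace
from typing import Optional


def response_text_or_none(response) -> Optional[str]:
    """Return response.text, or None if no text could be extracted.

    Assumption: the .text accessor raises ValueError when the reply was
    blocked or contains no text parts.
    """
    try:
        return response.text
    except ValueError:
        return None


class BlockedResponse:
    """Hypothetical stand-in whose .text raises, mimicking a blocked reply."""

    @property
    def text(self) -> str:
        raise ValueError("no text parts in this response")


print(response_text_or_none(SimpleNamespace(text="A cat sits at a computer.")))
print(response_text_or_none(BlockedResponse()))
```

When the helper returns `None`, inspecting `response.prompt_feedback` is a reasonable first step to see why the reply was blocked.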

Overall, not bad at all! That said, I believe I have seen GPT-4 with vision recognize the game in the picture as Animal Crossing, which is even more accurate. Still pretty good, I would say.

You can also use Gemini Pro to build interactive chat experiences with multi-turn conversations, where you send messages to the API and receive responses that take the earlier turns into account. Feel free to check out the detailed API documentation for some examples!
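As a starting point for multi-turn chat, the SDK lets you seed a session with earlier turns via `model.start_chat(history=...)`. The sketch below builds that history as a list of dicts with `"role"` (`"user"` or `"model"`) and `"parts"` keys, which is the layout I understand the SDK accepted at the time of writing (an assumption worth checking against the docs). `build_history` is a hypothetical helper, and the conversation is made up.

```python
from typing import Dict, List, Sequence, Tuple

Turn = Dict[str, object]


def build_history(exchanges: Sequence[Tuple[str, str]]) -> List[Turn]:
    """Convert (user_message, model_reply) pairs into alternating chat turns."""
    history: List[Turn] = []
    for user_msg, model_reply in exchanges:
        history.append({"role": "user", "parts": [user_msg]})
        history.append({"role": "model", "parts": [model_reply]})
    return history


history = build_history([
    ("My VPN keeps disconnecting.",
     "Which operating system and VPN client are you using?"),
])
print([turn["role"] for turn in history])
```

You could then continue the conversation with `chat = model.start_chat(history=history)` followed by `chat.send_message("Windows 11 with the company client.")`; the session keeps appending new turns to `chat.history`.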

Conclusion

In conclusion, whether you’re a seasoned AI developer or just starting out, Google’s Gemini Pro and Python provide a straightforward and powerful way to incorporate cutting-edge AI into your applications and projects. Moreover, the fact that the Gemini Pro API is currently free is an invitation to explore the capabilities of LLMs without any initial investment. While future pricing changes are anticipated, the opportunity to start building with such a powerful tool at no cost is quite a steal!

Hopefully, you now have an idea of how to obtain an API key via Google AI Studio and run your first Python script with the Gemini Pro API in just a few minutes. Now go ahead and try applying it to your own problems and projects!

Reach out to me at my LinkedIn or my website if you want to connect. I do quite a bit of AI consulting, trainings, and projects.

Get the complete code in a Google Colab notebook here!


Published via Towards AI