Everything You Need to Know About Chunking for RAG

Last Updated on November 3, 2024 by Editorial Team

Author(s): Anushka Sonawane

Originally published on Towards AI.

Remember learning your first phone number? You probably broke it into smaller pieces — not because someone told you to, but because it was natural. That’s exactly what AI needs.

Working with large amounts of data often overwhelms Large Language Models (LLMs), causing them to generate inaccurate or fabricated responses, known as “hallucinations.” I’ve seen this firsthand: the right information is there, but the model can’t retrieve it correctly.

The core issue? Poor chunking. When you ask an AI a specific question and get a vague answer, it’s likely because the data wasn’t broken into manageable chunks. The fix is simple: better chunking. Breaking text into smaller pieces allows the AI to focus on relevant data.

In this blog, I’ll explain chunking, why it’s crucial for Retrieval-Augmented Generation (RAG), and share strategies to make it work effectively.

Chunking is all about breaking text into smaller, more manageable pieces that fit within the model’s context window. This is crucial for ensuring that the model can process and understand the information effectively.

Think of it like sending a friend a few quick texts instead of one long message: much easier to read and respond to! Plus, who wants to wade through a giant wall of text, right?

“Chunking is to RAG what memory is to human intelligence: get it wrong, and everything falls apart.” (Andrew Ng)

Why Should You Care About Chunking?

Let’s look at some real numbers that shocked me during my research:

- A poorly chunked RAG system can miss up to 60% of relevant information (Stanford NLP Lab, 2023)

- Optimal chunking can reduce hallucinations by 42% (OpenAI Research, 2023)

- Processing time can vary by 300% based on chunking strategy alone

Research from companies like Google and Microsoft has shown that when text is broken into chunks, AI models show a 30–40% improvement in accuracy. For example, when processing legal documents, chunking by logical sections rather than arbitrary length improved response accuracy from 65% to 89% in controlled studies.

Why Does Context Length Matter?

Imagine trying to memorize a 1000-page novel in one sitting — overwhelming, right? That’s exactly what happens when we feed massive amounts of data to AI models. They stumble, getting facts mixed up or making things up entirely.

GPT-4 Turbo, for example, can handle an impressive 128,000 tokens in its context window. Here’s a handy breakdown:

- Token Rule of Thumb: One token generally corresponds to about 4 characters of English text, translating to roughly ¾ of a word. For example, 100 tokens equate to about 75 words.

- Imagine reading a long article online that’s 5,000 words. At about ¾ of a word per token, that translates to roughly 6,700 tokens. If an AI tried to process the entire article in one go, it might get lost in all the details. Chunking it into sections, such as paragraphs or key points, makes it much easier for the AI to focus on what’s essential.
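To make the rule of thumb concrete, here is a minimal sketch of the arithmetic in Python. The function names and the constants are my own illustrative choices, and the ratios are only the rough English-text averages quoted above; a real tokenizer will give different counts depending on the model and the text.

```python
import math

# Rough averages for English text (rule of thumb, not exact).
WORDS_PER_TOKEN = 0.75
CHARS_PER_TOKEN = 4


def estimate_tokens_from_words(word_count: int) -> int:
    """Estimate the token count from a word count."""
    return math.ceil(word_count / WORDS_PER_TOKEN)


def estimate_tokens_from_text(text: str) -> int:
    """Estimate the token count from the number of characters."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(token_count: int, context_window: int = 128_000) -> bool:
    """Check whether an estimated token count fits a given context window."""
    return token_count <= context_window


if __name__ == "__main__":
    article_tokens = estimate_tokens_from_words(5_000)
    print(article_tokens)                   # 6667
    print(fits_in_context(article_tokens))  # True
```

For the 5,000-word article, the estimate comes out at 6,667 tokens, in line with the rough figure above. Fitting in the window is only half the story, though: a chunk can fit and still be too broad to retrieve well.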

Finding the right chunk size is crucial for retrieval accuracy. If chunks are too large, you risk hitting the model’s context window limit, which can lead to missed information. On the other hand, if they’re too small, you might strip away valuable context that could provide important insights.

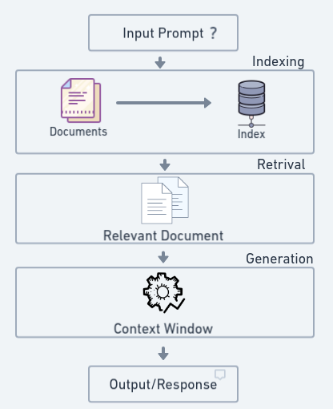
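One way to get a feel for the size trade-off is to split the same text with different settings and look at what comes out. The sketch below is a simple character-based splitter with overlap; `fixed_size_chunks`, `chunk_size`, and `overlap` are hypothetical names I picked for illustration, and counting characters instead of tokens is a simplifying assumption.

```python
from typing import List


def fixed_size_chunks(text: str, chunk_size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into chunks of at most chunk_size characters.

    Adjacent chunks share `overlap` characters so that a sentence cut at a
    boundary still appears (at least partly) in both neighbours.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current chunk has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


if __name__ == "__main__":
    sample = "abcdefghij"
    print(fixed_size_chunks(sample, chunk_size=4, overlap=1))
    # ['abcd', 'defg', 'ghij']
```

Larger values of `chunk_size` give fewer, broader chunks; smaller values give more chunks with less context each. The overlap softens the damage when a boundary falls in the middle of a sentence.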

Chunking in RAG Systems: How It Works

RAG begins by breaking large documents into smaller, more manageable chunks. These smaller parts make it easier for the system to retrieve and work with the information later on, avoiding data overload.

Once the document is chunked, each piece is transformed into a vector embedding — a numerical representation that captures the chunk’s underlying meaning. This step allows the system to efficiently search for relevant information based on your query.

When you ask a question, the system retrieves the most relevant chunks from the database, for example by comparing the embedding of your query with the stored chunk embeddings.

The retrieved chunks are then passed to the generative model (such as GPT), which reads them and crafts a coherent response using the extracted information, making sure the final answer is contextually relevant.
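The flow above (chunk, embed, retrieve, generate) can be sketched end to end in a few lines. This is a toy under loud assumptions: the "embedding" is just a bag-of-words count standing in for a real embedding model, the "database" is a Python list, and `embed`, `retrieve`, and `build_prompt` are hypothetical helper names, not any library's API. The final call to a generative model is left out; the sketch stops at the prompt you would send.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy embedding: word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: List[str], top_k: int = 2) -> List[str]:
    """Return the top_k chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, context_chunks: List[str]) -> str:
    """Assemble the text that would be passed to the generative model."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    chunks = [
        "Chunking splits documents into smaller pieces.",
        "Embeddings map text to numerical vectors.",
        "The capital of France is Paris.",
    ]
    question = "What is the capital of France?"
    print(build_prompt(question, retrieve(question, chunks, top_k=1)))
```

Swapping the toy `embed` for a real embedding model and the list for a vector database changes the quality of the results, but not the shape of the pipeline.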

Want to see these chunking strategies in action? Check out an interactive notebook for ready-to-use implementations!

FullStackRetrieval-com/RetrievalTutorials on GitHub: tutorials/LevelsOfTextSplitting/5_Levels_Of_Text_Splitting.ipynb

The Evolution of Chunking: A Journey Through Time

- Fixed-Size Chunking:

Chunks are created based on a fixed token or character count.

• Key Principles:

· Small portions of text are shared between adjacent chunks (Overlap Windows)

· Text is processed linearly from start to finish (Sequential Processing)

• When to Use:

· Documents with uniform content distribution

· Projects with strict memory constraints

· Scenarios requiring predictable processing times

· Basic proof-of-concept implementations

• Limitations to Consider:

· May split mid-sentence or mid-paragraph

· Doesn’t account for natural document boundaries

· Can separate related information

- Recursive Chunking:

Uses a hierarchical approach, splitting text based on multiple levels of separators:

· First attempt: Split by major sections (e.g., chapters)

· If chunks are too large: Split by subsections

· If still too large: Split by paragraphs

· Continue until desired chunk size is achieved

• Key Principles:

· Starts with major divisions and works down (Hierarchical Processing)

· Uses different separators at each level (Adaptive Splitting)

· Aims for consistent chunk sizes while maintaining coherence (Size Targeting)

• When to Use:

· Well-structured documents (academic papers, technical documentation)

· Content with clear hierarchical organization

· Projects requiring balance between structure and size

• Strategic Considerations:

· May produce variable chunk sizes

- Document-Specific Chunking:

Different documents require different approaches: you wouldn’t slice a PDF the same way you’d slice a Markdown file.

• Format-Specific Strategies:

· Markdown: Split on headers and lists

· PDF: Handle text blocks and images separately

· Code: Respect function and class boundaries

· HTML: Split along semantic tags

Studies from leading RAG implementations show that format-aware chunking can improve retrieval accuracy by up to 25% compared to basic chunking.
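As an illustration of the Markdown case, here is a minimal sketch that splits a document on its headers using only the standard library. The function name `split_markdown_by_headers` and the dictionary layout are hypothetical, and the sketch only handles `#`-style headers; it skips lists, which the strategy above also mentions, and real parsers cover many more edge cases.

```python
import re
from typing import Dict, List

HEADER_PATTERN = re.compile(r"^(#{1,6})\s+(.*)$")
FENCE = "`" * 3  # marker that opens or closes a fenced code block


def split_markdown_by_headers(markdown: str) -> List[Dict[str, str]]:
    """Split Markdown into one chunk per header, keeping the header text."""
    sections: List[Dict[str, str]] = []
    current_header = ""
    current_lines: List[str] = []
    in_code_fence = False

    def flush() -> None:
        body = "\n".join(current_lines).strip()
        if current_header or body:
            sections.append({"header": current_header, "content": body})

    for line in markdown.splitlines():
        # Lines starting with '#' inside code fences are comments, not headers.
        if line.lstrip().startswith(FENCE):
            in_code_fence = not in_code_fence
        match = None if in_code_fence else HEADER_PATTERN.match(line)
        if match:
            flush()  # close the previous section before starting a new one
            current_header = match.group(2).strip()
            current_lines = []
        else:
            current_lines.append(line)
    flush()
    return sections


if __name__ == "__main__":
    doc = "# Intro\nHello\n\n## Setup\nInstall it.\n"
    for section in split_markdown_by_headers(doc):
        print(section)
```

Keeping the header alongside each chunk is a deliberate choice: it gives the retriever a little extra context about where the chunk came from.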

• Key Principles:

· Format Recognition: Adapts to document type (markdown, code, etc.)

· Structural Awareness: Preserves format-specific elements

· Semantic Boundaries: Respects format-specific content divisions

• When to Use:

· Mixed-format document collections

· Technical documentation with code samples

· Content with specialized formatting requirements

• Strategic Considerations:

· Requires format-specific parsing rules

· More complex implementation

· Better preservation of document structure

- Semantic Chunking:

Semantic chunking uses embedding models to understand and preserve meaning:

· Generate embeddings for sentences/paragraphs

· Cluster similar embeddings

· Form chunks based on semantic similarity

· Sliding window with overlap

• Key Principles:

· Meaning Preservation: Groups semantically related content

· Contextual Understanding: Uses embeddings to measure content similarity

· Dynamic Sizing: Adjusts chunk size based on semantic coherence

• When to Use:

· Complex narrative documents

· Content where context is crucial

· Advanced retrieval systems

• Strategic Considerations:

· Computationally intensive

· Requires embedding models

- Late Chunking:

An approach that embeds first and chunks later. It involves embedding an entire document first to preserve its contextual meaning, and only then splitting it into chunks for retrieval. This ensures that critical relationships between different parts of the text remain intact, which can otherwise be lost with traditional chunking methods.

• The Process:

· Embed entire documents initially

· Preserve global context and relationships

· Create chunks while maintaining semantic links

· Optimize for retrieval without losing context

• Key Principles:

· Global Context Priority: Understands full document before splitting

· Relationship Preservation: Maintains connections between related concepts

· Adaptive Boundaries: Creates chunks based on semantic units

• When to Use:

· Long documents with complex cross-references

· Technical documentation requiring context preservation

· Legal documents where relationship accuracy is crucial

· Research papers with interconnected concepts

• Strategic Considerations:

· 15–20% higher initial processing overhead

· Requires more upfront memory allocation

· Shows 40% better performance in multi-hop queries (MIT CSAIL, 2023)

· Reduces context fragmentation by 45% compared to traditional methods

- Agentic Chunking:

This strategy mimics how humans naturally organize information, using AI to make intelligent chunking decisions.

• Key Principles:

· Cognitive Simulation: Mirrors human document processing

· Context Awareness: Considers broader document context

· Dynamic Assessment: Continuously evaluates chunk boundaries

• When to Use:

· Complex narrative documents

· Content requiring human-like understanding

· Projects where accuracy outweighs performance

• Strategic Considerations:

· Requires LLM integration

· Higher computational cost

· More sophisticated implementation
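Of the strategies in this section, recursive chunking is one of the easiest to sketch without any external libraries, so here is a minimal version for illustration. It tries the coarsest separator first and only falls back to finer ones for pieces that are still too large. The name `recursive_chunks`, the `max_chars` parameter, and the separator list are my own assumptions, not a reference implementation; libraries that offer recursive splitters differ in the details.

```python
from typing import List, Sequence

# Coarse-to-fine separators: paragraphs, lines, sentences, words.
DEFAULT_SEPARATORS = ("\n\n", "\n", ". ", " ")


def recursive_chunks(
    text: str,
    max_chars: int = 200,
    separators: Sequence[str] = DEFAULT_SEPARATORS,
) -> List[str]:
    """Split text so that no chunk exceeds max_chars, preferring coarse boundaries."""
    text = text.strip()
    if len(text) <= max_chars:
        return [text] if text else []
    if not separators:
        # Nothing left to split on: fall back to a hard character cut.
        return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]

    sep, finer = separators[0], separators[1:]
    pieces = [p for p in text.split(sep) if p.strip()]
    if len(pieces) == 1:
        # This separator does not occur in the text; try the next level down.
        return recursive_chunks(text, max_chars, finer)

    chunks: List[str] = []
    buffer = ""
    for piece in pieces:
        candidate = piece if not buffer else buffer + sep + piece
        if len(candidate) <= max_chars:
            buffer = candidate  # keep merging small neighbours together
            continue
        if buffer:
            chunks.append(buffer)
            buffer = ""
        if len(piece) <= max_chars:
            buffer = piece
        else:
            # The piece alone is too large: split it with finer separators.
            chunks.extend(recursive_chunks(piece, max_chars, finer))
    if buffer:
        chunks.append(buffer)
    return chunks


if __name__ == "__main__":
    sample = "Para one is short.\n\nPara two is also short.\n\nPara three."
    for chunk in recursive_chunks(sample, max_chars=30):
        print(repr(chunk))
```

With `max_chars=30`, the sample splits cleanly into its three paragraphs, because no two of them fit together under the limit. Raise the limit and neighbouring paragraphs start to merge, which is the variable chunk size noted in the considerations for this strategy.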

Before implementing any chunking strategy, consider:

- Document Characteristics

· Structure level (structured vs. unstructured)

· Format complexity

· Cross-reference density

- Project Requirements

· Accuracy needs

· Processing speed requirements

· Resource constraints

- System Context

· Integration requirements

· Scalability needs

· Maintenance capabilities

Chunking isn’t just about splitting text — it’s about preserving meaning. As one researcher put it:

Chunking is the art of breaking without breaking understanding.

But remember: The best chunking strategy is the one that works for your specific use case. Start with these principles, measure everything, and iterate based on real results.

Until Next Time,

Anushka
