Driving power behind ChatGPT-o1 and DeepSeek-R1

Last Updated on March 6, 2025 by Editorial Team

Author(s): Deltan Lobo

Originally published on Towards AI.

One of the driving forces behind models like DeepSeek-R1 and ChatGPT-o1 is reinforcement learning.

But first, let’s understand how these models employ Reinforcement Learning.

LLM pre-training and post-training

The training of an LLM can be separated into a pre-training and post-training phase:

- Pre-training: Here the LLM is taught to predict the next word/token. It is trained on a huge corpus of data, mostly text. When a question is put to the LLM, the model answers by predicting a relevant sequence of words/tokens, one after another.

- Post-training: In this stage, we improve the model's reasoning capability. Most commonly it is done in two stages:

  - Supervised fine-tuning (SFT): The pre-trained model is further trained on supervised (labeled) data, which is typically much smaller than the pre-training corpus. The data could be reasoning-related examples such as chain-of-thought demonstrations, instruction-following examples, question-answer pairs, and so on. After this stage, the model is able to mimic expert reasoning behavior.

  - Reinforcement Learning from Human Feedback (RLHF): This stage comes in when the responses still don't seem okay after SFT. It is typically implemented when people can tell us which responses are better or worse.
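To make the next-token objective from the pre-training step (which SFT reuses on labeled data) more concrete, here is a minimal sketch. It assumes a toy vocabulary of three tokens and hand-written logits; the function names `softmax` and `next_token_loss` are illustrative, not taken from any particular library.

```python
import math

def softmax(logits):
    # Convert raw scores into probabilities (shifted by the max for stability).
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_loss(logits_per_position, target_ids):
    # Average negative log-likelihood of the correct next token at each position.
    losses = []
    for logits, target in zip(logits_per_position, target_ids):
        probs = softmax(logits)
        losses.append(-math.log(probs[target]))
    return sum(losses) / len(losses)

# Hypothetical model outputs for two positions over a 3-token vocabulary.
logits = [[2.0, 0.5, 0.1], [0.1, 0.2, 3.0]]
loss_when_targets_match = next_token_loss(logits, [0, 2])
loss_when_targets_differ = next_token_loss(logits, [1, 0])
```

The loss is lower when the targets are the tokens the model already scores highest, which is the signal training uses to adjust the weights.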

Let’s break down RLHF.

Reinforcement Learning from Human Feedback (RLHF) is a four-step process that helps AI models align with human preferences.

Generate Multiple Responses

- The model is given a prompt, and it generates several different responses.

- These responses vary in quality, some being more helpful or accurate than others.

Think of it like a brainstorming session where an AI suggests multiple possible answers to the same question!
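As a rough illustration of how one prompt can yield several different responses, the sketch below samples token sequences at random from a toy distribution. It is heavily simplified: the logits are fixed per step instead of depending on earlier tokens, as they would in a real LLM, and `sample_token` and `generate_responses` are hypothetical helper names.

```python
import math
import random

def sample_token(logits, temperature, rng):
    # Higher temperature flattens the distribution and increases variety.
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    weights = [math.exp(x - m) for x in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

def generate_responses(step_logits, num_responses, temperature=1.0, seed=0):
    # Assumption: fixed logits per step stand in for a real model's forward pass.
    rng = random.Random(seed)
    return [
        [sample_token(logits, temperature, rng) for logits in step_logits]
        for _ in range(num_responses)
    ]

# Three generation steps over a 3-token vocabulary (made-up numbers).
step_logits = [[1.0, 0.8, 0.2], [0.3, 0.9, 0.7], [0.5, 0.5, 0.5]]
responses = generate_responses(step_logits, num_responses=4)
```

Because sampling is random, the four responses can differ from each other even though the prompt (here, the logits) is the same.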

Human Ranking & Feedback

- Human annotators rank these responses based on quality, clarity, helpfulness, and alignment with expected behavior.

- This creates a dataset of human preferences, acting as a guide for future training.

Imagine grading multiple essays on the same topic — some are excellent, others need improvement!
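One common way to turn a ranking into training data is to expand it into "chosen vs. rejected" pairs. The sketch below assumes the annotator hands back responses ordered best first; the dictionary keys and the function name `ranking_to_pairs` are illustrative choices, not a fixed standard.

```python
from itertools import combinations

def ranking_to_pairs(prompt, ranked_responses):
    # ranked_responses is ordered best first, so in every pair drawn by
    # combinations() the first element is the preferred one.
    return [
        {"prompt": prompt, "chosen": better, "rejected": worse}
        for better, worse in combinations(ranked_responses, 2)
    ]

# Hypothetical ranking: the annotator preferred A over C over B.
pairs = ranking_to_pairs("Explain PPO.", ["answer A", "answer C", "answer B"])
```

A ranking of three responses yields three preference pairs, so even a modest amount of human ranking produces a sizeable preference dataset.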

Training the Reward Model

- A separate reward model is trained on this preference dataset to predict how humans would rank any AI-generated response.

- Over time, the reward model learns human preferences, assigning higher scores to preferred responses.

It’s like training a food critic AI to recognize what makes a dish taste good based on human reviews!
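A widely used way to train such a reward model is a pairwise (Bradley-Terry style) loss, which is small when the preferred response gets the higher score. The article does not say which loss is used, so treat this as one plausible sketch; the scores below are made-up numbers standing in for a reward model's outputs.

```python
import math

def pairwise_preference_loss(score_chosen, score_rejected):
    # Computes -log(sigmoid(score_chosen - score_rejected)) in a
    # numerically stable way (softplus of the negated difference).
    diff = score_chosen - score_rejected
    return math.log1p(math.exp(-abs(diff))) + max(-diff, 0.0)

# Hypothetical reward-model scores for a preferred and a rejected response.
loss_correct_order = pairwise_preference_loss(2.0, 0.0)
loss_wrong_order = pairwise_preference_loss(0.0, 2.0)
```

Minimizing this loss over many pairs pushes the reward model to score human-preferred responses higher than rejected ones.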

Reinforcement Learning Optimization

- The base AI model is fine-tuned using Reinforcement Learning (RL) to maximize reward scores.

- Algorithms like PPO (Proximal Policy Optimization) or GRPO (Group Relative Policy Optimization) are used.

- The AI gradually learns to generate better responses, avoiding low-ranked outputs.

This step is like coaching a writer to improve their storytelling based on reader feedback — better writing leads to better rewards!

Now that we know where these algorithms come in, let’s start understanding them.

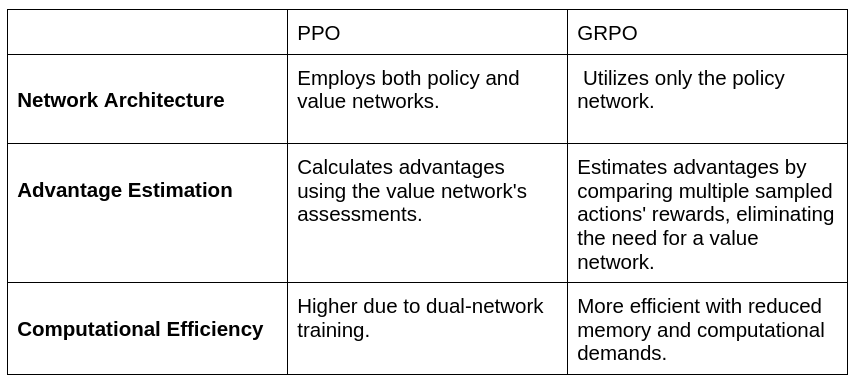

Proximal Policy Optimization (PPO) and Group Relative Policy Optimization (GRPO) are both reinforcement learning algorithms used to train AI models, but they differ in their methodologies and computational efficiencies.

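DeepSeek-R1 was trained with GRPO. OpenAI has not published the training details of ChatGPT-o1, but PPO is the algorithm OpenAI used for RLHF in earlier models such as InstructGPT.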

Let's break them down into simple terms.

Proximal Policy Optimization (PPO)

Imagine training a player to play football. There is a player and a coach: the player decides the next (ideally best) move, and the coach evaluates how good that move was. After each move, the coach provides feedback, and the player adjusts their strategy based on this advice.

Similarly… PPO is a policy gradient method that adjusts the policy directly to maximize expected rewards.

It utilizes two neural networks: a policy network that determines actions, and a value network, or critic, that estimates how much reward to expect and so lets us judge whether an action turned out better or worse than expected.

It’s like a student taking a test and a teacher grading each answer, providing scores to guide the student’s future learning.
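To show how the critic's estimate is typically used, the sketch below computes an "advantage": the reward actually collected minus what the value network predicted. This is the simplest textbook form; PPO implementations commonly use a smoothed variant (Generalized Advantage Estimation), which is not shown here. The rewards and value estimates are made-up numbers.

```python
def discounted_returns(rewards, gamma=0.99):
    # Return at step t = reward at t plus discounted future rewards.
    returns = []
    running = 0.0
    for r in reversed(rewards):
        running = r + gamma * running
        returns.append(running)
    returns.reverse()
    return returns

def advantages(rewards, value_estimates, gamma=0.99):
    # Positive advantage: the outcome was better than the critic expected.
    returns = discounted_returns(rewards, gamma)
    return [g - v for g, v in zip(returns, value_estimates)]

# Hypothetical episode: reward arrives only at the end; critic predicted 0.5.
rewards = [0.0, 0.0, 1.0]
value_estimates = [0.5, 0.5, 0.5]
adv = advantages(rewards, value_estimates, gamma=1.0)
```

Actions with a positive advantage are made more likely by the policy update, and those with a negative advantage less likely.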

To maintain stable learning, PPO employs a clipped objective function, which restricts the magnitude of policy updates, preventing drastic changes that could destabilize training.

PPO seeks to maximize the expected advantage while ensuring that the new policy doesn’t deviate excessively from the old policy. The clipping function restricts the probability ratio within a specified range, preventing large, destabilizing updates. This balance allows the agent to learn effectively without making overly aggressive changes to its behavior.
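The clipping described above can be sketched for a single action as follows. The probability ratio is the new policy's probability of the action divided by the old policy's; `clip_eps=0.2` is a commonly used default and an assumption here, and the function name is illustrative.

```python
import math

def clipped_surrogate(logp_new, logp_old, advantage, clip_eps=0.2):
    # Probability ratio between the new and old policy for this action.
    ratio = math.exp(logp_new - logp_old)
    # Restrict the ratio to [1 - clip_eps, 1 + clip_eps].
    clipped_ratio = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    # Take the more pessimistic of the two estimates.
    return min(ratio * advantage, clipped_ratio * advantage)

# The new policy makes a good action 1.5x more likely than before.
objective_good = clipped_surrogate(math.log(1.5), 0.0, advantage=1.0)
# The new policy makes a bad action half as likely as before.
objective_bad = clipped_surrogate(math.log(0.5), 0.0, advantage=-1.0)
```

In the first case the objective is capped at the clipped ratio times the advantage, so pushing the probability even higher brings no further gain. That cap is what discourages overly large policy updates.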

But here’s the catch: training both the policy and value networks at the same time increases memory and compute requirements.

GRPO is an advancement over PPO, designed to enhance efficiency by eliminating the need for a separate value network and focusing solely on the policy network.

Group Relative Policy Optimization (GRPO)

GRPO simplifies the process by eliminating the coach. Instead, for each situation, the AI generates multiple possible actions and compares them against each other. It ranks these actions from best to worst and learns to prefer those that perform better relative to the others, a sort of self-learning.

It’s like a student answering multiple versions of a question, comparing their answers, and learning which approach works best without needing a teacher’s evaluation.

Technically speaking, GRPO streamlines the architecture by eliminating the value network and relying solely on the policy network.

Instead of evaluating each action individually with a critic, GRPO generates a group of responses (actions) for each input (state) and scores every one of them. Each response's advantage is its score relative to the rest of the group, typically its reward minus the group mean, divided by the group's standard deviation. The model then updates its policy to favor the responses that performed better than the rest of their group.
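The group-relative comparison can be sketched in a few lines. The version below normalizes each response's reward by the group mean and standard deviation, which is how GRPO is usually described; using the population standard deviation and the small `eps` constant are assumptions made for this example.

```python
import statistics

def group_relative_advantages(rewards, eps=1e-8):
    # Score each response relative to the other responses to the same prompt.
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # assumption: population std
    return [(r - mean) / (std + eps) for r in rewards]

# Hypothetical rewards for four responses to one prompt (1.0 = correct).
rewards = [1.0, 0.0, 0.0, 1.0]
adv = group_relative_advantages(rewards)
```

Responses that beat the group average get a positive advantage and the rest get a negative one, with no value network involved. If every response in a group earns the same reward, all advantages are zero and that group contributes no learning signal.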

The inclusion of a KL divergence term ensures that the updated policy remains close to a reference policy (typically the model as it was before RL training), promoting stable learning. Together with the group-based advantages, this lets GRPO optimize the policy without a separate value network.
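As a sketch of what such a KL term can look like per token, the function below uses the non-negative estimator that the published GRPO formulation uses, to the best of my understanding: ratio minus log-ratio minus one, where the ratio is the reference policy's probability over the current policy's. The log-probabilities are made-up numbers, and `kl_penalty` is an illustrative name.

```python
import math

def kl_penalty(logp_policy, logp_ref):
    # log of (reference probability / current policy probability)
    log_ratio = logp_ref - logp_policy
    # Zero when the two policies agree, positive otherwise.
    return math.exp(log_ratio) - log_ratio - 1.0

# Hypothetical log-probabilities of one token under both policies.
penalty_same = kl_penalty(-1.0, -1.0)
penalty_drifted = kl_penalty(-1.0, -2.0)
```

The penalty is subtracted from the objective (scaled by a coefficient), so the further the policy drifts from the reference, the more it costs.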

By removing the value network and adopting group-based evaluations, GRPO reduces memory usage and computational costs, leading to faster training times.

Both Proximal Policy Optimization (PPO) and Group Relative Policy Optimization (GRPO) are reinforcement learning algorithms that improve a policy through small, controlled updates.

- PPO keeps training stable by clipping the objective function so that the updates are not overly large. It uses a policy network as well as a value network, which makes it stable but more computationally intensive.

- GRPO removes the value network; instead, it compares multiple responses to the same input to determine which ones to favor. The result is lower memory and compute cost, while a KL divergence constraint keeps learning stable.

