What Is Innateness and Does It Matter for Artificial Intelligence? (Part 2)

Last Updated on July 15, 2023 by Editorial Team

Author(s): Vincent Carchidi

Originally published on Towards AI.

The question of innateness, in biology and artificial intelligence, is critical to the future of human-like AI. This two-part deep dive into the concept and its application may help clear the air.

By Vincent J. Carchidi

(This is a continuation of a two-part piece on innateness in biology and artificial intelligence. The first part can be found here.)

Innateness in Artificial Intelligence

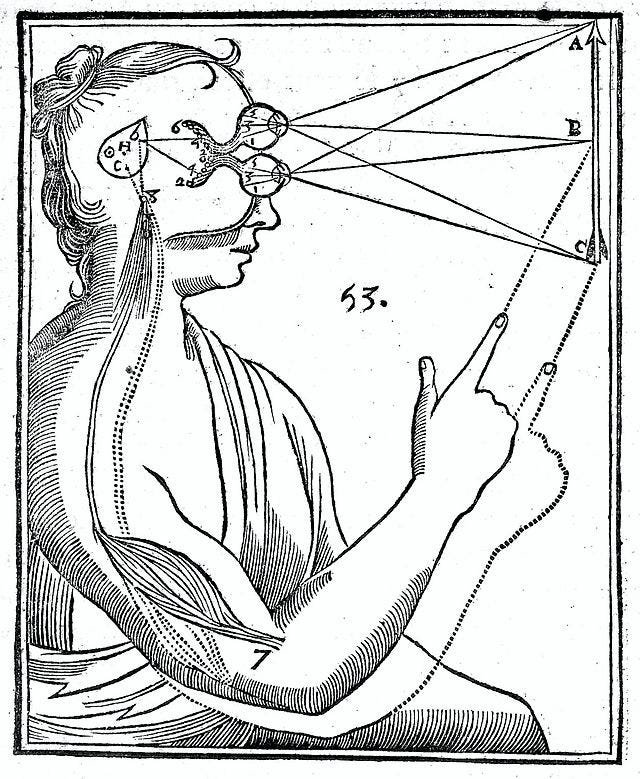

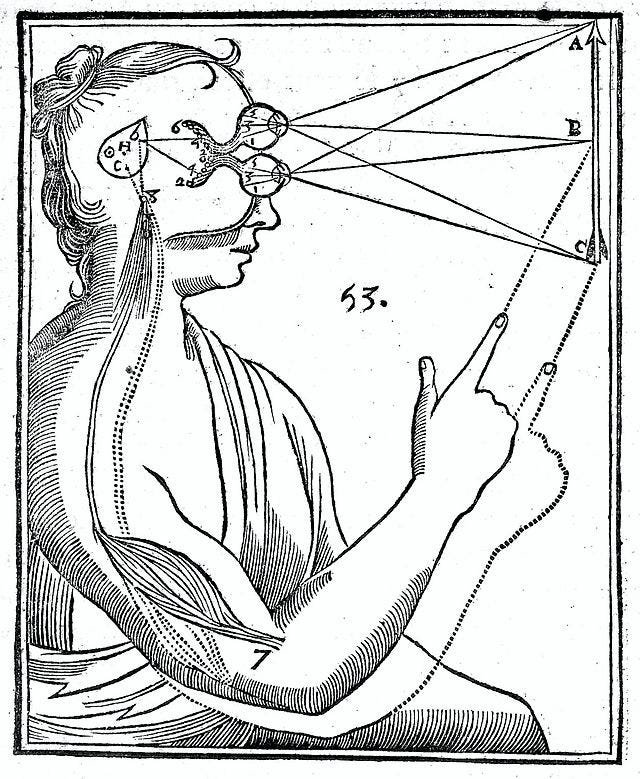

The deep learning revolution has been accompanied by a shift in the relationship between AI and the study of the human mind. Artificial neural networks (ANNs) were originally inspired, loosely, by the massive interconnections of neurons within mammalian brains. While the typical example used of an ANN for broad audiences is a simplistic Feed-Forward Network — in which there is an input layer, hidden layers in the middle, and then an output layer through which information flows only in one direction — deep neural networks (DNNs) possessing many hundreds of such hidden layers have become more “internally heterogeneous” than their shallower predecessors (pictured below).

Source: Wikimedia Commons licensed under the Creative Commons Attribution-Share Alike 4.0 International license.
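The one-directional flow of a simple feed-forward network described above can be sketched in a few lines. This is a toy illustration only: the weights are arbitrary, the `tanh` activation is an assumption chosen for simplicity, and the names `dense` and `feed_forward` are hypothetical.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum per output unit, then tanh."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def feed_forward(inputs, layers):
    """Pass activations through each layer in order; nothing flows backward."""
    activations = list(inputs)
    for weights, biases in layers:
        activations = dense(activations, weights, biases)
    return activations

# Toy network: 2 inputs -> 3 hidden units -> 1 output (arbitrary weights).
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.5, 0.2]], [0.05]),
]
output = feed_forward([1.0, 2.0], layers)
```

A deep network in this sense simply has many more entries in `layers`; the heterogeneity of modern DNNs comes from mixing layer types, which this sketch does not attempt to show.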

Over time, however, a reversal of the idea came about in popular AI discourse: that the human brain is actually like DNNs. The flip from biological inspiration for ANNs to biological brains being in the same league as DNNs is not just a linguistic quirk; it betrays the lack of both a sophisticated understanding of innateness in human minds and a proper interdisciplinarity in studying AI. For all its merits, the deep learning revolution brought with it a tendency to lower the standards of rigor necessary for understanding the only category of examples we have of intelligence: biological intelligence (a lack of cognitive framing recently lamented by Peter Voss as an obstacle on the path to Artificial General Intelligence, or AGI).

It is not a surprise, then, that figures like Gary Marcus express repeated frustration with machine learning researchers who seem resistant to the idea of building highly structured systems, specified with some significant level of domain-specific knowledge. Marcus is, after all, a cognitive psychologist by trade. And it is not surprising that the one public debate between Gary Marcus and Yann LeCun in 2017 was centered on the question: “Does AI Need More Innate Machinery?”

But this was in the pre-large language model (LLM) era. More recently, in June 2022, Yann LeCun and Jacob Browning, arguing that we may learn new things about human intelligence through AI, claimed that LLMs have learned to manipulate symbols, “displaying some level of common-sense reasoning, compositionality, multilingual competency, some logical and mathematical abilities and even creepy capacities to mimic the dead.” The catch: “they do not do so reliably.” LeCun has since expressed his pessimism about LLMs in the pursuit of AGI but has not, clearly at least, connected this to pessimism about deep learning itself (whatever confusions one has about LeCun’s positions, he remains, contra Marcus, something of an empiricist, though not a radical one).

Unreliability has been no obstacle for others, however, who lean into the idea that LLMs can contribute to debates about innateness in humans. Linguist Steven Piantadosi wrote a highly disseminated article arguing that LLMs’ success “undermines virtually every strong claim for the innateness of language that has been proposed by generative linguistics” (p. 1). He characterizes LLMs as “automated scientists or automated linguists, who also work over relatively unrestricted spaces, searching to find theories which do the best job of parsimoniously predicting observed data” (p. 19).

If Piantadosi’s argument were at least partially correct, and LLMs in some way explained how humans acquire natural languages, it would bear directly on the necessity (or lack thereof in this case) of innateness for biological and artificial intelligence. To be sure, others have cast doubt on the argument. Linguist Roni Katzir, upon testing GPT-4 himself, finds that the system fails to prove that it is “suitably biased” (p. 2) towards established constraints in human language use, arguing that LLMs do not model human linguistic cognition so much as they model linguistic text. He argues that it would be strange to even expect LLMs to explain human language acquisition given the different purposes behind their design. Researchers Jon Rawski and Lucie Baumont bluntly argue that Piantadosi’s argument is fallacious, in addition to prioritizing predictive power over explanatory power.

Gameplaying AIs as Windows Into Artificial General Intelligence

In many ways, the debate over innateness in AI in contrast to humans is an oddity. While machine learning has often seen an effort to minimize the number of innate components needed for a system to succeed, the debate assumes that the successes of the deep learning era are a kind of streak of empiricism. In fact, innateness has mattered for the field in ways relevant to the pursuit of AGI. The issue is that when innateness is a factor in successful systems, it is often downplayed or ignored, with the conceptual significance of advancements lost on even those who promote them most.

Nowhere is this more evident than in gameplaying AIs. One of the most baffling things about AlphaGo Zero’s accompanying research paper was the authors’ insistence that the system starts learning how to play Go from a state of “tabula rasa.” Of course, AlphaGo Zero is remarkable for achieving superhuman performance in Go through self-play reinforcement learning, but it was Gary Marcus who explained at length just how much built-in stuff — that is, innate, before learning anything about Go at all — AlphaGo Zero possessed.

Indeed, the domain knowledge used by AlphaGo Zero is as follows, drawn directly from the 2017 research paper (p. 360):

(1) Perfect knowledge of the game rules used during the Monte Carlo tree search (MCTS).

(2) The use of Tromp-Taylor scoring during MCTS simulations and self-play training — this is a list of 10 logical rules specifying several game features, including the 19×19 grid, black and white stones, what counts as “clearing” a color, and so on.

(3) Input features that describe the position are structured as a 19×19 image; “that is, the neural network architecture is matched to the grid-structure of the board.”

(4) “The rules of Go are invariant under rotation and reflection” — this odd phrasing means two things: that the dataset was augmented during training “to include rotations and reflections of each position” and that “random rotations or reflections of the position during MCTS” were sampled using a specific algorithm.
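To make item (4) concrete, here is a minimal sketch of generating the eight rotations and reflections of a square board. It is an illustration in plain Python on a toy 3×3 position, not DeepMind's code; the function names (`rotate90`, `reflect`, `symmetries`) and the 0/1/2 stone encoding are assumptions made for the example.

```python
def rotate90(board):
    """Rotate a square board 90 degrees clockwise."""
    return [list(row) for row in zip(*board[::-1])]

def reflect(board):
    """Mirror a board left to right."""
    return [list(row[::-1]) for row in board]

def symmetries(board):
    """Return the 8 rotations and reflections of a square board."""
    variants = []
    current = [list(row) for row in board]
    for _ in range(4):
        variants.append(current)
        variants.append(reflect(current))
        current = rotate90(current)
    return variants

# Toy position (hypothetical encoding): 0 = empty, 1 = black, 2 = white.
board = [
    [1, 2, 0],
    [0, 0, 0],
    [0, 0, 0],
]
variants = symmetries(board)
```

Each training position can be expanded into such a set of equivalent positions. Note that the choice of these eight transformations is supplied by the programmer: it encodes a fact about Go that the system is given rather than one it discovers.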

Much like how a human individual cannot frame the world in moral terms without a distinctive moral capacity built in from the start, AlphaGo Zero could not even recognize the Go board, the stones, the movements of either the board or the stones, and the rules of the game without this innate endowment. More than this, as Marcus notes, the MCTS apparatus was in no way learned from the data, but instead programmed in from the start, with the choice of which augmentations to include “made with human knowledge, rather than learned” (p. 8).

Describing AlphaGo Zero as a blank slate because it is trained via self-play reinforcement learning is enormously misleading, and its success was not a victory for empiricism. Rather, as Marcus correctly explains, it is an “illustration…of the power of building in the right stuff to begin with…Convolution is the prior that has made the field of deep learning work; tree search has been vital for game playing. AlphaZero has combined the two” (pp. 8–9). Indeed, much like human moral judgments result from a structured process in the mind drawing principally from a system dedicated to moral intuitions while interfacing with other systems, AlphaGo Zero could not handle the complexity of Go if any one of its innate components were simply dropped. And, as Artur d’Avila Garcez and Luís C. Lamb note, MCTS is a symbolic problem-solver, making its coupling with AlphaGo Zero’s neural network an element of neuro-symbolic AI.

This combinatorial aspect of AlphaGo Zero’s innate endowment is what makes it so impressive, and we should appreciate its built-in knowledge before training as well as the marvel of the training itself.

Unique, combinatorial design choices that richly structure the system to a specific problem are often responsible for many gameplaying AIs’ greatest successes, though techniques like reinforcement learning and self-play are inflated in commentary at the expense of these choices. This is strikingly evident in Meta’s “Cicero,” the Diplomacy-playing agent whose thunder was stolen by being released eight days before ChatGPT.

Cicero, the first agent to reach human-level — not superhuman — performance in Diplomacy, exemplifies these combinatorial elements in two ways: First, on a high level, the system combines strategic reasoning and natural language communication during gameplay. Second, the system’s architecture depends on a combination of research and techniques drawn from areas including strategic reasoning, natural language processing, and game theory. The result, as Gary Marcus and Ernest Davis describe, is “a collection of highly complex, interacting algorithms.”

To succeed at Diplomacy, players must engage in strategic reasoning as they seek to acquire a majority of supply centers on the map. This element of the game, which incorporates tactical proficiency from one move to the next in the service of an overarching goal, is similar to other games like Chess, Go, and Poker — each, by now, mastered in one way or another by AI. Where Diplomacy differs is that players spend significant time negotiating with one another in open-ended text-based or verbal dialogue.

Sounds pretty straightforward, right? Players negotiate with one another to secure favorable outcomes: easy. It’s anything but. Remember, there is a tendency to underappreciate the complexity of both the situations our moral judgments are in response to and the judgments themselves. Diplomacy can be deceptive in an analogous way if we look only from a distance. Aligning the strategic reasoning capabilities of an agent with natural language communication is enormously complex.

Cicero’s architecture can be divided between a planning engine and a dialogue agent. The planning engine is responsible for strategic reasoning whereas the dialogue agent handles natural language communication. Crucially, these two divisions are mediated by a highly specific mechanism (a “dialogue-conditional action model”), and the dialogue agent is controlled by the planning engine. This high-level structure ensures that the planning engine can generate intents which are then used to construct the agent’s goals. This information is then fed into the dialogue agent so that message generation between Cicero and other players remains aligned with the strategic aims of the agent. While Cicero sometimes contradicts itself, its designers report that no humans identified the agent as an AI during anonymous, multi-level tournament play, highlighting the human-like success of the system.

The lower-level structure has a distinctiveness of its own. One might suspect that, given the success of AlphaGo Zero through self-play, a Diplomacy agent should also be trained with this technique. But pre-Cicero research found that training a Diplomacy agent without human data led to relatively poor play against humans.

Nor is the Monte Carlo tree search algorithm particularly useful here. While MCTS had been tested in Diplomacy before Cicero (to my knowledge, primarily in the strategic aspect of the game, not so much the negotiation aspect), differences between Go and Diplomacy make it less effective. Go is a sequential game in which players take their turns one at a time, so all relevant information is visible on the board by the time the next player moves. Diplomacy is simultaneous: players negotiate, then input their actions privately, which are revealed to everyone at once. MCTS cannot cope well with this structure.

Here’s what Meta AI’s team did instead: building on prior research in Diplomacy-playing AIs, they constructed an algorithm called “piKL” (acceptably pronounced “pickle”). piKL is designed to ‘interpolate’ between the dual needs of searching for novel strategies and imitating humans. This algorithm is foundational to the planning engine. Echoing research done for the Poker-playing agent Libratus, Meta AI reports that “piKL treats each turn in Diplomacy as its own subgame…”
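The interpolation can be made concrete with a KL-regularized objective, the idea named in the title of Jacob et al. [10]: choose the policy that maximizes expected value minus a penalty, weighted by λ, for diverging from a human-imitation “anchor” policy. For a single decision, that objective has the closed-form solution π(a) ∝ anchor(a) · exp(value(a) / λ). The sketch below implements only this one-shot formula. It is not Meta AI's implementation, and the function name, action names, and numbers are hypothetical.

```python
import math

def kl_regularized_policy(anchor, values, lam):
    """Return pi(a) proportional to anchor(a) * exp(values(a) / lam).

    anchor: dict of action -> probability under the imitation policy
            (assumed strictly positive for every action).
    values: dict of action -> estimated value of the action.
    lam:    regularization strength; larger means "stay closer to humans".
    """
    logits = {a: math.log(anchor[a]) + values[a] / lam for a in anchor}
    top = max(logits.values())  # subtract the max for numerical stability
    weights = {a: math.exp(logit - top) for a, logit in logits.items()}
    total = sum(weights.values())
    return {a: w / total for a, w in weights.items()}

# Hypothetical numbers: humans usually hold, but attacking scores best.
anchor = {"hold": 0.6, "support": 0.3, "attack": 0.1}
values = {"hold": 0.0, "support": 0.5, "attack": 1.0}

human_like = kl_regularized_policy(anchor, values, lam=1000.0)
value_seeking = kl_regularized_policy(anchor, values, lam=0.01)
```

With a large λ the result stays close to the anchor policy; with a small λ it concentrates on the highest-value action. A single parameter thus trades off imitation against the search for stronger play.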

A generative large language model, furthermore, underpins the dialogue agent. This LLM was fine-tuned on existing game dialogue. But language models are not grounded in the context of the Diplomacy world and thus generate outputs inconsistent with the strategic aims of the agent (in addition to being easily manipulable by humans). To work around this, some of the human messages on which it was trained were labeled with proposed actions, which represent the intentions of players. The language model was conditioned on these intents.

We can begin to see how this all fits together. During play, the planning engine selects the relevant intents to control the language model. This control is premised on truthful actions for the system and mutually beneficial actions for the recipient of the communication (the system models actions for every player).

Implications

Cicero is a tour de force in engineering. It is also one of the most striking examples of an AI system accomplishing a distinctively human feat with a rich, highly structured endowment encoded with some domain knowledge. These internal components interface relatively productively in the alignment of strategic reasoning and natural language communication. Marcus and Davis were among the few who immediately recognized the significance of this system beyond the game of Diplomacy: “If Cicero is any guide, machine learning may ultimately prove to be even more valuable if it is embedded in highly structured systems, with a fair amount of innate, sometimes neurosymbolic machinery.”

Still, many in AI believe that this misses the point: yes, biological intelligence may have all sorts of innate features but artificially intelligent systems may not need them. There are, the idea goes, other ways to perform intelligent behavior than the ways humans do it. One need only look at AlphaGo Zero: this system, whose internal components are comparatively simpler than the human brain, plays Go better than any human in the world. Why focus on building more innate things into such a system when it gets the job done so well already? And why not try to minimize the number of moving parts operative in Cicero while we’re at it?

This hypothetical interlocutor misses the forest for the trees. As Marcus explains in his 2018 paper: “whereas a human can learn many games without specific innate representational features for any particular game, each implementation of [AlphaGo Zero] is innately endowed with game-specific features that lock the system to one particular realization of one particular game, focusing on one particular problem. Humans are vastly more flexible in how they approach problems” (p. 9).

The leap from “narrow” to “general” AI is directly connected to the problem of innateness. And biological intelligence shows why: while it is true that no human individual can play Go at the level of AlphaGo Zero (assuming nothing like KataGo vs. Kellin Pelrine is replicated with AlphaGo Zero), the system could not get to this point without highly specific innate structure, in much the same way that human moral judgment takes on its very specific character owing to our innate structure.

Insights from biology to AI go further than this. Gameplaying AIs are often notable in that they allow researchers to test techniques in environments where there are well-defined objectives (i.e., clear-cut conditions for victory) along with stable constraints (i.e., rules of the game, the board on which it is played, the number of players, and so on). These games are isolated exploitations of human cognitive abilities, including tactical proficiency, strategic reasoning, natural language communication, deception, cooperation and collaboration, and honesty and dishonesty, among others.

The problem is that AlphaGo Zero, Cicero, and other gameplaying AIs are not doing what we are doing when we play Go, Diplomacy, or any other strategy game. As the interlocutor points out, they find different ways of succeeding: AlphaGo Zero can play against itself millions of times with a carefully augmented search algorithm specially designed for Go; Cicero, equipped with substantial ‘metagame’ knowledge, can negotiate without the feeling of being betrayed misguiding its actions. But AlphaGo Zero cannot strategize outside of Go, or even recognize a Go board that does not meet the exact dimensions it was innately programmed to recognize; Cicero cannot negotiate outside of Diplomacy because it does not know how to read intentions so much as it optimizes for agreements each player would find acceptable.

And herein lies the problem: when humans strategize in Go, they can put this ability to use in any other game or context; when humans read intentions in Diplomacy, they can use that same cognitive capability anywhere outside of the game with flexibility and robustness, whether in actual face-to-face diplomacy or casual conversation.

The core point is this:

The “different” ways in which AIs succeed at games, outperforming humans while remaining confined to these domains, are due to the power of their innate structure. It will take a new kind of innate structure that mixes with the successful elements of deep learning to make the leap from narrow to general AI.

Conclusion

The idea of innateness, in my experience, bothers people. But what is interesting is that people are not bothered by the idea that human arms, legs, circulatory systems, or spinal cords are innate. Rather, they tend to be bothered by the idea that higher-order faculties of the mind, things like moral values, languages, musical sense, and the like, are innate.

This double standard has been identified as a potential obstacle in the cognitive and neurosciences, an implicit bias of sorts, which I have argued is likely responsible for some of the resistance to innateness in AI. The “fetish” for end-to-end learning that Rodney Brooks tweeted about may, in part, be the result of this strange double standard simply having made its way into AI, rather than being unique to the field.

But identifying this double standard merely raises the stakes in illustrating why neat separations between engineering and science in the effort to build human-like AI, or AGI itself, will not suffice going forward. The mindset that says, “Let’s just see what works and what doesn’t work” has tremendous creative advantages, but human-like AI is too high a mountain for this alone. Human-like AI is, as illustrated, likely to be a series of well-structured research problems that cannot afford to take implicit assumptions about biology and cognition in humans or animals for granted.

References:

[1] B. Alloui-Cros, Mastering Both the Pen and the Sword? Cicero in the Game of Diplomacy (2023), Substack

[2] A. Bakhtin, et al., No-Press Diplomacy from Scratch (2021), ArXiv

[3] N. Brown and T. Sandholm, Superhuman AI for Heads-Up No-Limit Poker (2017), Science

[4] V.J. Carchidi, Do Submarines Swim? (2022), AI & Society

[5] N. Chomsky, Naturalism and Dualism in the Study of Language and Mind (1994), International Journal of Philosophical Studies

[6] D. Silver, et al., Mastering the Game of Go Without Human Knowledge (2017), Nature

[7] G. Dupre, (What) Can Deep Learning Contribute to Theoretical Linguistics? (2021), Minds and Machines

[8] A. Garcez and L. Lamb, Neurosymbolic AI: The 3rd Wave (2023), Artificial Intelligence Review

[9] M. Holmes, Face-to-Face Diplomacy (2018), Cambridge University Press

[10] A.P. Jacob, et al., Modeling Strong and Human-Like Gameplay with KL-Regularized Search (2021), ArXiv

[11] R. Katzir, Why large language models are poor theories of human linguistic cognition. A reply to Piantadosi (2023), LingBuzz

[12] G. Marcus and E. Davis, What Does Meta AI’s Diplomacy-Winning Cicero Mean for AI? (2022), Communications of the ACM

[13] G. Marcus, Innateness, AlphaZero, and Artificial Intelligence (2018), ArXiv

[14] Meta Fundamental AI Research Diplomacy Team (FAIR), et al., Human-level Play in the Game of Diplomacy by Combining Language Models with Strategic Reasoning (2022), Science

[15] S. Piantadosi, Modern Language Models Refute Chomsky’s Approach to Language (2023), LingBuzz

[16] J. Rawski and L. Baumont, Modern Language Models Refute Nothing (2023), LingBuzz

[17] A. Theodoridis and G. Chalkiadakis, Monte Carlo Tree Search for the Game of Diplomacy (2020), 11th Hellenic Conference on Artificial Intelligence

[18] S. Ullman, Using Neuroscience to Develop Artificial Intelligence (2019), Science


Note: Content contains the views of the contributing authors and not Towards AI.