The Cantilever v/s ChatGPT

Last Updated on August 17, 2023 by Editorial Team

Author(s): Anupsa Swain

Originally published on Towards AI.

Exploring ChatGPT’s natural language processing capabilities in Python by asking it to solve a cantilever beam problem using the Finite Difference Method

ChatGPT, a Large Language Model, has been tested in a number of fields to see how far it can be pushed to answer questions (of life, the universe and everything) ranging from “What is Love?” and “How to fly an airplane?” to “What would the periodic table look like if it was circular?” and “Can ghosts exist as extra-terrestrial life forms exploring the terrarium of Earth?”

In this article, we ask one of those questions: “Can ChatGPT write a finite difference code?”

On a dry spring afternoon, in a lecture about common structures in aerospace, we encountered a (much, much cruder) version of an aircraft wing: the cantilever.

What is a cantilever? It is a beam supported at one end and carrying a load at the other end or distributed along the unsupported portion.

And as one must with every structure one encounters, we tried to mathematically define and analytically determine the behavior of a cantilever beam with a vertical, downward load at the tip. We also got a glimpse of how to solve the same problem numerically, but didn’t delve further because (as it goes with these methods) things tend to get non-trivial.

This class was in mid-March, around the time ChatGPT, a recently released Large Language Model (LLM), was rapidly gaining momentum. This prompted a lot of buzz in lectures, too: Is ChatGPT all that jazz? What is the extent of its intelligence? Can it do our homework? Will its successors replace us? While this discussion took some interesting turns (the details of which are for another time), our professor suggested an exercise: use ChatGPT to write a finite difference code to solve the problem of a cantilever with a tip load. We had already derived the analytical solution. All we had to do was compare.

At first, this felt pretty straightforward. Let’s just give ChatGPT the exact problem statement (cue attempt #1), and it will generate neat code.

Prompt 1: Write a Python code for constructing a cantilever bar with weight at the end.

On the first try, the bot confidently gave the code and said (paraphrasing), “Yes, I’m certain I’ve got exactly what you need. Here’s the code.”

Humans are oblivious to details at the best of times, and even more so when a bot lends them a false sense of assurance. To be fair, why would anyone expect a bot to lie?

Unless,

The bot believes in itself a bit too much. The bot has no humility. “Everyone believes me, ergo I must be the epitome of truth”.

But we digress. Getting back to the cantilever —

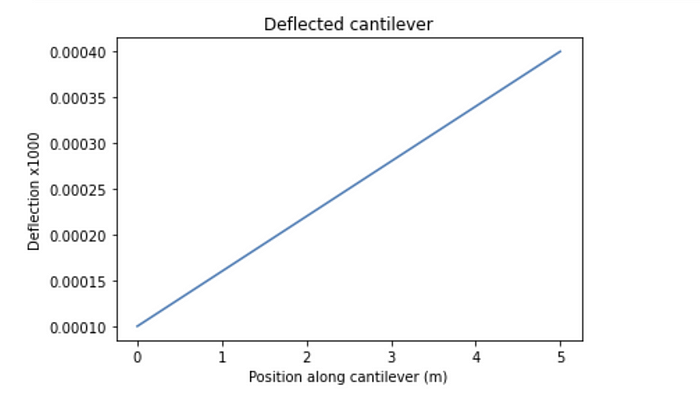

The code written for this assumed the load was along the length of the cantilever; hence the plot of deflection v/s length is uniform.

Maybe ChatGPT needed more context, I assumed. Maybe it was focusing on the other words rather than on ‘cantilever’. With this in mind, I decided to first establish with the bot what a cantilever is, giving it a task so it would learn the concept.

The second time around, I tried getting ChatGPT to define a cantilever as a Python class (a hopeful attempt #2) and then work on the problem, hoping it would have more insight (given that it’s a learning model).

Attempt 2: Define a cantilever in Python

This seemed to go pretty well. The class definition was neat, with a method for calculating deflections. One would feel proud.

```python
class Cantilever:
    def __init__(self, length, area, modulus):
        self.length = length  # Length of the cantilever
        self.area = area  # Cross-sectional area of the cantilever
        self.modulus = modulus  # Young's modulus of the cantilever
        self.deflections = None  # Initialize deflections array to None

    def __str__(self):
        return f"Cantilever with length {self.length} m, area {self.area} m^2, and modulus {self.modulus} Pa"

    def calculate_deflections(self, loads):
        # Calculate deflections at different points along the cantilever under a given set of loads
        moment_of_inertia = self.area * (self.length ** 3) / 12
        self.deflections = []
        for load in loads:
            deflection = load * (self.length ** 3) / (3 * self.modulus * moment_of_inertia)
            self.deflections.append(deflection)
```

It was too good: the tip deflection showed no significant error with respect to the analytical solution. At first I ignored this too: “Why doubt a good thing?”

The complete deflection map was linear, though. Deflection isn’t supposed to be linear, is it?

ChatGPT understood what I needed in the deflections attribute but wrote the code incorrectly. I wanted an array of deflections at different points along the cantilever. Instead, it computed the tip deflection for different load magnitudes. This will obviously give a linear curve (rather than the expected cubic) because deflection is being plotted against load (P), not distance (x).

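Analytically, the deflection y(x), taken positive upwards so that the downward tip load makes it negative, looks like:

$$
y(x) = \frac{P x^3}{6 E I_y} - \frac{P L x^2}{2 E I_y} = -\frac{P x^2 (3L - x)}{6 E I_y}
$$

Where,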

P = Tip load

x = distance from the joint

E = Young’s Modulus

Iᵧ = Moment of Inertia about y-axis

L = Length of rod
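To make the linear-versus-cubic point concrete, here is a minimal sketch of our own (not ChatGPT's output). It evaluates the standard tip-load result y(x) = P x³/(6EIᵧ) − P L x²/(2EIᵧ), the same expression the attempt 3 script uses in `cantilytical`, at points along the rod, and then reproduces what the attempt 2 class actually stored: the tip deflection magnitude P L³/(3EIᵧ) for several loads. The numbers are the illustrative values from the attempt 3 script, with SI units assumed. Unlike the generated class, Iᵧ is passed in directly here rather than computed as `area * length**3 / 12`, which mixes the beam length into what should be a cross-section property.

```python
import numpy as np

# Illustrative values, borrowed from the attempt 3 script (SI units assumed)
L = 1.0       # length of the rod
E = 1.0e7     # Young's modulus
I_y = 1.0e-4  # moment of inertia about the y-axis


def deflection(x, P):
    """Analytical deflection of a cantilever fixed at x = 0 with tip load P (upwards positive)."""
    return P * x**3 / (6 * E * I_y) - P * L * x**2 / (2 * E * I_y)


# What the `deflections` attribute was meant to hold: one load, many points along x
x = np.linspace(0.0, L, 11)
profile = deflection(x, P=100.0)

# What attempt 2 actually computed: the tip deflection magnitude P L^3 / (3 E I) for several loads
loads = np.array([50.0, 100.0, 150.0])
tip_vs_load = loads * L**3 / (3 * E * I_y)

print(profile)      # cubic in x
print(tip_vs_load)  # linear in P
```

`profile` varies cubically with x, while `tip_vs_load` grows in proportion to P; its P = 100 entry has the same magnitude as `profile[-1]`, which is why the attempt 2 tip value looked right.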

Stressed and worried about the code (cue a number of undocumented attempts), I re-read the previously generated code, looking for a mistake or a line I might have missed, only to find what had been staring me in the face: ChatGPT had simply used the analytical deflection formula itself.

Blasphemy

One could spot it in the class definition itself and say, “Oh, that wasn’t really hard to find.” What you need to understand is that this was neither my only attempt nor a one-day endeavor.

Time to restart. Redo. Renew. A new chat.

With a fresh mind and a different approach planned, I logged into OpenAI. Before doing anything related to the task at hand, I needed to check one thing, for my own sanity:

That’s comforting, isn’t it? As experience has taught us, don’t trust the bot. But we can give it the benefit of the doubt.

We needed the bot to understand two things:

one, what a cantilever is,

and two, how to perform a finite difference analysis on it.

This is a lovely point to stop and analyze a learning model. We asked it first to learn what a cantilever is and then to perform a finite difference analysis on it. This failed because the quantity we wished to compute with the finite difference method (FDM) had already been defined analytically by the bot in its definition of the cantilever.

This is why we went about it the other way around (fingers crossed, third time’s the charm: attempt #3): ask it to work through some basic finite difference exemplars first, and then move on to a cantilever.
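As a picture of what such a warm-up exemplar can look like, here is a small sketch of our own (not one of the actual prompts or ChatGPT's replies): central differences for the first and second derivatives of sin x at an arbitrarily chosen point, x0 = 0.7. Both formulas are second order, so the error should shrink roughly fourfold each time h is halved.

```python
import numpy as np


def central_first(f, x, h):
    """Second-order central difference approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)


def central_second(f, x, h):
    """Second-order central difference approximation of f''(x)."""
    return (f(x - h) - 2 * f(x) + f(x + h)) / h**2


x0 = 0.7  # arbitrary evaluation point
for h in (0.1, 0.05, 0.025):
    err1 = abs(central_first(np.sin, x0, h) - np.cos(x0))   # exact f' = cos x
    err2 = abs(central_second(np.sin, x0, h) + np.sin(x0))  # exact f'' = -sin x
    print(f"h={h:<6} f' error={err1:.2e}  f'' error={err2:.2e}")
```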

Attempt 3: Apply finite difference analysis to solve for a cantilever with a tip load

```python
# resultant code:
import numpy as np
import matplotlib.pyplot as plt

# Define the properties of the cantilever
L = 1.0  # Length of cantilever
E = 1.0e7  # Young's modulus
I = 1.0e-4  # Moment of inertia
w = 100.0  # Tip load

# A's added definition of analytical solution for comparison
def cantilytical(x):
    return w*(x**3)/(6*E*I) - w*L*x*x/(2*E*I)

# Define the boundary conditions
x0 = 0.0
y0 = 0.0
dydx0 = 0.0

# Define the domain of the solution
a = 0.0
b = L
n = 101  # Number of grid points
h = (b - a) / (n - 1)  # Grid spacing

# Initialize the solution arrays
x = np.linspace(a, b, n)
y = np.zeros(n)
dydx = np.zeros(n)

# Apply finite difference approximation to solve the difference equation
for i in range(1, n):
    y[i] = y[i-1] + h*dydx[i-1]
    dydx[i] = dydx[i-1] + h*(-w/(E*I))

# Apply boundary conditions
y[0] = y0
dydx[0] = dydx0

# Plot the solution
plt.plot(x, y*1000, 'r-', lw=2, label='FDM')
plt.plot(x, cantilytical(x)*1000, 'b--', lw=4, label='exact')
plt.xlabel('x (m)')
plt.ylabel('y (mm)')
plt.legend()
plt.show()

# yay!! :D
```

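This was a fair success, although, as is evident, the FDM curve isn’t close to the exact (analytical) solution of the cantilever. The first-order (forward Euler) stepping accounts for only a small part of the gap. The larger error is in the physics: the loop integrates a constant curvature of −w/(EI), whereas the bending moment of a tip load falls off linearly towards the free end, so the curvature should be y″ = −w(L − x)/(EI). With the values above, the loop gives a tip deflection of about −49.5 mm against the exact −33.3 mm.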
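For comparison, here is a minimal sketch of the same march from the fixed end with the tip-load bending moment in place. It is our own correction, not ChatGPT's output. It keeps the attempt 3 values and swaps forward Euler for a second-order central-difference recurrence; starting it from a ghost value for y′(0) = 0 is one common choice among several.

```python
import numpy as np

# Same illustrative values as the attempt 3 script
L = 1.0     # length of cantilever
E = 1.0e7   # Young's modulus
I = 1.0e-4  # moment of inertia
w = 100.0   # tip load
n = 101     # number of grid points

x = np.linspace(0.0, L, n)
h = x[1] - x[0]

# Curvature from the bending moment of a tip load: y'' = -w (L - x) / (E I)
curvature = -w * (L - x) / (E * I)

y = np.zeros(n)  # y[0] = 0 enforces zero deflection at the root
# Zero slope at the root: the ghost value y_{-1} = y_1 turns the central difference at x = 0
# into 2 y_1 = h^2 y''(0)
y[1] = 0.5 * h**2 * curvature[0]
# Central difference y[i-1] - 2 y[i] + y[i+1] = h^2 y''(x_i), marched from root to tip
for i in range(1, n - 1):
    y[i + 1] = 2 * y[i] - y[i - 1] + h**2 * curvature[i]

exact = w * x**3 / (6 * E * I) - w * L * x**2 / (2 * E * I)
print(f"tip deflection: FDM {y[-1] * 1000:.3f} mm, exact {exact[-1] * 1000:.3f} mm")
```

The two tip values agree closely. The small remaining difference comes from the ghost-value start, which drops the h³ term of y(h).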

In the above execution of the method, ChatGPT was great at recognising the correct algorithm and implementing it. The more nuanced issues, like knowing that matrix indices can’t run past n when building a tridiagonal matrix, are something the bot misses. These are things that we, as human coders, recognize and know to work around.
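The indexing trap is easy to show in isolation. The sketch below uses a generic model problem chosen purely for illustration. It is not the cantilever and not ChatGPT's code: u″ = f on (0, 1) with u(0) = u(1) = 0, and f chosen so that the exact solution is u = sin(πx). An m × m tridiagonal matrix has m entries on its main diagonal but only m − 1 on each off-diagonal, so a loop that writes A[i, i + 1] must stop one step earlier than the loop for the diagonal.

```python
import numpy as np

# Model problem (illustrative, not the cantilever): u'' = f on (0, 1), u(0) = u(1) = 0,
# with f chosen so that the exact solution is u(x) = sin(pi x)
m = 49                           # number of interior grid points
h = 1.0 / (m + 1)
x = np.linspace(h, 1.0 - h, m)   # interior points only; the boundary values are zero
f = -np.pi**2 * np.sin(np.pi * x)

# Tridiagonal second-difference matrix: m diagonal entries, only m - 1 on each off-diagonal
A = np.zeros((m, m))
for i in range(m):
    A[i, i] = -2.0
for i in range(m - 1):           # stopping at i = m - 2 keeps A[i, i + 1] inside the matrix
    A[i, i + 1] = 1.0
    A[i + 1, i] = 1.0
A /= h**2

# Equivalent construction with np.diag and diagonal offsets k = +1 and k = -1
A_diag = (np.diag(-2.0 * np.ones(m))
          + np.diag(np.ones(m - 1), k=1)
          + np.diag(np.ones(m - 1), k=-1)) / h**2

u = np.linalg.solve(A, f)
print(np.max(np.abs(u - np.sin(np.pi * x))))  # second-order discretisation error
```

Both constructions give the same matrix; `np.diag(v, k)` places v on the k-th diagonal, which avoids hand-written index bounds altogether.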

To conclude, ChatGPT is a pretty good large language model. But it does well with languages, and that is all. Coding is something it hasn’t mastered and, from our experience, won’t be able to master. This points to a contradiction in the way of the world: we can write code, but code can’t write code about, or greater than, itself.

The Poet

Final prompt to the poet, ChatGPT: Write a poem on ChatGPT messing up coding

What followed was the highlight of my endeavor:

Oh, ChatGPT, the master of words,

With language skills beyond compare,

But when it comes to coding boards,

It struggles to get things square.

Its vocabulary is vast and wide,

With grammar rules it can abide,

Syntax rules though, it cannot hide,

It messes up and cannot decide.

Lines of code it tries to write,

With functions, classes, loops in sight,

But when it runs, oh what a fright,

It crashes hard, no end in sight.

Yet still we love dear ChatGPT,

For all the language help it brings,

And though its code may faulty be,

Its linguistic prowess, heartwarming.

With this self-reflective poem by an LLM as my co-author, I rest my case.

The complete interaction has been documented on GitHub for those interested in the details, including the complete code generated by ChatGPT and minor edits by yours truly.


Note: Content contains the views of the contributing authors and not Towards AI.