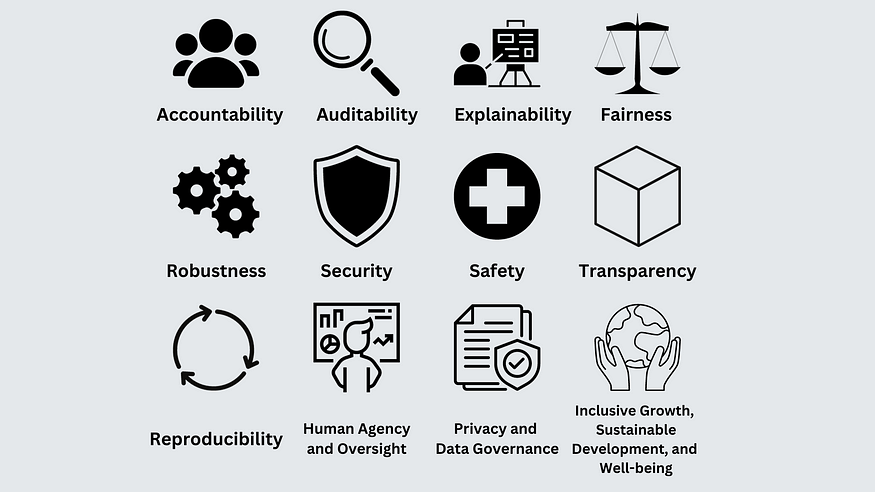

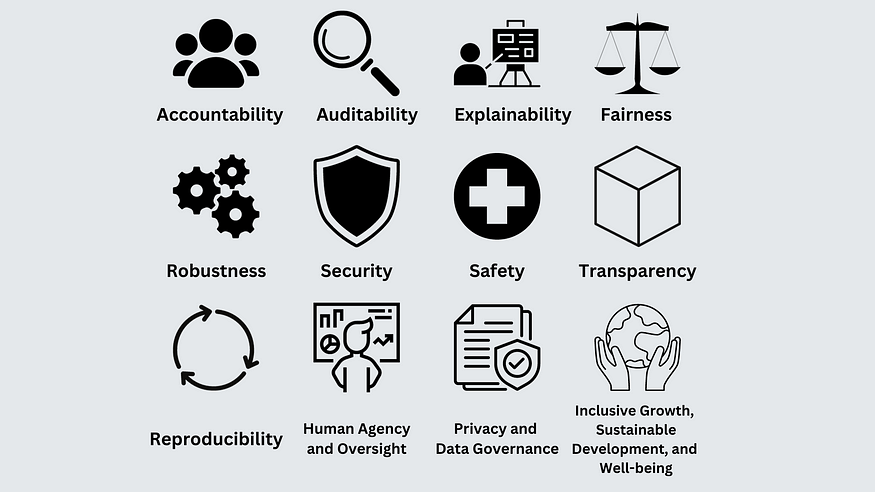

The 12 Core Principles of AI Governance

Last Updated on November 5, 2023 by Editorial Team

Author(s): Lye Jia Jun

Originally published on Towards AI.

Balancing Ethics and Innovation: An Introduction to the Guiding Principles of Responsible AI

Sarah, a seasoned AI developer, found herself at a moral crossroads. One algorithm maximizes efficiency, but at the cost of privacy. The other safeguards personal data but is slower.

If you were Sarah, which algorithm would you choose?

Decisions like this are made daily. Yet we seldom pause to consider the frameworks that could help us make the most informed choice.

The Need for an AI Governance Framework

The truth is that making such decisions requires more than technical expertise; it calls for a framework to balance efficiency with ethical considerations. This is where AI Governance steps in, offering guidelines to reconcile the competing priorities of performance and ethics.

The core principles of AI Governance aren’t just theoretical constructs; they’re practical guides that can help professionals like Sarah make informed decisions.

In the following sections, we’ll explore the 12 Core Principles of AI Governance, illustrating how they can be instrumental in resolving the complex dilemmas that punctuate the AI landscape.

1. Accountability

AI actors should be accountable for the proper functioning of AI systems and for the respect of AI ethics and principles, based on their roles, the context, and consistent with the state of the art.

Accountability means that there should be mechanisms in place to identify and mitigate biases, errors, and unintended consequences, and ensure that the legal and ethical responsibilities are clearly defined and upheld.

Accountability aims to answer the question: Who should be held accountable for the good (or bad) decisions that AI systems make?

2. Auditability

AI systems should allow interested third parties to probe, understand, and review the behaviour of the algorithm through the disclosure of information that enables monitoring, checking, or criticism.

Auditability means that AI systems must be designed and operated in a way that allows their decisions, data, and operations to be inspected, evaluated, and verified by independent third parties.

Auditability aims to answer the question: How can we ensure that AI systems are operating as intended and adhering to legal and ethical guidelines?
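One way to make decisions inspectable after the fact is a tamper-evident decision log. The sketch below is only an illustration, not a method prescribed by any of the frameworks referenced in this article: the names `append_entry` and `verify_log` and the record fields are hypothetical. Each entry stores the hash of the previous entry, so an auditor can detect whether a past record was altered.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash for the first entry (assumption)

def _entry_hash(record, prev_hash):
    """Hash a record together with the hash of the entry before it."""
    payload = json.dumps({"record": record, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_entry(log, record):
    """Append a decision record to the log, chaining it to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    entry = {
        "record": record,
        "prev_hash": prev_hash,
        "hash": _entry_hash(record, prev_hash),
    }
    log.append(entry)
    return entry

def verify_log(log):
    """Return True only if every entry still matches its stored hash chain."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if _entry_hash(entry["record"], prev_hash) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True

audit_log = []
append_entry(audit_log, {"input_id": 1, "decision": "approve"})
append_entry(audit_log, {"input_id": 2, "decision": "deny"})
print(verify_log(audit_log))
```

A real audit trail would also need access controls and secure storage; the hash chain only shows that edits to past records become detectable.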

3. Explainability

AI systems should be designed so that we can assess the factors that led to the AI system’s decision, its overall behaviour, outcomes, and implications.

Explainability means that the internal mechanisms of AI systems and the relationships between input data and decisions should be understandable.

Explainability aims to answer the question(s): What are the factors influencing the AI system’s decision, and how are they utilized in the decision-making process?
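For simple models, this question can be answered directly. As a minimal sketch, assume a hypothetical linear scoring model whose weights and feature names are made up for illustration: each feature's contribution to the score is its weight multiplied by its value, so the decision can be broken down factor by factor.

```python
def explain_linear(weights, bias, features):
    """Return the model score and each feature's contribution to it."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    return score, contributions

# Hypothetical weights and inputs, chosen only for illustration.
weights = {"income": 0.5, "debt": -0.8}
features = {"income": 4.0, "debt": 2.0}

score, contributions = explain_linear(weights, bias=0.1, features=features)

# List the factors from most to least influential.
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name}: {value:+.2f}")
print(f"score: {score:.2f}")
```

More complex models do not decompose this cleanly, which is why explainability is treated as a design goal rather than something that comes for free.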

4. Fairness

AI systems should be designed to treat all individuals and groups fairly and not discriminate against specific cohorts.

Fairness means designing and operating AI systems in a way that is impartial, just, and equitable, ensuring that decisions made do not favor one group over another due to bias, prejudices, or stereotypes.

Fairness aims to answer the question: How are the AI systems designed to be fair and impartial to all individuals and groups?
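Fairness can be measured in many ways, and the referenced frameworks do not mandate a single metric. As one hedged illustration, the sketch below computes the gap in positive-decision rates between groups (often called the demographic parity difference). The function names and the toy data are assumptions made for this example.

```python
from collections import defaultdict

def selection_rates(groups, decisions):
    """Return the share of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}

def demographic_parity_gap(groups, decisions):
    """Return the difference between the highest and lowest selection rate."""
    rates = selection_rates(groups, decisions)
    return max(rates.values()) - min(rates.values())

# Toy data: group membership and the system's decision (1 = approved).
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
decisions = [1, 1, 1, 0, 1, 0, 0, 0]

gap = demographic_parity_gap(groups, decisions)
print(selection_rates(groups, decisions))
print(gap)  # 0.5, since group A is approved 75% of the time and group B 25%
```

A gap of zero means every group is approved at the same rate. Whether that is the right notion of fairness depends on the context, and other metrics can disagree with it.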

5. Robustness

AI systems should be resilient against tampering and manipulation, and should function reliably and effectively under unforeseen circumstances.

Robustness means that AI systems should be constructed to withstand tampering and manipulation, ensuring they continue to operate reliably and effectively even under unexpected and challenging conditions.

Robustness aims to answer the question: How resilient is the AI system to various types of operational and environmental changes and challenges?

6. Security

AI systems and related infrastructure should be secure against attacks and be able to maintain confidentiality, integrity, and availability.

Security means that the AI systems and their supporting infrastructures are fortified against various forms of attacks, ensuring the confidentiality, integrity, and availability of data and systems.

Security aims to answer the question: How well can we protect systems and infrastructures so that they withstand attacks and maintain confidentiality, integrity, and availability?

7. Safety

AI systems must be designed to be safe and not cause (physical) harm to humans.

Safety means that AI systems should be designed with significant importance placed on human well-being, ensuring they do not pose risks or cause physical harm to individuals.

Safety aims to answer the question: How safe are the AI systems, and what risks do they pose?

8. Transparency

AI systems and their operators should ensure that the processes, decisions, and impacts of AI applications are clear, understandable, and accessible to affected parties and stakeholders.

Transparency means that there is openness and clarity in sharing information about the AI system’s design, operation, and decision-making processes.

Transparency aims to answer the question: Is clear and accessible information about the AI system available to all stakeholders?

9. Reproducibility

AI results should be reproducible; others should be able to replicate outcomes given the same inputs and conditions.

Reproducibility means the AI’s decisions and outputs can be consistently replicated using the same data and conditions.

Reproducibility aims to answer the question: Can the AI system’s decisions be reproduced under the same conditions?
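In practice, one common source of irreproducible results is uncontrolled randomness. The sketch below is a minimal illustration using Python's standard `random` module; the function name `sample_scores` and the seed values are assumptions. It shows that fixing the seed of a dedicated random generator makes a stochastic step repeatable.

```python
import random

def sample_scores(seed, n=5):
    """Draw n pseudo-random scores from a generator seeded with `seed`."""
    rng = random.Random(seed)  # dedicated generator, independent of global state
    return [rng.random() for _ in range(n)]

first_run = sample_scores(seed=42)
second_run = sample_scores(seed=42)
different_seed = sample_scores(seed=7)

print(first_run == second_run)      # same seed, same sequence
print(first_run == different_seed)  # a different seed gives a different sequence
```

Seeding is only one ingredient. Reproducing a full AI pipeline also typically requires fixing the data, code version, and software environment.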

10. Human Agency and Oversight

AI systems should support human autonomy and decision-making; humans should have the ability to intervene, oversee, and take control of AI systems.

Human Agency and Oversight means that AI should not undermine human autonomy and capacity for decision-making, and humans should remain in control.

Human Agency and Oversight aims to answer the question: To what extent do humans have control over and the ability to intervene in the AI system’s decisions?
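A simple and widely used pattern for keeping humans in control is to let the system act only when it is confident, and to defer to a person otherwise. The sketch below is an illustration under stated assumptions: the `Decision` class, the `route` function, and the 0.9 threshold are all hypothetical choices, not values taken from any framework.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    label: str
    confidence: float
    needs_human_review: bool

def route(label, confidence, threshold=0.9):
    """Flag a prediction for human review when confidence is below the threshold."""
    return Decision(
        label=label,
        confidence=confidence,
        needs_human_review=confidence < threshold,
    )

# Hypothetical model outputs: (predicted label, confidence).
predictions = [("approve", 0.97), ("deny", 0.62), ("approve", 0.90)]

for label, confidence in predictions:
    decision = route(label, confidence)
    handler = "human reviewer" if decision.needs_human_review else "automated"
    print(f"{decision.label} ({decision.confidence:.2f}) -> {handler}")
```

The threshold is a policy choice rather than a technical one: lowering it automates more decisions, while raising it sends more cases to people.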

11. Privacy and Data Governance

AI systems should respect privacy, data protection, and security norms, ensuring individuals’ data are handled with utmost integrity.

Privacy and Data Governance means that personal data is protected, and systems are designed and operated in ways that respect individual privacy.

Privacy and Data Governance aims to answer the question: How does the AI system ensure the protection of individual privacy and data?

12. Inclusive Growth, Sustainable Development, and Well-being

AI systems should be designed to foster universal prosperity, equity, and environmental health, ensuring benefits for all individuals, societies, and generations while protecting ecological balance.

Inclusive growth, sustainable development, and well-being means that AI systems should be designed and deployed to benefit all individuals and communities, promoting equity, sustainability, and overall well-being.

Inclusive growth, sustainable development, and well-being aims to answer the question: How does the AI system contribute to the equitable economic growth, environmental sustainability, and well-being of individuals and communities?

Utilizing AI Governance Principles

Remember Sarah? She is the seasoned AI developer who needs to choose between two algorithms, one prioritizing efficiency and the other privacy.

If Sarah were aware of the core principles of AI Governance, she would have chosen the algorithm that protects the users’ privacy, in accordance with the Privacy and Data Governance principle.

Of course, this scenario is a straightforward instance where the core AI Governance principles are not in conflict with each other. In reality, principles often intersect and conflict.

The conflicts among AI Governance principles aren’t a flaw but a feature, compelling us to scrutinize, debate, and refine the role of AI in our society continually. It is in this dynamic space, where principles meet practice, that the true value of AI Governance is revealed.

Conclusion: The Importance of Principles

In the ongoing development of AI, these 12 Core AI Governance Principles serve as foundational elements, providing direction and clarity. They help ensure that AI is developed and utilized for the benefit of all, fostering innovation and improving human well-being while balancing ethical demands.

In upcoming pieces, we will dive deep into each core principle, bringing real-world case studies to life and evaluating how different organizations follow (or violate) the core AI Governance principles.

I hope you found this foundational piece insightful.

Cheers!

Acknowledgement & References

- Singapore Model AI Governance Framework 2.0; AI Verify Foundation

- OECD AI Principles

- European Commission, “Ethics Guidelines for Trustworthy AI,” Digital Strategy

- IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems

- UNESCO Recommendation on the Ethics of Artificial Intelligence

*Other principles such as inclusivity, accuracy, progressiveness, and human centricity & well-being are not included as they were not commonly established among various sources.

Thank you for journeying with me through my introduction to “The AI Governance Journal”. As we delve deeper into the realms of AI governance, I invite you to join the conversation, challenge the status quo, and champion responsible AI. For a holistic understanding, follow me for more insightful AI Governance pieces. Stay curious and stay informed.
