
Last Updated on July 25, 2023 by Editorial Team

Author(s): Rashmi Margani

Originally published on Towards AI.

Blending NB And SVM | Towards AI

Naive Bayes (NB)-Support Vector Machine (SVM): A Hands-On Guide to State-of-the-Art Results Using Fast.ai

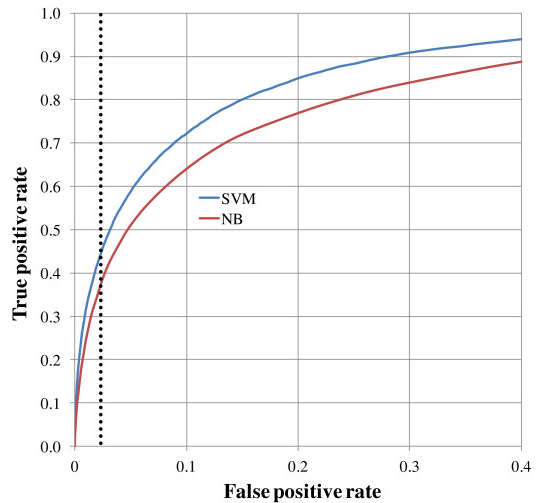

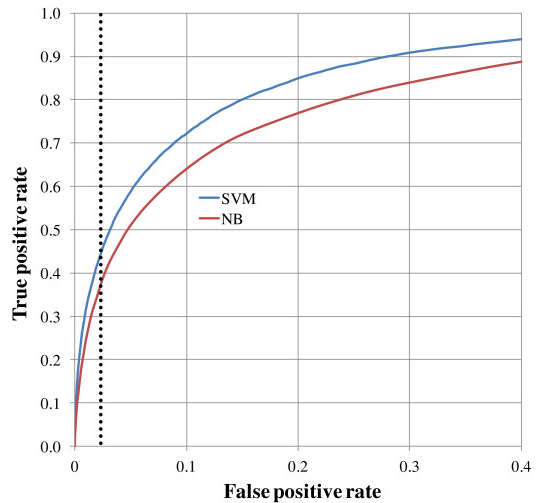

SVM with NB features: a state-of-the-art model variant

Before getting into the NB and SVM model variants, let's discuss when NB performs better than SVM, which explains the motivation for NBSVM.

NB and SVM each have their own options, including the choice of kernel function, and both are sensitive to parameter optimization (different parameter choices can significantly change their output). So if you have a result showing that NB performs better than SVM, this is only true for the selected parameters; with another parameter selection, you might find that SVM performs better.

Variants of NB and SVM are often used as baseline methods for text classification, but their performance varies greatly depending on the model variant, the features used and the task/dataset. Based on these observations, you can identify simple NB and SVM variants that outperform most published results on text classification datasets, sometimes reaching a new state-of-the-art performance level.

Now let's look at the math behind NBSVM and how it is derived.

Let $f^{(i)} \in \mathbb{R}^{|V|}$ be the feature count vector for training case $i$ with label $y^{(i)} \in \{-1, 1\}$. $V$ is the set of features, and $f^{(i)}_j$ represents the number of occurrences of feature $V_j$ in training case $i$.

Define the count vectors as $p = \alpha + \sum_{i: y^{(i)} = 1} f^{(i)}$ and $q = \alpha + \sum_{i: y^{(i)} = -1} f^{(i)}$ for smoothing parameter $\alpha$.

The log-count ratio is:

$$r = \log\left(\frac{p / \lVert p \rVert_1}{q / \lVert q \rVert_1}\right)$$
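To make the formula concrete, here is a minimal NumPy sketch that computes $p$, $q$ and $r$ from a small count matrix. The toy data, the helper name log_count_ratio and the choice $\alpha = 1$ are assumptions for illustration only.

```python
import numpy as np

def log_count_ratio(F, y, alpha=1.0):
    """Log-count ratio r for a (documents x |V|) count matrix F and labels y in {-1, 1}."""
    p = alpha + F[y == 1].sum(axis=0)    # smoothed feature counts over positive cases
    q = alpha + F[y == -1].sum(axis=0)   # smoothed feature counts over negative cases
    # Normalize each count vector by its L1 norm, then take the log of the ratio
    return np.log((p / np.linalg.norm(p, ord=1)) / (q / np.linalg.norm(q, ord=1)))

# Hypothetical toy data: 4 documents, 3 features
F = np.array([[2, 0, 1],
              [1, 0, 0],
              [0, 3, 1],
              [0, 1, 0]])
y = np.array([1, 1, -1, -1])

r = log_count_ratio(F, y)
print(r)
```

A positive entry of r means the feature is relatively more frequent in positive cases; a negative entry means it leans negative.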

Multinomial Naive Bayes (MNB)

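In MNB, $x^{(k)} = f^{(k)}$, $w = r$ and $b = \log(N_+/N_-)$, where $N_+$ and $N_-$ are the numbers of positive and negative training cases. MNB is used here as a linear classifier: the prediction for test case $k$ is $y^{(k)} = \operatorname{sign}(w^\top x^{(k)} + b)$.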

However, binarizing $f^{(k)}$ has been found to work better. We take $x^{(k)} = \hat f^{(k)} = \mathbb{1}\{f^{(k)} > 0\}$, where $\mathbb{1}$ is the indicator function; $\hat p$, $\hat q$ and $\hat r$ are calculated using $\hat f^{(i)}$ instead of $f^{(i)}$.
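The following sketch binarizes the counts, computes $\hat r$, and classifies with $w = \hat r$ and $b = \log(N_+/N_-)$ using the sign rule. The toy data and names are hypothetical, and $\alpha = 1$ is assumed.

```python
import numpy as np

# Hypothetical toy counts (4 documents x 3 features) and labels in {-1, 1}
F = np.array([[2, 0, 1],
              [1, 0, 0],
              [0, 3, 1],
              [0, 1, 0]])
y = np.array([1, 1, -1, -1])
alpha = 1.0

F_hat = (F > 0).astype(float)              # binarize: 1{f > 0}
p_hat = alpha + F_hat[y == 1].sum(axis=0)
q_hat = alpha + F_hat[y == -1].sum(axis=0)
r_hat = np.log((p_hat / p_hat.sum()) / (q_hat / q_hat.sum()))

# MNB as a linear classifier: w = r_hat, b = log(N+ / N-)
w = r_hat
b = np.log((y == 1).sum() / (y == -1).sum())

def predict(f):
    """Return sign(w^T x + b), where x = 1{f > 0} is the binarized count vector f."""
    x = (np.asarray(f) > 0).astype(float)
    return int(np.sign(w @ x + b))

print(predict([5, 0, 0]), predict([0, 7, 1]))
```

Because the input is binarized, predict([0, 7, 1]) treats seven occurrences of feature 1 exactly like one.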

Support Vector Machine (SVM)

For the SVM, $x^{(k)} = \hat f^{(k)}$, and $w$, $b$ are obtained by minimizing

$$w^\top w + C \sum_i \max\left(0,\ 1 - y^{(i)}\left(w^\top \hat f^{(i)} + b\right)\right)^2$$

The L2-regularized L2-loss SVM shown here was found to work best, while the L1-loss SVM was less stable.
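As a rough illustration, the objective above can be evaluated directly with NumPy. The data and weights below are made up, and the sketch only computes the loss rather than minimizing it.

```python
import numpy as np

def l2_svm_objective(w, b, X, y, C=1.0):
    """w^T w + C * sum_i max(0, 1 - y_i (w^T x_i + b))^2  (L2-regularized, L2-loss)."""
    margins = y * (X @ w + b)
    hinge = np.maximum(0.0, 1.0 - margins)   # zero for cases beyond the margin
    return w @ w + C * np.sum(hinge ** 2)    # squared hinge = "L2 loss"

# Hypothetical binarized features and labels in {-1, 1}
X = np.array([[1., 0., 1.],
              [1., 0., 0.],
              [0., 1., 1.],
              [0., 1., 0.]])
y = np.array([1, 1, -1, -1])

w = np.array([1.0, -1.0, 0.0])
obj = l2_svm_objective(w, 0.0, X, y)         # every margin is exactly 1, so only w^T w remains
obj_zero = l2_svm_objective(np.zeros(3), 0.0, X, y)
```

For actual training, scikit-learn's LinearSVC with penalty='l2' and loss='squared_hinge' (its defaults) solves this kind of L2-regularized L2-loss problem, with a factor of 1/2 on $w^\top w$.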

SVM with NB features (NBSVM)

It is otherwise identical to the SVM, except that it uses $x^{(k)} = \tilde f^{(k)}$, where $\tilde f^{(k)} = \hat r \circ \hat f^{(k)}$ is the elementwise product. While this does very well for long documents, an interpolation between MNB and SVM was found to perform excellently for all documents, and results are reported using this model:
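A minimal sketch of the NBSVM input, assuming made-up binarized features and ratios: every document vector is multiplied elementwise by $\hat r$, which NumPy broadcasting does row by row.

```python
import numpy as np

# Hypothetical binarized features (rows = documents) and binarized log-count ratios
F_hat = np.array([[1., 0., 1.],
                  [0., 1., 1.]])
r_hat = np.array([1.1, -1.1, 0.0])

# NBSVM input: r_hat * f_hat for every document (broadcast over the rows)
F_tilde = F_hat * r_hat
print(F_tilde)
```

The sparse-matrix version later in this article does the same thing with x.multiply(r).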

$$w' = (1 - \beta)\,\bar w + \beta w$$

Here $\bar w = \lVert w \rVert_1 / |V|$ is the mean magnitude of $w$, and $\beta \in [0, 1]$ is the interpolation parameter. This interpolation can be seen as a form of regularization: trust NB unless the SVM is very confident.
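The interpolation is a one-liner. In this sketch, beta=0.25 is only an illustrative default, not a value taken from this article, and the function name is hypothetical.

```python
import numpy as np

def interpolate_weights(w, beta=0.25):
    """w' = (1 - beta) * w_bar + beta * w, with the scalar w_bar = ||w||_1 / |V|."""
    w = np.asarray(w, dtype=float)
    w_bar = np.abs(w).sum() / w.size   # mean magnitude of w
    return (1.0 - beta) * w_bar + beta * w

print(interpolate_weights([2, -2, 0, 4]))
```

With beta=0 every weight collapses to the mean magnitude $\bar w$; with beta=1 the SVM weights are returned unchanged.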

Now let's get into the implementation. Here I use the IMDB dataset and fast.ai's NBSVM to classify movie reviews into positive and negative classes.

Step 1:

Tokenizing and term-document matrix creation

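```python
# Assumes the fastai 0.7 course library, whose fastai.nlp module provides
# texts_labels_from_folders, tokenize and TextClassifierData
from fastai.nlp import *
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.linear_model import LogisticRegression
import numpy as np

PATH = 'data/aclImdb/'
names = ['neg', 'pos']

%ls {PATH}
# aclImdb_v1.tar.gz  imdbEr.txt  imdb.vocab  models/  README  test/  tmp/  train/
%ls {PATH}train
# aclImdb/  all/  all_val/  labeledBow.feat  neg/  pos/  tmp/  unsup/
# unsupBow.feat  urls_neg.txt  urls_pos.txt  urls_unsup.txt
%ls {PATH}train/pos | head

# Review texts and labels (the label is the folder's index in names: 0 = neg, 1 = pos)
trn, trn_y = texts_labels_from_folders(f'{PATH}train', names)
val, val_y = texts_labels_from_folders(f'{PATH}test', names)
```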

Here is the text of the first review, followed by its label:

```python
trn[0]
# " A formal orchestra audience is turned into an insane, violent mob by the crazy chantings of it's singers. Unfortunately it stays absurd the WHOLE time with no general narrative eventually making it just too off putting. Even those from the era should be turned off. The cryptic dialogue would make Shakespeare seem easy to a third grader. On a technical level it's better than you might think with some good cinematography by future great Vilmos Zsigmond. Future stars Sally Kirkland and Frederic Forrest can be seen briefly."

trn_y[0]
# 0
```

Step 2:

CountVectorizer converts a collection of text documents to a matrix of token counts (part of sklearn.feature_extraction.text).

```python
# tokenize is the tokenizer from fastai.nlp (fastai 0.7)
veczr = CountVectorizer(tokenizer=tokenize)
```

fit_transform(trn) finds the vocabulary in the training set and also transforms the training set into a term-document matrix. Since the same transformation has to be applied to the validation set, the second line uses just the method transform(val). trn_term_doc and val_term_doc are sparse matrices: trn_term_doc[i] represents training document i and contains, for each word in the vocabulary, the number of times that word occurs in the document.

```python
trn_term_doc = veczr.fit_transform(trn)
val_term_doc = veczr.transform(val)

trn_term_doc
# <25000x75132 sparse matrix of type '<class 'numpy.int64'>'
#     with 3749745 stored elements in Compressed Sparse Row format>

trn_term_doc[0]
# <1x75132 sparse matrix of type '<class 'numpy.int64'>'
#     with 93 stored elements in Compressed Sparse Row format>

# get_feature_names() was removed in scikit-learn 1.2; newer versions use get_feature_names_out()
vocab = veczr.get_feature_names(); vocab[5000:5005]
# ['aussie', 'aussies', 'austen', 'austeniana', 'austens']

w0 = set([o.lower() for o in trn[0].split(' ')])
len(w0)
# 92

veczr.vocabulary_['absurd']
# 1297
```
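To see why the validation set only gets transform, here is a simplified pure-Python sketch of what fitting and transforming do. It is not scikit-learn's implementation: it assumes plain whitespace tokenization instead of fastai's tokenize, uses hypothetical helper names, and returns dense lists instead of a sparse matrix. The vocabulary is sorted, which mirrors the alphabetical order visible in vocab[5000:5005] above.

```python
from collections import Counter

def fit_vocabulary(docs):
    """Like fit: map every token seen in the training docs to a column index."""
    tokens = sorted({tok for doc in docs for tok in doc.lower().split()})
    return {tok: i for i, tok in enumerate(tokens)}

def transform(docs, vocab):
    """Like transform: count known tokens per document; unknown tokens are ignored."""
    rows = []
    for doc in docs:
        counts = Counter(tok for tok in doc.lower().split() if tok in vocab)
        row = [0] * len(vocab)
        for tok, c in counts.items():
            row[vocab[tok]] = c
        rows.append(row)
    return rows

train_docs = ["great movie great cast", "boring movie"]
val_docs = ["great plot", "boring boring"]

vocab = fit_vocabulary(train_docs)          # learned from the training data only
trn_matrix = transform(train_docs, vocab)
val_matrix = transform(val_docs, vocab)     # 'plot' was never seen, so it is dropped
```

Words that appear only in the validation set (here 'plot') get no column, so the validation matrix always has the same columns as the training matrix.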

Step 3:

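Now let's define Naive Bayes.

We define the log-count ratio $r$ for each word $f$ as:

$$r = \log \frac{\text{ratio of feature } f \text{ in positive documents}}{\text{ratio of feature } f \text{ in negative documents}}$$

where the ratio of feature $f$ in positive documents is the number of times $f$ appears in positive documents divided by the number of positive documents (pr below adds 1 to both as smoothing; once the features are binarized, the numerator becomes the number of positive documents containing $f$).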

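```python
def pr(y_i):
    # Smoothed ratio of every feature in class y_i; uses the global x and y set below
    p = x[y==y_i].sum(0)
    return (p+1) / ((y==y_i).sum()+1)

x = trn_term_doc
y = trn_y

r = np.log(pr(1)/pr(0))                     # log-count ratio for every word
b = np.log((y==1).mean() / (y==0).mean())   # log ratio of the class priors
```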

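Here is the Naive Bayes prediction on the validation set: a review is classified as positive when the dot product of its word counts with r, plus b, is greater than 0.

```python
pre_preds = val_term_doc @ r.T + b
preds = pre_preds.T > 0
(preds == val_y).mean()
# 0.80691999999999997
```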
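Here is the same pipeline on a tiny made-up dense matrix, so each intermediate value can be checked by hand. It reuses the pr() logic above; note that r is a 1-D array here, whereas with the sparse matrices above it is a 1 x |V| matrix, hence the .T in the article's code.

```python
import numpy as np

# Hypothetical toy term-document matrix: 4 documents x 3 words, labels 1 = pos, 0 = neg
x = np.array([[2, 0, 1],
              [1, 0, 0],
              [0, 3, 1],
              [0, 1, 0]])
y = np.array([1, 1, 0, 0])

def pr(y_i):
    # Same smoothing as the article: (feature total + 1) / (number of docs in class + 1)
    p = x[y == y_i].sum(0)
    return (p + 1) / ((y == y_i).sum() + 1)

r = np.log(pr(1) / pr(0))
b = np.log((y == 1).mean() / (y == 0).mean())   # 0 here, because the classes are balanced

new_docs = np.array([[1, 0, 0],    # contains word 0 once
                     [0, 2, 0]])   # contains word 1 twice
preds = (new_docs @ r + b) > 0
print(preds)
```

Here pr(1) = [4/3, 1/3, 2/3] and pr(0) = [1/3, 5/3, 2/3], so r = [log 4, log 0.2, 0]: word 0 pushes towards positive and word 1 towards negative.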

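Binarized Naive Bayes only records whether each word appears in a review (via .sign()), not how many times:

```python
x = trn_term_doc.sign()
r = np.log(pr(1)/pr(0))
pre_preds = val_term_doc.sign() @ r.T + b
preds = pre_preds.T > 0
(preds == val_y).mean()
# 0.83016000000000001
```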

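Step 4: Logistic regression

Here we fit logistic regression where the features are the unigrams. C=1e8 means almost no regularization.

```python
# With scikit-learn >= 0.22 the default solver (lbfgs) does not support dual=True,
# so liblinear is requested explicitly.
# Note: x is still trn_term_doc.sign() from the binarized NB step, so this model
# is trained on binarized features but evaluated on raw counts.
m = LogisticRegression(C=1e8, dual=True, solver='liblinear')
m.fit(x, y)
preds = m.predict(val_term_doc)
(preds == val_y).mean()
# 0.85504000000000002

# Binarized features for both training and validation
m = LogisticRegression(C=1e8, dual=True, solver='liblinear')
m.fit(trn_term_doc.sign(), y)
preds = m.predict(val_term_doc.sign())
(preds == val_y).mean()
# 0.85487999999999997
```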

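The regularized version uses C=0.1, i.e. a much stronger L2 penalty:

```python
# solver='liblinear' is needed for dual=True in recent scikit-learn versions;
# x is still trn_term_doc.sign(), so this fit uses binarized training features
m = LogisticRegression(C=0.1, dual=True, solver='liblinear')
m.fit(x, y)
preds = m.predict(val_term_doc)
(preds == val_y).mean()
# 0.88275999999999999

m = LogisticRegression(C=0.1, dual=True, solver='liblinear')
m.fit(trn_term_doc.sign(), y)
preds = m.predict(val_term_doc.sign())
(preds == val_y).mean()
# 0.88404000000000005
```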
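In scikit-learn, C is the inverse of the regularization strength, so C=1e8 is nearly unregularized and C=0.1 is strongly regularized. The sketch below illustrates that effect with plain gradient descent on a made-up dataset; fit_logreg is a hypothetical helper, it is not the liblinear solver that dual=True uses, and it omits the intercept.

```python
import numpy as np

def fit_logreg(X, y, C, lr=0.01, steps=2000):
    """Gradient descent on (1/(2C)) * w.w + sum_i log(1 + exp(-y_i * w.x_i)), y in {-1, 1}.

    Dividing the L2 objective documented by scikit-learn, 0.5 * w.w + C * sum(log-loss),
    by C gives the same minimizer.
    """
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        margins = y * (X @ w)
        # d/dw log(1 + exp(-m_i)) = -y_i * x_i * sigmoid(-m_i), and sigmoid(-m) = 1 / (1 + exp(m))
        grad_loss = -(X * (y / (1.0 + np.exp(margins)))[:, None]).sum(axis=0)
        w -= lr * (w / C + grad_loss)
    return w

# Hypothetical binarized features and labels in {-1, 1}
X = np.array([[1., 0., 1.],
              [1., 0., 0.],
              [0., 1., 1.],
              [0., 1., 0.]])
y = np.array([1, 1, -1, -1])

w_weak = fit_logreg(X, y, C=1e8)     # almost unregularized, like C=1e8 above
w_strong = fit_logreg(X, y, C=0.1)   # strongly regularized, like C=0.1 above
print(np.linalg.norm(w_weak), np.linalg.norm(w_strong))
```

w_strong ends up with a much smaller norm than w_weak while still separating this toy data; that shrinkage is the kind of effect behind the better validation accuracy of the C=0.1 models above.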

Step 5: Trigram with NB features

Our next model is a version of logistic regression with Naive Bayes features. For every document, we compute binarized features as described above, but this time using bigrams and trigrams too. Each feature is then scaled by its log-count ratio, and a logistic regression model is trained to predict sentiment.

```python
# Unigrams, bigrams and trigrams, keeping the 800,000 most frequent n-grams
veczr = CountVectorizer(ngram_range=(1,3), tokenizer=tokenize, max_features=800000)
trn_term_doc = veczr.fit_transform(trn)
val_term_doc = veczr.transform(val)
trn_term_doc.shape
# (25000, 800000)

vocab = veczr.get_feature_names()   # get_feature_names_out() in scikit-learn >= 1.2
vocab[200000:200005]
# ['by vast', 'by vengeance', 'by vengeance .', 'by vera', 'by vera miles']

# Binarized features; pr() picks up these new global x and y
y = trn_y
x = trn_term_doc.sign()
val_x = val_term_doc.sign()

r = np.log(pr(1) / pr(0))
b = np.log((y==1).mean() / (y==0).mean())
```
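The ngram_range=(1,3) setting means each document contributes its unigrams, bigrams and trigrams. Here is a minimal sketch of that extraction; the helper name ngrams is hypothetical and CountVectorizer's own implementation differs, but it produces space-joined features like the 'by vera miles' entry in the vocabulary above.

```python
def ngrams(tokens, n_min=1, n_max=3):
    """All contiguous n-grams of length n_min..n_max, joined with spaces."""
    out = []
    for n in range(n_min, n_max + 1):
        for i in range(len(tokens) - n + 1):
            out.append(" ".join(tokens[i:i + n]))
    return out

feats = ngrams(["by", "vera", "miles"])
print(feats)
```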

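Step 6: Fit regularized logistic regression where the features are the binarized n-grams (up to trigrams).

```python
m = LogisticRegression(C=0.1, dual=True, solver='liblinear')
m.fit(x, y)
preds = m.predict(val_x)
(preds.T == val_y).mean()
# 0.90500000000000003

# exp(r) = pr(1)/pr(0): values above 1 mean the n-gram is relatively
# more frequent in positive reviews
np.exp(r)
# matrix([[ 0.94678,  0.85129,  0.78049, ...,  3.     ,  0.5    ,  0.5    ]])
```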

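Then fit regularized logistic regression where each binarized n-gram feature is multiplied by its log-count ratio (the NB features):

```python
x_nb = x.multiply(r)           # scale each binarized feature by its log-count ratio
m = LogisticRegression(dual=True, C=0.1, solver='liblinear')
m.fit(x_nb, y)

val_x_nb = val_x.multiply(r)   # reuse the ratios computed on the training set
preds = m.predict(val_x_nb)
(preds.T == val_y).mean()
# 0.91768000000000005
```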

Step 7: fast.ai NBSVM

```python
sl = 2000
# Build the model data from the bag-of-words matrices (fastai 0.7)
md = TextClassifierData.from_bow(trn_term_doc, trn_y, val_term_doc, val_y, sl)
learner = md.dotprod_nb_learner()

# Each output row: epoch, training loss, validation loss, accuracy
learner.fit(0.02, 1, wds=1e-6, cycle_len=1)
# [ 0. 0.0251 0.12003 0.91552]

learner.fit(0.02, 2, wds=1e-6, cycle_len=1)
# [ 0. 0.02014 0.11387 0.92012]
# [ 1. 0.01275 0.11149 0.92124]

learner.fit(0.02, 2, wds=1e-6, cycle_len=1)
# [ 0. 0.01681 0.11089 0.92129]
# [ 1. 0.00949 0.10951 0.92223]
```

This NBSVM reaches about 0.92 validation accuracy (0.92223 after the final epoch above).

The same NBSVM approach can be used for other NLP problems, but you need to experiment with different feature engineering to get state-of-the-art results; with it, I reached the top 8% in the Kaggle Toxic Comment Classification challenge.

Thanks for reading; I hope you enjoyed it!


Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.