MLOps — Ruling Fundamentals and few Practical Use Cases

Last Updated on May 24, 2022 by Editorial Team

Author(s): Supriya Ghosh



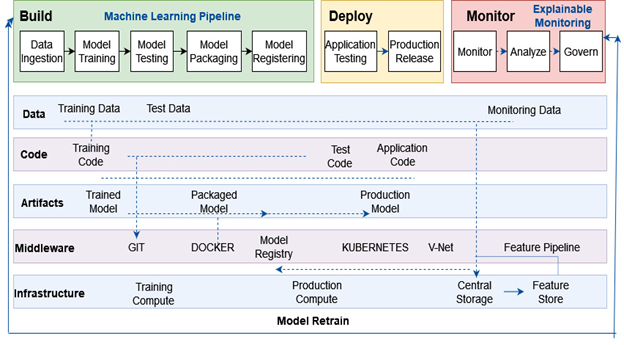

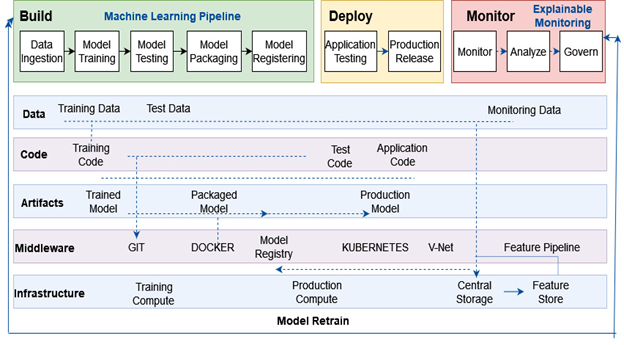

MLOps Workflow

Before jumping into the practical use cases of MLOps, let me lay out some of its foundations.

Why did MLOps emerge?

Engineers and researchers across the globe were developing many sophisticated models by combining machine learning and artificial intelligence techniques, but deploying these models and getting the most out of them at scale was becoming increasingly complex and challenging. MLOps emerged as a response to this problem.

MLOps is a set of practices for managing the deployment of deep learning and machine learning models in large-scale production environments. It ensures a quick turnaround from development to deployment, and to redeployment whenever the business requires it.

As its popularity grows, it is attracting the attention of more and more organizations looking to leverage the benefits of machine learning in their operations.

What is MLOps?

MLOps is often described as the child of DevOps, married to Operations with the full support of data engineering. Hence the term:

MLOps = ML (Machine Learning) + Ops (Operations)

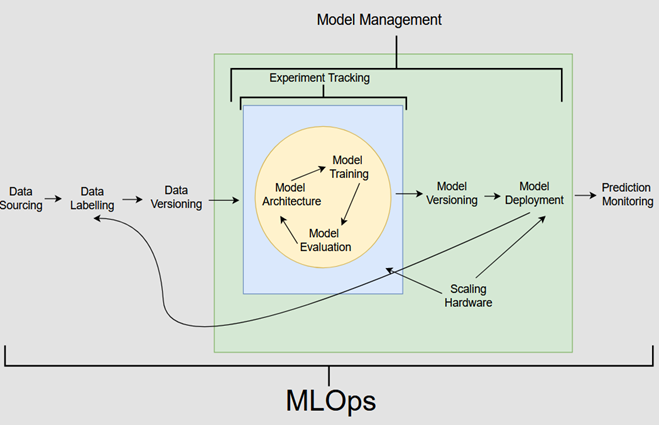

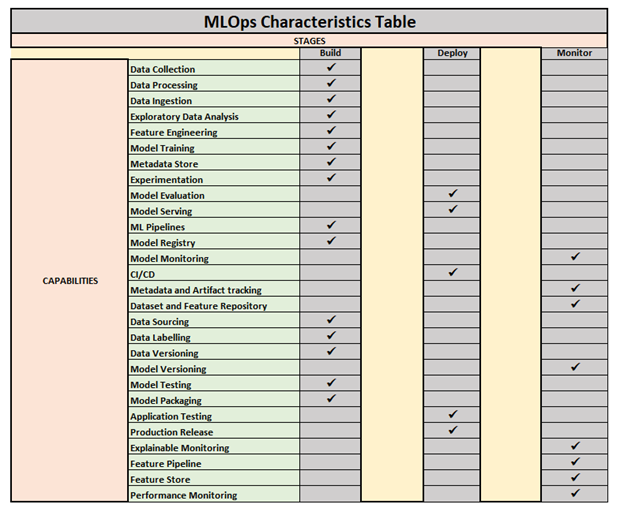

MLOps brings model development, deployment, monitoring and quality control, model governance, model retraining, and pipelines together on a single platform and automates them.

Put another way, it brings together data scientists, machine learning engineers, developers, and operations professionals, and makes their collaboration and communication easier, so that they can efficiently automate and manage ML and AI models and their lifecycle with built-in monitoring and governance.

What does MLOps do?

To repeat, machine learning models need to be capable of running in production, where they improve efficiency and decision making in business applications. Organizations therefore employ MLOps to scale the number of ML applications by automating, validating, and testing their workflows, creating a repeatable process for managing ML in a dynamic environment.

Besides, MLOps empowers engineers and developers to take full responsibility and ownership of machine learning in production, while freeing up data scientists to carry out research and handle other relevant tasks.

Just like traditional DevOps, whose goal is to streamline the delivery of software into production, MLOps aims to streamline the delivery of models and software, with the added twist that the software makes predictions based on patterns learned from data.

Hence, MLOps encourages automation and continuous deployment just like DevOps, and adds ML-specific capabilities such as model validation so that high-quality predictions can be made in production. Without such an efficient deployment pipeline, these solutions are doomed to stay in the research lab and die off before being used by the business.

It gives organizations with ML and AI capabilities a foundation for getting the most value out of their investments in viable solutions.

This is achieved by expediting the continuous production of ML models at scale, reducing the time to deploy such intelligent applications to days or sometimes even hours.

MLOps also injects repeatability and auditability into the deployment pipeline, which is valuable if an ML model underperforms or needs to be audited after deployment. Having a proper deployment pipeline in place makes it easier to regenerate models and retrace steps if necessary.

What should an organization’s MLOps approach be?

1. The first step is to create a collaborative culture with cross-functional teams working to meet their organization’s business goals.

2. It must be supported with the appropriate underlying technology to help team members work together and achieve those goals.

3. Each team member should have a defined role to play in the production pipeline. For example, data scientists prepare data, apply ML algorithms, and tune the models to make them more performant. Developers use those models as part of their applications, and operations ensure that models are approved and monitored in production.

4. Teams should be working toward a common goal that aligns with their organization’s primary objectives.

5. Depending on the organization, the goal could be centered on its specific use case.

6. The goal should not be to optimize a specific engineering metric, but to meet a strategic organizational objective that the MLOps teams are expected to deliver.

7. The ideal platform should support information sharing across teams and enable agile development processes.

8. Finally, the organization’s top management should support these cultural and strategic initiatives with technology that helps MLOps teams easily build and deploy ML and AI-driven applications.

MLOps Use cases

1. Pilot Project/ Research Project

Whenever we are testing a proof of concept or carrying out a research or pilot project for ML, our focus is mainly on data preparation, feature engineering, model prototyping, and validation. But all these tasks are performed in multiple iterations to arrive at a reliable model. Data scientists often want to track and compare these iterations and set up experiments quickly and easily to arrive at a final solution.

Here MLOps helps by providing a pipeline with ML metadata storage and artifact tracking. These make it possible to debug runs, provide traceability, share and track experiment configurations, and manage all related ML artifacts through configuration management and version control systems integrated with the application.
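To make the idea of tracking and comparing iterations more concrete, here is a minimal sketch in Python using only the standard library. The `ExperimentTracker` class, its method names, and the parameter and metric values are hypothetical and chosen purely for illustration; in practice a team would more likely rely on a dedicated experiment tracking tool.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class Run:
    """One training iteration: its configuration, metrics, and a config fingerprint."""
    run_id: int
    params: dict
    metrics: dict
    config_hash: str


@dataclass
class ExperimentTracker:
    """Hypothetical in-memory metadata store for comparing iterations."""
    runs: list = field(default_factory=list)

    def log_run(self, params: dict, metrics: dict) -> Run:
        # Hash the sorted parameters so identical configurations are easy to spot.
        payload = json.dumps(params, sort_keys=True).encode("utf-8")
        config_hash = hashlib.sha256(payload).hexdigest()[:12]
        run = Run(len(self.runs) + 1, dict(params), dict(metrics), config_hash)
        self.runs.append(run)
        return run

    def best_run(self, metric: str) -> Run:
        # Return the run with the highest value for the chosen metric.
        return max(self.runs, key=lambda r: r.metrics[metric])


tracker = ExperimentTracker()
# Made-up values, purely for illustration.
tracker.log_run({"learning_rate": 0.1, "max_depth": 3}, {"val_accuracy": 0.81})
tracker.log_run({"learning_rate": 0.05, "max_depth": 5}, {"val_accuracy": 0.86})
best = tracker.best_run("val_accuracy")
print(best.run_id, best.params, best.config_hash)
```

Storing a fingerprint of the configuration next to each run is one simple way to get traceability: two runs with the same hash used the same parameters, so any difference in their metrics must come from the data or the code.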

2. Speech or Voice Recognition Systems

The MLOps approach can be applied to speech or voice recognition applications.

Generally, a speech recognition application uses context to recognize the emotions and tones in how individuals speak, and a model is trained accordingly. Over time, however, it may degrade, especially when speakers introduce new phrases or change their usual style. This leads to model decay, which the team would otherwise have to catch through continuous manual monitoring, a tiresome and draining task.

MLOps helps by automating the continuous monitoring of the speech recognition model's predictive performance. Whenever performance approaches or falls below a defined threshold, the system triggers an alert, prompting the responsible team to train a new model on fresh data and deploy it in place of the old production model.
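The monitoring loop described here can be sketched roughly as follows. This is an illustrative example only: the `PerformanceMonitor` class, the rolling-window approach, and the threshold, margin, and window values are all assumptions, not something prescribed by a particular MLOps tool.

```python
from collections import deque


class PerformanceMonitor:
    """Hypothetical monitor: tracks rolling accuracy and flags when it nears a threshold."""

    def __init__(self, threshold, margin=0.0, window=100):
        self.threshold = threshold  # minimum acceptable accuracy
        self.margin = margin        # alert early when accuracy gets this close
        self.outcomes = deque(maxlen=window)  # 1 = correct prediction, 0 = wrong

    def record(self, correct):
        self.outcomes.append(1 if correct else 0)

    def rolling_accuracy(self):
        if not self.outcomes:
            return None  # nothing observed yet
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self):
        # Wait until the window is full so a few early errors do not raise an alert.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() <= self.threshold + self.margin


# Illustrative values: alert when accuracy over the last 5 predictions is at or below 0.75.
monitor = PerformanceMonitor(threshold=0.7, margin=0.05, window=5)
for correct in [True, True, True, True, True]:
    monitor.record(correct)
alert_before = monitor.should_alert()

# Speakers start using new phrases and the model begins to miss.
for correct in [False, False]:
    monitor.record(correct)
alert_after = monitor.should_alert()

print(alert_before, alert_after, monitor.rolling_accuracy())
```

In a real system the alert would notify the responsible team or kick off a retraining job, and the "correct" signal would come from labeled feedback, which usually arrives with some delay.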

3. Packaging Machines/Robots

Often, manufacturing companies employ machines or robots at the end of their assembly line to package their products. These robots use computer vision powered by machine learning to analyze and package products. For example, suppose the ML model is trained to recognize triangular and circular boxes of certain dimensions, and the company decides to introduce new packaging dimensions and shapes to meet future demand. This must be addressed as quickly as possible, before the packaging system starts to fail.

Here MLOps helps engineers and scientists collaborate using continuous integration and continuous deployment (CI/CD) to create and deploy a new ML model in the shortest possible time using a defined ML pipeline, before the new packaging dimensions or shapes are introduced into the assembly line.

4. Recommendation Systems

A recommendation system often relies on batch predictions, as there is no need to score in real time. The scores can be pre-computed and stored for later consumption, so latency is not much of a concern. But since a large amount of data is processed at once, throughput is important.

MLOps supports this with well-established data processing capabilities and ML pipelines, together with a model registry that ensures the batch serving process scores with the latest validated model rather than an older version picked up through human error.
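As a rough sketch of how a registry can guard batch scoring, the example below always resolves the newest version that has been marked as validated. The `ModelRegistry` and `ModelVersion` names, the `validated` flag, and the toy scoring functions are hypothetical and stand in for whatever registry and models a team actually uses.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ModelVersion:
    version: int
    validated: bool                    # set once the version passes validation
    predict: Callable[[float], float]  # toy scoring function for illustration


class ModelRegistry:
    """Hypothetical registry that hands out the newest validated model."""

    def __init__(self):
        self._versions = []

    def register(self, model: ModelVersion) -> None:
        self._versions.append(model)

    def latest_validated(self) -> Optional[ModelVersion]:
        candidates = [m for m in self._versions if m.validated]
        return max(candidates, key=lambda m: m.version) if candidates else None


def batch_score(registry: ModelRegistry, user_features: dict) -> dict:
    # The batch job never picks a version by hand; it asks the registry.
    model = registry.latest_validated()
    if model is None:
        raise RuntimeError("no validated model available")
    return {user: model.predict(x) for user, x in user_features.items()}


registry = ModelRegistry()
registry.register(ModelVersion(1, True, lambda x: x * 1.0))
registry.register(ModelVersion(2, True, lambda x: x * 2.0))
registry.register(ModelVersion(3, False, lambda x: x * 3.0))  # newest, not yet validated

# Pre-compute scores for all users in one pass and store them for later lookup.
scores = batch_score(registry, {"user_a": 1.0, "user_b": 2.5})
print(registry.latest_validated().version, scores)
```

Because the job resolves the model through the registry, neither an outdated version nor an unvalidated one can slip into the scoring run by mistake.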

5. Sudden outliers in the stock market

Take stock trading as an example. Suppose an ML model is trained to make predictions for pharmaceutical products, but only on data where prices were rising. Suddenly, the prices of these products start to follow a downward trend. The model cannot be expected to perform well when it encounters such a sudden downward trend. In this case, the team of developers and data scientists must act immediately to train and redeploy a new ML model.

Here MLOps comes to the rescue, with much of the pipeline for training and deploying a new model already automated. In such a case, engineers and scientists may not even need the assistance of developers to handle the pipeline. They can use the already automated systems to update, train, and deploy a new model immediately and avoid further negative effects.

6. A fraud detection model trained daily or weekly to capture recent fraud patterns

A fraud detection model is a frequently retrained system whose performance depends heavily on changes in the training data. Retraining might happen at fixed intervals (for example, daily or weekly), or it can be triggered whenever new training data becomes available.

Such systems require MLOps with well-set-up ML pipelines that connect multiple steps, such as data extraction, preprocessing, model training, and model evaluation, to ensure that the accuracy of the newly trained model meets business requirements. As the number of trained models grows, model registry, metadata, and artifact tracking become immensely important for keeping track of the training activities and model versions.
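The two ideas in this section, a retraining trigger and a pipeline that only promotes a model if it meets the business requirement, can be sketched as below. Everything here is a simplified assumption: the function names, the one-week and 1000-row trigger values, the made-up transaction data, and the toy threshold "model" are for illustration only.

```python
from datetime import datetime, timedelta


def should_retrain(last_trained, now, new_rows,
                   max_age=timedelta(days=7), min_new_rows=1000):
    """Retrain on a schedule, or as soon as enough new training data has arrived."""
    return (now - last_trained) >= max_age or new_rows >= min_new_rows


def run_pipeline(extract, preprocess, train, evaluate, min_accuracy):
    """Chain the steps and promote the model only if it clears the accuracy bar."""
    data = preprocess(extract())
    model = train(data)
    accuracy = evaluate(model, data)
    return {"model": model, "accuracy": accuracy, "promoted": accuracy >= min_accuracy}


def extract():
    # Made-up (amount, is_fraud) rows standing in for a real data source.
    return [(10, 0), (20, 0), (30, 0), (900, 1), (950, 1), (1000, 1), (25, 0), (980, 1)]


def preprocess(rows):
    # Hold out the last two rows for evaluation.
    return {"train": rows[:6], "test": rows[6:]}


def train(data):
    # Toy "model": a cut-off halfway between the mean legitimate and mean fraud amount.
    legit = [amount for amount, label in data["train"] if label == 0]
    fraud = [amount for amount, label in data["train"] if label == 1]
    return (sum(legit) / len(legit) + sum(fraud) / len(fraud)) / 2


def evaluate(threshold, data):
    hits = sum((amount > threshold) == bool(label) for amount, label in data["test"])
    return hits / len(data["test"])


due = should_retrain(datetime(2022, 5, 1), datetime(2022, 5, 9), new_rows=10)
if due:
    result = run_pipeline(extract, preprocess, train, evaluate, min_accuracy=0.9)
    print(result)
```

The `promoted` flag is the piece that ties the pipeline to business requirements: a freshly trained model that fails the check would simply not replace the one in production.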

7. A promotion model to maximize conversion rate

Such systems often need frequent implementation updates, which might involve changes to the ML framework itself and, in turn, to the model architecture or to a data processing step in the training pipeline. Such changes alter the ML workflow and require controls to ensure that the new code is functional and that the new model performs as intended.

Here MLOps helps with an efficient, robust CI/CD (continuous integration/continuous deployment) process that accelerates the path from ML experimentation to production and reduces the possibility of human error. Because the changes are significant, online experimentation and model evaluation help ensure that the new release performs as expected. Model registry, metadata, and artifact tracking also operationalize and track the frequent implementation updates.
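One way to picture the online experimentation step is a simple promotion check that compares the conversion rate of the current model against a candidate release. The sketch below uses a two-proportion z-test; the function names, the 1.96 cut-off, and the visitor and conversion counts are assumptions made for illustration, and a real experiment would need a properly planned sample size and stopping rule.

```python
import math


def z_score(conv_a, n_a, conv_b, n_b):
    """Two-proportion z statistic for candidate (b) versus current model (a)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0  # no variation observed, so no evidence either way
    return (p_b - p_a) / se


def should_promote(conv_a, n_a, conv_b, n_b, z_threshold=1.96):
    # Promote only if the candidate converts better by a clear margin.
    return z_score(conv_a, n_a, conv_b, n_b) > z_threshold


# Made-up experiment results: (conversions, visitors) per model.
clear_win = should_promote(100, 2000, 150, 2000)   # 5.0% vs 7.5%
marginal = should_promote(100, 2000, 105, 2000)    # 5.0% vs 5.25%
print(clear_win, marginal)
```

A check like this could run as the last gate of the CI/CD process, so that a new release only replaces the current model once the experiment shows it is actually converting better.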

Final Thoughts

A well-planned MLOps practice can improve efficiency and productivity and, with them, the ROI for the business. It is also a great way to unite cross-functional teams of developers and data scientists for collaboration and communication. All that is required is to embrace its potential systematically and in line with the production environment.

Thanks for reading !!!

I plan to write another piece to share some more interesting information on MLOps.

You can follow me on Medium as well as

LinkedIn: Supriya Ghosh

And Twitter: @isupriyaghosh

MLOps — Ruling Fundamentals and few Practical Use Cases was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.

Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.