London Commute Agent, From Concepts to Pretty Maps

Author(s): Anders Ohrn

Originally published on Towards AI.

A hope for Large Language Models (LLMs) is that they will close the gap between what is meant (the semantics) and its formulation (the syntax). That includes calling software functions and querying databases, which employ stringent syntax — a single misplaced character can cause hours of head-scratching and troubleshooting.

Tool-using LLMs are a promising new approach. Any business-relevant task that handles stated intentions or requests in part through function calling can be augmented with a semantic layer that closes the gap between meaning and formulation.

I will make it concrete: the task is to plan a journey or commute in London, UK.

Functions and tools that deal with part of that already exist, such as the Transport for London (TfL) web Application Programming Interfaces (APIs). However, these APIs “speak machine”. For a person with travel intent and needs, the APIs alone are not enough. We can fill that gap with tool-using LLMs.

I describe the problem and solution generally in the next sections. I then show how to implement it with Anthropic’s LLMs. All the code will be accounted for and is available in a Github repo. Once this blog post concludes, you will understand tool-using LLM utility and have the means to build useful prototypes.

Principal-Assistant-Machine and the Semantic Layer

Imagine planning a commute between places in London by tube, bus, bike, taxi, walking etc. Imagine furthermore that you have preferences on the number of interchanges or how much to use a bike along the way etc.

The process to solve this task is visualized below, beginning with the principal (e.g. the human commuter) making a request, and concluding with the principal receiving from the assistant a journey plan in the form of a step-by-step navigation plan, a map or some other object.

Route optimization is a well-established problem with many algorithms to draw on. Computers execute this step given a precisely stated objective. This step is contained within the amber-coloured machine box in the illustration above.

The principal should not have to know the machine layer APIs or the algorithms. The principal should simply be served with plans that address the requests and fit their style and mode of information consumption. The principal swims in the domain of meaning and semantics, culture and language, while the machine is anchored in formula and syntax, algorithm and code.

The assistant deals with the efforts between the principal and the machine. The assistant possesses knowledge of tools and their proper use, and also how to interpret the principal, and, like a project manager, how to define units of work that add up and make sense.

It is the assistant layer that the tool-using LLMs can automate, scale and accelerate. Tasks that are rate-limited or cost-constrained by what goes on in the assistant layer are ripe for disruption.

In Simple Terms, What Does the Tool-Using LLM Do?

Most LLM providers enable tool use, especially for their most capable models. In practice, these LLMs have been tuned to generate strings of text that conform to a very particular structure and syntax.

Why does that enable tool use? Because in the land of machine and software engineering, structured data and strict type and syntax rule supreme.

To create a tool-using LLM application, the input to the LLM has to include a specification of that very particular syntax. The user’s input prompt to the LLM is not all there is. The LLM that receives the full package — input prompt and syntax specification — can then evaluate whether to generate a free text response, a response in the particular syntax, or a combination of the two.

It is up to the application developer to convert the LLM-generated output into a function call, data query, or similar.
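
To make the developer's side of this concrete, here is a minimal sketch of the conversion step. It assumes the LLM output has already been parsed into a dictionary with `name` and `input` keys, which is the general shape of a tool-use block; the tool `get_travel_time`, its arguments and its made-up return value are hypothetical and stand in for any real function. No LLM is called.

```python
import json

# Hypothetical tool specification, in the JSON-schema style most providers use.
TOOL_SPEC = {
    "name": "get_travel_time",
    "description": "Estimate travel time in minutes between two places.",
    "input_schema": {
        "type": "object",
        "properties": {
            "origin": {"type": "string"},
            "destination": {"type": "string"},
        },
        "required": ["origin", "destination"],
    },
}


def get_travel_time(origin: str, destination: str) -> str:
    """Stand-in for a real function; returns a fixed, made-up answer."""
    return json.dumps({"origin": origin, "destination": destination, "minutes": 25})


# Registry that maps tool names in the specification to Python callables.
TOOLS = {"get_travel_time": get_travel_time}


def run_tool_call(tool_call: dict) -> str:
    """Validate an LLM-generated tool call and execute the matching function."""
    name, arguments = tool_call["name"], tool_call["input"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    missing = [k for k in TOOL_SPEC["input_schema"]["required"] if k not in arguments]
    if missing:
        raise ValueError(f"Missing arguments for {name}: {missing}")
    return TOOLS[name](**arguments)


# Simulated structured output from the LLM (assumed shape, no API call made).
llm_output = {
    "type": "tool_use",
    "name": "get_travel_time",
    "input": {"origin": "Paddington", "destination": "Tate Modern"},
}
result = run_tool_call(llm_output)
```

The LLM only produces the dictionary; the lookup in `TOOLS`, the validation and the actual call are all application code.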

Compared to smooth back-and-forth chatting with an LLM (e.g. ChatGPT, Claude, Le Chat), an application with tool-using LLMs requires more work by the application developer. The syntax specifications, their relation to the functions to be invoked and what to do with the functions’ output require additional code and logic.

The tutorial below deals with this complete effort.

What London Journey Planning Looks Like with an AI Assistant Layer

I will briefly illustrate the principal’s view of an implemented solution.

The animated image below illustrates a test conversation. The request by the principal (the green text box) is phrased in a way a human reader can understand. The request blends types of syntax and implicit references for time (“6 am”, “five”, “early evening”) and place (“home”, “Tate Modern museum”, “from there”).

The journey planner (I named it Journey Planner Vitaloid) correctly extracts the content of the request and maps it to three calls to the TfL API where the route optimization algorithms do their magic. The journey planner also uses the tools for map-making. The map it outputs is shown below:

The journey plan details are not described in the exchange above. However, detailed data is available, and stored in data structures of the application. So when I, the principal, reply that I desire a summary of the steps in one or more of the different journeys, the tool-using LLM interprets the request, generates the relevant function call, and then compactly and correctly summarizes the steps in the details returned from the function call.

In appearance, this is much like the well-known LLM-powered chatbots.

The distinction is that the tool-using LLMs equip the Journey Planner Vitaloid with the capacities of algorithms that are not only about semantic processing. Also, any real-time updates that Transport for London publishes through their APIs (like disruptions) can instantly be part of the journey planner’s input data.

While public transportation planning is just one example, it shows how AI language models equipped with tools can bridge the gap between human needs, phrased in human terms, and computer systems. With that combination, we can create practical, scalable assistant solutions. Though this approach takes more effort than using AI without tools, I’ll demonstrate in this tutorial that it is still quite doable using standard Python libraries and Anthropic’s Python library.

Human expert assistants can thus imbue a scalable computer system with the means to solve assistant tasks. Prompting and tool-use together add another dimension to AI.

The Approach to the Tutorial

I run this tutorial in a top-down fashion. That is, I begin with the most high-level object in the assistant layer and progressively move downwards until I reach the interaction with the machine layer.

For that reason, the code snippets I share may reference objects that have not been defined at that stage of the tutorial. These are the lower-level objects on which the higher-level objects depend. Don’t worry — we’ll get to those details in due time. Think of it as peeling back the layers one by one to reveal the full picture.

The complete code can be viewed in this Github repository.

Tutorial: Router Agent Instantiation

The application has one object communicating with the principal: the router agent. This agent in turn has access to three other agents as tools. The router engine interprets the request from the principal by invoking the LLM client and then prompts the other agents to address the subtasks inferred from the principal’s request.

The application is hence a small agent workflow.

So when the principal provides their request, the router agent launches a set of actions. The animated image below illustrates one case of data generation and data flow between the objects.

In the build_agents.py file, the following lines of code build the router agent object agent_router.

The Engine object is the combined unit of an LLM model, a system prompt and a toolset. It processes text, such as the principal’s request, and generates a text response. I will discuss how the engine works in detail later.

The toolset exists as the object SubTaskAgentToolSet. Its scope of concern is how to invoke the tools and the syntax to declare to the LLM model of the router agent what the available tools are.

Note also that the system prompt derives from a Jinja template, see for example these lines:

system_prompt_template = Environment(

loader=FileSystemLoader(PROMPT_FOLDER),

).get_template(system_prompt_template)

I follow the convention of creating and managing prompts using Jinja2 templates that others have described the benefits of.

Tutorial: Toolset to Invoke Tools and Declare Them

The tool-using LLM must be aware of available tools. That is:

- Name of the tools and the type of task each tool deals with.

- Name, structure and meaning of the arguments each tool requires to be invoked.

Most providers of LLMs use very similar designs to make their LLMs aware of tools. The JSON file below is the specification I use for Anthropic’s LLMs for the three agents as tools, which the router agent can engage as it processes text.

The three tools are specified to require a string input named input_prompt, and the last of the three tools also requires an object named input_structured comprising two integers, journey_index and plan_index.

Take special note of the description fields. These are mini-prompts that the LLM will interpret as it processes all its input text along with its tool specifications. For example, a prompt from the principal which requests in standard language the planning of a journey from point A to point B should:

- make the LLM identify, by semantic matching with the tool descriptions, that the journey_planner tool is the one to invoke.

- make the LLM generate a string of particular structure and syntax with content that matches the specifications of the input schema of said tool.

Normal prompt engineering considerations apply. When a tool set is created, good-quality descriptions aid the LLM in making proper semantic matches.

Note that it is not the LLM that executes the tool function. The LLM generates the structured output string that conforms to the tool specification. It is the application developer that has to execute the tool function in a manner specified by the LLM output.

In the application, a single object encapsulates the tool specification syntax and the tool execution: ToolSet and its child classes.

In the code above the SubTaskToolSet from earlier is defined. In it you see three methods with names corresponding to the names in the earlier JSON tool specification file. Also, the input arguments are named the same as in the specification file.

These are in other words the functions that are going to be invoked if the LLM returns the appropriate string specifying tool-use.

From the parent class, the SubTaskToolSet inherits the __call__ method, which accepts the tool name and the keyword arguments and then executes the class method with the proper name.

Note also that the toolsets contain a property tool_spec which returns a dictionary corresponding with the tool specification JSON file shown earlier.
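
A minimal sketch of this pattern, under stated assumptions, could look as follows. Only the names `ToolSet`, `__call__` and `tool_spec` come from the text above; the child class `EchoToolSet`, its `shout` tool, and the choice to store the specification as a list of dictionaries are hypothetical and only for illustration.

```python
from typing import Any


class ToolSet:
    """Parent class: holds the tool specifications and dispatches calls by name."""

    def __init__(self, tool_spec: list[dict[str, Any]]) -> None:
        self._tool_spec = tool_spec

    @property
    def tool_spec(self) -> list[dict[str, Any]]:
        """The specifications to declare to the LLM."""
        return self._tool_spec

    def __call__(self, tool_name: str, **kwargs: Any) -> str:
        """Execute the method whose name matches the tool name."""
        declared = {spec["name"] for spec in self._tool_spec}
        if tool_name not in declared or not hasattr(self, tool_name):
            raise ValueError(f"Tool '{tool_name}' is not available in this toolset")
        return getattr(self, tool_name)(**kwargs)


class EchoToolSet(ToolSet):
    """Hypothetical child class with a single tool, for illustration only."""

    def shout(self, input_prompt: str) -> str:
        return input_prompt.upper()


toolset = EchoToolSet(
    tool_spec=[
        {
            "name": "shout",
            "description": "Return the input text in upper case.",
            "input_schema": {
                "type": "object",
                "properties": {"input_prompt": {"type": "string"}},
                "required": ["input_prompt"],
            },
        }
    ]
)

# The engine only needs the tool name and keyword arguments from the LLM output.
output = toolset("shout", input_prompt="mind the gap")
```

Keeping the method names and argument names identical to those in the specification is what lets a single generic `__call__` serve every child class.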

Tutorial: Engine To Process LLM Inputs and Outputs

The Engine is the main object that handles the back and forth with the LLM and, when appropriate, executes tools in the given ToolSet. The logic of the engine object is implemented generically, so that all four agents of the application are instances of the Engine class.

A design question: if a tool has been executed, should the agent interpret the output or is the tool output also the agent output?

The router agent will always contain an interpretation step. The reason is that the router agent has to synthesize the results of the subtasks into one complete response to the principal’s request.

The subtask agents, on the other hand, will be simpler. Their concern is to interpret the instructions and data given to them by the router agent and transform that into tool parameters and a tool function call. Once the tool output has been obtained, it is returned to the router agent.

We can imagine use cases and applications where subtask agents should interpret their tool outputs as well. This is a case though where I think constraints on the subtask agent output are useful. I can fully control what data is contained in the tool output — this is still in the realm of syntax and code. The router agent is the one and only agent tasked to bring it all together and respond to the principal in the principal’s language.

The code snippet below shows the first half of the Engine class. The process method is the one invoked in the SubTaskAgentToolSet above.

The first two-thirds of the process method does the assembly of the input prompt and setting parameters on how tools should be invoked (required for the Anthropic client, see later).

Note that MessageStack is a convenient data class I created — in essence, a Python dictionary with some extra bells and whistles for easier data access.

The classes MessageParam, TextBlock, and later ToolUseBlock and ToolResultBlockParam are part of Anthropic’s Python library and are data types that help identify the kind of LLM input/output the particular text string is.

Once the text to provide the LLM has been assembled, the agent sends the full package of data to the Anthropic LLM client within the what_does_ai_say method.

Anthropic’s LLM Python client is documented here. It is the first thing that is executed and it takes the various text inputs and the tool specifications from the ToolSet instance.

The response object from the LLM is then processed as follows:

- Its text content is appended to the message collection, such that it can be made part of subsequent LLM calls.

- The response content can comprise multiple entries; if one such entry is a specification for a tool call, then the tool function is called using the ToolSet instance of the agent.

- The tool output is appended to the message collection. Note that each specification for a tool call is given a unique ID, which has to be associated with the corresponding tool function output. If not, the LLM will not be able to interpret the tool output.

- If the LLM generated a specification for a tool call (evident from the value of response.stop_reason), then the question is whether the tool output should be interpreted. If yes, then what_does_ai_say is called recursively. Since the tool output has been added to the messages, the LLM will be aware of the tool output in the subsequent iteration. If no, then the execution ends.

Once what_does_ai_say terminates successfully, the process method concludes by assembling either the tool outputs or the free text content generated by the LLM, and returns that as the agent output.
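
The control flow described above can be sketched without any network access. In the sketch below, `scripted_llm` is a hypothetical stub that stands in for the LLM client, and plain dictionaries replace the typed message classes; the key names (`stop_reason`, `tool_use`, `tool_result`, `tool_use_id`) are my assumption of the general message shape and not a copy of the repository code.

```python
def scripted_llm(messages: list) -> dict:
    """Stub for the LLM client: requests a tool once, then gives a final answer."""
    tool_result_seen = any(
        isinstance(m["content"], list)
        and any(block.get("type") == "tool_result" for block in m["content"])
        for m in messages
    )
    if not tool_result_seen:
        return {
            "stop_reason": "tool_use",
            "content": [
                {"type": "text", "text": "I will look that up."},
                {"type": "tool_use", "id": "call_1", "name": "journey_planner",
                 "input": {"input_prompt": "Plan a trip to Tate Modern"}},
            ],
        }
    return {
        "stop_reason": "end_turn",
        "content": [{"type": "text", "text": "Your journey takes 25 minutes."}],
    }


def what_does_ai_say(messages, llm, tools, interpret_tool_use=True):
    """Call the LLM, run requested tools, optionally let the LLM interpret them."""
    response = llm(messages)
    messages.append({"role": "assistant", "content": response["content"]})

    tool_outputs, tool_results = [], []
    for block in response["content"]:
        if block["type"] == "tool_use":
            output = tools[block["name"]](**block["input"])
            tool_outputs.append(output)
            # The result must carry the ID of the tool call it answers.
            tool_results.append({"type": "tool_result",
                                 "tool_use_id": block["id"],
                                 "content": output})
    if tool_results:
        messages.append({"role": "user", "content": tool_results})

    if response["stop_reason"] == "tool_use":
        if interpret_tool_use:
            return what_does_ai_say(messages, llm, tools, interpret_tool_use)
        return tool_outputs  # subtask-agent style: raw tool output is the answer
    return [b["text"] for b in response["content"] if b["type"] == "text"]


tools = {"journey_planner": lambda input_prompt: "duration_minutes: 25"}
question = {"role": "user", "content": "How do I get to Tate Modern?"}

router_style = what_does_ai_say([dict(question)], scripted_llm, tools)
subtask_style = what_does_ai_say([dict(question)], scripted_llm, tools,
                                 interpret_tool_use=False)
```

With `interpret_tool_use=True` the function behaves like the router agent and returns the interpreted text; with `False` it behaves like a subtask agent and returns the tool output unchanged.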

This code can be improved to better handle exceptions. Tools can fail and recursive functions should have some checks to ensure they do not get stuck in an infinite loop. But this is a prototype so I leave these enhancements for another time.
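
As a hedged illustration of what such enhancements might look like, the sketch below wraps a tool call so that a failure is reported back as a tool result instead of crashing the agent, and bounds the number of iterations. The helper names `run_tool_safely`, `bounded_loop` and `TooManyIterations` are hypothetical, and the `is_error` flag on the result is an assumption about the message format that should be checked against the provider's documentation.

```python
class TooManyIterations(RuntimeError):
    """Raised when no final answer is produced within the allowed budget."""


def run_tool_safely(tool, tool_use_id: str, **kwargs) -> dict:
    """Run a tool and always return a tool-result block, even if the tool fails."""
    try:
        content, is_error = str(tool(**kwargs)), False
    except Exception as err:  # broad on purpose: the failure is reported, not raised
        content, is_error = f"{type(err).__name__}: {err}", True
    return {"type": "tool_result", "tool_use_id": tool_use_id,
            "content": content, "is_error": is_error}


def bounded_loop(step, max_iterations: int = 5):
    """Call step() until it returns (True, value); raise after max_iterations."""
    for _ in range(max_iterations):
        done, value = step()
        if done:
            return value
    raise TooManyIterations(f"No final answer after {max_iterations} iterations")


# A failing tool yields an error result rather than an unhandled exception.
failed = run_tool_safely(lambda x: 1 / x, "call_1", x=0)

# A loop that finishes on its third step, well inside the default budget.
attempts = iter([(False, None), (False, None), (True, "final answer")])
answer = bounded_loop(lambda: next(attempts))
```

Replacing the recursion with an explicit bounded loop is one option among several; a depth counter passed through the recursive call would serve the same purpose.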

The Engine class implements a fairly general type of tool-using LLM. It wraps calls to the Anthropic LLM in logic that handles:

- the stack of messages going back and forth to the LLM,

- how to recognize a tool call specification,

- how to pass said specification to the general interface of the toolset instance for execution of the function,

- how to deal with tool output, in particular, if it is to be returned or further interpreted.

Tutorial: Tool Using LLMs Are Ready To Go

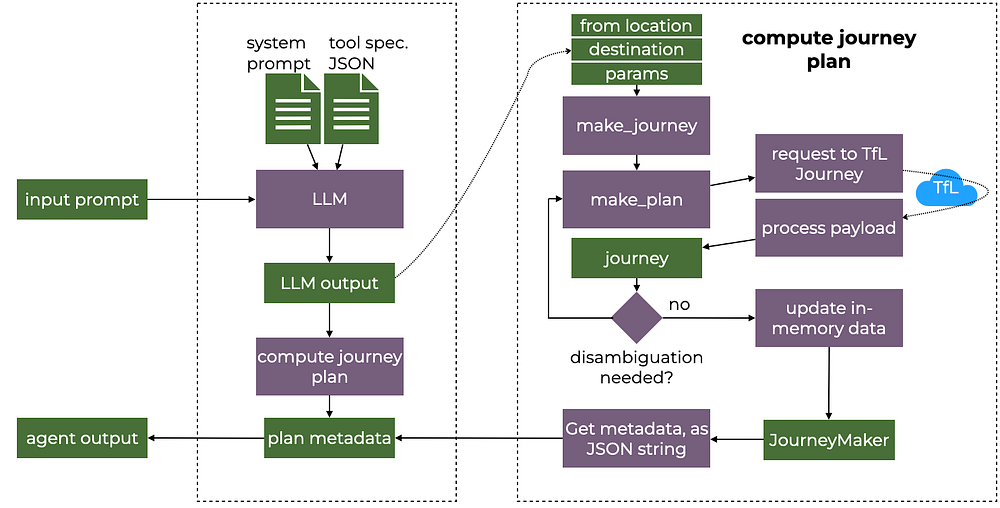

I show the image from before here again. The toolset and engine code define all the parts in this data flow.

The code so far has been general — nothing, except some naming, is specific to the London journey planning task.

Before any text can be generated based on machine layer output, the specific toolsets for the subtask agents have to be defined. So functions and tool specification JSON files need to be written. Then, as shown above, we encapsulate all that within a ToolSet child class and we can join it to the Engine and the Anthropic LLMs.

If you have ideas for an assistant, the code so far can be adapted with only modest adjustments. The assistant layer and its semantic processing are ready.

But the machine layer is not always so tidy that it fits right into the ToolSet. That is the case for the London travel planner. So in the next sections, I will highlight additional practical considerations and how a number of design choices for the agents and their relations emerge. In other applications I expect similar considerations will appear.

Tutorial: Planner Agent and Slices of the TfL API

The planner agent toolset should contain functions to make requests to the TfL APIs, in particular the Journey API endpoint.

The Journey endpoint is not simple, though. Both input and output are comprised of nested and varied data. Rather than trying to make the LLM able to deal with all that complexity, I create “slices” of the TfL API — simpler interfaces intended for targeted use cases.

My justification for this design choice is that I expect the LLMs are then more likely to match the router agent’s instruction to a valid and correct call to the machine layer.
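
To illustrate the idea of a slice, here is a small sketch that exposes only five simple arguments and maps them to a request URL. It is not the code from the repository. The endpoint path and the query parameter names and values (`date`, `time`, `timeIs`, `journeyPreference`) reflect my understanding of the public TfL Journey API and should be verified against the TfL documentation; the function name and its arguments are hypothetical. No request is sent.

```python
from datetime import datetime
from urllib.parse import quote, urlencode

# Assumed endpoint; verify against the TfL API documentation before use.
TFL_JOURNEY_URL = "https://api.tfl.gov.uk/Journey/JourneyResults"


def journey_request_url(
    origin: str,
    destination: str,
    when: datetime,
    arriving: bool = False,
    fewest_changes: bool = False,
) -> str:
    """A narrow slice: a handful of plain arguments instead of the full parameter set."""
    # Assumed parameter names and formats (date as yyyyMMdd, time as HHmm).
    params = {
        "date": when.strftime("%Y%m%d"),
        "time": when.strftime("%H%M"),
        "timeIs": "Arriving" if arriving else "Departing",
        "journeyPreference": "LeastInterchange" if fewest_changes else "LeastTime",
    }
    path = f"{quote(origin)}/to/{quote(destination)}"
    return f"{TFL_JOURNEY_URL}/{path}?{urlencode(params)}"


url = journey_request_url("Paddington", "Tate Modern", datetime(2025, 1, 15, 6, 0))
```

The tool specification shown to the LLM then only needs to describe these few arguments, which leaves less room for an invalid combination of parameters.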

This is again a case where I impose constraints on the agent to focus it on the task I want it to solve. Is it necessary? Could I rather dump all information into the LLM and have faith in it to figure it all out? Only testing can tell, but my hunch is that constraints help.

I will not present a full dissection of the TfL Journey API and its payload. The reader finds the relevant logic in this file in my Github repo. Three key objects are created and will be used later in the code:

- JourneyPlannerSearch, which calls the TfL Journey API, given argument parameters.

- JourneyPlannerSearchParams, which contains the validated argument parameters for the TfL Journey API.

- JourneyPlannerSearchPayloadProcessor, which contains the logic to retrieve a selection of data from the possible return payloads of the TfL Journey API.

The Planner class interacts with these three objects in various places:

As seen in the make_plan method, the processing of the payload from the TfL API has an added complexity. It can return two types of outputs:

- If the starting location and destination are both unambiguous, the TfL API endpoint returns the normal status code 200 and the Planner instantiates a Plan (see line 25 above).

- If either the starting location or the destination is ambiguous, the TfL API returns a collection of disambiguation options, that is, best guesses of which locations are meant. The Planner deals with that case by re-running the planner with all the suggested disambiguated locations.

In other words, the Planner can return several journeys.
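
The re-running logic can be sketched as follows. Everything here is a simplified stand-in: `SearchResult`, `fake_search` and `make_plans` are hypothetical names, the search is faked rather than sent to TfL, and the rule that "Richmond" is ambiguous is made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class SearchResult:
    """Simplified stand-in for a processed API payload."""
    plans: list[str] = field(default_factory=list)
    origin_options: list[str] = field(default_factory=list)       # non-empty if ambiguous
    destination_options: list[str] = field(default_factory=list)  # non-empty if ambiguous


def fake_search(origin: str, destination: str) -> SearchResult:
    """Made-up search: 'Richmond' is ambiguous, everything else resolves."""
    if origin == "Richmond":
        return SearchResult(origin_options=["Richmond Station", "Richmond Park"])
    return SearchResult(plans=[f"{origin} -> {destination}"])


def make_plans(origin: str, destination: str, search=fake_search) -> list[list[str]]:
    """Return one journey (a list of plans) per unambiguous origin/destination pair."""
    result = search(origin, destination)
    if not result.origin_options and not result.destination_options:
        return [result.plans]
    journeys = []
    # Re-run the search for every combination of suggested locations.
    for o in result.origin_options or [origin]:
        for d in result.destination_options or [destination]:
            journeys.extend(make_plans(o, d, search))
    return journeys


journeys = make_plans("Richmond", "Tate Modern")
```

The sketch assumes that the suggested options are themselves unambiguous; a real implementation would want a guard against repeated disambiguation.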

In addition, the TfL API returns several plan options for each journey (typically four). And unless the plan involves no interchanges, the plan contains multiple legs. For some modes of transportation, like bikes, the leg can further comprise an ordered collection of steps.

Pretty nested stuff.

In order to manage this structured data, I create a custom data class Plan which in part looks like:

I also define a Journey data class, which contains the collection of Plan instances. I also define a JourneyMaker object, with a method make_journey which executes the Planner and gathers one or several journeys, which can then be retrieved from the JourneyMaker instance.
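
A compact sketch of how such nested data classes can fit together is shown below. The class names `Plan`, `Journey` and `JourneyMaker`, the `to_json` method and the two-index lookup come from the article; the `Leg` class and every field name are hypothetical simplifications, not the actual definitions in the repository.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class Leg:
    mode: str                                        # e.g. "tube", "bus", "cycle"
    summary: str
    steps: list[str] = field(default_factory=list)   # e.g. turn-by-turn for bikes


@dataclass
class Plan:
    duration_minutes: int
    legs: list[Leg]

    def to_json(self, indent: Optional[int] = None) -> str:
        """Serialize the plan, including nested legs and steps."""
        return json.dumps(asdict(self), indent=indent)


@dataclass
class Journey:
    origin: str
    destination: str
    plans: list[Plan] = field(default_factory=list)

    def __getitem__(self, plan_index: int) -> Plan:
        return self.plans[plan_index]


@dataclass
class JourneyMaker:
    journeys: list[Journey] = field(default_factory=list)

    def __getitem__(self, journey_index: int) -> Journey:
        return self.journeys[journey_index]


maker = JourneyMaker([
    Journey("Paddington", "Tate Modern", [
        Plan(25, [Leg("tube", "Paddington to Blackfriars"),
                  Leg("walking", "Blackfriars to Tate Modern")]),
    ])
])
plan_json = maker[0][0].to_json(indent=4)  # journey index 0, plan index 0
```

Because both container classes implement `__getitem__`, a pair of integers is enough to address any plan, which is what makes it practical to pass only indices between agents.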

The details of all the objects are found in this file.

All this layering is meant to make the interface to the function of the tool that will be joined to the agent relatively easy and semantically clear. I do not want to confuse the LLM and dump all this complexity on it.

The toolset I ultimately define for the planner agent is:

The compute_journey_plans is the main tool function, which through the various abstractions and layers accounted for above ultimately leads to a call to the TfL API and a processing of its payload until a collection of Journey instances and Plan instances is obtained.

Note that compute_journey_plan only returns metadata about the plans, not the plan details. Rather, the details are retrieved by other tools after the plans have been computed. Their corresponding functions are get_computed_journey and get_computed_journey_plan.

I make this choice because the amount of data in all the journeys and all the plans can be sizeable. If there are very many alternatives provided, then the router agent will either have to ask the principal for further specification or use some additional information inferred from the principal’s request to decide which of the plans to present.

Couldn’t we just as well dump all the journey plan data into the router agent’s LLM and let it sort it out? Why the intermediate step?

By now the reader will know that my hunch is to keep a few constraints in place and not overly confuse the LLM with nested semantics and potentially lots of irrelevant data for the task. But it is a hunch — I discuss these design questions generally at the end.

As was done for the router agent, the planner agent is built with each of its dependencies to other objects injected.

Tutorial: Output Artifacts and Passing Indices Between Agents

No doubt, there are many ways to communicate to the principal the plan that was made in response to the request. Going from prototype to product would include designing that and making it compatible with the principal’s preferred apps and modes of communication.

The semantic layer and the tool-using LLMs can contribute to that as well.

So for illustration purposes, I implement three different tools for this prototype output artifact agent:

- Map creation, where the planned routes are drawn on the map.

- Calendar entry, to get reminders of the when and where of the plan.

- A full text description of the route, where to turn, which trains or buses to board, where to alight etc.

I will not describe how each of these is implemented. The code is available in the files here for the interested reader.

I will highlight one design question, though, which I expect many other agent workflows have to consider as well.

After the journeys and their plans have been computed, only their metadata are returned to the router agent, as I noted in the previous section. The details of the plans are kept in memory within the JourneyMaker instance. They are retrieved by two integer indices: the journey index (0 if there was no disambiguation) and the plan index. You see that in one of the methods of the journey maker toolset, reproduced below:

def get_computed_journey_plan(self, journey_index: int, plan_index: int) -> str:
    return self.maker[journey_index][plan_index].to_json(indent=4)

However, the router agent has to know that the output artifact agent requires these two indices as the router agent generates the instruction.

That is why the agents as tool JSON file looks a bit different for the output artifact agent. The file was shown in full above, the relevant section is this:

{

"name": "output_artefacts",

"description": "Generate the output artefacts for the journey planner, which communicate the journey plan to the user. Artefacts this tool can create are (1) map of London with the travel path drawn on it; (2) ICS file (a calendar file) that can be imported into the user's calendar; (3) detailed free text of the steps of the journey plan.",

"input_schema": {

"type": "object",

"properties": {

"input_prompt": {

"type": "string",

"description": "Free text prompt that contains the text relevant to the output artefact to create."

},

"input_structured": {

"type": "object",

"properties": {

"journey_index": {

"type": "integer",

"description": "The index of the journey which plans to turn into one more more output artefacts"

},

"plan_index": {

"type": "integer",

"description": "The index of the plan to turn into one more more output artefacts"

}

},

"required": ["journey_index", "plan_index"]

}

},

"required": ["input_prompt","input_structured"]

}

}

Therefore

- if the router agent has the relevant indices in its message stack, and

- if the router agent invokes the output artifact agent, then

- the chosen plan indices are passed as a structured data object (so not simply a free text prompt) from the router agent to the subtask agent in the object named input_structured with the mandatory fields journey_index and plan_index.

In other words, by the time the LLM of the output artifact agent is invoked, the prompt includes this structured content. It is therefore possible for that LLM to include these indices as it generates a tool specification string.

For example, the part of the tool specification JSON for the map drawing looks like:

{

"name": "draw_map_for_plan",

"description": "Draw the map of London for one (and only one) specific journey plan, wherein the trajectory of longitude and latitude line segments are drawn, each leg of a plan with different colour. By default the tool opens the webbrowser to display the map.",

"input_schema": {

"type": "object",

"properties": {

"journey_index": {

"type": "integer",

"description": "The index of the journey which plans to retrieve"

},

"plan_index": {

"type": "integer",

"description": "The index of the plan to retrieve and display on the map"

},

"browser_display": {

"type": "boolean",

"description": "Whether to display the map in the web browser or only to save it as a file; by default it is true"

}

},

"required": ["journey_index", "plan_index"]

}

}

It includes the two mandatory indices, which are contained in the prompt created by the router agent. There is also an optional boolean argument that controls whether the map is shown in the browser upon creation, which the LLM can set if it infers that the principal’s request implies one or the other.

Is this the best design to pass structured data between agents?

An alternative way would be to have another data object shared by the agents. We could imagine a data structure of assistant parameters, which the output artifact agent always loads into the prompt it sends to the LLM:

Draw a journey plan on the map of London as specified in the selected plan.

=== Variable values ===

{

"journey_index": 0,

"plan_index": 3

}

If the router or journey planner agent has write access to this imagined data object, then structured data can be passed between agents outside the text prompts they pass between each other.
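
A minimal sketch of this alternative, assuming a simple in-memory store, is given below. The names `AssistantParameters` and `build_prompt` are hypothetical; the rendered layout follows the prompt example above.

```python
import json
from dataclasses import dataclass, field


@dataclass
class AssistantParameters:
    """Hypothetical shared store that agents can write to and read from."""
    values: dict = field(default_factory=dict)

    def set(self, **kwargs) -> None:
        """Write or overwrite structured values (e.g. by the router agent)."""
        self.values.update(kwargs)

    def render(self) -> str:
        """Render the values as a prompt section."""
        return "=== Variable values ===\n" + json.dumps(self.values, indent=4)


def build_prompt(instruction: str, shared: AssistantParameters) -> str:
    """Append the shared structured values to the free-text instruction."""
    return f"{instruction}\n{shared.render()}"


shared = AssistantParameters()
shared.set(journey_index=0, plan_index=3)  # written by the router or planner agent

prompt = build_prompt(
    "Draw a journey plan on the map of London as specified in the selected plan.",
    shared,
)
```

In this design the indices never pass through an LLM-generated instruction, so they cannot be dropped or altered by the router agent's text generation; the cost is shared mutable state that every agent has to agree on.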

At least for the current problem, the indices are not lost or distorted in the tests I run. Still, if agent workflows become highly nested with more structured data being passed between agents, would agent-to-agent prompting remain a reliable way to pass data between agents?

The Assistant’s View and the Very Engaged Router Agent

I illustrated earlier the principal’s view of a travel request to the Journey Planner Vitaloid. The swimlane diagram below illustrates the assistant’s view and work.

The boxes contain strings of varying kinds and content, which are created by the object of the lane each box is in, and the strings are passed to the object the arrow points to.

One feature of the current agent flow is that rather than creating all three planner agent specifications simultaneously, the router creates them one at a time. After each planner agent returns the string of metadata on the transit plan it computed, the router agent takes stock of the situation. That is, it processes the messages anew and determines that another planner agent call is needed, until all three journeys are planned.

This raises important design considerations around agent granularity and responsibility distribution. Using different prompts and tool specifications, it would be possible to make the router agent less engaged in the data flow and effectively hand off more work to the subtask agents. The assistant could consist of even more staggered agents and tools, each with narrowly defined responsibilities.

Conversely, the router agent could be equipped with all the tools of the subtask agents and thus be the author of all strings going back and forth. While the large context windows of most LLMs nowadays enable this consolidated design, it means that a great deal of data is processed by the LLM even when we know that data is not relevant to the solution. For example, when the prompt requests route planning, map-making tools are self-evidently unnecessary overhead.

The optimal configuration of agents and agent-tool relationships ultimately faces the same fundamental tradeoffs as traditional software architecture: operational costs, time to shipping MVP, and scalability requirements. Additionally, agent-specific considerations like prompt complexity, token usage efficiency, and the balance between agent autonomy and controlled delegation play crucial roles. As with many architectural decisions, the key lies in finding the right balance for your specific use case while maintaining flexibility for future evolution of the system.

The code described above and available on GitHub for further experimentation means you can explore these questions for other kinds of assistants. The choices I made for the current prototype were hunches spawned from experience with, and anthropomorphic reasoning about, LLMs. Exploring this systematically leads us to the topic of evals, on which there is no shortage of opinions or commentary, so it is best left for another time.
