LLM Chat Applications with Declarai, FastAPI, and Streamlit — Part 2 🚀

Last Updated on November 6, 2023 by Editorial Team

Author(s): Matan Kleyman

Originally published on Towards AI.


Following the popularity of our previous Medium article (link 🔗), which delved into deploying LLM chat applications, we've taken your feedback on board. This second part introduces more advanced features, with a particular emphasis on streaming for chat applications.

Many of our readers asked for streaming in chats. Streaming matters most for long responses: instead of making users wait for the entire response, the reply is sent piece by piece as it is generated 🔄, so users can start reading each part as soon as the web server sends it.

Thanks to recent enhancements in Declarai, we're excited to leverage streaming, now available from version 0.1.10 onwards 🎉.

Streaming in Action 📹

As demonstrated, streaming offers enhanced responsiveness and has become a standard in recent AI chat applications. Are you eager to see how we achieved this? Let’s dive into the code:

Declarai 💻

To use streaming, declare the chatbot with streaming enabled by passing streaming=True to the chat decorator.

import declarai

gpt_35 = declarai.openai(model="gpt-3.5-turbo")

@gpt_35.experimental.chat(streaming=True)

class StreamingSQLChat:

"""

You are a sql assistant. You are helping a user to write a sql query.

You should first know what sql syntax the user wants to use. It can be mysql, postgresql, sqllite, etc.

If the user says something that is completely not related to SQL, you should say "I don't understand. I'm here to help you write a SQL query."

After you provide the user with a query, you should ask the user if they need anything else.

"""

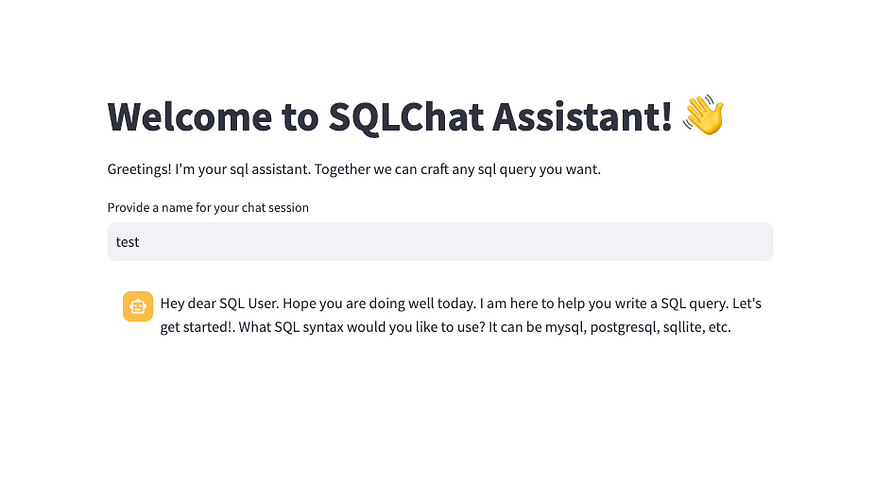

greeting = "Hey dear SQL User. Hope you are doing well today. I am here to help you write a SQL query. Let's get started!. What SQL syntax would you like to use? It can be mysql, postgresql, sqllite, etc."

When interacting with the chatbot using streaming:

```python
chat = StreamingSQLChat()
res = chat.send("I want to use mysql")  # with streaming=True, this is a generator of chunks

for chunk in res:
    print(chunk.response)  # the response text accumulated so far
```

```
>>> Great
>>> Great!
>>> Great! MySQL
>>> Great! MySQL is
>>> Great! MySQL is a
>>> Great! MySQL is a popular
>>> Great! MySQL is a popular choice
>>> Great! MySQL is a popular choice for
>>> Great! MySQL is a popular choice for SQL
>>> Great! MySQL is a popular choice for SQL syntax
>>> Great! MySQL is a popular choice for SQL syntax.
>>> Great! MySQL is a popular choice for SQL syntax. How
>>> Great! MySQL is a popular choice for SQL syntax. How can
>>> Great! MySQL is a popular choice for SQL syntax. How can I
>>> Great! MySQL is a popular choice for SQL syntax. How can I assist
>>> Great! MySQL is a popular choice for SQL syntax. How can I assist you
>>> Great! MySQL is a popular choice for SQL syntax. How can I assist you with
>>> Great! MySQL is a popular choice for SQL syntax. How can I assist you with your
>>> Great! MySQL is a popular choice for SQL syntax. How can I assist you with your MySQL
>>> Great! MySQL is a popular choice for SQL syntax. How can I assist you with your MySQL query
>>> Great! MySQL is a popular choice for SQL syntax. How can I assist you with your MySQL query?
>>> Great! MySQL is a popular choice for SQL syntax. How can I assist you with your MySQL query?
```

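What's happening behind the scenes? With streaming enabled, the OpenAI client returns a generator instead of one complete response, and chat.send() in turn hands you a generator of responses. A generator produces items one at a time, only when they are requested, so each chunk is processed as soon as OpenAI sends it. As the output above shows, each chunk's response holds all the text generated so far, not just the newest piece.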
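To make the generator idea concrete, here is a library-free sketch. It assumes each raw chunk looks like an OpenAI chat-completion streaming chunk, with the new text under choices[0]["delta"]["content"]. fake_openai_stream is a hypothetical stand-in for the real client, and accumulate is an illustration, not Declarai's code; it yields the text accumulated so far, the same shape as the output above.

```python
from typing import Dict, Iterator, List


def fake_openai_stream(pieces: List[str]) -> Iterator[Dict]:
    """Hypothetical stand-in for the OpenAI client: yields one chunk per text piece."""
    for piece in pieces:
        yield {"choices": [{"delta": {"content": piece}}]}
    # Assumed final chunk: an empty delta that carries no new text
    yield {"choices": [{"delta": {}}]}


def accumulate(chunks: Iterator[Dict]) -> Iterator[str]:
    """Yield the full response so far after every chunk, lazily, one chunk at a time."""
    response = ""
    for chunk in chunks:
        # .get() covers chunks whose delta has no "content" key
        response += chunk["choices"][0]["delta"].get("content", "")
        yield response


for text in accumulate(fake_openai_stream(["Great", "!", " MySQL"])):
    print(text)
```

Nothing runs until the loop asks for the next item, which is what lets each chunk be handled the moment it arrives. An empty final delta like the one assumed here is also one plausible explanation for the repeated last line in the Declarai output.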

FastAPI 🛠️

For streaming, we've added a separate REST endpoint. It returns a FastAPI StreamingResponse that wraps a generator, where each yielded item is the JSON encoding of one response chunk followed by a newline. We also set media_type="text/event-stream", which declares the body as a stream of events rather than a single document.

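```python
import json

from declarai.memory import FileMessageHistory
from fastapi import APIRouter
from fastapi.encoders import jsonable_encoder
from fastapi.responses import StreamingResponse

router = APIRouter()


@router.post("/chat/submit/{chat_id}/streaming")
def submit_chat_streaming(chat_id: str, request: str):  # request arrives as a query parameter
    # StreamingSQLChat is the class declared above; history is stored in a file named after the chat id
    chat = StreamingSQLChat(chat_history=FileMessageHistory(file_path=chat_id))
    response = chat.send(request)  # a generator, because streaming=True

    def stream():
        for llm_response in response:
            # Convert the LLMResponse to a JSON string
            data = json.dumps(jsonable_encoder(llm_response))
            yield data + "\n"  # Yielding as newline-separated JSON strings

    return StreamingResponse(stream(), media_type="text/event-stream")
```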
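One caveat on the media type: text/event-stream is the Server-Sent Events (SSE) format, and strict SSE clients such as a browser's EventSource expect each message as a data: line followed by a blank line. The endpoint above sends plain newline-separated JSON, which works here because the Streamlit client splits lines itself. If you want standard SSE clients to read the stream, a minimal framing helper could look like this sketch (sse_event and sse_stream are hypothetical names, not part of FastAPI):

```python
import json
from typing import Any, Dict, Iterable, Iterator


def sse_event(payload: Dict[str, Any]) -> str:
    """Frame one JSON payload as a Server-Sent Events message."""
    # json.dumps escapes newlines inside strings, so one data: line is enough
    return f"data: {json.dumps(payload)}\n\n"


def sse_stream(payloads: Iterable[Dict[str, Any]]) -> Iterator[str]:
    """Wrap an iterable of payloads as a stream of SSE messages."""
    for payload in payloads:
        yield sse_event(payload)


print(repr(sse_event({"response": "Great!"})))
```

Inside the endpoint, stream() would then yield sse_event(jsonable_encoder(llm_response)) instead of the newline-terminated JSON, and a custom client would strip the data: prefix and split messages on blank lines.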

Streamlit App

On the front end, our goal is to display each new chunk to the user as soon as it is received. To do that, we read each chunk's delta: the text generated since the previous chunk.

Here's how it's accomplished:

- Send an HTTP POST request with stream=True.
- Iterate through the chunks and decode each JSON message.
- Extract the delta value, which holds only the newly generated text, and append it to the full response.
- Update the UI with the accumulated response.
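The app reads each delta straight from the raw OpenAI chunk. Since chunk.response already holds the accumulated text (see the Declarai output earlier), the delta can also be recovered by slicing off the prefix seen so far. A small sketch, assuming each cumulative string extends the previous one (new_text is a hypothetical helper):

```python
from typing import Iterable, Iterator


def new_text(cumulative: Iterable[str]) -> Iterator[str]:
    """Turn a stream of accumulated responses into a stream of deltas."""
    previous = ""
    for current in cumulative:
        # Assumes current starts with previous; the delta is the unseen tail
        yield current[len(previous):]
        previous = current


snapshots = ["Great", "Great!", "Great! MySQL", "Great! MySQL"]
print(list(new_text(snapshots)))
```

Reading the delta from the raw chunk avoids relying on that prefix assumption, which is presumably why the app below takes the delta route.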

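```python
import json

import requests
import streamlit as st

# BACKEND_URL, session_name and prompt are defined elsewhere in the app
res = requests.post(
    f"{BACKEND_URL}/api/v1/chat/submit/{session_name}/streaming",
    params={"request": prompt},
    stream=True,  # read the body incrementally instead of waiting for all of it
)

with st.chat_message("assistant"):
    message_placeholder = st.empty()
    full_response = ""
    buffer = b""  # raw bytes, so a multi-byte UTF-8 character split across chunks stays intact
    for chunk in res.iter_content(chunk_size=None):  # yields data as it arrives
        buffer += chunk
        # The backend sends newline-separated JSON; only parse complete lines
        while b"\n" in buffer:
            line, buffer = buffer.split(b"\n", 1)
            if not line.strip():
                continue
            parsed_chunk = json.loads(line.decode("utf-8"))
            try:
                # delta holds only the text generated since the previous chunk
                full_response += parsed_chunk["raw_response"]["choices"][0]["delta"]["content"]
                message_placeholder.markdown(full_response + "▌")  # cursor while streaming
            except KeyError:
                pass  # e.g. a final chunk whose delta carries no content
    message_placeholder.markdown(full_response)
```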

Ready to deploy your streaming chatbot 🤖? Dive into the complete code in this repository: matankley/declarai-chat-fastapi-streamlit on GitHub.


Stay in touch with Declarai developments 💌. Connect with us on our LinkedIn page, and give us a star ⭐️ on GitHub if you find our tools valuable!

You can also dive deeper into Declarai's capabilities by exploring our documentation 📖, including the Streaming page on declarai.com.
