Kubernetes Made Easy With GPT-3

Last Updated on January 6, 2023 by Editorial Team

Author(s): Shubham Saboo

Natural Language Processing

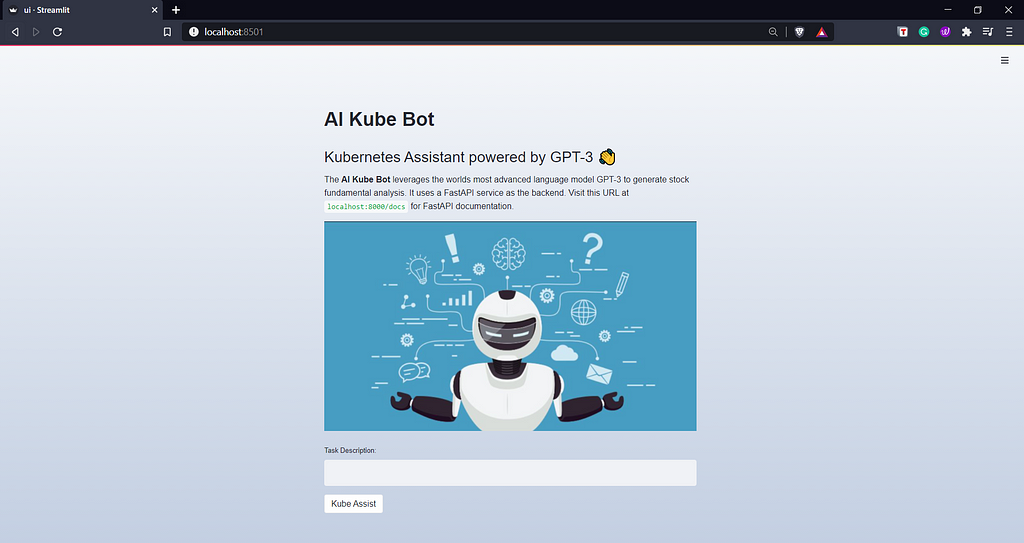

Auto-generate Kubernetes commands from plain English by leveraging GPT-3, one of the world’s most advanced language models.

Pre-Requisites

I have collected the dots in the form of articles. Please go through the articles below in the given order to connect the dots and understand the key tech stack behind the intelligent Kube Bot:

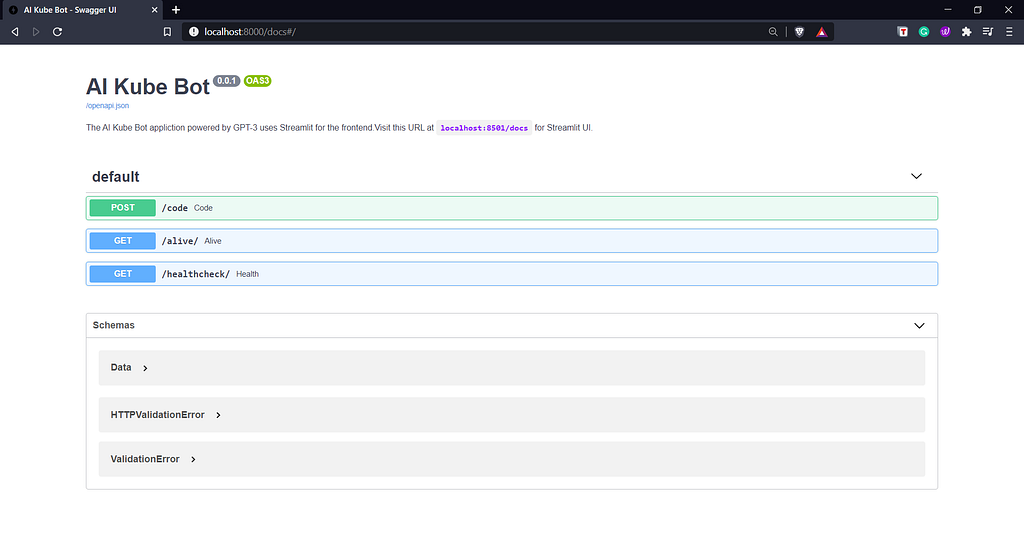

- FastAPI — The Spiffy Way Beyond Flask!

- Streamlit — Revolutionizing Data App Creation

- A Brief Introduction to GPT-3

What is Kubernetes?

Kubernetes is an open-source container orchestration platform that enables declarative configuration and automated deployment of containerized workloads and services. It has a large, rapidly growing ecosystem, and Kubernetes services, support, and tools are widely available. It was originally developed at Google, which needed a new way to run billions of containers a week at scale, and was open-sourced in 2014.

Kubernetes orchestrates and manages container workflows the way beads are arranged to make a perfect necklace.
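To make "declarative configuration" concrete: instead of issuing step-by-step instructions, you describe the desired state of a workload in a manifest and let Kubernetes converge on it. The minimal sketch below builds an `apps/v1` Deployment manifest as a Python dictionary and serializes it to JSON, which `kubectl apply -f` accepts as well as YAML. The helper name `deployment_manifest`, the name `web`, the `nginx:1.25` image, and the replica count are illustrative assumptions, not values from this article.

```python
import json
from typing import Any, Dict


def deployment_manifest(name: str, image: str, replicas: int) -> Dict[str, Any]:
    """Build a minimal apps/v1 Deployment that declares the desired state."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Desired state: how many identical pods should be running.
            "replicas": replicas,
            # The selector must match the labels on the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": 80}]}
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Illustrative values; save the output to a file and run `kubectl apply -f <file>`.
    print(json.dumps(deployment_manifest("web", "nginx:1.25", 3), indent=2))
```

Nothing in the manifest says how to start the pods. It only states the desired outcome (three replicas of one image), and Kubernetes works out the steps to get there.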

What is a Container?

Containers are similar to VMs, but they have relaxed isolation properties so that applications can share the host Operating System (OS). This is why containers are considered lightweight. Like a VM, a container has its own filesystem, share of CPU, memory, process space, and more. Because they are decoupled from the underlying infrastructure, containers are portable across clouds and OS distributions.

A container packages software into a standardized unit for development, shipment, and deployment.

Containers are a good way to bundle and run your applications. In a production environment, you need to manage the containers that run the applications and ensure that there is no downtime. For example, if a container goes down, another container needs to start. Wouldn’t it be easier if this behavior were handled by a system?

Kubernetes to the Rescue!

Kubernetes provides you with a framework to run distributed systems resiliently. It takes care of scaling and load balancing for your application, orchestrates storage, and provides failover mechanisms such as restarting or replacing containers that fail.
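The self-healing and scaling behavior described above rests on a desired-state control loop: compare the number of replicas you asked for with the number actually running, and act on the difference. The sketch below is a toy, single-process model of that idea, built around a hypothetical `ToyCluster` class that only tracks container IDs in a set and a hypothetical `reconcile` function. It is not how Kubernetes controllers are implemented; real controllers run continuously against the Kubernetes API.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Set


@dataclass
class ToyCluster:
    """Hypothetical stand-in for a cluster: only tracks running container IDs."""

    running: Set[str] = field(default_factory=set)
    _ids: count = field(default_factory=count, repr=False)

    def start_container(self) -> str:
        cid = f"c{next(self._ids)}"
        self.running.add(cid)
        return cid

    def stop_container(self, cid: str) -> None:
        # Also used to simulate a crash.
        self.running.discard(cid)


def reconcile(cluster: ToyCluster, desired_replicas: int) -> None:
    """One pass of a desired-state loop: move actual state toward desired state."""
    actual = len(cluster.running)
    if actual < desired_replicas:
        # Too few containers: replace crashed ones or scale up.
        for _ in range(desired_replicas - actual):
            cluster.start_container()
    elif actual > desired_replicas:
        # Too many containers: scale down by stopping the surplus.
        for cid in sorted(cluster.running)[: actual - desired_replicas]:
            cluster.stop_container(cid)


if __name__ == "__main__":
    cluster = ToyCluster()
    reconcile(cluster, desired_replicas=3)  # starts c0, c1, c2
    cluster.stop_container("c0")            # simulate a container going down
    reconcile(cluster, desired_replicas=3)  # starts a replacement, c3
    print(sorted(cluster.running))          # ['c1', 'c2', 'c3']
```

The loop never needs to know *why* a container disappeared; it only reacts to the gap between desired and actual state, which is what makes the same mechanism serve both failover and scaling.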

What makes GPT-3 a good candidate?

Ask any DevOps engineer what it is like to remember the Kubernetes commands for deploying and maintaining a Kubernetes cluster, or to precisely write a deployment file where a hundred other things can go wrong. You will almost always get the same answer: Kubernetes is like an ocean, and there is no way to know it through and through!

GPT-3 can pick up a new task from just a few examples (few-shot learning), in contrast to the conventional approach of training an NLP model on a large corpus, which is difficult, time-consuming, and expensive. The examples are supplied in the prompt itself rather than through additional training, and after seeing only a few of them the model can generate surprisingly human-like text for the task you are trying to accomplish. Priming the GPT-3 API to think like a DevOps engineer can therefore allow the model to generate accurate Kubernetes commands and deployment files.

The AI Kube Bot, powered by GPT-3, takes care of the heavy lifting: you only have to describe the task in plain English to generate complex and accurate deployment files in YAML.
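Because the YAML comes back from the model as free-form text, it can help to tidy it up and sanity-check it before showing it to the user. The sketch below assumes the prompt starts each new task with an `English:` label (an assumption, not necessarily the bot's real format), so `clean_completion` cuts off anything after that marker. The hypothetical `looks_like_manifest` is a deliberately shallow check for the `apiVersion` and `kind` keys that every Kubernetes object manifest carries at the top level; it is not a YAML parser.

```python
import re

# Top-level keys that every Kubernetes object manifest carries.
REQUIRED_TOP_LEVEL_KEYS = ("apiVersion", "kind")


def clean_completion(text: str, stop: str = "English:") -> str:
    """Drop anything from the stop marker onward and strip surrounding whitespace."""
    return text.split(stop, 1)[0].strip()


def looks_like_manifest(yaml_text: str) -> bool:
    """Shallow check: the required keys appear as unindented top-level YAML keys.

    For real validation, use a YAML parser or `kubectl apply --dry-run=client`.
    """
    top_level = set(re.findall(r"^([A-Za-z]+):", yaml_text, flags=re.MULTILINE))
    return all(key in top_level for key in REQUIRED_TOP_LEVEL_KEYS)


if __name__ == "__main__":
    # A made-up completion that runs on into the next prompt entry.
    raw = "\napiVersion: v1\nkind: Pod\nmetadata:\n  name: demo\nEnglish: next task"
    manifest = clean_completion(raw)
    print(manifest)
    print(looks_like_manifest(manifest))
```

A regex check like this is cheap enough to run on every response, and it catches the most common failure (the model answering with prose instead of a manifest) before a heavier validation step.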

Application walkthrough

Now I will walk you through the AI Kube Bot application step by step:

When creating any GPT-3 application, the first and foremost thing to consider is the design and content of the training prompt. Prompt design is the most important step in priming the GPT-3 model to give a relevant, contextual response.

As a rule of thumb, when designing the training prompt you should aim for a zero-shot response from the model (no examples at all). If that isn’t possible, move on to a few examples rather than providing an entire corpus. The standard flow for training prompt design looks like this: Zero-Shot → Few-Shot → Corpus-Based Priming.
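The zero-shot → few-shot part of this flow can be sketched as a small loop that adds one example at a time until the answer is acceptable. This is a sketch under stated assumptions: `complete` is a hypothetical stand-in for whatever function sends a prompt to GPT-3 and returns the completion, `is_acceptable` is a hypothetical check you supply, and the `English:`/`Output:` labels are an assumed prompt format. Corpus-based priming, the last step, is out of scope; the function simply returns its final attempt when it runs out of examples.

```python
from typing import Callable, Sequence, Tuple

Example = Tuple[str, str]  # (English task, expected Kubernetes output)


def make_prompt(task: str, examples: Sequence[Example]) -> str:
    """Zero-shot when `examples` is empty, few-shot otherwise."""
    shots = "".join(f"English: {q}\nOutput: {a}\n\n" for q, a in examples)
    return f"{shots}English: {task}\nOutput:"


def escalate(
    task: str,
    examples: Sequence[Example],
    complete: Callable[[str], str],
    is_acceptable: Callable[[str], bool],
) -> Tuple[int, str]:
    """Try zero-shot first, then add one example at a time.

    Returns (number of examples used, completion). If no prompt yields an
    acceptable answer, returns the last attempt so the caller can fall back
    to heavier priming.
    """
    answer = ""
    for k in range(len(examples) + 1):
        answer = complete(make_prompt(task, examples[:k]))
        if is_acceptable(answer):
            return k, answer
    return len(examples), answer


if __name__ == "__main__":
    def fake_complete(prompt: str) -> str:
        # Pretend model: answers correctly only once it has seen one example.
        return " kubectl get pods" if prompt.count("English:") > 1 else " Not sure."

    shots = [("List all nodes", " kubectl get nodes")]
    print(escalate("List all pods", shots, fake_complete,
                   lambda a: a.strip().startswith("kubectl")))
```

Keeping the cheapest prompt that works matters in practice: every example added to the prompt costs tokens on every request.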

To design the training prompt for the AI Kube Bot application, I used the following prompt structure:

- Description: A line or two of initial context describing what the Kube Bot is supposed to do.

- Natural Language (English): A minimal one-line description of the task to be performed by the Kubernetes assistant. It gives GPT-3 the context it needs to generate the proper Kubernetes command or deployment code.

- Output: The Kubernetes command or deployment code corresponding to the English description provided as input to the GPT-3 model.
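The three-part structure above can be sketched as a small template that renders the description, the English/Output example pairs, and finally the new query with an open `Output:` for the model to complete. The class name `KubePrompt`, the description text, and the two example pairs are illustrative assumptions, not the prompt actually used by the AI Kube Bot; the labels simply mirror the component names in the list.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class KubePrompt:
    """Hypothetical container for the Description / English / Output prompt."""

    description: str
    examples: List[Tuple[str, str]] = field(default_factory=list)

    def render(self, query: str) -> str:
        parts = [self.description.strip(), ""]
        for english, output in self.examples:
            parts += [f"English: {english}", f"Output: {output}", ""]
        # End with the new query and an open "Output:" for the model to fill in.
        parts += [f"English: {query}", "Output:"]
        return "\n".join(parts)


prompt = KubePrompt(
    description="This is a Kubernetes assistant that converts plain English into kubectl commands.",
    examples=[
        ("List all pods in every namespace", "kubectl get pods --all-namespaces"),
        ("Scale the deployment named web to 5 replicas", "kubectl scale deployment web --replicas=5"),
    ],
)

if __name__ == "__main__":
    print(prompt.render("Show the logs of the pod named api-0"))
```

When sending such a prompt to a completion endpoint, one would typically also pass a stop sequence such as `"English:"` so that the model halts after a single answer instead of inventing the next example.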

Let’s see an example in action to truly understand the power of GPT-3 in generating Kubernetes commands and deployment code from plain English. In the example below, we generate YAML files by providing minimal instructions to the AI Kube Bot.

Conclusion

GPT-3 from OpenAI has captured public attention like few other AI models this century. What makes it so exciting is its sheer flexibility in performing a range of generalized tasks with near-human efficiency and accuracy. We can already see an emerging trend of GPT-3-based applications that let users write code or design digital products using only natural language commands, pointing toward an exciting future of no-code applications.

If you would like to learn more or want me to write more on this subject, feel free to reach out.

Kubernetes Made Easy With GPT-3 was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.