How To Create a Python Package for Fetching Weather Data

Last Updated on January 12, 2023 by Editorial Team

Author(s): Stavros Theocharis

Originally published on Towards AI.

An easy implementation of a Python package for fetching weather data from any location

Markets across the board rely on weather reports for a wide variety of purposes. Investment forecasts, load demand planning, supply chain management, business analytics applications, transportation distribution demands, and time-series enhanced analysis are some of the many areas of business that might benefit from weather data.

I have often needed weather data for my projects, most of the time to integrate it into tasks involving time series (e.g., to capture seasonality). In the past, I would look for weather data on the Internet and either download it by hand, write a quick script to scrape it from websites, or use an open application programming interface (API).

I have sometimes used Open-Meteo, an open-source weather API (free for non-commercial use), and I recently learned about another open API for meteorological data that the National Aeronautics and Space Administration (NASA) provides as part of its "POWER" project (NASA's LaRC POWER project). NASA's API provides only historical data, whereas Open-Meteo offers a wide variety of endpoints for both historical and forecast data.

NASA has long supported satellite systems and research that provide data critical to the study of climate and climatic processes through its Earth Science research program. This funding continues today. Estimates of surface solar energy fluxes and long-term climatological averages of meteorological parameters are included in these data sets. In addition, mean daily values of the primary solar and meteorological data are supplied in a manner suitable for time series.

To have a robust, fully functional package that I can reuse whenever a project needs weather data, I created a GitHub repository called “weather data retriever” and made several enhancements so that it can be installed and used quickly as a package. Through this package, one can choose to use either Open-Meteo’s or NASA’s functionality. To learn how to install and use it directly, follow the instructions in “README.md” and the quick-start Jupyter notebooks. In this article, I mainly explain the logic behind the functionality and the implementation of such a package.

So, let’s dive into the code…

Implementation

Helpful functions

As stated in the API guides of NASA’s LaRC POWER project and Open-Meteo, both APIs need the latitude and longitude of an area in order to return that area’s weather data. To keep things simple, we will write a function using the “geopy” package that returns the coordinates given the name of the area:

```python
from typing import Dict, List, Literal, Tuple, Union

from geopy.geocoders import Nominatim


def get_location_from_name(
    name: str, use_bound_box: bool = False
) -> Tuple[str, Tuple]:
    """Returns the full address and either (latitude, longitude) or a bounding box."""
    nom_loc = Nominatim(user_agent="weather_data_retriever")
    try:
        location = nom_loc.geocode(name)
    except Exception as e:
        raise ValueError("Error in finding Area & Coordinates.") from e
    if location is None:
        # geocode() returns None when nothing matches the query
        raise ValueError(f"No location found for '{name}'.")
    if use_bound_box:
        # Nominatim returns the box as strings: [latmin, latmax, lonmin, lonmax]
        coordinates = location.raw["boundingbox"]
        return location[0], tuple(coordinates)
    # location[0] is the address, location[1] is (latitude, longitude)
    return location[0], location[1]
```

Since NASA’s API can also take the bounding box of an area instead of a single point, our custom function can optionally return the bounding box as well.

So now, we can get the longitude and latitude values simply by entering the name of the area.
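Nominatim returns the bounding box as four strings. The following is a minimal sketch, assuming the [latmin, latmax, lonmin, lonmax] order noted in get_location_from_name, that converts them to floats keyed by the names of NASA POWER's regional query parameters (latitude-min, latitude-max, longitude-min, longitude-max, as they appear in the regional request URL). bounding_box_to_region is a hypothetical helper, not part of the package, and the input values are illustrative.

```python
from typing import Dict, Sequence


def bounding_box_to_region(raw_bbox: Sequence[str]) -> Dict[str, float]:
    """Hypothetical helper: Nominatim bounding box -> NASA regional parameters."""
    if len(raw_bbox) != 4:
        raise ValueError(f"Expected 4 values, got {len(raw_bbox)}")
    # Assumed order: [latmin, latmax, lonmin, lonmax], each as a string
    lat_min, lat_max, lon_min, lon_max = (float(v) for v in raw_bbox)
    # Reject inverted boxes before any request is built
    if lat_min > lat_max or lon_min > lon_max:
        raise ValueError("Bounding box minimums exceed maximums")
    return {
        "latitude-min": lat_min,
        "latitude-max": lat_max,
        "longitude-min": lon_min,
        "longitude-max": lon_max,
    }


# Illustrative input in Nominatim's string format
region = bounding_box_to_region(["37.8", "38.1", "23.5", "23.9"])
print(region)
```

Validating the order early gives a clear error locally instead of a rejected API request later.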

NASA’s API

In NASA’s case, the bounding box imposes a limitation on the final request: as far as I have seen, the maximum longitude should not be more than 10 degrees greater than the minimum. The following helper caps it accordingly:

```python
def adjust_coordinates_on_limitations(
    longitude_max: Union[float, str], longitude_min: Union[float, str]
) -> str:
    """
    Checks whether the max and min longitudes differ by more than 10 degrees.
    If so, caps the max, because the weather API has limitations.
    """
    if float(longitude_max) > float(longitude_min) + 10:
        longitude_max = float(longitude_min) + 10
    return str(longitude_max)
```

NASA’s API provides only historical data. It supports specific aggregation values (“hourly”, “daily”, “monthly”, and “climatology”) and expects specific date formats: for hourly and daily data, the date “2022-05-05” has to be given as “20220505”, while for monthly and climatology data only the year part (“2022”) is supported.

```python
def format_date_for_larc_power(
    start_date: str,
    end_date: str,
    aggregation: Literal["hourly", "daily", "monthly", "climatology"],
) -> Tuple[str, str]:
    """
    Formats the dates based on the aggregation so that they are ready
    to be used in a NASA weather request.
    """
    if aggregation not in ["hourly", "daily", "monthly", "climatology"]:
        raise ValueError("Invalid aggregation value")
    if aggregation in ("monthly", "climatology"):
        # Only the year is supported, e.g. "2022-05-05" -> "2022"
        mod_start_date = start_date[0:4]
        mod_end_date = end_date[0:4]
    else:
        # e.g. "2022-05-05" -> "20220505"
        mod_start_date = start_date.replace("-", "")
        mod_end_date = end_date.replace("-", "")
    return mod_start_date, mod_end_date
```
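format_date_for_larc_power relies on string slicing, so a malformed date such as "2022/05/05" passes through unchanged. Below is a sketch of a stricter variant for a single date, assuming ISO YYYY-MM-DD input; format_power_date is a hypothetical name, not part of the package.

```python
from datetime import date, datetime


def format_power_date(iso_date: str, aggregation: str) -> str:
    """Hypothetical helper: formats one ISO date for a NASA POWER request."""
    # strptime raises ValueError for anything that is not YYYY-MM-DD
    parsed: date = datetime.strptime(iso_date, "%Y-%m-%d").date()
    if aggregation in ("hourly", "daily"):
        return parsed.strftime("%Y%m%d")  # e.g. "20220505"
    if aggregation in ("monthly", "climatology"):
        return parsed.strftime("%Y")  # year only, e.g. "2022"
    raise ValueError(f"Invalid aggregation value: {aggregation}")


print(format_power_date("2022-05-05", "daily"))    # 20220505
print(format_power_date("2022-05-05", "monthly"))  # 2022
```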

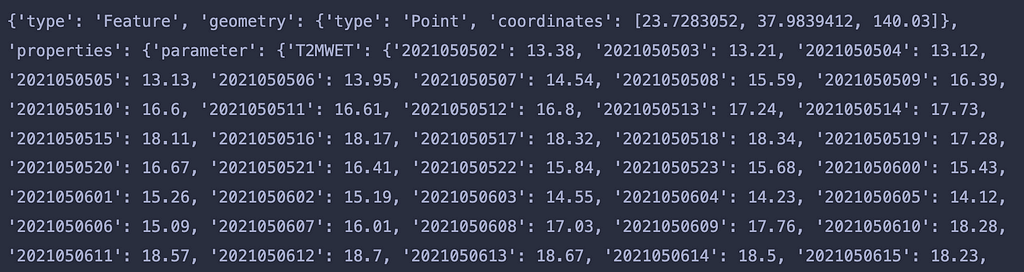

For each one of the aggregation types, a specific output comes as a response. For example, for a random request for “hourly” type aggregation, we get:

This has to be formatted based on the type of aggregation used in order to construct our pd.DataFrame object. So, let’s define some more functions:

```python
from datetime import datetime

import pandas as pd


def convert_str_hour_date_to_datetime(str_hour_date: str) -> datetime:
    """Converts an hourly date string (e.g. "2022050501") to datetime."""
    return datetime.strptime(str_hour_date, "%Y%m%d%H")


def convert_response_larc_power_dict_to_dataframe(
    response_dict: Dict[str, Dict[str, float]], aggregation: str
) -> pd.DataFrame:
    """
    Converts the dict coming from the response to a dataframe based on the aggregation.
    """
    # Index = date keys, columns = weather parameters
    weather_df = pd.DataFrame(response_dict).reset_index()
    coming_columns = list(response_dict.keys())
    weather_df.columns = ["date"] + coming_columns
    if aggregation == "daily":
        # Daily keys look like "20220505"
        weather_df["date"] = pd.to_datetime(weather_df["date"], format="%Y%m%d")
    elif aggregation == "hourly":
        weather_df["date"] = pd.to_datetime(
            weather_df["date"].apply(convert_str_hour_date_to_datetime)
        )
    # Monthly and climatology keys are kept as strings
    return weather_df
```
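To illustrate the shape that convert_response_larc_power_dict_to_dataframe expects (parameter name mapped to {date key: value}, as read from content["properties"]["parameter"]), here is a vectorized sketch run on a tiny hand-made dictionary. The values are made up, and power_dict_to_frame is a hypothetical name. Parsing the whole column with an explicit format avoids a row-by-row apply.

```python
from typing import Dict

import pandas as pd

# Date-key formats assumed from the hourly/daily handling above
DATE_FORMATS = {"hourly": "%Y%m%d%H", "daily": "%Y%m%d"}


def power_dict_to_frame(
    response_dict: Dict[str, Dict[str, float]], aggregation: str
) -> pd.DataFrame:
    """Hypothetical helper: {param: {date_key: value}} -> tidy DataFrame."""
    # Rows are date keys, columns are parameter names
    df = pd.DataFrame(response_dict).rename_axis("date").reset_index()
    fmt = DATE_FORMATS.get(aggregation)
    if fmt is not None:
        # Parse the whole column at once with an explicit format
        df["date"] = pd.to_datetime(df["date"], format=fmt)
    # Monthly/climatology keys are left as strings
    return df


# Made-up values in the nested-dict shape of the POWER response
sample = {
    "T2M": {"2022050500": 14.2, "2022050501": 13.8},
    "RH2M": {"2022050500": 71.0, "2022050501": 74.5},
}
hourly_df = power_dict_to_frame(sample, "hourly")
print(hourly_df)
```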

Now, let’s define the main function:

```python
import json

import requests


def get_larc_power_weather_data(
    start_date: str,
    end_date: str,
    coordinates: Union[Tuple[float, float], Tuple[float, float, float, float]],
    aggregation: Literal["hourly", "daily", "monthly", "climatology"] = "daily",
    community: Literal["AG", "RE", "SB"] = "RE",
    regional: bool = False,
    variables: List[str] = [
        "T2M",
        "T2MDEW",
        "T2MWET",
        "TS",
        "T2M_RANGE",
        "T2M_MAX",
        "T2M_MIN",
        "RH2M",
        "PRECTOT",
        "WS2M",
        "ALLSKY_SFC_SW_DWN",
    ],
) -> Union[pd.DataFrame, Dict[str, Dict[str, float]]]:
    """
    This function retrieves NASA's historical weather data.
    """
    if aggregation not in ["hourly", "daily", "monthly", "climatology"]:
        raise ValueError("Invalid aggregation value")
    if community not in ["AG", "RE", "SB"]:
        raise ValueError("Invalid community value")

    # Basic modifications
    formatted_variables = ",".join(variables)
    mod_start_date, mod_end_date = format_date_for_larc_power(
        start_date, end_date, aggregation
    )

    if regional:
        base_url = (
            "https://power.larc.nasa.gov/api/temporal/{aggregation}/regional"
            "?parameters={parameters}&community={community}"
            "&latitude-min={latitude_min}&latitude-max={latitude_max}"
            "&longitude-min={longitude_min}&longitude-max={longitude_max}"
            "&start={start}&end={end}&format=JSON"
        )
        # Bounding box order: [latmin, latmax, lonmin, lonmax]
        latitude_min, latitude_max, longitude_min, longitude_max = coordinates
        longitude_max = adjust_coordinates_on_limitations(longitude_max, longitude_min)
        api_request_url = base_url.format(
            latitude_min=latitude_min,
            longitude_min=longitude_min,
            latitude_max=latitude_max,
            longitude_max=longitude_max,
            start=mod_start_date,
            end=mod_end_date,
            aggregation=aggregation,
            community=community,
            parameters=formatted_variables,
        )
    else:
        base_url = (
            "https://power.larc.nasa.gov/api/temporal/{aggregation}/point"
            "?parameters={parameters}&community={community}"
            "&longitude={longitude}&latitude={latitude}"
            "&start={start}&end={end}&format=JSON"
        )
        latitude, longitude = coordinates
        api_request_url = base_url.format(
            longitude=longitude,
            latitude=latitude,
            start=mod_start_date,
            end=mod_end_date,
            aggregation=aggregation,
            community=community,
            parameters=formatted_variables,
        )

    try:
        response = requests.get(url=api_request_url, verify=True, timeout=30.00)
    except requests.exceptions.RequestException as e:
        # Re-raise: otherwise `response` would be undefined below
        raise ConnectionError(
            f"There is an error with the NASA weather API. The error is: {e}"
        ) from e

    content = json.loads(response.content.decode("utf-8"))
    # Any messages returned by the API are treated as errors here
    if len(content.get("messages", [])) > 0:
        raise InterruptedError(content["messages"])

    if regional:
        # Regional responses are returned as raw JSON
        return content
    selected_content_dict = content["properties"]["parameter"]
    return convert_response_larc_power_dict_to_dataframe(
        selected_content_dict, aggregation
    )
```
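The URL templates above are filled in with str.format, which does not escape any values. Below is a sketch of the same point request built with the standard library's urllib.parse.urlencode, assuming the query parameters shown in the point template. build_power_point_url is a hypothetical name, and the coordinates and dates are illustrative. (requests.get also accepts a params dictionary that it encodes for you.)

```python
from typing import Sequence, Union
from urllib.parse import urlencode

# Point endpoint, as used in get_larc_power_weather_data
BASE_URL = "https://power.larc.nasa.gov/api/temporal/{aggregation}/point"


def build_power_point_url(
    aggregation: str,
    parameters: Sequence[str],
    community: str,
    latitude: Union[float, str],
    longitude: Union[float, str],
    start: str,
    end: str,
) -> str:
    """Hypothetical helper: builds the point-request URL with urlencode."""
    query = {
        "parameters": ",".join(parameters),
        "community": community,
        "longitude": longitude,
        "latitude": latitude,
        "start": start,
        "end": end,
        "format": "JSON",
    }
    # urlencode escapes each value; safe="," keeps the comma-separated
    # parameter list readable instead of turning commas into %2C
    return BASE_URL.format(aggregation=aggregation) + "?" + urlencode(query, safe=",")


url = build_power_point_url(
    "daily", ["T2M", "RH2M"], "RE", 37.98, 23.73, "20220501", "20220505"
)
print(url)
```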

And the entire pipeline:

```python
def fetch_larc_power_historical_weather_data(
    location_name: str,
    start_date: str,
    end_date: str,
    aggregation: Literal["hourly", "daily", "monthly", "climatology"] = "daily",
    community: Literal["AG", "RE", "SB"] = "RE",
    regional: bool = False,
    use_bound_box: bool = False,
    variables_to_fetch: List[str] = ["default"],
) -> Union[pd.DataFrame, Dict[str, Dict[str, float]]]:
    # Note: regional=True needs use_bound_box=True, so that coordinates
    # hold the 4-value bounding box expected by the regional request
    location, coordinates = get_location_from_name(location_name, use_bound_box)
    if variables_to_fetch == ["default"]:
        # l_power_base_vars_to_fetch and l_power_additional_vars_to_fetch are
        # lists of variable names defined elsewhere in the package
        # (not shown in this article)
        if aggregation == "hourly":
            variables_to_fetch = l_power_base_vars_to_fetch
        else:
            variables_to_fetch = (
                l_power_base_vars_to_fetch + l_power_additional_vars_to_fetch
            )
    return get_larc_power_weather_data(
        start_date=start_date,
        end_date=end_date,
        aggregation=aggregation,
        community=community,
        regional=regional,
        coordinates=coordinates,
        variables=variables_to_fetch,
    )
```

For more information about the returned weather variables and the different possible combinations, have a look at the repository’s README file and the corresponding notebook.

The data was obtained from the National Aeronautics and Space Administration (NASA) Langley Research Center (LaRC) Prediction of Worldwide Energy Resource (POWER) Project funded through the NASA Earth Science/Applied Science Program.

Open-Meteo’s API

Open-Meteo offers a wide variety of meteorological variables; here, we will use the most common ones. Some variables are requested only for forecast data (e.g., “showers”), so the default list depends on both the case (historical or forecast) and the aggregation, which can be “hourly” or “daily”:

```python
def choose_meteo_default_variables(
    aggregation: Literal["hourly", "daily"], case: Literal["forecast", "historical"]
) -> List[str]:
    if aggregation == "hourly":
        default_variables = [
            "temperature_2m",
            "relativehumidity_2m",
            "dewpoint_2m",
            "apparent_temperature",
            "precipitation",
            "rain",
            "snowfall",
        ]
        if case == "forecast":
            # Added only for forecast requests
            default_variables.append("showers")
    else:
        default_variables = [
            "temperature_2m_max",
            "temperature_2m_min",
            "apparent_temperature_max",
            "apparent_temperature_min",
            "sunrise",
            "precipitation_sum",
            "rain_sum",
        ]
        if case == "forecast":
            # Added only for forecast requests
            default_variables.extend(["showers_sum", "snowfall_sum"])
    return default_variables
```

Next, let’s define a function that builds the request URL, depending on whether historical or forecast data is requested:

```python
def build_meteo_request_url(
    aggregation: Literal["hourly", "daily"],
    parameters_str: str,
    longitude: float,
    latitude: float,
    case: Literal["forecast", "historical"],
    start_date: Union[str, None],
    end_date: Union[str, None],
) -> str:
    if case == "historical":
        # Archive endpoint, queried with an explicit date range
        base_url = (
            "https://archive-api.open-meteo.com/v1/archive"
            "?latitude={latitude}&longitude={longitude}"
            "&start_date={start_date}&end_date={end_date}"
            "&{aggregation}={parameters_str}&timeformat=unixtime&timezone=auto"
        )
        return base_url.format(
            aggregation=aggregation,
            parameters_str=parameters_str,
            longitude=longitude,
            latitude=latitude,
            start_date=start_date,
            end_date=end_date,
        )
    # Forecast endpoint: no date range in the URL
    base_url = (
        "https://api.open-meteo.com/v1/forecast"
        "?latitude={latitude}&longitude={longitude}"
        "&{aggregation}={parameters_str}&timeformat=unixtime&timezone=auto"
    )
    return base_url.format(
        aggregation=aggregation,
        parameters_str=parameters_str,
        longitude=longitude,
        latitude=latitude,
    )
```
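In the historical case, build_meteo_request_url simply interpolates start_date and end_date, so a missing date would end up in the URL as the literal text "None". Here is a minimal guard, assuming ISO YYYY-MM-DD dates (the format Open-Meteo's archive endpoint uses). validate_meteo_dates is a hypothetical helper, not part of the package.

```python
from datetime import date
from typing import Optional, Tuple


def validate_meteo_dates(
    case: str, start_date: Optional[str], end_date: Optional[str]
) -> Tuple[Optional[str], Optional[str]]:
    """Hypothetical guard run before building an Open-Meteo request URL."""
    if case == "forecast":
        # The forecast URL above does not use dates at all
        return None, None
    if start_date is None or end_date is None:
        raise ValueError("Historical requests need start_date and end_date")
    # fromisoformat raises ValueError for malformed dates
    start = date.fromisoformat(start_date)
    end = date.fromisoformat(end_date)
    if start > end:
        raise ValueError("start_date must not be after end_date")
    return start.isoformat(), end.isoformat()


print(validate_meteo_dates("historical", "2022-01-01", "2022-01-31"))
```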

Next comes the main Open-Meteo function:

```python
def get_open_meteo_weather_data(
    coordinates: Tuple[float, float],
    aggregation: Literal["hourly", "daily"],
    case: Literal["forecast", "historical"],
    parameters: List[str] = ["default"],
    start_date: Union[str, None] = None,
    end_date: Union[str, None] = None,
) -> Tuple[
    pd.DataFrame, Dict[str, Union[str, float, Dict[str, Union[List[str], List[float]]]]]
]:
    """
    This function retrieves Open-Meteo historical or forecast weather data at a location point.
    """
    parameters_str = ",".join(parameters)
    latitude, longitude = coordinates
    api_request_url = build_meteo_request_url(
        aggregation=aggregation,
        parameters_str=parameters_str,
        longitude=longitude,
        latitude=latitude,
        case=case,
        start_date=start_date,
        end_date=end_date,
    )
    try:
        response = requests.get(url=api_request_url, verify=True, timeout=30.00)
    except requests.exceptions.RequestException as e:
        # Catch only request errors (a bare except would also swallow Ctrl+C)
        raise ConnectionAbortedError("Failed to establish connection") from e

    content = json.loads(response.content.decode("utf-8"))
    weather_data_df = pd.DataFrame(content[aggregation])
    # "time" holds Unix timestamps in seconds; convert the whole column at once
    weather_data_df["time"] = pd.to_datetime(weather_data_df["time"], unit="s")
    return weather_data_df, content
```

More information about the use of the arguments can be found inside the repository.
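The time conversion in get_open_meteo_weather_data leaves the timestamps in UTC: according to Open-Meteo's documentation, Unix timestamps returned with timeformat=unixtime are in GMT+0, and the response carries a utc_offset_seconds field for the timezone resolved by timezone=auto. Below is a sketch that shifts the times to local wall-clock time, assuming that response shape. meteo_block_to_local_frame and the payload are hypothetical.

```python
import pandas as pd


def meteo_block_to_local_frame(content: dict, aggregation: str) -> pd.DataFrame:
    """Hypothetical helper: Open-Meteo JSON block -> DataFrame in local time."""
    df = pd.DataFrame(content[aggregation])
    # Assumed top-level field; defaults to 0 (stay in UTC) if absent
    offset = pd.to_timedelta(content.get("utc_offset_seconds", 0), unit="s")
    # Vectorized epoch-seconds conversion, then shift to local wall-clock time
    df["time"] = pd.to_datetime(df["time"], unit="s") + offset
    return df


# Made-up payload: 1672531200 is 2023-01-01 00:00 UTC
content = {
    "utc_offset_seconds": 7200,
    "hourly": {"time": [1672531200, 1672534800], "temperature_2m": [9.5, 9.1]},
}
local_df = meteo_block_to_local_frame(content, "hourly")
print(local_df)
```

The result is naive local time; if timezone-aware values are needed, the timezone name in the response could be used with pandas' tz_localize instead.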

Finally, the pipeline function that ties everything together is:

```python
def fetch_open_meteo_weather_data(
    location_name: str,
    aggregation: Literal["hourly", "daily"],
    case: Literal["forecast", "historical"],
    variables_to_fetch: List[str] = ["default"],
    start_date: Union[str, None] = None,
    end_date: Union[str, None] = None,
) -> Tuple[
    pd.DataFrame, Dict[str, Union[str, float, Dict[str, Union[List[str], List[float]]]]]
]:
    # Open-Meteo is queried at a single point, so no bounding box is used
    location, coordinates = get_location_from_name(location_name, use_bound_box=False)
    if "default" in variables_to_fetch:
        parameters = choose_meteo_default_variables(aggregation=aggregation, case=case)
    else:
        parameters = variables_to_fetch
    return get_open_meteo_weather_data(
        start_date=start_date,
        end_date=end_date,
        aggregation=aggregation,
        coordinates=coordinates,
        parameters=parameters,
        case=case,
    )
```

Please look at the repository’s README file and the accompanying notebook for more information about the returned meteorological variables and the many ways these variables can be put together.

Final parts of the package

To make the functions importable as a package, include an “__init__.py” file in the same folder as the “.py” files that contain the above functions and pipelines. Note that setuptools’ find_packages(), used in the setup.py below, only picks up directories that contain an “__init__.py” file.

This file needs to import the two main pipelines so that they can be called directly from the package:

```python
from weather_data_retriever.pipelines import (
    fetch_larc_power_historical_weather_data,
    fetch_open_meteo_weather_data,
)
```
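To make the folder structure concrete, here is a sketch that creates this package layout in a temporary directory. It assumes the package folder is named weather_data_retriever (as in the import above) and that setup.py, LICENSE, and README.md sit at the repository root. The article does not list the remaining module names, so only pipelines.py is created; scaffold_package is a hypothetical helper.

```python
import tempfile
from pathlib import Path
from typing import List

# Contents of the package's __init__.py, as described above
INIT_CONTENT = (
    "from weather_data_retriever.pipelines import (\n"
    "    fetch_larc_power_historical_weather_data,\n"
    "    fetch_open_meteo_weather_data,\n"
    ")\n"
)


def scaffold_package(root: Path) -> List[str]:
    """Hypothetical helper: writes the skeleton and lists the created files."""
    pkg = root / "weather_data_retriever"
    pkg.mkdir(parents=True, exist_ok=True)
    (pkg / "__init__.py").write_text(INIT_CONTENT)
    # Placeholder module; the real one holds the fetch_* pipelines
    (pkg / "pipelines.py").write_text("# fetch_* pipeline functions go here\n")
    # Root-level files (left empty in this sketch)
    for name in ("setup.py", "LICENSE", "README.md"):
        (root / name).touch()
    return sorted(
        p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file()
    )


with tempfile.TemporaryDirectory() as tmp:
    files = scaffold_package(Path(tmp))
print(files)
```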

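You can also include a “LICENSE” file, if you wish, to define the terms of distribution and use of your package. Note that the setup.py below reads this file, so either add it or remove the corresponding lines.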

Finally, create a “setup.py” file:

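```python
from setuptools import find_packages, setup

# Reads the LICENSE file mentioned above (open() fails if it is missing)
with open("LICENSE") as f:
    license_text = f.read()

setup(
    name="weather_data_retriever",
    version="1.0",
    author="Stavros Theocharis",
    description="Weather data retriever",
    long_description="Multiple sources weather data retriever",
    url="https://github.com/stavrostheocharis/weather_data_retriever.git",
    # exclude expects a list of patterns; a bare string would be split into characters
    packages=find_packages(exclude=["tests", "tests.*"]),
    # typing.Literal, used throughout the code, requires Python 3.8+
    python_requires=">=3.8",
    install_requires=[
        "pandas",
        "geopy",
        "requests",
    ],
    license=license_text,
)
```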

And that’s it! Your new weather data package is ready to be used. More enhancements are possible, but even this version has saved me a lot of time that I used to spend searching for and adjusting scripts to get weather data.

Conclusion

When it comes to using weather data or forecasts, there is a significant gap between obtaining them from various sources and combining them! Even for very simple projects, I had to log in to several accounts or obtain tokens for different services, and these services often did not provide well-designed APIs either.

In this article, I presented an easy implementation for creating a basic package for fetching weather data from two stable APIs provided by Open-Meteo and NASA. More features can be added in order to enhance functionality in the future.

How To Create a Python Package for Fetching Weather Data was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.


Note: Content contains the views of the contributing authors and not Towards AI.