How Can AI Help Visually Impaired People See The World

Last Updated on December 30, 2023 by Editorial Team

Author(s): Shuyang Xiang

Originally published on Towards AI.

My Passion for AI and Challenges Faced by the Visually Impaired

For over six years, I’ve dedicated my time as a volunteer for an association aiding visually impaired individuals in their daily tasks — tasks that are seemingly simple for those of us with sight, such as shopping, reading, visiting a doctor, or merely taking a walk.

Lately, however, I find myself increasingly frustrated. On one side, my passion lies in mathematics and AI, yet on the other, my visually impaired friends continue to grapple with basic daily activities, reaping little benefit from the rapid advancements in AI. Instead of bridging the gap between us and them, it seems that new technologies are only widening it.

I have been thinking for a while about how AI, particularly Large Language Models (LLMs), could help narrow that gap. This blog post is my first attempt, and the work is still at an early stage. I share my initial ideas and implementations here as the starting point for my exploration. I welcome discussion, and I would truly appreciate hearing from anyone interested in taking the next step with me.

A Voice to Voice Vision Assistant

I am developing a voice-controlled image assistant powered by LLMs as one of my first projects. Visually impaired people often struggle with tasks that depend on sight, such as identifying the objects around them or recognizing colors. The assistant gives them a way to interact with an AI through voice alone. By combining computer vision models with a language agent, it aims to describe what is in an image so that users can perceive their surroundings without relying on their eyes.

In essence, my idea is to build an agent with LangChain on top of ChatGPT and equip it with image-related tools such as color recognition and object detection. Instead of the usual text-based input and output, we interact with the agent by voice, using speech recognition and text-to-speech tools.

Voice to voice

To transcribe audio into text for the LLM’s input, we can use the SpeechRecognition library with the Google Speech API.

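    import speech_recognition as sr

    recognizer = sr.Recognizer()
    with sr.Microphone() as source:
        # Sample background noise for 4 seconds to calibrate the energy threshold
        recognizer.adjust_for_ambient_noise(source, duration=4)
        # Record until the speaker pauses
        audio = recognizer.listen(source)
    # Send the recording to the Google speech API and get the transcript
    user_input = recognizer.recognize_google(audio, language='en')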

The code creates a Recognizer object from the speech_recognition library, calibrates it against ambient noise, records from the microphone, and converts the recorded audio into text. For additional recognition options, please refer to the detailed information provided in this accompanying blog post.
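Speech recognition can fail when the audio is unintelligible or the service is unreachable, so a voice interface usually needs some form of retry. The following is a minimal sketch of that idea, not part of the original prototype: `transcribe_with_retries`, `listen_once`, and `max_attempts` are hypothetical names, and the listening step is passed in as a plain function so the sketch runs without a microphone.

```python
from typing import Callable, Optional, Tuple, Type


def transcribe_with_retries(
    listen_once: Callable[[], str],
    max_attempts: int = 3,
    errors: Tuple[Type[Exception], ...] = (Exception,),
) -> Optional[str]:
    """Call listen_once until it yields non-empty text or attempts run out.

    listen_once is a hypothetical stand-in for the record-and-recognize
    steps shown above. Returns None if every attempt fails.
    """
    for _ in range(max_attempts):
        try:
            text = listen_once().strip()
        except errors:
            # Recognition failed on this attempt; try again.
            continue
        if text:
            return text
    return None
```

With the SpeechRecognition library, `errors` could be set to `(sr.UnknownValueError, sr.RequestError)`, the exceptions that library raises for unintelligible audio and for failed API requests.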

To convert the text output generated by the LLM into spoken words, we will employ gTTS (Google Text-to-Speech) and playsound modules.

    from gtts import gTTS
    import playsound

    # `response` holds the text returned by the LLM
    response_voice = gTTS(text=response, lang='en', slow=False)
    response_voice.save("response_voice.mp3")
    playsound.playsound("response_voice.mp3")

The code uses gTTS to convert the response text into speech, saves the audio locally, and then plays it with playsound. This initial prototype relies on gTTS, but other options offer higher-quality voice synthesis, such as Amazon Polly for more realistic text-to-speech.

Image tools

Much of our interaction with the world is shaped by our visual perception. Simple tasks like recognizing objects and identifying colors come effortlessly to us. However, when faced with a scenario devoid of visual input, even tasks as basic as selecting color-coordinated attire can pose a challenge.

In light of this, my intention is to build a language agent enhanced by a suite of image-related tools. To describe the tools available to the Large Language Model (LLM), we use LangChain tools:

    from collections import Counter

    import torch
    from langchain.tools import tool
    from PIL import Image
    from torchvision import transforms
    from torchvision.models.detection import fasterrcnn_resnet50_fpn

    # List of tools that will be handed to the agent
    tools = []

    @tool
    def object_detection(image_path: str) -> str:
        """Use this tool to detect objects.
        It will return the detected objects and their quantities."""
        # TODO: find a way to search for the latest image
        threshold = 0.5
        # Newer torchvision releases prefer `weights=` over `pretrained=True`
        model = fasterrcnn_resnet50_fpn(pretrained=True)
        model.eval()
        # Load and preprocess the input image
        image = Image.open(image_path).convert("RGB")
        transform = transforms.Compose([transforms.ToTensor()])
        image_tensor = transform(image).unsqueeze(0)
        # Make predictions
        with torch.no_grad():
            predictions = model(image_tensor)
        # Filter predictions based on confidence threshold
        labels = predictions[0]['labels'][predictions[0]['scores'] > threshold]
        # COCO_INSTANCE_CATEGORY_NAMES (defined elsewhere) maps label ids to names
        labels = [COCO_INSTANCE_CATEGORY_NAMES[label] for label in labels.tolist()]
        labels = Counter(labels)
        # Generate the formatted string
        result = ', '.join(
            f"{count} {label}s" if count > 1 else f"{count} {label}"
            for label, count in labels.items())
        return result

    tools.append(object_detection)

As described, the tool accepts an image path as input and returns the detected objects along with their counts. It uses the pretrained fasterrcnn_resnet50_fpn model, which predicts bounding boxes, class labels, and a confidence score for each detection. We keep only the labels whose score exceeds a predefined threshold (0.5 here).

Note that the model outputs integer labels, which are not meaningful to users. To address this, we use a dictionary named COCO_INSTANCE_CATEGORY_NAMES to translate these integers into human-readable label names, such as “bicycle,” “bus,” and so forth.
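The post-processing described here (threshold filtering, label lookup, and counting) can be isolated from the model itself. The sketch below is illustrative only: `summarize_detections` is a hypothetical helper, and the small `names` mapping in the usage note is an assumed three-entry subset standing in for COCO_INSTANCE_CATEGORY_NAMES.

```python
from collections import Counter
from typing import Dict, List


def summarize_detections(
    label_ids: List[int],
    scores: List[float],
    names: Dict[int, str],
    threshold: float = 0.5,
) -> str:
    """Turn raw detector output into a short spoken-style summary."""
    # Keep only detections whose confidence exceeds the threshold,
    # translating integer ids into readable names as we go.
    kept = [names[i] for i, s in zip(label_ids, scores) if s > threshold]
    counts = Counter(kept)
    # Naive pluralization: append "s" when an object appears more than once.
    return ", ".join(
        f"{n} {name}s" if n > 1 else f"{n} {name}"
        for name, n in counts.items()
    )
```

For instance, with `names = {1: "person", 3: "car", 18: "dog"}`, ids `[1, 18, 1, 3]`, and scores `[0.9, 0.8, 0.7, 0.3]`, the low-confidence car is dropped and the summary mentions two persons and one dog.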

Our next tool is designed for color recognition.

    import cv2
    from sklearn.cluster import KMeans
    from webcolors import CSS3_NAMES_TO_HEX, hex_to_rgb, rgb_to_name

    @tool
    def cluster_colors(image_path: str) -> str:
        """Use this tool to find three main colors in the image"""
        image = cv2.imread(image_path)
        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)  # Convert to RGB format
        # Reshape the image to a 2D array of pixels
        pixels = image.reshape((-1, 3))
        # Use K-means clustering to find dominant colors
        kmeans = KMeans(n_clusters=3)
        kmeans.fit(pixels)
        # Get the RGB values of the cluster centers
        dominant_colors = kmeans.cluster_centers_.astype(int)
        # Convert RGB values to color names
        color_names = []
        for color in dominant_colors:
            try:
                color_name = rgb_to_name((int(color[0]), int(color[1]), int(color[2])))
            except ValueError:
                # If the color name is not found, find the closest CSS3 color name
                # (find_closest_color_name is a helper whose details are omitted here)
                color_name = find_closest_color_name(color)
            color_names.append(color_name)
        # Join the color names into a string, separated by commas
        color_names_string = ', '.join(color_names)
        return color_names_string

    tools.append(cluster_colors)

As outlined in the description, this tool accepts the image path as input and extracts the three predominant colors in the image. It does so by applying K-means clustering to the pixels’ RGB values, grouping them into three clusters. The center of each cluster is then mapped to a color name with the help of the webcolors library.
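To make the clustering step more concrete, here is a dependency-free sketch of the basic K-means loop (assign each pixel to its nearest center, then move each center to the mean of its pixels). It is not scikit-learn's implementation, which uses a smarter initialization among other refinements; `kmeans_colors` and its evenly spaced starting centers are assumptions made for illustration.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]


def kmeans_colors(pixels: List[Pixel], k: int, iterations: int = 10) -> List[Pixel]:
    """Return k cluster centers found by plain K-means iterations."""
    # Deterministic start: take k pixels spread evenly through the list.
    step = max(1, len(pixels) // k)
    centers = [pixels[min(i * step, len(pixels) - 1)] for i in range(k)]
    for _ in range(iterations):
        # Assignment step: attach each pixel to its nearest center.
        groups: List[List[Pixel]] = [[] for _ in range(k)]
        for p in pixels:
            distances = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centers]
            groups[distances.index(min(distances))].append(p)
        # Update step: move each center to the mean of its group;
        # a center with no pixels stays where it is.
        centers = [
            tuple(sum(channel) / len(g) for channel in zip(*g)) if g else c
            for g, c in zip(groups, centers)
        ]
    return centers
```

Given two reddish and two bluish pixels and `k=2`, the centers settle on the average red and the average blue, which is the same role `kmeans.cluster_centers_` plays in the tool above.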

Note that when an RGB value does not correspond exactly to a name in the webcolors dictionary, the tool falls back to the nearest matching color name. The details are omitted here, but this fallback means the tool always returns a name, even if only an approximate one.
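Since the fallback helper is not shown, the following sketch illustrates one plausible way to write it: pick the named color with the smallest squared distance in RGB space. This is an assumption about how `find_closest_color_name` might work, not the author's implementation, and `PALETTE` is a hypothetical six-color table standing in for the much larger CSS3 name table.

```python
from typing import Dict, Tuple

RGB = Tuple[int, int, int]

# Hypothetical mini-palette for illustration only.
PALETTE: Dict[str, RGB] = {
    "black": (0, 0, 0),
    "white": (255, 255, 255),
    "red": (255, 0, 0),
    "green": (0, 128, 0),
    "blue": (0, 0, 255),
    "yellow": (255, 255, 0),
}


def find_closest_color_name(color: RGB, palette: Dict[str, RGB] = PALETTE) -> str:
    """Return the palette name whose RGB value is nearest to `color`."""

    def squared_distance(name: str) -> int:
        # Squared Euclidean distance between the two RGB triples.
        return sum((a - b) ** 2 for a, b in zip(color, palette[name]))

    return min(palette, key=squared_distance)
```

Plain RGB distance does not always match how people perceive color differences, so a perceptual color space may give more natural names; RGB is used here only to keep the sketch short.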

LangChain Agent

We are now ready to construct an agent using the standard initialize_agent API provided by LangChain:

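    from langchain.agents import initialize_agent, AgentType
    from langchain.chat_models import ChatOpenAI
    from langchain.memory import ConversationBufferMemory

    # Assumed setup: the original snippet does not show how `llm` and
    # `conversational_memory` are created
    llm = ChatOpenAI(temperature=0)
    conversational_memory = ConversationBufferMemory(
        memory_key="chat_history", return_messages=True)

    agent = initialize_agent(
        tools,
        llm,
        agent=AgentType.CHAT_CONVERSATIONAL_REACT_DESCRIPTION,
        memory=conversational_memory,
        verbose=True
    )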

The code creates a conversational agent that can call the image-related tools defined earlier and that keeps the dialogue history in memory.

Vision Assistant

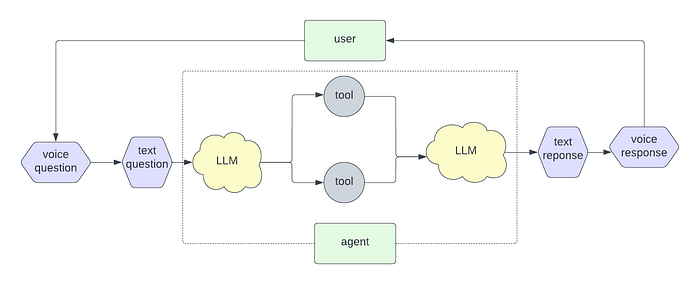

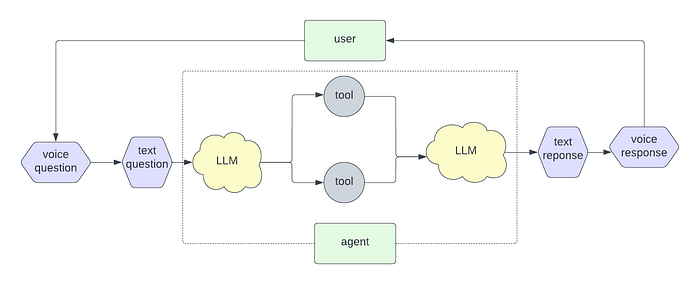

With all the necessary components in place, we are ready to create our voice-to-voice vision assistant. The model’s architecture is illustrated in the accompanying chart.
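The way the pieces fit together can be sketched as a simple loop: listen, let the agent answer, speak the answer, repeat. This is a minimal outline under stated assumptions rather than the actual implementation; `run_assistant`, `listen`, `answer`, `speak`, `stop_word`, and `max_turns` are hypothetical names, and the three steps are injected as functions so the loop can be tried without audio hardware or an API key.

```python
from typing import Callable, List, Optional


def run_assistant(
    listen: Callable[[], Optional[str]],
    answer: Callable[[str], str],
    speak: Callable[[str], None],
    stop_word: str = "stop",
    max_turns: int = 10,
) -> List[str]:
    """Run the listen -> answer -> speak loop and return the responses given."""
    responses: List[str] = []
    for _ in range(max_turns):
        user_input = listen()
        # End the session on silence or when the user says the stop word.
        if user_input is None or user_input.strip().lower() == stop_word:
            break
        response = answer(user_input)
        speak(response)
        responses.append(response)
    return responses
```

In the prototype, `listen` would presumably wrap the speech recognition step, `answer` the call to the agent, and `speak` the gTTS and playsound step described earlier.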

For a visual demonstration of how it operates, you can refer to the video I’ve created:

Embarking on Inclusive AI

In the preceding sections, I developed a vision assistant powered by an LLM. This technology has the potential to benefit visually impaired individuals by assisting them with tasks such as object detection and color recognition. As the system evolves, it may take on additional vision capabilities to further enhance its usefulness.

Nevertheless, it’s crucial to acknowledge that this remains a very early-stage prototype. My motivation for undertaking this initiative is to draw attention from the community toward the prospect of leveraging AI to aid marginalized groups within society, particularly those with visual impairments. I hold a modest hope that anyone sharing an interest in this subject might reach out, and together, we could potentially pave the way for a more comprehensive and mature project in the future.

The full implementation is available on my GitHub repository.
