FuncReAct: ReAct Agent Using OpenAI Function Calling

Last Updated on November 6, 2023 by Editorial Team

Author(s): Vatsal Saglani

Originally published on Towards AI.

If you still don’t know what prompting is, you are probably living under a rock or just woke up from a coma. Prompting, in the context of LLMs and Generative AI, refers to crafting the instructions you give a model so that it produces the desired output. A good prompt can generate great results, whereas a bad prompt can spoil the experience.

The responses from an LLM or any other Generative AI model are only as good as the prompts (commands) provided to it. The output depends on how well the prompt is crafted and whether everything is explained clearly and concisely.

It is similar to training a model: good data in (clean training data) produces good predictions out. If the training data is poor, the predictions will be poor too: junk in, junk out. Similarly, better prompts bring better outputs.

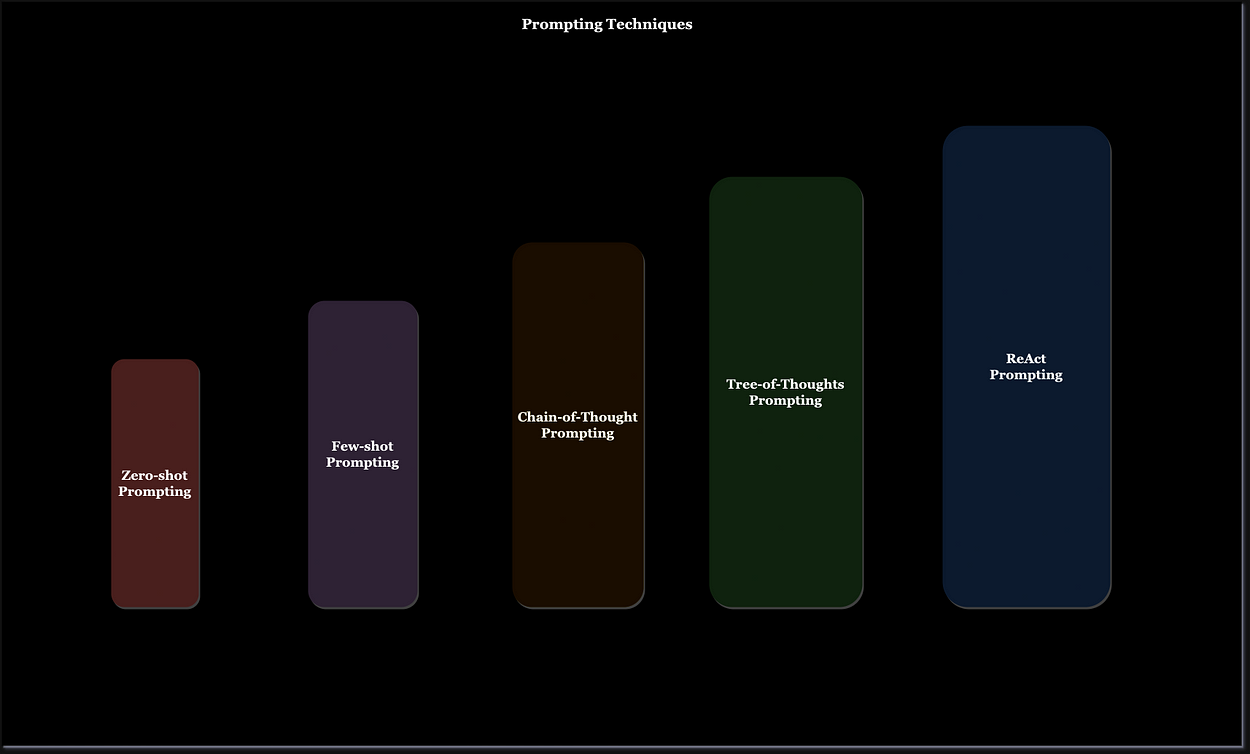

Since the launch of ChatGPT, there has been a lot of buzz around the term “prompt engineering”. In this blog, we will look at different prompt engineering techniques and learn the basics of function calling with the OpenAI API.

Before you dive into this blog, I would like to mention that the content is divided into four parts, so there are four blogs. This keeps each one under 10–11 minutes of reading time. We will cover a lot throughout this series, so it’s better to take breaks between the parts. Please be patient and go through all the parts; I am sure you will learn a lot.

- Part — 1: Understanding Prompt Engineering Techniques (this blog)

- Part — 2: Setting up the base for the ReAct RAG bot

- Part — 3: Individual ReAct RAG bot components

- Part — 4: Completing the ReAct RAG Bot

Just to intrigue you, I’ve added a working demo of the ReAct RAG agent we will be building during these 4 parts.

If you cannot wait for the next blogs, you can go through the FuncReAct repository to get an initial introduction to it.

Prompt engineering

Prompt engineering is a relatively new field, and new techniques for getting better outputs from LLMs are still being discovered. Prompt engineering not only helps generate better outputs but also lets us give the LLM extra tooling.

Now one might ask: what extra tooling? Most LLMs, whether accessed through an API or trained and hosted yourself, only know about the world up to a certain point in time, which can make them less useful for some real-world problems. With prompt engineering, however, we can give the model the ability to look things up via a search engine.

Also, a model might not be very good at a particular task out of the box. In that case, we can provide it with examples showing what to do in which situation and what the output should look like.

Let’s look at different prompting techniques.

Zero-shot prompting

Models from the GPT family (GPT-3 onwards) and other LLMs such as Llama-2 and Falcon are trained on large amounts of data and tuned to follow instructions. This gives them a “zero-shot” capability: they can perform a task from an instruction alone, without being shown any examples.

For example, we can ask GPT-3.5-turbo, GPT-4, or even ChatGPT to classify the sentiment of a piece of text without giving it any examples.
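Without function calling, a zero-shot request is just an instruction plus the text, and the model answers in free text that we then have to parse ourselves. A minimal sketch of both halves, assuming the reply mentions one of the three label words; `build_zero_shot_messages` and `parse_sentiment` are hypothetical helpers, not part of any library. The returned list is what you would pass as `messages` to a chat completion call.

```python
import re
from typing import Dict, List, Optional

LABELS = ("POSITIVE", "NEGATIVE", "NEUTRAL")


def build_zero_shot_messages(text: str) -> List[Dict[str, str]]:
    """Zero-shot: an instruction and the input, with no worked examples."""
    instruction = (
        "Classify the sentiment of the sentence in single backticks "
        "as one of: POSITIVE, NEGATIVE, NEUTRAL. Reply with the label only."
    )
    return [
        {"role": "system", "content": instruction},
        {"role": "user", "content": f"Text: `{text}`"},
    ]


def parse_sentiment(reply: str) -> Optional[str]:
    """Pull the first known label out of a free-text reply (case-insensitive)."""
    match = re.search(r"\b(" + "|".join(LABELS) + r")\b", reply.upper())
    return match.group(1) if match else None


print(parse_sentiment("Sentiment: Positive."))  # POSITIVE
```

The fragile parsing step is what function calling removes: instead of free text, the model returns structured arguments that match a schema we define.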

Alternatively, we can use function calling to achieve the same result. We don’t strictly need it for this task, but let’s try it anyway.

Function calling

Let’s start with a brief introduction to the function calling feature of the OpenAI API.

Suppose we have a use case with multiple functions, each taking different arguments. Function calling decides which function to call and what arguments to pass to it, based on the combination of the system prompt, the user message, and each function’s name, description, and parameters. The model does not run the function itself; it only returns the function name and its arguments as a JSON string, and our code does the rest.
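Since the model only names a function and supplies its arguments, our code has to look up and run the matching Python function. A minimal sketch of that dispatch step, assuming an assistant message shaped like the legacy (pre-1.0 SDK) Chat Completions response, i.e. a `function_call` dict with `name` and `arguments` keys; `search_web`, `add_numbers`, `REGISTRY`, and `dispatch` are hypothetical names used for illustration.

```python
import json
from typing import Any, Callable, Dict


def search_web(query: str, top_k: int = 3) -> str:
    # Hypothetical tool; a real one would call a search engine API
    return f"top {top_k} results for {query!r}"


def add_numbers(a: float, b: float) -> float:
    # Hypothetical tool with a different argument signature
    return a + b


# Maps the function names advertised to the model onto local Python callables
REGISTRY: Dict[str, Callable[..., Any]] = {
    "search_web": search_web,
    "add_numbers": add_numbers,
}


def dispatch(message: Dict[str, Any]) -> Any:
    """Run the function the model chose, with the arguments it generated."""
    call = message.get("function_call")
    if call is None:
        raise ValueError("model replied with text, not a function call")
    func = REGISTRY[call["name"]]
    kwargs = json.loads(call["arguments"])  # arguments arrive as a JSON string
    return func(**kwargs)


# An assistant message in the shape the legacy API returns for a function call
example_message = {
    "role": "assistant",
    "content": None,
    "function_call": {"name": "add_numbers", "arguments": '{"a": 2, "b": 3}'},
}
print(dispatch(example_message))  # 5
```

In the sentiment examples below we never execute a function at all; we only read the structured arguments, which is why the `FunctionCall` class returns the name and arguments instead of dispatching.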

```python
import json
from typing import List, Dict, Union

# Legacy interface: openai.ChatCompletion only exists in the openai<1.0 SDK
import openai


class FunctionCall:
    def __init__(self, api_key: str):
        # The legacy SDK reads the API key from a module-level setting
        openai.api_key = api_key

    def __call__(
        self,
        message: str,
        system_prompt: str,
        functions: List[Dict],
        model: str,
        function_call: Union[Dict, None] = None,
        cnt: int = 0,
    ):
        try:
            messages = [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": message},
            ]
            response = openai.ChatCompletion.create(
                model=model,
                messages=messages,
                functions=functions,
                # Force a specific function if given, otherwise let the model decide
                function_call=function_call if function_call else "auto",
            )
            # If the model answered with plain text, this is None and the
            # next line raises AttributeError
            function_call = response.choices[0].get("message").get("function_call")
            function_name = function_call.get("name")
            # The arguments come back as a JSON-encoded string
            function_args = json.loads(function_call.get("arguments"))
            return function_name, function_args

        except AttributeError:
            cnt += 1
            if cnt > 2:
                raise Exception("Error getting function name and arguments!")
            # Retry with a stronger instruction; pass cnt so retries are bounded
            return self.__call__(
                message,
                f"{system_prompt}.\nCALL A FUNCTION!.",
                functions,
                model,
                cnt=cnt,
            )
```

Let’s look at this code line by line.

```python
import json
import openai
from typing import List, Dict, Union
```

Here, we import openai along with json and the type hints from typing.

```python
class FunctionCall:
    def __init__(self, api_key: str):
        openai.api_key = api_key
```

The FunctionCall class is defined, which takes the OpenAI API key as the argument.

```python
def __call__(
    self,
    message: str,
    system_prompt: str,
    functions: List[Dict],
    model: str,
    function_call: Union[Dict, None] = None,
    cnt: int = 0,
):
```

The __call__ method takes the user message (message), the system_prompt, the list of function definitions (functions), the model name (model), and function_call (the function to force the model to call, if any) as arguments. The cnt argument counts retries.

```python
try:
    messages = [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": message},
    ]
    response = openai.ChatCompletion.create(
        model=model,
        messages=messages,
        functions=functions,
        function_call=function_call if function_call else "auto",
    )
    function_call = response.choices[0].get("message").get("function_call")
    function_name = function_call.get("name")
    function_args = json.loads(function_call.get("arguments"))
    return function_name, function_args
```

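In the try block, we send the system prompt, the user message, the function definitions, and the function_call setting to the openai.ChatCompletion.create method. From the first choice in the response we read the function_call, take the function name, and parse the arguments (which the API returns as a JSON string) with json.loads.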

```python
except AttributeError:
    cnt += 1
    if cnt > 2:
        raise Exception("Error getting function name and arguments!")
    # Pass cnt along so the number of retries is bounded
    return self.__call__(
        message,
        f"{system_prompt}.\nCALL A FUNCTION!.",
        functions,
        model,
        cnt=cnt,
    )
```

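If function_call is set to “auto” and the prompt isn’t clear or doesn’t cover some edge cases, the model might reply with a regular chat completion instead of calling a function. In that case the message has no function_call, so calling .get("name") on None raises an AttributeError. We catch it and retry with “CALL A FUNCTION!” appended to the system prompt, raising an exception once the retry counter cnt exceeds 2.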
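The same retry idea can be written as a loop with an explicit attempt limit, which is easier to reason about than recursion and easy to test with a fake client. A sketch under the assumption that `create` is any callable that takes the messages list and returns a message dict in the legacy shape; `call_with_retry`, `NUDGE`, and `max_attempts` are illustrative names, not part of the openai SDK. Three attempts mirrors the `cnt > 2` check, but unlike the recursive version the nudge is appended only once.

```python
import json
from typing import Any, Callable, Dict, List, Tuple

NUDGE = "\nCALL A FUNCTION!"


def call_with_retry(
    create: Callable[[List[Dict[str, str]]], Dict[str, Any]],
    system_prompt: str,
    message: str,
    max_attempts: int = 3,
) -> Tuple[str, Dict[str, Any]]:
    """Ask for a function call, nudging the model if it replies with plain text."""
    prompt = system_prompt
    for _ in range(max_attempts):
        reply = create(
            [
                {"role": "system", "content": prompt},
                {"role": "user", "content": message},
            ]
        )
        call = reply.get("function_call")
        if call is not None:
            return call["name"], json.loads(call["arguments"])
        # Plain-text answer: strengthen the instruction and try again
        prompt = system_prompt + NUDGE
    raise RuntimeError(f"no function call after {max_attempts} attempts")
```

In real use, `create` would wrap the chat completion call and return `response.choices[0]["message"]`.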

Now that we have the function call class ready, let’s write the function and its details like name, description, and schema.

To avoid writing function schemas by hand, we can use pydantic, a data validation library for Python. We define the function arguments and their types as a pydantic model, and pydantic generates the JSON schema for us.
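To see what pydantic is automating, here is a toy, stdlib-only sketch that turns a class’s type hints into a JSON-schema `parameters` object. It is an illustration, not pydantic’s algorithm: it handles only `bool`, `str`, `int`, `float`, and `Enum` fields, and real pydantic output also adds `title` keys and may move enum definitions into a separate section referenced via `$ref`. The name `to_parameters_schema` is hypothetical.

```python
from enum import Enum
from typing import Any, Dict, get_type_hints

_PRIMITIVES = {bool: "boolean", str: "string", int: "integer", float: "number"}


def to_parameters_schema(cls: type) -> Dict[str, Any]:
    """Build a JSON-schema object from a class's annotated fields."""
    properties: Dict[str, Any] = {}
    for field, hint in get_type_hints(cls).items():
        if isinstance(hint, type) and issubclass(hint, Enum):
            # Enum fields become a string restricted to the enum's values
            properties[field] = {"type": "string", "enum": [m.value for m in hint]}
        elif hint in _PRIMITIVES:
            properties[field] = {"type": _PRIMITIVES[hint]}
        else:
            raise TypeError(f"unsupported type for {field}: {hint!r}")
    # Every field is treated as required, like pydantic fields without defaults
    return {"type": "object", "properties": properties, "required": list(properties)}


class Sentiments(Enum):
    positive = "POSITIVE"
    negative = "NEGATIVE"
    neutral = "NEUTRAL"


class Sentiment:
    category: Sentiments


print(to_parameters_schema(Sentiment))
```

Keeping one Python class as the single source of truth means the schema the model sees can never drift from the types the code expects, which is the main reason to let pydantic generate it.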

Let’s look at pydantic along with the function call.

```python
from enum import Enum
import json

from pydantic import BaseModel


class Sentiments(Enum):
    positive = "POSITIVE"
    negative = "NEGATIVE"
    neutral = "NEUTRAL"


class Sentiment(BaseModel):
    category: Sentiments


SYSTEM_PROMPT = "Given a sentence in single backticks you have to classify the sentiment of the sentence into one of the following categories, POSITIVE, NEGATIVE, NEUTRAL"

# .schema() is the pydantic v1 name; pydantic v2 still accepts it but prefers model_json_schema()
print(Sentiment.schema())

fc = FunctionCall("YOUR_OPENAI_API_KEY")
function_name, function_argument = fc(
    "Text: `The movie was great!`",
    SYSTEM_PROMPT,
    [
        {
            "name": "sentimentClassified",
            "description": "Print the category of the sentiment of the given text.",
            "parameters": Sentiment.schema(),
        }
    ],
    "gpt-3.5-turbo",
    {"name": "sentimentClassified"},  # force the model to call this function
)

print(f"FUNCTION NAME: {function_name}")
print(f"FUNCTION ARGUMENT: {json.dumps(function_argument, indent=4)}")
```

Let’s break this down step by step.

```python
from enum import Enum
import json
from pydantic import BaseModel
```

We import Enum from enum to use it to define our categories. Then we have the regular json import. The BaseModel class imported from pydantic will help us with defining the arguments of a function along with the type of each argument.

```python
class Sentiments(Enum):
    positive = "POSITIVE"
    negative = "NEGATIVE"
    neutral = "NEUTRAL"
```

We define the Sentiments enum, which has three categories.

```python
class Sentiment(BaseModel):
    category: Sentiments
```

This Sentiment model has a single field, category, which can take any one of the three values defined in the Sentiments enum.

```python
SYSTEM_PROMPT = "Given a sentence in single backticks you have to classify the sentiment of the sentence into one of the following categories, POSITIVE, NEGATIVE, NEUTRAL"
fc = FunctionCall("YOUR_OPENAI_API_KEY")
```

We initialize our system prompt to classify a text and then initialize our FunctionCall class with the OpenAI API key.

```python
function_name, function_argument = fc(
    "Text: `The movie was great!`",
    SYSTEM_PROMPT,
    [
        {
            "name": "sentimentClassified",
            "description": "Print the category of the sentiment of the given text.",
            "parameters": Sentiment.schema(),
        }
    ],
    "gpt-3.5-turbo",
    {"name": "sentimentClassified"},
)

print(f"FUNCTION NAME: {function_name}")
print(f"FUNCTION ARGUMENT: {json.dumps(function_argument, indent=4)}")
```

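To the initialized FunctionCall instance, we pass the text, the system prompt, the list of functions (here, just one), the model name, and the function we want the model to call. Passing {"name": "sentimentClassified"} forces the model to call that function instead of replying with text.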

The name of the function and the arguments are printed to the terminal. The output will look like the following.

```
FUNCTION NAME: sentimentClassified
FUNCTION ARGUMENT: {
    "category": "POSITIVE"
}
```
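The arguments come back as plain JSON, so `category` is the string `"POSITIVE"`, not a `Sentiments` member. A small sketch of mapping it back with a by-value enum lookup, assuming the `Sentiments` enum from above (redefined here so the snippet runs on its own); `to_sentiment` is a hypothetical helper. With pydantic installed, `Sentiment(**function_argument)` performs the same conversion as part of validation.

```python
from enum import Enum
from typing import Any, Dict


class Sentiments(Enum):
    positive = "POSITIVE"
    negative = "NEGATIVE"
    neutral = "NEUTRAL"


def to_sentiment(function_argument: Dict[str, Any]) -> Sentiments:
    # Enum lookup by value; raises ValueError for anything outside the three labels
    return Sentiments(function_argument["category"])


label = to_sentiment({"category": "POSITIVE"})
print(label)  # Sentiments.positive
```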

Few-shot prompting

In few-shot prompting, we provide the model with a few examples. Let’s take the example of multi-label sentiment classification.

In multi-label classification, an item (here, a piece of text) can have one or more labels. The LLM may not have been trained on the exact labels we want to use, so we can provide one or two examples in the prompt that cover all the labels. This helps the LLM stick to the classes we provide and also improves its predictions.
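Rather than hand-typing every example, the few-shot section of a prompt can be generated from a list of labeled examples, which keeps the label names in the prompt in sync with the function schema. A minimal sketch; `build_few_shot_prompt`, the `LABELS` list, and the `(text, labels)` example format are assumptions for illustration, and the output follows the same `Example N / Text / Labels` layout as the prompt in this section.

```python
from typing import Dict, List, Tuple

LABELS = ["is_positive", "is_exciting", "is_negative", "is_angry", "is_happy", "is_sad"]


def build_few_shot_prompt(
    instruction: str, examples: List[Tuple[str, Dict[str, bool]]]
) -> str:
    """Append numbered, fully labeled examples after the task instruction."""
    lines = [instruction, ""]
    for i, (text, labels) in enumerate(examples, start=1):
        lines.append(f"Example {i}")
        lines.append(f"Text: `{text}`")
        lines.append("Labels:")
        # List every label explicitly so the model also sees the False cases
        for name in LABELS:
            lines.append(f"{name}: {labels.get(name, False)}")
        lines.append("")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentence in single backticks into multiple sentiment labels.",
    [("I absolutely love this new update!",
      {"is_positive": True, "is_exciting": True, "is_happy": True})],
)
print(prompt)
```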

For our use case, we will classify a given text into the following categories:

- Positive

- Happy

- Negative

- Sad

- Excited

- Angry

We can use the following prompt with examples as the system prompt.

```python
SYSTEM_PROMPT = """Given a sentence in single backticks you have to classify the sentiment of the sentence into multiple labels. It can be positive, exciting, negative, angry, happy, and sad.
A sentence can have multiple labels. It is a multi-label classification problem.

Example 1
Text: `I absolutely love this new update!`
Labels:
is_positive: True
is_exciting: True
is_negative: False
is_angry: False
is_happy: True
is_sad: False

Example 2
Text: `The cancellation of the event is incredibly disappointing`
Labels:
is_positive: False
is_exciting: False
is_negative: True
is_angry: True
is_happy: False
is_sad: True
"""
```

Now that we have the prompt, let’s look at the function, its arguments, and the function call.

```python
import json

from pydantic import BaseModel


class Sentiment(BaseModel):
    is_positive: bool
    is_exciting: bool
    is_negative: bool
    is_angry: bool
    is_happy: bool
    is_sad: bool


SYSTEM_PROMPT = """Given a sentence in single backticks you have to classify the sentiment of the sentence into multiple labels. It can be positive, exciting, negative, angry, happy, and sad.
A sentence can have multiple labels. It is a multi-label classification problem.

Example 1
Text: `I absolutely love this new update!`
Labels:
is_positive: True
is_exciting: True
is_negative: False
is_angry: False
is_happy: True
is_sad: False

Example 2
Text: `The cancellation of the event is incredibly disappointing`
Labels:
is_positive: False
is_exciting: False
is_negative: True
is_angry: True
is_happy: False
is_sad: True
"""

fc = FunctionCall("YOUR_OPENAI_API_KEY")
function_name, function_argument = fc(
    "Text: `I can't believe she said that to me; it's infuriating!`",
    SYSTEM_PROMPT,
    [
        {
            "name": "sentimentClassified",
            "description": "Print the categories of the sentiments of the given text.",
            "parameters": Sentiment.schema(),
        }
    ],
    "gpt-3.5-turbo",
    {"name": "sentimentClassified"},
)

print(f"FUNCTION NAME: {function_name}")
print(f"FUNCTION ARGUMENT: {json.dumps(function_argument, indent=4)}")
```

Again, let’s break down the above code line by line.

```python
class Sentiment(BaseModel):
    is_positive: bool
    is_exciting: bool
    is_negative: bool
    is_angry: bool
    is_happy: bool
    is_sad: bool
```

Here, we have defined our function arguments to have boolean values for positive, negative, exciting, angry, happy, or sad. The model has to predict which sentiments the text is conveying and mark those as true.
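Downstream code usually wants the list of labels that fired rather than six separate booleans. A one-function sketch, assuming `function_argument` is the parsed dict returned by `FunctionCall`; `active_labels` is a hypothetical helper.

```python
from typing import Dict, List


def active_labels(function_argument: Dict[str, bool]) -> List[str]:
    # Keep the keys set to True and drop the "is_" prefix for readability
    return [
        name[3:] if name.startswith("is_") else name
        for name, flag in function_argument.items()
        if flag
    ]


print(active_labels({"is_positive": False, "is_negative": True, "is_angry": True}))
# ['negative', 'angry']
```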

```python
fc = FunctionCall("YOUR_OPENAI_API_KEY")
function_name, function_argument = fc(
    "Text: `I can't believe she said that to me; it's infuriating!`",
    SYSTEM_PROMPT,
    [
        {
            "name": "sentimentClassified",
            "description": "Print the categories of the sentiments of the given text.",
            "parameters": Sentiment.schema(),
        }
    ],
    "gpt-3.5-turbo",
    {"name": "sentimentClassified"},
)

print(f"FUNCTION NAME: {function_name}")
print(f"FUNCTION ARGUMENT: {json.dumps(function_argument, indent=4)}")
```

The FunctionCall class is initialized, and the required arguments are passed to the instance to get the output.

Let’s look at the output for the sentence we provided above.

```
FUNCTION NAME: sentimentClassified
FUNCTION ARGUMENT: {
    "is_positive": false,
    "is_exciting": false,
    "is_negative": true,
    "is_angry": true,
    "is_happy": false,
    "is_sad": false
}
```

The sentence we provided has a negative connotation and the speaker sounds angry, so the model sets is_negative and is_angry to true.

We can try another sentence: “Text: `The surprise party made me jump with joy!`”

The output will be like the following.

```
FUNCTION NAME: sentimentClassified
FUNCTION ARGUMENT: {
    "is_positive": true,
    "is_exciting": true,
    "is_negative": false,
    "is_angry": false,
    "is_happy": true,
    "is_sad": false
}
```

The model picks up the positive connotation of the text, along with excitement (is_exciting) and happiness (is_happy).

Let’s look at a more advanced example where function calling shines.

Few-shot advanced

Along with the labels, we also want to know which part of the sentence is bringing out the classified sentiment. In that case, we need to tweak the prompt with examples and the function argument model.

First, let’s update the Sentiment arguments. We introduce a new model, SentimentArgs, which provides the schema for an individual sentiment. Each sentiment has two keys: status, a boolean indicating whether that sentiment is present, and from_part, a string holding the word or combination of words that conveys it (empty when the sentiment is absent).

```python
class SentimentArgs(BaseModel):
    status: bool
    from_part: str


class Sentiment(BaseModel):
    is_positive: SentimentArgs
    is_exciting: SentimentArgs
    is_negative: SentimentArgs
    is_angry: SentimentArgs
    is_happy: SentimentArgs
    is_sad: SentimentArgs
```

Let’s tweak our prompt and provide examples.

```python
SYSTEM_PROMPT = """Given a sentence in single backticks you have to classify the sentiment of the sentence into multiple labels. It can be positive, exciting, negative, angry, happy, and sad.
A sentence can have multiple labels. It is a multi-label classification problem. Along with the label the word or the combination of words that depicts that emotion should be extracted.

Example 1
Text: `I absolutely love this new update!`
Labels:
is_positive: {status: True, from_part: "love"}
is_exciting: {status: True, from_part: "absolutely love"}
is_negative: {status: False, from_part: ""}
is_angry: {status: False, from_part: ""}
is_happy: {status: True, from_part: "love"}
is_sad: {status: False, from_part: ""}

Example 2
Text: `The cancellation of the event is incredibly disappointing`
Labels:
is_positive: {status: False, from_part: ""}
is_exciting: {status: False, from_part: ""}
is_negative: {status: True, from_part: "disappointing"}
is_angry: {status: True, from_part: "disappointing"}
is_happy: {status: False, from_part: ""}
is_sad: {status: True, from_part: "disappointing"}
"""
```

Initializing FunctionCall and calling the instance with the text remain the same as before; only the Sentiment model and SYSTEM_PROMPT change.

Let’s see the output for different sentences.

message: "`Text: Rainy days always make me feel a bit blue.`"

```
FUNCTION NAME: sentimentClassified
FUNCTION ARGUMENT: {
    "is_positive": {
        "status": false,
        "from_part": ""
    },
    "is_exciting": {
        "status": false,
        "from_part": ""
    },
    "is_negative": {
        "status": true,
        "from_part": "a bit blue"
    },
    "is_angry": {
        "status": false,
        "from_part": ""
    },
    "is_happy": {
        "status": false,
        "from_part": ""
    },
    "is_sad": {
        "status": true,
        "from_part": "a bit blue"
    }
}
```

message: "`Text: I can't believe she said that to me; it's infuriating!`"

```
FUNCTION NAME: sentimentClassified
FUNCTION ARGUMENT: {
    "is_positive": {
        "status": false,
        "from_part": ""
    },
    "is_exciting": {
        "status": false,
        "from_part": ""
    },
    "is_negative": {
        "status": true,
        "from_part": "infuriating"
    },
    "is_angry": {
        "status": true,
        "from_part": "infuriating"
    },
    "is_happy": {
        "status": false,
        "from_part": ""
    },
    "is_sad": {
        "status": false,
        "from_part": ""
    }
}
```

For both texts, the model has identified the labels and also extracted the word or group of words that conveys each detected sentiment.
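Because `from_part` is free text generated by the model, it is worth checking that it agrees with `status` and actually occurs in the input before trusting it. A sketch of such a check, assuming the nested arguments shape shown in the outputs above; `check_spans` is a hypothetical helper, and the case-insensitive substring test is a deliberately simple heuristic.

```python
from typing import Any, Dict, List


def check_spans(text: str, function_argument: Dict[str, Dict[str, Any]]) -> List[str]:
    """Return human-readable problems; an empty list means every check passed."""
    problems = []
    lowered = text.lower()
    for label, value in function_argument.items():
        status, span = value["status"], value["from_part"]
        if status and not span:
            problems.append(f"{label}: status is true but from_part is empty")
        elif status and span.lower() not in lowered:
            problems.append(f"{label}: {span!r} does not occur in the text")
        elif not status and span:
            problems.append(f"{label}: status is false but from_part is {span!r}")
    return problems


rainy = {
    "is_negative": {"status": True, "from_part": "a bit blue"},
    "is_happy": {"status": False, "from_part": ""},
}
print(check_spans("Rainy days always make me feel a bit blue.", rainy))  # []
```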

Just like zero-shot and few-shot prompting techniques, there are other techniques like chain-of-thought, tree-of-thought, etc. You can check those out in the Prompt Engineering Guide.

In the next part, we will look at ReAct prompting, which is the backbone of many agents out there.

Conclusion

In this article, we had a brief introduction to prompt engineering and covered two basic techniques: zero-shot and few-shot prompting. We were also introduced to function calling and worked through several hands-on examples combining it with these prompting techniques. In the next part, we will learn about the ReAct prompting technique and implement it using function calling.

If you cannot wait for the next blog, you can go through the FuncReAct repository to get an initial introduction to it.

Other Parts

- Part 1 — Understanding Prompt Engineering Techniques

- Part 2 — Setting up the base for the ReAct RAG bot

- Part 3 — Individual ReAct RAG bot components

- The Finale (Part 4) — Completing the ReAct RAG Bot


Published via Towards AI