Detailed Guide of How To Set up MLflow on GCP in a Secure Way

Last Updated on July 24, 2024 by Editorial Team

Author(s): Yuki Shizuya

Originally published on Towards AI.

Introduction

I recently needed to set up MLflow, a popular open-source MLOps platform, for internal team use. We generally use GCP as our experimental platform, so I wanted to deploy MLflow on GCP, but I couldn’t find a detailed guide on how to do so securely. There are several points where beginners like me tend to get stuck, so I decided to share a step-by-step guide to securely setting up MLflow on GCP. In this blog, I will share how to deploy MLflow on Cloud Run with Cloud IAP, VPC egress, and GCS FUSE. I referenced these great articles [1, 2]. Please note that this setup is not free.

System Architecture

The diagram below shows the overall architecture.

- Cloud Run for MLflow backend server

MLflow needs a backend server to serve the UI and enable remote storage of run artifacts. We deploy it on Cloud Run to save costs because it doesn’t need to run constantly.

- Cloud IAP + Cloud Load Balancing(HTTPS) for security

Cloud IAP grants access only to authenticated users who hold an appropriate IAM role. Intuitively, IAM roles provide fine-grained user access management. Since we want to deploy a service for internal team use, Cloud IAP suits this situation. Cloud IAP requires an external HTTP(S) load balancer in front of the service, so we configure both.

- Cloud Storage for MLflow artifact storage

MLflow needs to store artifacts such as trained models, training configuration files, etc. Cloud Storage is a low-cost, managed service for storing unstructured data (as opposed to tabular data). Although we could make Cloud Storage publicly reachable, we want to avoid exposing it externally; thus, we use GCS FUSE so that Cloud Run can connect to it without a global IP.

- Cloud SQL for MLflow metadata database

MLflow also needs to store metadata such as metrics, hyperparameters of models, evaluation results, etc. CloudSQL is a managed relational database service, so it is suitable for such a use case. We also want to avoid exposing it outside; thus, we use VPC egress to connect securely.

Now, let’s configure this architecture step by step! I will use the gcloud CLI as much as possible to reproduce results easily, but I will use GUI for some parts.

1. Prerequisites

- Install the gcloud CLI from the official site

I used a Mac (M2 chip) with macOS 14.4.1 for my environment, so I installed the macOS version. You can download the version that matches your environment. If you want to avoid setting up the environment on your local machine, you can also use Cloud Shell. For Windows users, I recommend using Cloud Shell.

- Install direnv from the official site

Direnv is very convenient for managing environment variables. It can load and unload them depending on the current directory. If you use macOS, you can download it using Bash. Note that you must hook direnv into your shell, following the instructions for the shell you use.

- Create Google Cloud project and user account

I assume that you already have a Google Cloud project. If not, you can follow these instructions. I also assume that you already have a user account associated with that project. If not, please follow this site. Then, please run the following command.

gcloud auth login

- Clone the git repository

I compiled the necessary files for this article into a repository, so clone it to your preferred location.

git clone https://github.com/tanukon/mlflow_on_GCP_CloudIAP.git

cd mlflow_on_GCP_CloudIAP

2. Define variables

For the first step, we configure the necessary variables to develop the MLflow environment. Please create a new file called .envrc. You need to set the following variables.

export PROJECT_ID=<The ID of your Google Cloud project>

export ROLE_ID=<The name for your custom role for mlflow server>

export SERVICE_ACCOUNT_ID=<The name for your service account>

export VPC_NETWORK_NAME=<The name for your VPC network>

export VPC_PEERING_NAME=<The name for your VPC peering service>

export CLOUD_SQL_NAME=<The name for CloudSQL instance>

export REGION=<Set your preferred region>

export ZONE=<Set your preferred zone>

export CLOUD_SQL_USER_NAME=<The name for CloudSQL user>

export CLOUD_SQL_USER_PASSWORD=<The password for CloudSQL user>

export DB_NAME=<The database name for CloudSQL>

export BUCKET_NAME=<The GCS bucket name>

export REPOSITORY_NAME=<The name for the Artifact repository>

export CONNECTOR_NAME=<The name for VPC connector>

export DOCKER_FILE_NAME=<The name for docker file>

export PROJECT_NUMBER=<The project number of your project>

export DOMAIN_NAME=<The domain name you want to get>

You can check the project ID and number in the ≡ >> Cloud overview >> Dashboard.

You also need to define the region and zone based on the Google Cloud settings from here. If you don’t care about network latency, any region is fine. Apart from those variables, you can name the others freely. After you define them, you need to run the following command.

direnv allow .
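After direnv has loaded the file, a quick check can catch a variable that was left unset or empty before any gcloud command depends on it. The helper below is a minimal sketch of my own (the function name check_required_vars is hypothetical, not part of the repository); it only inspects the current shell environment.

```shell
# Report which of the named variables are unset or empty.
# Returns 0 when every variable has a value, 1 otherwise.
check_required_vars() {
  missing=0
  for name in "$@"; do
    # Indirect lookup of the variable called $name (POSIX-compatible).
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example call, using variable names from the .envrc above:
# check_required_vars PROJECT_ID ROLE_ID SERVICE_ACCOUNT_ID REGION BUCKET_NAME
```

Pass it whichever variable names you want to verify; it prints one line per missing variable to standard error.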

3. Enable API and Define IAM role

The next step is to enable the necessary APIs. To do this, run the commands below one by one.

gcloud services enable servicenetworking.googleapis.com

gcloud services enable artifactregistry.googleapis.com

gcloud services enable run.googleapis.com

gcloud services enable domains.googleapis.com

Next, create a new role to include the necessary permissions.

gcloud iam roles create $ROLE_ID --project=$PROJECT_ID --title=mlflow_server_requirements --description="Necessary IAM permissions to configure MLflow server" --permissions=compute.networks.list,compute.addresses.create,compute.addresses.list,servicenetworking.services.addPeering,storage.buckets.create,storage.buckets.list

Then, create a new service account for the MLflow backend server (Cloud Run).

gcloud iam service-accounts create $SERVICE_ACCOUNT_ID

We attach a role we made in the previous step.

gcloud projects add-iam-policy-binding $PROJECT_ID --member=serviceAccount:$SERVICE_ACCOUNT_ID@$PROJECT_ID.iam.gserviceaccount.com --role=projects/$PROJECT_ID/roles/$ROLE_ID

Moreover, we need to attach the roles below. Please run the commands one by one.

gcloud projects add-iam-policy-binding $PROJECT_ID --member=serviceAccount:$SERVICE_ACCOUNT_ID@$PROJECT_ID.iam.gserviceaccount.com --role=roles/compute.networkUser

gcloud projects add-iam-policy-binding $PROJECT_ID --member=serviceAccount:$SERVICE_ACCOUNT_ID@$PROJECT_ID.iam.gserviceaccount.com --role=roles/artifactregistry.admin
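If you prefer not to repeat the binding command for every role, the same calls can be wrapped in a small loop. This is only a sketch under the assumption that PROJECT_ID and SERVICE_ACCOUNT_ID are exported as in .envrc; the function name bind_service_account_roles is my own and hypothetical.

```shell
# Bind each role passed as an argument to the MLflow service account.
# Assumes PROJECT_ID and SERVICE_ACCOUNT_ID are exported as in .envrc.
bind_service_account_roles() {
  member="serviceAccount:${SERVICE_ACCOUNT_ID}@${PROJECT_ID}.iam.gserviceaccount.com"
  for role in "$@"; do
    # Stop at the first failure so a typo in a role name is noticed.
    gcloud projects add-iam-policy-binding "$PROJECT_ID" \
      --member="$member" \
      --role="$role" || return 1
  done
}

# Example call with the roles used in this section:
# bind_service_account_roles \
#   "projects/$PROJECT_ID/roles/$ROLE_ID" \
#   roles/compute.networkUser \
#   roles/artifactregistry.admin
```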

4. Create a VPC network

We want to instantiate our database and storage without global IP to prevent public access; thus, we create a VPC network and instantiate them inside a VPC.

gcloud compute networks create $VPC_NETWORK_NAME \
  --subnet-mode=auto \
  --bgp-routing-mode=regional \
  --mtu=1460

We need to configure private services access for CloudSQL. GCP implements this with VPC peering, so we use it here. I referenced the official guide here.

gcloud compute addresses create google-managed-services-$VPC_NETWORK_NAME \
  --global \
  --purpose=VPC_PEERING \
  --addresses=192.168.0.0 \
  --prefix-length=16 \
  --network=projects/$PROJECT_ID/global/networks/$VPC_NETWORK_NAME

In the above command, any address range works as long as it falls within the private IP address ranges. Next, we create a private connection using VPC peering.

gcloud services vpc-peerings connect \
  --service=servicenetworking.googleapis.com \
  --ranges=google-managed-services-$VPC_NETWORK_NAME \
  --network=$VPC_NETWORK_NAME \
  --project=$PROJECT_ID
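The address reserved for peering earlier in this section has to be a private one. If you pick your own value for --addresses, a small check like the following can confirm that it falls in one of the RFC 1918 private ranges (10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16). It is a minimal sketch: the function name is_private_ipv4 is hypothetical, and it assumes a well-formed dotted-quad address rather than validating the format.

```shell
# Return 0 if the dotted-quad IPv4 address lies in an RFC 1918 private range:
# 10.0.0.0/8, 172.16.0.0/12, or 192.168.0.0/16.
# Assumes the argument is a well-formed IPv4 address.
is_private_ipv4() {
  # Extract the first two octets.
  first=${1%%.*}
  rest=${1#*.}
  second=${rest%%.*}
  case "$first" in
    10) return 0 ;;
    172) [ "$second" -ge 16 ] && [ "$second" -le 31 ] ;;
    192) [ "$second" -eq 168 ] ;;
    *) return 1 ;;
  esac
}

# Example call with the address used in this article:
# is_private_ipv4 192.168.0.0 && echo "private range"
```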

5. Configure CloudSQL with a private IP address

Now, we configure CloudSQL with a private IP address using the following command.

gcloud beta sql instances create $CLOUD_SQL_NAME \
  --project=$PROJECT_ID \
  --network=projects/$PROJECT_ID/global/networks/$VPC_NETWORK_NAME \
  --no-assign-ip \
  --enable-google-private-path \
  --database-version=POSTGRES_15 \
  --tier=db-f1-micro \
  --storage-type=HDD \
  --storage-size=200GB \
  --region=$REGION

It takes a couple of minutes to build a new instance. We don’t need a high-spec instance for CloudSQL because it is only used internally, so I used the smallest instance to save costs. You can ensure your instance is configured for private services access using the following command.

gcloud beta sql instances patch $CLOUD_SQL_NAME \
  --project=$PROJECT_ID \
  --network=projects/$PROJECT_ID/global/networks/$VPC_NETWORK_NAME \
  --no-assign-ip \
  --enable-google-private-path

For the next step, we need to create a login user so that the MLflow backend can access the database.

gcloud sql users create $CLOUD_SQL_USER_NAME \
  --instance=$CLOUD_SQL_NAME \
  --password=$CLOUD_SQL_USER_PASSWORD

Furthermore, we must create the database where the data will be stored.

gcloud sql databases create $DB_NAME --instance=$CLOUD_SQL_NAME

6. Create Google Cloud Storage(GCS) without global IP address

We will create a Google Cloud Storage(GCS) bucket to store experiment artifacts. Your bucket name must be unique.

gcloud storage buckets create gs://$BUCKET_NAME --project=$PROJECT_ID --uniform-bucket-level-access --public-access-prevention

To secure our bucket, we add an IAM policy binding to it so that only the service account we created can access the bucket.

gcloud storage buckets add-iam-policy-binding gs://$BUCKET_NAME --member=serviceAccount:$SERVICE_ACCOUNT_ID@$PROJECT_ID.iam.gserviceaccount.com --role=projects/$PROJECT_ID/roles/$ROLE_ID

7. Create secrets for credential information

We store credential information, such as the CloudSQL URI and bucket URI, in Google Cloud Secret Manager so that we can retrieve them securely. We can create the secrets by executing the following commands:

gcloud secrets create database_url

gcloud secrets create bucket_url

Now, we need to add the exact values for them. We define CloudSQL URL in the following format.

"postgresql://<CLOUD_SQL_USER_NAME>:<CLOUD_SQL_USER_PASSWORD>@<private IP address>/<DB_NAME>?host=/cloudsql/<PROJECT_ID>:<REGION>:<CLOUD_SQL_NAME>"

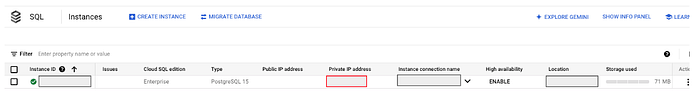

You can check your instance’s private IP address through your CloudSQL GUI page. The red line rectangle part is your instance’s private IP address.

You can set your secret using the following command. Please replace the placeholders with your own settings.

echo -n "postgresql://<CLOUD_SQL_USER_NAME>:<CLOUD_SQL_USER_PASSWORD>@<private IP address>/<DB_NAME>?host=/cloudsql/<PROJECT_ID>:<REGION>:<CLOUD_SQL_NAME>" | \
  gcloud secrets versions add database_url --data-file=-
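Typing the URI by hand is error-prone, so it can also be assembled from the variables already defined in .envrc. The sketch below follows the format shown above; the function name build_database_url is hypothetical. The commented gcloud sql instances describe call is an alternative to reading the IP from the GUI, and it assumes the private address is the first entry in ipAddresses, which should hold for an instance created with --no-assign-ip.

```shell
# Assemble the connection URI in the format shown above from the .envrc values.
# $1 is the private IP address of the CloudSQL instance.
build_database_url() {
  printf 'postgresql://%s:%s@%s/%s?host=/cloudsql/%s:%s:%s' \
    "$CLOUD_SQL_USER_NAME" "$CLOUD_SQL_USER_PASSWORD" "$1" "$DB_NAME" \
    "$PROJECT_ID" "$REGION" "$CLOUD_SQL_NAME"
}

# Example use:
# PRIVATE_IP=$(gcloud sql instances describe "$CLOUD_SQL_NAME" \
#   --format='value(ipAddresses[0].ipAddress)')
# build_database_url "$PRIVATE_IP" | \
#   gcloud secrets versions add database_url --data-file=-
```

Note that the function inserts the password as-is, so a password containing characters such as @, : or / would need to be percent-encoded first.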

For GCS, we will use GCS FUSE to mount the bucket directly to Cloud Run. Therefore, we store the directory path where we want to mount the bucket in the secret, for example, “/mnt/gcs”.

echo -n "<Directory path>" | \
  gcloud secrets versions add bucket_url --data-file=-

8. Create Artifact Registry

We must prepare an Artifact Registry repository to store the Docker image for the Cloud Run service. First of all, we create the repository.

gcloud artifacts repositories create $REPOSITORY_NAME \
  --location=$REGION \
  --repository-format=docker

Next, we build the Docker image from the Dockerfile and push it to the Artifact Registry.

gcloud builds submit --tag $REGION-docker.pkg.dev/$PROJECT_ID/$REPOSITORY_NAME/$DOCKER_FILE_NAME

9. Prepare domain for an external load balancer

Before deploying our container to Cloud Run, we need to prepare an external load balancer. An external load balancer requires a domain; thus, we must get a domain for our service. First, verify that the domain you want is not already in use.

gcloud domains registrations search-domains $DOMAIN_NAME

If the domain is already taken, choose another name. Once you have confirmed that your domain is available, you need to choose a DNS provider. In this blog, I used Cloud DNS, so we first create a managed zone for the domain. Note that the command below reuses $ZONE as the name of the managed zone. Registering a domain costs from about $12 per year. Please replace the <your domain> placeholder.

gcloud dns managed-zones create $ZONE \
  --description="The domain for internal ml service" \
  --dns-name=$DOMAIN_NAME.<your domain>

Then, you can register your domain. Please replace the <your domain> placeholder again.

gcloud domains registrations register $DOMAIN_NAME.<your domain>

10. Deploy Cloud Run using GUI

Now, we deploy Cloud Run using the container image we pushed to the Artifact Registry. After this deployment, we will configure Cloud IAP. Please click Cloud Run >> CREATE SERVICE. First, you must select the container image from your Artifact Registry. After you select it, the service name will be filled in automatically. Set the region to the same one as the Artifact Registry location.

We want to allow external load balancer traffic for Cloud IAP, so we must check the corresponding ingress option.

Next, the default setting allows us to use only 512 MB of memory, which is not enough to run the MLflow server (I encountered a memory shortage error). We change the memory allocation from 512 MB to 8 GB.

We need to get the secret variables for the CloudSQL and GCS Bucket path. Please set variables following the image below.

The network setting below is necessary to connect to CloudSQL and the GCS bucket (VPC egress setting). For the Network and Subnet fields, you must choose your VPC name.

In the SECURITY tab, you must choose the service account defined previously.

After scrolling to the end of the setting, you will see the Cloud SQL connections. You need to choose your instance.

After you set up, please click the CREATE button. If there is no error, the Cloud Run service will be deployed in your project. It takes a couple of minutes.
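For reproducibility, the console steps above can be approximated with gcloud run deploy. Please treat the following as a rough sketch rather than an exact equivalent of my GUI settings. The function name deploy_mlflow_service and the environment variable names DB_URL_ENV and BUCKET_URL_ENV are hypothetical, so use the names your container actually reads. The --vpc-egress value is also an assumption. I set --cpu=2 because, as far as I know, Cloud Run does not allow 8 GiB of memory with a single CPU. The subnet shares the network's name because we created the VPC in auto subnet mode. The authentication choice made in the console is not reproduced here.

```shell
# Deploy the MLflow image to Cloud Run with settings that mirror the console steps.
# $1 is the service name. DB_URL_ENV and BUCKET_URL_ENV are hypothetical
# environment variable names; replace them with the ones your container reads.
deploy_mlflow_service() {
  gcloud run deploy "$1" \
    --image="${REGION}-docker.pkg.dev/${PROJECT_ID}/${REPOSITORY_NAME}/${DOCKER_FILE_NAME}" \
    --region="$REGION" \
    --memory=8Gi \
    --cpu=2 \
    --ingress=internal-and-cloud-load-balancing \
    --service-account="${SERVICE_ACCOUNT_ID}@${PROJECT_ID}.iam.gserviceaccount.com" \
    --network="$VPC_NETWORK_NAME" \
    --subnet="$VPC_NETWORK_NAME" \
    --vpc-egress=private-ranges-only \
    --set-secrets="DB_URL_ENV=database_url:latest,BUCKET_URL_ENV=bucket_url:latest" \
    --add-cloudsql-instances="${PROJECT_ID}:${REGION}:${CLOUD_SQL_NAME}"
}

# Example call:
# deploy_mlflow_service mlflow-server
```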

After deploying the Cloud Run service, we need to update and configure the GCS FUSE setting. Please replace the placeholders corresponding to your environment.

gcloud beta run services update <Your service name> \
  --add-volume name=gcs,type=cloud-storage,bucket=$BUCKET_NAME \
  --add-volume-mount volume=gcs,mount-path=<bucket_url path>

So far, we haven’t been able to access the MLflow server because we haven’t set up an external load balancer with Cloud IAP. Google offers a convenient integration with other services for Cloud Run. Please open the Cloud Run page for your project and click your service name. You will see the page below.

After you click ADD INTEGRATION, you will see the page below. Please click Choose Custom domains — Google Cloud Load Balancing.

If there are any services you haven’t granted, please click GRANT ALL. After that, please enter the domain you got in the previous section.

After you fill in Domain 1 and Service 1, new resources will be created. It takes 5 to 30 minutes. After a while, a table is created with the DNS records you need to configure: use this to update your DNS records at your DNS provider.

Please move to the Cloud DNS page and click your zone name.

Then, you will see the page below. Please click the ADD STANDARD.

Now, you can set the DNS record using the global IP address shown in the table. The resource record type is A. Leave TTL at its default value, and enter the global IP address from the table in the IPv4 Address 1 field.
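If you would rather stay on the command line, the same A record can be created with gcloud dns record-sets create. This is a minimal sketch: the function name create_a_record is hypothetical, the TTL of 300 seconds is an assumed value rather than one taken from my setup, and $ZONE is the managed zone name used when the zone was created.

```shell
# Create an A record in the Cloud DNS zone, pointing the domain at the load balancer.
# $1 is the fully qualified domain name, $2 the global IP address from the table.
# The TTL of 300 seconds is an assumed value.
create_a_record() {
  # Cloud DNS record names end with a dot; strip one if present, then add it back.
  gcloud dns record-sets create "${1%.}." \
    --zone="$ZONE" \
    --type=A \
    --ttl=300 \
    --rrdatas="$2"
}

# Example call with a documentation-only domain and IP address:
# create_a_record mlflow.example.com 203.0.113.10
```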

After you update your DNS at your DNS provider, it can take up to 45 minutes to provision the SSL certificate and begin routing traffic to your service. So, please take a break!

If you can see the screen below, you have successfully created an external load balancer for Cloud Run.

Finally, we can configure Cloud IAP. Please open the Security >> Identity-Aware Proxy page and click the CONFIGURE CONSENT SCREEN.

You will see the screen below. Please choose Internal in User Type and click the CREATE button.

In App name, enter a name for your app, and enter your email address for both User support email and Developer contact information. Then click SAVE AND CONTINUE. You can skip the Scope page and finish creating the consent screen.

After you finish configuring the OAuth screen, you can turn on IAP.

Check the checkbox and click the TURN ON button.

Now, please return to the Cloud Run integration page. When you access the URL displayed in the Custom Domain, you will see an authentication failure page like the one below.

This happens because we need to add another IAM policy binding to access our app. You need to add “roles/iap.httpsResourceAccessor” to your account. Please replace <Your account>.

gcloud projects add-iam-policy-binding $PROJECT_ID --member='user:<Your account>' --role=roles/iap.httpsResourceAccessor
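Since the server is meant for a team, you will probably need to grant the same role to several accounts. A small loop over the same command handles that. The function name grant_iap_access and the example addresses are hypothetical.

```shell
# Grant IAP access to each Google account email passed as an argument.
# Assumes PROJECT_ID is exported as in .envrc.
grant_iap_access() {
  for email in "$@"; do
    gcloud projects add-iam-policy-binding "$PROJECT_ID" \
      --member="user:$email" \
      --role=roles/iap.httpsResourceAccessor || return 1
  done
}

# Example call (placeholder addresses):
# grant_iap_access alice@example.com bob@example.com
```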

After waiting a few minutes until the setting is reflected, you can finally see the MLflow GUI page.

11. Configure programmatic access for IAP authentication

To configure the programmatic access for IAP, we use an OAuth client. Please move to APIs & Services >> Credentials. The previous configuration of Cloud IAP automatically created an OAuth 2.0 client; thus, you can use it! Please copy the Client ID.

Next, you must download a key for the service account created earlier. Please move to IAM & Admin >> Service accounts and click your account name. You will see the following screen.

Then, move to the KEYS tab and click ADD KEY >> Create new key. Set key type as “JSON” and click CREATE. Please download the JSON file and change the filename.

Please add the lines below to the .envrc file. Note that you need to replace the placeholders based on your environment.

export MLFLOW_CLIENT_ID=<Your OAuth client ID>

export MLFLOW_TRACKING_URI=<Your service URL>

export GOOGLE_APPLICATION_CREDENTIALS=<Path for your service account credential JSON file>

Don’t forget to update the environment variables using the following command.

direnv allow .

I assume you already have a Python environment and have finished installing the necessary libraries. I prepared test_run.py to check that the deployment works correctly. Inside test_run.py, there is an authentication part and a part that sends parameters to the MLflow server. When you run test_run.py, you can see the dummy results stored in the MLflow server.
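For a quick smoke test from the shell, independent of test_run.py, you can request an identity token for the IAP OAuth client and call the MLflow server's /health endpoint with it. Please note that this is a sketch of my own and not the contents of test_run.py. It assumes that gcloud is currently authenticated as the service account and that this service account has been allowed through IAP. The function name check_mlflow_via_iap is hypothetical.

```shell
# Fetch an OpenID Connect token for the IAP OAuth client and call the MLflow
# server's /health endpoint with it.
# Assumes gcloud is authenticated as the service account, for example via
#   gcloud auth activate-service-account --key-file="$GOOGLE_APPLICATION_CREDENTIALS"
check_mlflow_via_iap() {
  token=$(gcloud auth print-identity-token --audiences="$MLFLOW_CLIENT_ID") || return 1
  # --fail makes curl return a non-zero status on HTTP errors such as 401 or 403.
  curl --silent --fail \
    --header "Authorization: Bearer $token" \
    "${MLFLOW_TRACKING_URI%/}/health"
}

# Example call, after the variables above are loaded by direnv:
# check_mlflow_via_iap
```

A non-zero exit status here usually points to the IAP side (client ID or missing role) rather than to MLflow itself.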

This is the end of this blog. Thank you for reading my article! If I missed anything, please let me know.

References

[1] Vargas, A., How to launch an MLFlow server with Continuous Deployment on GCP in minutes, Medium

[2] MLflowをGoogle Kubernetes Engineで動かす快適な実験管理ハンズオン (A hands-on guide to comfortable experiment management with MLflow on Google Kubernetes Engine), CyberAgent AI tech studio
