Data Scraping in the Spotlight: Are Language Models Overstepping by Training on Everyone’s Content?

Last Updated on July 24, 2023 by Editorial Team

Author(s): Viggy Balagopalakrishnan

Originally published on Towards AI.

While scraping enabled models to get where they are, cleanly sourced data is going to become more and important

As I wrapped up the research for this piece and was about to start writing, OpenAI had a perfect announcement to go with it — they are temporarily disabling the “Browse with Bing” feature on ChatGPT. If you haven’t used it before, this is a feature available to paying Plus users. Plus gives you access to primarily two things:

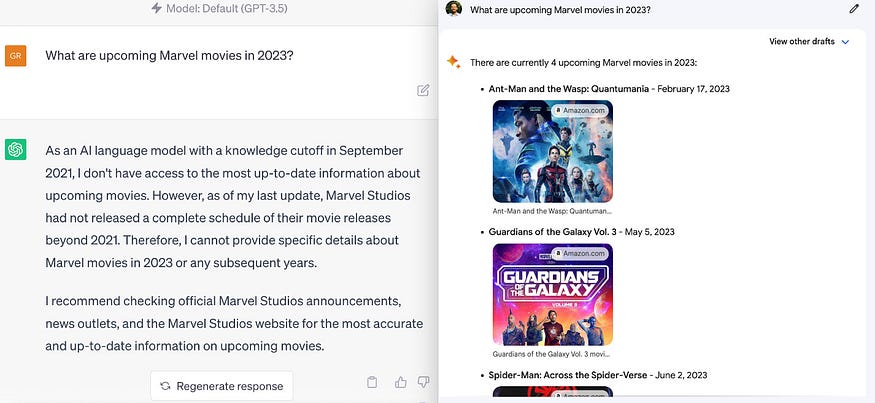

- Browse with Bing — By default, ChatGPT does not connect to real-time website data (eg. if you ask it what are upcoming Marvel movies in 2023, it won’t give you an answer because its training data stops at Sept 2021). Browse with Bing goes beyond this limitation by leveraging real-time information from sites across the web, which OpenAI now gets access to given their partnership with Microsoft Bing

- Plugins — These are integrations built into ChatGPT by independent companies to expose their capabilities through ChatGPT’s UI (eg. OpenTable lets you search for restaurant reservations, Kayak lets you search for flights from within ChatGPT if you use their plugins); These are experimental at this point and are “cool” features but users haven’t really found them useful (yet).

Therefore, Browse with Bing is particularly important for ChatGPT because its biggest competitor Google Bard has the ability to use real-time data from Google Search. See example responses from ChatGPT vs Bard for the Marvel movies in 2023:

So, you can see why it’s non-trivial for OpenAI to disable Browse with Bing (even temporarily). The reasoning is what’s interesting:

We have learned that the ChatGPT Browse beta can occasionally display content in ways we don’t want. For example, if a user specifically asks for a URL’s full text, it might inadvertently fulfill this request. As of July 3, 2023, we’ve disabled the Browse with Bing beta feature out of an abundance of caution while we fix this in order to do right by content owners. We are working to bring the beta back as quickly as possible, and appreciate your understanding!

It’s interesting because it brings into spotlight a larger issue: Companies like OpenAI and Google Bard are using a large amount of data to train their models but it’s unclear whether they have the permissions to use this data and how they are compensating creators / content platforms for use of this data.

In this article, we’ll unpack a few things:

- What are Large Language Models (LLMs) and why do they need data?

- Where are they getting this data from?

- Why should companies like OpenAI, Google care about how they source data?

- What strategies are content platforms adopting to respond to this?

At the end of the article, you will hopefully walk away with a fuller picture of this rapidly evolving topic. Let’s dive in.

What are Large Language Models and why do they need data?

We’ll start with a simple explainer of how Machine Learning models work — let’s say you want to predict how late your upcoming flight’s arrival time will be. A very basic version can be human guess work (eg. if weather sucks or if the airline sucks, it’s likely late). If you want to make that more reliable, you can take real data on flight arrivals times and pattern match it again various factors (eg. how arrival times related to airline, destination airport, temperature, rainfall etc.).

Now you can take this one step further, use the data and create a math equation to predict this. For example: Delay minutes = A * airline reliability score + B * busy-ness of an airport + C * amount of rainfall. How do you calculate A, B, C? By using the large volume of past arrival time data you have and doing some math on it.

This equation in math terms is called a “regression” and is the one of most commonly use basic machine learning models. Note that the model is basically a math formula comprising of “features” (eg. airline reliability score, busy-ness of an airport, amount of rainfall) and “weights” (eg. A, B, C which show how much weight each variable adds to the prediction).

The same concept can be extended to other more complex models — like “neural networks” (that you might have heard in the context of deep learning) or Large Language Models (often abbreviated to LLMs and are the underlying models for all text-based AI products such as Google Search, ChatGPT and Google Bard).

We won’t go into too much detail but each of these models, including LLMs, are a combination of “features” and “weights”. The most performant models have the best combination of features and weights, the way to get to that combination is through training with a TON of data. The more data you have, the more performant the model. Therefore, having a massive volume of data is critical and companies that train these models need to source this data.

Where are they getting this data from?

Broadly, data sources can be broadly categorized into:

- Open Source Data: These are high volume data sources that are typically available for commercial purposes, including LLM training. Examples of large open source data include Wikipedia, CommonCrawl (an open repository of web crawl data), Project Gutenberg (free eBooks), BookCorpus (free books written by unpublished authors) to name a few.

- Independent Content Websites: These include a broad set of websites such as news publications (think Washington Post, the Guardian), creator-specific platforms (think Kickstarter, Patreon, Medium) and user-generated content platforms (think Reddit, Twitter). These typically have more restrictive policies when it comes to scraping their content, especially if it’s used for commercial purposes.

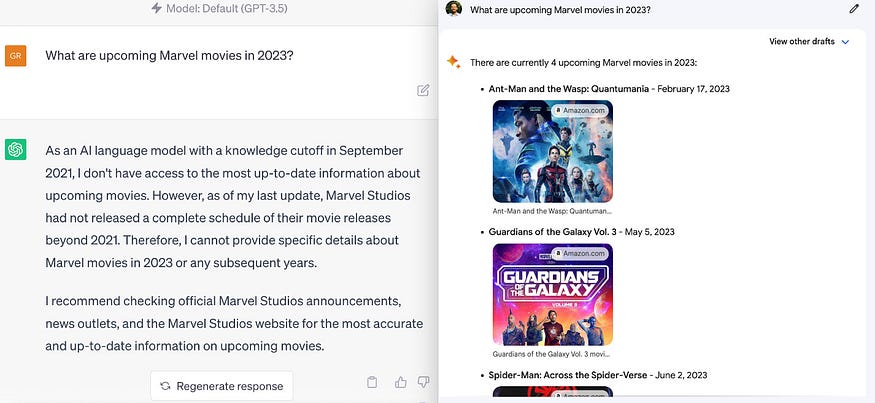

In an ideal world, LLM companies would explicitly list out all the data sources they have used / scraped and do so in compliance with the policies of whoever owns the content. However, several of them have been non-transparent about it, the biggest offender being OpenAI (maker of ChatGPT). Google published one dataset it used for training, called C-4. The Washington Post put together a neat analysis of this data, here are the top 30 sources based on their analysis:

Most of this data was acquired from scraping and content platforms contend that this data was scraped in violation of their terms of use. They are clearly unhappy about it, especially given the amount of upside the LLM companies are able to capture from the data.

Why should companies like OpenAI, Google care about how they source data?

Okay, content providers are complaining. So what? Should companies with LLM products care about this, besides wanting to be “fair” out of the goodness of their hearts?

Data sourcing is becoming increasingly critical for two major reasons.

Legal Complications: Companies developing LLMs are starting to find themselves embroiled in lawsuits from content creators and publishers who believe their data was used without permission. Legal battles can be costly and tarnish the reputation of the companies involved. Case in point:

- Microsoft, GitHub, and OpenAI are being sued for allegedly violating copyright law by reproducing open-source code using AI

- Getty Images sues AI art generator Stable Diffusion

- AI art tools Stable Diffusion and Midjourney targeted with copyright lawsuit

[side note: Stable Diffusion, Midjourney are AI image generators and not language generators and therefore not “LLMs” but the same principles of what constitutes a model and how they are trained is the same]

Making headway with Enterprise Customers: Enterprise customers employing LLMs or their derivatives need to be assured of the legitimacy of the training data. They do not want to face legal challenges due to the data sourcing practices of the LLMs they use, especially if they cannot pass on the liability of those lawsuits to the LLM providers.

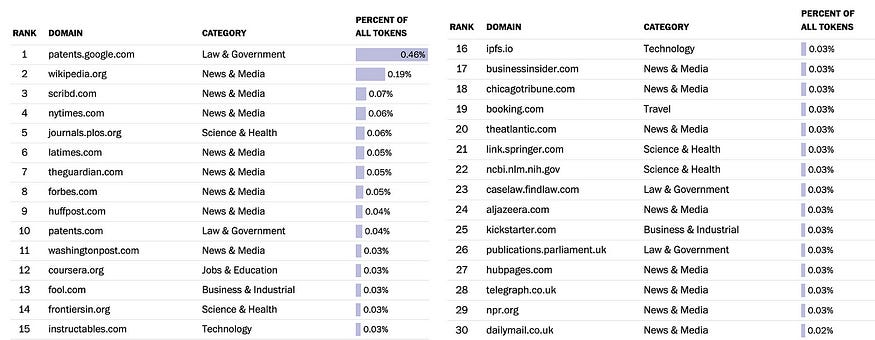

Can you really build effective models with all of these messy data sourcing constraints? That’s a fair question. A masterclass in applying these principles is the recent announcement of Adobe Firefly (it’s a cool product and in open beta, you can play around with it) — the product has a wide set of features including Text to image, i.e. you can type a line of text and it will generate an image for you.

What makes Firefly a great example is:

- Adobe only uses images that are part of Adobe Stock that they already have the licenses for, plus open source images that are not license restricted. In addition, they have also announced that they want to build generative AI in a way that enables creators to monetize their talents and that they will announce a compensation model for Adobe Stock contributors once Firefly is out of beta

- Adobe will indemnify its customers for Firefly outputs (starting with the text to image feature) — if you haven’t heard the term “indemnify” before, in simple terms, Adobe is saying they are confident they have cleanly sourced the data going into their models and are therefore willing to cover any legislation that might come up if someone sues an Adobe customer for using Firefly output.

One criticism of the clean data sourcing approach has been that it will hurt the quality of output generated by the models. The opposite side of that argument is that high quality data owned by content providers can provide better quality input to model training (garbage in, garbage out is real when it comes to model training). In the image below, left is an output from Adobe Firefly, right is from OpenAI’s Dall-E. If you compare the two, they are quite similar and Firefly’s output is arguably more realistic, which goes to show that high quality language models can be built off of just cleanly sourced data.

What strategies are content platforms adopting to respond to this?

Several companies that have large volume of content have come out strongly expressing that they intend to charge AI companies for using their data. It’s important to note that most of them have not come up with an anti-AI stance (i.e. they are not saying AI is going to take over our business, so we are shutting down access to content). They are mostly pushing for a commercial construct that defines how the access of this data will occur and how they will get compensated for it.

StackOverflow, arguably the most popular forum that programmers use when they need help, plans to begin charging large AI developers for access to the 50 million Q&A content on its service. StackOverflow CEO Prashanth Chandrasekar laid out some reasonable arguments:

- The additional revenue will be vital to ensuring StackOverflow can keep attracting users and maintaining high-quality information, which will also help future chatbots by generating new knowledge on the platform

- StackOverflow will continue to license data for free to some people and companies, and only looking to charge companies developing LLMs forcommercial purposes

- He argues that LLM developers are violating Stack Overflow’s terms of service, which he believes falls under a Creative Commons license that requires anyone later using the data to mention where it came from (which LLMs don’t do)

Reddit came out with a similar announcement (alongside their controversial changes to API pricing that shut down several third party apps). Reddit CEO Steve Huffman told the Times “The Reddit corpus of data is really valuable but we don’t need to give all of that value to some of the largest companies in the world for free”.

Twitter stopped free access to their APIs earlier this year, and also announced a recent change that limits the number of tweets a user can see in a day, in an attempt to prevent unauthorized scraping of data. Though the execution and rollout of the policies leave much to be desired, the intent is clear that they do not intend to provide free data access for commercial purposes.

Another group that has come out with a united front and critique of LLMs is news organizations. The News/Media Alliance (NMA), which represents publishers in print and digital media in the US, has published what they are calling AI principles. While there isn’t much tactical detail here, the message they are trying to get across is clear:

GAI (Generative AI) developers and deployers should not use publisher IP without permission, and publishers should have the right to negotiate for fair compensation for use of their IP by these developers.

Negotiating written, formal agreements is therefore necessary.

The fair use doctrine does not justify the unauthorized use of publisher content, archives and databases for and by GAI systems. Any previous or existing use of such content without express permission is a violation of copyright law.

Again, their arguments have not been to shut these down but to have commercial agreements in place to use this data in compliance with copyright law, and they also make the argument that compensation frameworks (for example, licensing) already exist in the market today and therefore will not slow innovation.

Conclusion

This is just the beginning. Platforms with high volume of content are likely to seek compensation for their data. Even companies that have not yet announced this intent but already have other forms of data licensing programs (eg. LinkedIn, Foursquare, Reuters) are likely to adapt them for AI/LLM companies.

Though this development may seem like a hindrance to innovation, it is a necessary step for the long-term sustainability of content platforms. By ensuring they are compensated fairly, content creators can continue to produce quality content, which in turn will feed into making LLMs more effective.

Thank you for reading! If you liked this piece, do consider subscribing to the Unpacked newsletter where I publish weekly in-depth analyses of current tech and business topics. You can also follow me on Twitter @viggybala. Best, Viggy.

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.