Create a Boolean Image Classifier Fast With Any Data Set, With a Brief Explanation of the Convolutional Neural Network (CNN) With Code

Last Updated on July 17, 2023 by Editorial Team

Author(s): Nafiu

Originally published on Towards AI.

Hi everyone, in this post, we are going to look at a type of neural network called the Convolutional Neural Network. Here we will learn how it works in detail and what you should consider when building it. And to make things interesting, we will train a machine-learning model and make predictions in real-time.

What you will learn

- How CNN works

- How to prepare images for training

- How to implement a CNN

CNN overview

A Convolutional Neural Network (CNN), also known as a ConvNet, is a type of deep neural network designed for finding patterns in images, which makes it well suited to image recognition and other computer vision tasks. Although CNNs are designed primarily for processing images, they can also be used to classify non-image data, such as audio. In this post, however, we will focus on processing images with a CNN.

Things you should be familiar with before diving deep into CNN

1. Batch size

The batch size is the number of samples that are passed through the network in one forward and backward pass.

E.g., imagine you have 160 training samples and your batch size is 32. The network takes the first 32 samples from the training dataset (1st to 32nd) and trains on them. Next, it takes the next 32 samples (33rd to 64th) and trains again. This continues until all 5 batches have been processed, which completes one epoch.
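As a quick check of the batching arithmetic, here is a minimal sketch in plain Python. The helper name batch_ranges is made up for illustration and is not part of TensorFlow; it only shows which sample positions land in which batch.

```python
def batch_ranges(n_samples, batch_size):
    """Return the (first, last) 1-based sample positions of each batch."""
    ranges = []
    for start in range(0, n_samples, batch_size):
        # The last batch may be smaller if n_samples is not a multiple of batch_size
        end = min(start + batch_size, n_samples)
        ranges.append((start + 1, end))
    return ranges


batches = batch_ranges(160, 32)
for number, (first, last) in enumerate(batches, start=1):
    print(f"batch {number}: samples {first} to {last}")
```

With 160 samples and a batch size of 32, this gives 5 batches, and the second one covers samples 33 to 64.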

2. Input_shape

The input shape has 4 parameters: batch size, height, width, and channels. The batch size, height, and width are chosen in the image processing phase, where we resize the images and otherwise prepare our dataset. The fourth parameter, “channels,” depends on the type of image we use: it is 1 for grayscale images and 3 for RGB images, since an RGB image stores a red, a green, and a blue value for every pixel.

Imagine you are using RGB images and the preprocessed images are 256×256 pixels; then the input shape would look like this: input_shape=(256,256,3). As you can see, we have not specified a batch size, so we can use any batch size when training the model. We will come back to this when we build our model and go through its summary.
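To make the role of the missing batch dimension concrete, here is a small sketch in plain Python. The helper with_batch_dimension is hypothetical and only mimics how a framework prepends the batch size to the per-image shape; Keras shows an unspecified batch size as None in the model summary.

```python
def with_batch_dimension(input_shape, batch_size=None):
    """Prepend the batch dimension to a per-image (height, width, channels) shape."""
    return (batch_size,) + tuple(input_shape)


input_shape = (256, 256, 3)  # height, width, channels of a 256x256 RGB image

height, width, channels = input_shape
values_per_image = height * width * channels  # numbers the network sees per image

print(with_batch_dimension(input_shape))      # batch size left open
print(with_batch_dimension(input_shape, 32))  # one batch of 32 images
print(values_per_image)
```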

3. Filter

A filter, also called a kernel or feature detector, is a small matrix that moves over the image pixels and extracts features from the input image to create an output feature map. This feature map is the input to the pooling layer that follows. (We will go into more detail about the pooling layer when we talk about the architecture.) A convolutional layer can have multiple filters; the filter size is specified per layer, and the movement of the filters is described by the stride.

4. Stride

The stride is the number of pixels the filter moves at each step as it slides across the input image to extract features.

Example:

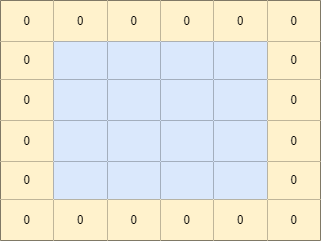

Imagine we have an input image of 6×6, and we selected the filter size to be 3×3 and the stride as 1. This means the 3×3 filter will move across the 6×6 image one pixel by pixel to form the output feature map. Let's visualize that:

This example is based on a grayscale image. If the image is RGB, each filter must have 3 channels, since RGB images are 3 channels deep. Each channel of the filter slides over its corresponding image channel, and the three results are added together into a single output feature map.
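The sliding-filter idea for the single-channel case can be sketched in plain Python as follows. The image values and the filter are made up for illustration, and the function assumes a square image and a square filter with no padding. Like deep-learning libraries, it slides the filter without flipping it.

```python
def conv2d(image, kernel, stride=1):
    """Slide a square kernel over a square image (no padding) and sum the products."""
    k = len(kernel)
    out_size = (len(image) - k) // stride + 1
    output = []
    for row in range(out_size):
        out_row = []
        for col in range(out_size):
            total = 0
            for i in range(k):
                for j in range(k):
                    total += image[row * stride + i][col * stride + j] * kernel[i][j]
            out_row.append(total)
        output.append(out_row)
    return output


# Made-up 6x6 grayscale image whose values grow from left to right and top to bottom
image = [[r * 6 + c for c in range(6)] for r in range(6)]
# Made-up 3x3 filter that responds to horizontal changes in brightness
kernel = [[1, 0, -1], [1, 0, -1], [1, 0, -1]]

feature_map = conv2d(image, kernel, stride=1)
print(len(feature_map), "x", len(feature_map[0]))
for row in feature_map:
    print(row)
```

With a stride of 1 the 6×6 image yields a 4×4 feature map; calling conv2d(image, kernel, stride=2) shrinks it to 2×2.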

5. Padding

Padding adds an outer border of zero-valued pixels to each side of the input so that the output feature map keeps the same size as the input image.

We won’t be using padding when we implement our model, but let’s see how padding can be added to a convolution layer. The easiest way is the padding argument, which accepts “valid” or “same”: “valid” means no padding, and “same” means pad so that the output is the same size as the input (with a stride of 1).
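Here is a rough sketch of what the two padding modes mean for the output size, in plain Python. The helpers zero_pad and conv_output_size are made up for illustration; the size rules are the usual ones for “valid” and “same” padding.

```python
import math


def zero_pad(image, pad):
    """Surround a 2D nested-list image with `pad` rows and columns of zeros."""
    width = len(image[0]) + 2 * pad
    padded = [[0] * width for _ in range(pad)]
    for row in image:
        padded.append([0] * pad + list(row) + [0] * pad)
    padded.extend([0] * width for _ in range(pad))
    return padded


def conv_output_size(size, kernel, stride=1, padding="valid"):
    """Output height/width of a convolution under the two padding modes."""
    if padding == "valid":
        return (size - kernel) // stride + 1
    if padding == "same":
        return math.ceil(size / stride)
    raise ValueError("padding must be 'valid' or 'same'")


image = [[1] * 6 for _ in range(6)]  # made-up 6x6 image of ones
padded = zero_pad(image, 1)          # one pixel of zeros on every side -> 8x8

print(len(padded), "x", len(padded[0]))
print(conv_output_size(6, 3, padding="valid"))  # no padding
print(conv_output_size(6, 3, padding="same"))   # padded to keep the size
```

For a 3×3 filter with a stride of 1, one pixel of zero padding on each side is enough: the padded 8×8 image convolves back down to 6×6.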

6. Sigmoid Activation function

The sigmoid activation function is used to add non-linearity to an ML model. In simple terms, it takes any real-valued output and squashes it into the range between 0 and 1.
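As a minimal sketch, sigmoid can be written in plain Python like this. The two branches compute the same function; they are split only so that very large negative inputs do not overflow.

```python
import math


def sigmoid(x):
    """Squash any real number into the open interval (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # safe for very negative x
    return z / (1.0 + z)


for value in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(value, round(sigmoid(value), 4))
```

An input of 0 maps to exactly 0.5, which is why 0.5 is a natural threshold for a two-class prediction.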

7. Relu Activation function

The rectified linear unit, known as Relu, is the most popular nonlinear activation function used in deep neural networks. It works by converting all negative values to 0 and keeping all positive values unchanged. This helps mitigate the vanishing gradient problem and also allows models to learn faster.
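In plain Python, Relu and its gradient can be sketched as below. The input values are made up for illustration. The gradient is 1 for every positive input, which is the property that keeps gradients from shrinking as they pass through many layers.

```python
def relu(x):
    """Return 0 for negative inputs and the input itself otherwise."""
    return max(0.0, x)


def relu_gradient(x):
    """Slope of Relu: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


values = [-3.0, -0.5, 0.0, 2.0, 7.5]
activated = [relu(v) for v in values]
gradients = [relu_gradient(v) for v in values]

print(activated)
print(gradients)
```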

CNN architecture

Let’s start with the types of layers involved in creating a CNN. A simple CNN normally has 3 kinds of layers: a convolution layer, a pooling layer, and a fully connected (Dense) layer. A Flatten layer sits before the first Dense layer, and an activation function is applied to each convolution and Dense layer. Let’s see what each of these layers does…

Convolution Layer

This is the main building block of a CNN, and a CNN can have multiple convolution layers. In the CNN described here, the first layer is a convolution layer, which takes the images as input to begin the process. The key points to consider when implementing this layer are the input image shape, the filters, and the stride. We have already discussed these in the prerequisites part of this post, but let’s brush up anyway.

- Input image shape:

Here you should consider the batch size, width, height, and depth of the image. The depth (number of channels) depends on the type of image we use. See the Input_shape section above for more.

- Filter:

There can be multiple filters/kernels in a single convolution layer, so you should specify the number of filters, the size of each filter, and the stride. Filters scan through the image and extract important features. See the Filter and Stride sections above for more.

In simple terms, the purpose of the convolution layer is to extract the important features from images and create the output feature map that is fed into the pooling layer.

Pooling layer

The pooling layer is the second most important layer in a CNN. It takes the feature map and passes another filter over it to reduce its dimensions, producing a pooled feature map that keeps the most important features of the input feature map.

Two types of pooling layers:

Max pooling:

In max pooling, the output is the maximum element of the region of the input feature map covered by the filter.

Average pooling:

In average pooling, the output is the average of the elements in the region of the input feature map covered by the filter.

Example: if the input feature map is 4×4 and the pooling filter is 2×2 (the default) with the default stride of 2, the pooled output is 2×2.
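The 4×4 example can be worked through with a short sketch in plain Python. The feature map values are made up for illustration, and pool2d is a hypothetical helper, not a TensorFlow function.

```python
def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Apply max or average pooling to a 2D nested-list feature map."""
    pooled = []
    for r in range(0, len(feature_map) - size + 1, stride):
        pooled_row = []
        for c in range(0, len(feature_map[0]) - size + 1, stride):
            window = [feature_map[r + i][c + j]
                      for i in range(size) for j in range(size)]
            if mode == "max":
                pooled_row.append(max(window))
            else:
                pooled_row.append(sum(window) / len(window))
        pooled.append(pooled_row)
    return pooled


# Made-up 4x4 feature map
feature_map = [
    [1, 3, 2, 4],
    [5, 6, 1, 2],
    [7, 2, 9, 0],
    [1, 4, 3, 8],
]

max_pooled = pool2d(feature_map, mode="max")
avg_pooled = pool2d(feature_map, mode="average")

print(max_pooled)
print(avg_pooled)
```

Each 2×2 block of the input collapses to a single number, so the 4×4 map becomes 2×2 in both cases; only the way the number is chosen differs.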

Flatten layer

This layer converts the final pooled feature map into a one-dimensional vector in order to prepare the input for the next Dense layer.
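A tiny sketch of flattening in plain Python, using a made-up pooled feature map with height 2, width 2, and 2 channels. The recursive flatten helper is for illustration only.

```python
def flatten(nested):
    """Flatten arbitrarily nested lists into one flat list, keeping the order."""
    flat = []
    for item in nested:
        if isinstance(item, list):
            flat.extend(flatten(item))
        else:
            flat.append(item)
    return flat


# Made-up pooled output: 2 rows x 2 columns x 2 channels
pooled = [
    [[6, 1], [4, 0]],
    [[7, 2], [9, 5]],
]

flat = flatten(pooled)
print(flat)
print(len(flat))  # 2 * 2 * 2 values
```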

Dense Layer

There can be multiple Dense layers in a CNN. These layers use the flattened feature map to combine the detected features and classify the image. The Relu activation function is used in every Dense layer except the last one, since that is the layer that makes the final classification.

Connecting the dots

The CNN starts with a convolution layer. A CNN can have multiple convolution layers, each followed by a pooling layer. The first convolution layer takes the image as input, and the job of the convolution and pooling layers is to extract important features from the image. Next comes the Flatten layer, which is where the fully connected part begins: the output of the last pooling layer is fed into it and converted into one-dimensional data, which is then passed through the Dense layers in order to classify the image. There can be multiple Dense layers in a CNN. Relu activation is used in each convolution and Dense layer except the final Dense layer, where sigmoid, softmax, or another activation function is used according to our needs.

Implementation

For demonstration, we are going to use the gender classification dataset from Kaggle. You can get it here: https://www.kaggle.com/datasets/cashutosh/gender-classification-dataset

Download the archive.zip file and unzip it in the working directory. This dataset has two folders, Training and Validation; inside each of these folders, there are two more folders named male and female, which hold the images for each gender.

Here we will use the power of Python to load those images, process them, and create and train our model.

Let’s get started.

Importing required libraries

import tensorflow as tf

import numpy as np

from tensorflow.keras.preprocessing.image import ImageDataGenerator

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Conv2D, MaxPooling2D, Dense, Flatten, Dropout

Loading data and processing images

To make things fast and easy, we will use the ImageDataGenerator API from TensorFlow to load and set up our dataset for training.

Set the batch size to 32 (see the Batch size section above).

BATCH_SIZE = 32

Initialize the ImageDataGenerator API. In the train dataset generator, we will set the validation split to 20%, which we will use later as a test dataset.

TrainTest_Datagen = ImageDataGenerator(
    rescale=1./255,
    validation_split=0.2  # we will use this as test data
)

Validation_datagen = ImageDataGenerator(
    rescale=1./255,
)

Call the API with the required information, such as the data directory, image size, batch_size, and class mode.

Set the image size as 256×256 and the class_mode as binary. Let’s see the code.

train_data = TrainTest_Datagen.flow_from_directory(
    './Training',
    target_size=(256, 256),
    batch_size=BATCH_SIZE,
    class_mode='binary',
    subset='training'
)

Test_data = TrainTest_Datagen.flow_from_directory(
    './Training',
    target_size=(256, 256),
    batch_size=BATCH_SIZE,
    class_mode='binary',
    subset='validation'  # set as test data
)

Val_data = Validation_datagen.flow_from_directory(
    './Validation',
    target_size=(256, 256),
    batch_size=BATCH_SIZE,
    class_mode='binary',
)

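Check the class labels assigned by the image generator. The folder names are mapped to indices in alphabetical order, so with the female and male folders we expect female to be 0 and male to be 1.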

train_data.class_indices

Modeling and Training

We will use the TensorFlow Sequential API to create our model. Before we build it, let’s discuss its architecture.

- Three convolution layers, with the number of filters increasing from 16 to 32 to 64. Each has the Relu activation function applied and is followed by a max pooling layer, so there are three convolution layers and three pooling layers in total.

- Next, a Flatten layer.

- Two Dense layers: the first has 256 neurons with the Relu activation function, and the second has 1 neuron with the sigmoid activation function.

Let’s see the code.

model = Sequential([
    Conv2D(16, (3, 3), 1, activation='relu', input_shape=(256, 256, 3)),
    MaxPooling2D(),
    Conv2D(32, (3, 3), 1, activation='relu'),
    MaxPooling2D(),
    Conv2D(64, (3, 3), 1, activation='relu'),
    MaxPooling2D(),
    Flatten(),
    Dense(256, activation='relu'),
    Dense(1, activation='sigmoid')
])

Compile the model and view the summary.

model.compile(optimizer="adam",loss='binary_crossentropy',metrics = ['accuracy'])

model.summary()
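To see where the shapes and parameter counts in the summary come from, here is a back-of-the-envelope sketch in plain Python. It assumes the architecture described above (3×3 filters, stride 1, no padding, 2×2 max pooling), and the helper names are made up. The numbers it prints should line up with what model.summary() reports, but treat it as a sanity check rather than a replacement for the summary.

```python
def conv_out(size, kernel=3, stride=1):
    """Output height/width of a convolution with no padding."""
    return (size - kernel) // stride + 1


def pool_out(size, pool=2):
    """Output height/width of 2x2 pooling with stride 2 and no padding."""
    return (size - pool) // pool + 1


size, channels = 256, 3  # 256x256 RGB input
total_params = 0
shapes = []

for filters in (16, 32, 64):
    # Each filter has 3*3*channels weights plus one bias
    total_params += (3 * 3 * channels + 1) * filters
    size = conv_out(size)
    shapes.append(("conv", size, size, filters))
    size = pool_out(size)
    shapes.append(("pool", size, size, filters))
    channels = filters

flattened = size * size * channels
total_params += (flattened + 1) * 256  # Dense(256): weights plus biases
total_params += (256 + 1) * 1          # Dense(1): weights plus bias

for shape in shapes:
    print(shape)
print("flattened:", flattened)
print("parameters:", total_params)
```

Almost all of the parameters sit in the first Dense layer, because the Flatten layer hands it 57,600 values.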

Train the model. We will train for up to 20 epochs, with 1175 steps per epoch for the training dataset and 364 steps per epoch for the validation dataset. We will also use EarlyStopping to avoid overfitting while training the model.
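The steps per epoch are tied to the number of images and the batch size. Here is a minimal sketch of that relationship in plain Python, using a hypothetical sample count rather than the real size of this dataset; the helper names are made up.

```python
import math


def steps_to_cover(n_samples, batch_size):
    """Batches needed to see every sample once (the last batch may be smaller)."""
    return math.ceil(n_samples / batch_size)


def full_batches(n_samples, batch_size):
    """Number of batches that contain exactly batch_size samples."""
    return n_samples // batch_size


n_samples = 1000  # hypothetical image count, not the real dataset size
batch_size = 32

print(steps_to_cover(n_samples, batch_size))
print(full_batches(n_samples, batch_size))
```

Using the smaller number skips the incomplete final batch in each epoch; using the larger one covers every image.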

es = tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=5, verbose=1)

history = model.fit(
    train_data,
    steps_per_epoch=1175,
    epochs=20,
    validation_data=Val_data,
    validation_steps=364,
    callbacks=[es]
)

Here we can see that our model stopped training when it reached the 8th epoch.
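The effect of patience=5 can be illustrated with a simplified sketch in plain Python. This is not the Keras implementation, and the validation losses below are made up to show one way training could end at epoch 8; they are not the values from this run.

```python
def early_stopping_epoch(val_losses, patience=5):
    """Return the epoch at which training stops, given per-epoch validation losses."""
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            # Improvement: remember it and reset the counter
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return len(val_losses)  # never triggered: ran all epochs


# Made-up losses: the best value is at epoch 3, then no improvement
losses = [0.40, 0.30, 0.25, 0.26, 0.27, 0.28, 0.29, 0.30, 0.31]
stopped_at = early_stopping_epoch(losses, patience=5)
print(stopped_at)
```

Training stops once the validation loss has gone 5 epochs in a row without beating its best value.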

Evaluating our model

Here we will use our test dataset to evaluate our model and check its accuracy. The next piece of code does just that:

test_loss, test_acc = model.evaluate(Test_data, verbose=0)

print('\nTest accuracy:', np.round(test_acc * 100,3))

Well, we got 94.7% accuracy, which is awesome.

But wait, how can we use this model in real-time?

Real-time prediction

First, let’s write a function to process our images and call the model and print out the results for us.

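def RealPredict(img_path):
    # Read and decode the image as RGB (3 channels)
    img_test = tf.io.read_file(img_path)
    img_test = tf.io.decode_jpeg(img_test, channels=3)
    # Resize to the 256x256 input size the model was trained on
    img_test = tf.image.resize(img_test, [256, 256], method="nearest")
    # Scale pixels to [0, 1], matching rescale=1./255 used during training
    img_test = tf.cast(img_test, tf.float32) / 255.0
    # Add a batch dimension and take the single sigmoid output
    yhat = model.predict(np.expand_dims(img_test, 0))[0][0]
    # Assumes class_indices maps female to 0 and male to 1
    if yhat < 0.5:
        print('Predicted the person a female')
    else:
        print('Predicted the person a male')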
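Rather than hard-coding which side of 0.5 means which class, the label can be looked up from the generator's class mapping. Here is a minimal sketch in plain Python; the helper label_from_probability is hypothetical, and the class_indices dictionary is an assumed example of what train_data.class_indices returns for the female and male folders.

```python
def label_from_probability(probability, class_indices, threshold=0.5):
    """Map a sigmoid output to a class name using a name -> index mapping."""
    index_to_class = {index: name for name, index in class_indices.items()}
    predicted_index = 1 if probability >= threshold else 0
    return index_to_class[predicted_index]


# Assumed mapping; check train_data.class_indices for the real one
class_indices = {"female": 0, "male": 1}

print(label_from_probability(0.12, class_indices))
print(label_from_probability(0.93, class_indices))
```

This keeps the prediction code correct even if the folder names, and therefore the index order, change.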

Let’s run the function and see if we get the correct results.

Yay, hooray, our model works perfectly. Well done!

Well, this is the end of this tutorial about CNN and image classification, I hope you enjoyed it. Don’t forget to follow me since I will be posting great guided tutorials about machine learning and full-stack development.

Link to GitHub repo: https://github.com/nafiu-dev/boolean_image_classifier_FAST-

You can connect with me here:

Instagram: https://www.instagram.com/nafiu.dev

LinkedIn: https://www.linkedin.com/in/nafiu-nizar-93a16720b

