AI on the Edge — An AI Nerd Series |#01-Pilot

Last Updated on July 24, 2023 by Editorial Team

Author(s): Rakesh Acharya

Originally published on Towards AI.

The advent of Edge Computing and the need to bring AI to the Edge

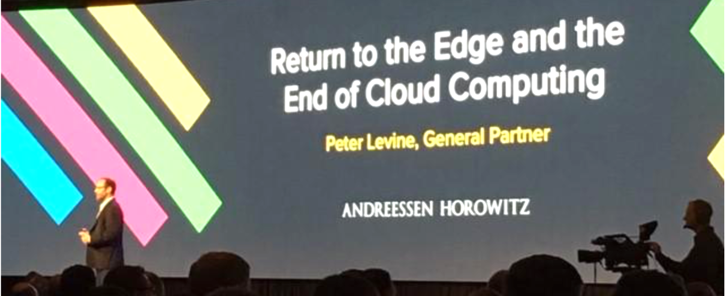

In the beginning, there was one big computer. Soon we started connecting to it using terminals (the UNIX era). Next came personal computers, and this was the first time regular people owned the hardware that did the work. Today we are firmly in the Cloud Computing era: the world is centralized, with a central cloud doing most of the required processing. The truly amazing thing about the cloud is that a large percentage of all companies in the world now rely on the infrastructure, hosting, machine learning, and compute power of a very select few cloud providers: Amazon, Microsoft, Google, and IBM. But things got a little stirred up when Peter Levine of Andreessen Horowitz (a16z) presented an interesting working theory in a talk titled “The End of Cloud Computing” (!!!).

Everything that’s popular in technology always gets replaced by something else. — Peter Levine

The advent of Edge Computing is something we have to pay attention to. The buzzword reflects the tech giants' realization that the Cloud space is largely exhausted, so most of the remaining disruptive opportunities lie at the Edge.

Moving towards the Edge

In recent years, the Internet of Things (IoT), meaning devices and sensors that collect and receive data, has proliferated into new and vast applications such as autonomous vehicles, video surveillance, logistics, agriculture, consumer electronics, augmented and virtual reality, industrial automation, and battlefield technology. The Alliance for Internet of Things Innovation projected that by 2021, the so-called “age of the IoT,” 48 billion (!!!) devices would be connected to the internet. This expansion has shifted the processing requirements that come with it. Cloud Computing relies on mega data centers, few and far between, that let businesses process and store their information and applications remotely. That model falls short of providing an adequate solution, especially where the data is mission-critical or the application requires near-zero latency.

Edge Computing market size is expected to reach USD 29 billion by 2025. — Grand View Research

For these applications, “Edge Computing” provides the answer by adding computational capabilities at the periphery (the edges) of the network, in close proximity to the device being served or as part of it. There is no need to wait anymore for the “smarts” to be generated hundreds or thousands of miles away, so the network round trip to a distant data center drops out of the response time. Edge Computing refers to computation that takes place at the ‘outside Edge’ of the internet, as opposed to Cloud Computing, where computation happens at a central location. It typically executes close to the data source, for example, onboard or adjacent to a connected camera. A self-driving car is a perfect example of Edge Computing. For a car to drive down any road safely, it must observe the road in real time and stop if a person walks in front of it. In such a case, processing the visual information and making the decision are done at the Edge.
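To put a number on the self-driving example, here is a minimal sketch of how far a car travels while it waits for a decision. The function name and both latency values are assumptions chosen purely for illustration, not measurements of any real system.

```python
def distance_travelled_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance in metres covered at constant speed during latency_ms."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0
    return speed_m_per_s * (latency_ms / 1000.0)


# Hypothetical latencies (assumptions for illustration only).
CLOUD_ROUND_TRIP_MS = 100.0  # send a frame to a remote data center and wait
EDGE_INFERENCE_MS = 10.0     # run the model on hardware inside the car

if __name__ == "__main__":
    for label, latency in [("cloud", CLOUD_ROUND_TRIP_MS),
                           ("edge", EDGE_INFERENCE_MS)]:
        metres = distance_travelled_m(100.0, latency)
        print(f"{label}: {metres:.2f} m travelled before a decision")
```

Under these assumed figures, a car at 100 km/h covers roughly 2.8 m before a cloud decision arrives, versus about 0.28 m when the decision is made on board.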

AI on the Edge

Edge AI will allow real-time operations, including data creation, decision, and action, where milliseconds matter. Real-time operations are important for self-driving cars, robots, and many other areas. Reducing power consumption, and thus improving battery life, is especially important for wearable devices. Edge AI will also reduce data communication costs because less data is transmitted. And by processing data locally, you avoid streaming and storing large amounts of data in the cloud, which would otherwise leave you exposed from a privacy perspective.
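The point about transmitting less data can be sketched as follows. This is only an illustration under stated assumptions: the sensor window, the choice of min/mean/max as the on-device summary, and both function names are hypothetical, and a real Edge AI application would send whatever its model actually outputs.

```python
import struct
from statistics import fmean
from typing import Sequence


def raw_payload(samples: Sequence[float]) -> bytes:
    """Pack every sample as a little-endian float32 (what streaming would send)."""
    return struct.pack(f"<{len(samples)}f", *samples)


def summary_payload(samples: Sequence[float]) -> bytes:
    """Pack only min, mean, and max as float32 (a stand-in for a local result)."""
    return struct.pack("<3f", min(samples), fmean(samples), max(samples))


if __name__ == "__main__":
    # Hypothetical window of 1,000 sensor readings.
    window = [0.001 * i for i in range(1000)]
    print(len(raw_payload(window)), "bytes if streamed raw")
    print(len(summary_payload(window)), "bytes if summarized on the device")
```

Each float32 takes 4 bytes, so the raw window costs 4 bytes per sample while the summary stays at 12 bytes no matter how long the window is.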

Example: A handheld power tool is, by definition, on the edge of the network. The Edge AI software application that runs on a microprocessor in the power tool processes data from the tool in real time. The application generates results and stores them locally on the device. After working hours, the power tool connects to the internet and sends the data to the cloud for storage and further processing. A key requirement in this example is long battery life. If the power tool continuously streamed data to the cloud, the battery would be drained in no time.
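The power tool workflow described above (process locally, store locally, upload once after hours) might look something like the sketch below. Everything here is hypothetical: the `EdgeDevice` class, the vibration threshold standing in for a real model, and the `upload` callable that represents whatever cloud client the device would use.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Result:
    timestamp_s: float
    anomaly: bool


@dataclass
class EdgeDevice:
    # Hypothetical uploader; called only when the device is online.
    upload: Callable[[List[Result]], None]
    # Assumed threshold standing in for a real on-device model.
    threshold: float = 0.8
    _buffer: List[Result] = field(default_factory=list, init=False)

    def process(self, timestamp_s: float, vibration: float) -> Result:
        """Run the local 'model' and store the result on the device."""
        result = Result(timestamp_s, vibration > self.threshold)
        self._buffer.append(result)
        return result

    def sync(self) -> int:
        """Send all buffered results in one batch; return how many were sent."""
        if not self._buffer:
            return 0
        batch = list(self._buffer)
        self.upload(batch)
        self._buffer.clear()
        return len(batch)


if __name__ == "__main__":
    sent_batches: List[List[Result]] = []
    tool = EdgeDevice(upload=sent_batches.append)
    for t, reading in enumerate([0.1, 0.9, 0.3]):
        tool.process(float(t), reading)   # no network use during the workday
    print(tool.sync(), "results uploaded in", len(sent_batches), "transmission")
```

The design choice worth noting is that `process` never touches the network, so the radio is only used during `sync`, which is what keeps the battery from draining during working hours.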

Welcome to AI on the Edge

So that’s just an introduction to Edge AI. In the upcoming posts, I will cover some deeper concepts related to AI algorithms that can be used on Edge devices. Note that the field of Edge Computing is growing at a rapid pace; this series focuses only on the AI perspective of the Edge. We will cover AI algorithms for the Edge, Deep Learning model compression, and hardware acceleration for DL applications. So stay tuned to dive into the deep tech of AI on the Edge!

References:

You Need to Move from Cloud Computing to Edge Computing Now!
