AI Agents

Author(s): Heidar (Amir) Pirzadeh

Originally published on Towards AI.

Today, AI agents are at the forefront of innovation across major companies. Imagine you’ve been tasked with transforming an existing SaaS business to be powered by AI agents. Don’t worry — I’m here to help you!

But why should you trust me? Well, I began working on AI agents eight years ago, long before they became a buzzword. Here’s a cringy draft pitch of my venture at SAP, literally called Smart Agents!

We worked hard to make the Smart Agents work. We faced many challenges, and we used many technologies and methods to overcome them, including statistical models, taxonomies, language models, natural language generation, and reinforcement learning. We finally delivered AI agents that were focused on doing quality assurance for different products at SAP. Through this process, I formed my own philosophy of AI Agents, their architecture, and a framework that enables Agents to deliver tasks, collaborate on goals, behave smoothly, and become a base for a diverse workforce…

Ok now, let’s start with the basics: What exactly is an AI agent?

What is an AI Agent

An AI Agent is an autonomous software entity that performs tasks, makes decisions, and interacts (or collaborates) with other AI or human agents or systems to achieve specific business goals. As the name suggests, AI agents can use AI technologies to understand and process natural language, learn from data, and adapt to new information, enabling them to automate complex processes and provide more personalized, efficient, or resilient experiences.

1. Identity & Goals

This defines the agent’s unique role, purpose, and objectives. Identity specifies the agent’s function within the system, and Goals provide it with a clear direction, guiding its actions and prioritizations.

Identity defines the fundamental motivations for the agent’s behavior and choices and will be used to ensure that the agent’s actions remain aligned with its overarching mission, differentiating its role from other agents.

Identity and Goals serve as the agent’s “guiding compass,” influencing every other component by setting a foundational intent for actions and decisions.

2. Override Control & Rules

A set of operational guidelines, ethical constraints, and emergency protocols that govern the agent’s behavior, with an override mechanism allowing higher authorities to intervene in exceptional situations.

This component ensures the agent adheres to established boundaries, legal standards, and safety measures, acting as its “code of conduct.” The override function enables an external authority to make immediate, high-priority decisions or alter the agent’s course of action when necessary, even if this deviates from the agent’s execution plan or predefined goals.
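To make the idea concrete, here is a minimal sketch of how rules and an override could gate each step an agent proposes. Every name in it (`OverrideControl`, `refund_cap`, the 100 limit) is an assumption made for illustration, not part of any real framework.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical type: a rule returns True when the proposed step is allowed.
Rule = Callable[[dict], bool]


@dataclass
class OverrideControl:
    rules: list[Rule] = field(default_factory=list)
    halted: bool = False                 # set by a higher authority
    forced_step: Optional[dict] = None   # a step imposed from outside

    def halt(self) -> None:
        self.halted = True

    def force(self, step: dict) -> None:
        self.forced_step = step

    def review(self, proposed: dict) -> Optional[dict]:
        """Return the step the agent may run, or None if it must not act."""
        if self.halted:
            return None
        if self.forced_step is not None:
            # The override wins over the agent's own plan, exactly once.
            step, self.forced_step = self.forced_step, None
            return step
        if all(rule(proposed) for rule in self.rules):
            return proposed
        return None


def refund_cap(step: dict) -> bool:
    # Assumed rule: refunds above 100 are not allowed without a human.
    return step.get("tool") != "refund" or step.get("amount", 0) <= 100


control = OverrideControl(rules=[refund_cap])
small = control.review({"tool": "refund", "amount": 40})
large = control.review({"tool": "refund", "amount": 900})

control.force({"tool": "escalate", "to": "human"})
forced = control.review({"tool": "refund", "amount": 40})

control.halt()
after_halt = control.review({"tool": "refund", "amount": 40})
```

In this sketch the small refund passes, the large one is blocked by the rule, the forced step replaces the agent's own proposal, and nothing passes after a halt.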

3. Memory

A dynamic repository that includes both short-term and long-term memory, allowing the agent to retain specific details from recent and past interactions. Short-term memory helps the agent maintain context within a single interaction, enhancing responsiveness by remembering details specific to the current session. Long-term memory, on the other hand, enables the agent to adapt and improve by retaining information over extended periods, supporting continuity and personalization across interactions.

As an example of short-term memory, the agent remembers a recent complaint from a customer about a defective item, allowing it to provide relevant follow-up support. As an example of long-term memory, if a customer contacts support repeatedly about delayed shipments, the agent references its long-term memory to acknowledge this pattern and offer more proactive solutions, such as expedited shipping options or loyalty discounts.

Memory allows the agent to learn and adapt by recalling relevant past information. It enhances continuity within sessions (short-term) and across multiple interactions (long-term), setting it apart from the Knowledge Base (static information) and Mental Model (agent’s real-time understanding of the environment).
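The split between the two kinds of memory could look roughly like the sketch below. The `Memory` class, its method names, and the threshold of two contacts are all assumptions made for this example; a real agent would likely back long-term memory with a database.

```python
from collections import defaultdict, deque


class Memory:
    """Short-term memory lasts one session; long-term memory persists."""

    def __init__(self, short_term_size: int = 5) -> None:
        self.short_term = deque(maxlen=short_term_size)  # current session only
        self.long_term = defaultdict(list)               # customer_id -> topics

    def remember(self, customer_id: str, topic: str) -> None:
        self.short_term.append((customer_id, topic))
        self.long_term[customer_id].append(topic)

    def end_session(self) -> None:
        self.short_term.clear()  # long-term entries are kept

    def recurring(self, customer_id: str, topic: str, threshold: int = 2) -> bool:
        """True when the customer has raised this topic `threshold` times or more."""
        return self.long_term[customer_id].count(topic) >= threshold


memory = Memory()
memory.remember("c-1", "delayed shipment")
memory.end_session()
memory.remember("c-1", "delayed shipment")

in_session = list(memory.short_term)
is_pattern = memory.recurring("c-1", "delayed shipment")
```

After the second contact, the short-term memory holds only the current session, while the long-term memory already shows the repeated complaint.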

4. Mental Model

A real-time, continuously updated representation of the agent’s environment and its current state. This component synthesizes immediate contextual information into a coherent “snapshot” that informs decision-making.

It also provides situational awareness, helping the agent make decisions based on the current conditions in shared environments. It acts as an internal “map” of the agent’s surroundings that could be used for its sanity checks to raise a flag or pause if the mental model doesn’t match the reality of the environment. Distinct from memory or perception, the mental model is based on the agent’s own perspective, reflecting its unique understanding of the world through its interactions. It’s not an omniscient view of all activities or a universal state but rather a subjective and localized view built from what the agent knows based on its own interactions and updates it has directly received or perceived.
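The sanity check described above can be sketched as a comparison between what the agent believes and what it observes. The `MentalModel` class and the key names are hypothetical and only meant to illustrate the idea.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class MentalModel:
    """The agent's own, local view of its environment (illustrative only)."""
    beliefs: dict = field(default_factory=dict)

    def update(self, key: str, value: Any) -> None:
        self.beliefs[key] = value

    def mismatches(self, observed: dict) -> dict:
        """Return {key: (believed, observed)} wherever the two disagree."""
        return {
            key: (self.beliefs[key], observed[key])
            for key in self.beliefs
            if key in observed and observed[key] != self.beliefs[key]
        }


model = MentalModel()
model.update("order-17.status", "refunded")  # what the agent expects after acting
model.update("order-17.amount", 40)

observed = {"order-17.status": "pending", "order-17.amount": 40}
diff = model.mismatches(observed)
should_pause = bool(diff)  # raise a flag or pause when belief and reality differ
```

Keys the agent has never observed are simply skipped, which matches the point that the mental model is a localized view rather than an omniscient one.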

5. Knowledge Base

A static repository of domain-specific knowledge, factual information, and reference data that serves as the agent’s foundation for understanding concepts and terminology within its domain. Knowledge bases could be internal, external, or even innate (an agent trained on the domain data). The knowledge base, for the most part, remains consistent and unchanging, acting as a stable reference unlike memory or perception, giving the agent context and background knowledge it can rely on regardless of the situation.

6. Anticipation

A proactive component that enables the agent to project potential future states and monitor the environment for these expected conditions. Anticipation includes environmental and temporal monitoring and serves as the basis for self-initiation. This component enables the agent to initiate actions when it detects that the environment is reaching a projected or desired state, fostering responsiveness and autonomy. In its basic form, it could be as simple as a schedule on which the agent should run or an event that the agent should wait for. It could be as sophisticated as the agent’s “forecasting” tool, empowering it to act proactively based on anticipated conditions, distinct from immediate perception or static planning.
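In its simplest form, Anticipation could be sketched as a list of expected conditions that the agent checks against the environment. The `Expectation` class, the `due` function, and the two example conditions are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Expectation:
    name: str
    condition: Callable[[dict], bool]  # True when the anticipated state is reached


def due(expectations: list[Expectation], environment: dict) -> list[str]:
    """Names of the expectations the agent should act on right now."""
    return [e.name for e in expectations if e.condition(environment)]


expectations = [
    # A schedule: run at 9 o'clock.
    Expectation("daily_report", lambda env: env["hour"] == 9),
    # A projected state: the customer is probably running low on a product.
    Expectation("restock_reminder", lambda env: env["days_since_purchase"] >= 30),
]

triggered = due(expectations, {"hour": 9, "days_since_purchase": 12})
```

A more sophisticated agent would replace the hand-written conditions with forecasts, but the self-initiation loop stays the same: check, then act when a condition holds.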

7. Actions

Actions are internal operations that the agent performs within its own ecosystem or shared agent systems to manage, coordinate, and track its own processes. Actions involve tasks such as marking a task as complete, updating progress, sending status reports, or coordinating with other agents via an interface. These are activities focused on maintaining the agent’s internal state and communication within the agent ecosystem, independent of the external environment.

Actions are distinct from Tools because they do not involve interacting with the external application layer or work environment. For example, a customer support agent can execute a refund for a returned order through the eCommerce platform API (which is a Tool). After completing the refund, the agent uses an Action to mark the inquiry as “resolved” in a shared backlog (which is an Interface). This is an internal update and does not affect the external environment; it only reflects the agent’s own progress within its ecosystem.
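The refund example can be sketched in a few lines to show where the boundary sits. The classes below are stand-ins I made up for the external platform and the shared backlog; a real Tool would wrap an actual API call.

```python
# All class and function names here are hypothetical stand-ins.

class EcommercePlatform:
    """External environment: a fake order store instead of a real API."""

    def __init__(self) -> None:
        self.order_status = {"order-17": "returned"}


class SharedBacklog:
    """Interface inside the agent ecosystem: a shared list of inquiries."""

    def __init__(self) -> None:
        self.inquiry_status = {"inquiry-5": "open"}


def refund_order(platform: EcommercePlatform, order_id: str) -> str:
    """Tool: changes state in the external environment."""
    platform.order_status[order_id] = "refunded"
    return platform.order_status[order_id]


def mark_resolved(backlog: SharedBacklog, inquiry_id: str) -> str:
    """Action: changes only the agent ecosystem's own bookkeeping."""
    backlog.inquiry_status[inquiry_id] = "resolved"
    return backlog.inquiry_status[inquiry_id]


platform = EcommercePlatform()
backlog = SharedBacklog()

refund_result = refund_order(platform, "order-17")   # Tool call
action_result = mark_resolved(backlog, "inquiry-5")  # Action call
```

The Tool touches only the platform and the Action touches only the backlog, which is the whole point of keeping the two concepts apart.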

8. Interface

The dedicated component for managing communication between the agent, other agents, and humans. The Interface supports various channels, including conversational interactions, event emissions, and status updates. The interface exposes APIs for agents to use as Actions (similar to how the external world exposes APIs for agents to use as Tools).

The Interface facilitates structured, transparent interactions, enabling the agent to share information about progress, status, and task outcomes with other agents and humans in the agent ecosystem.

9. Modalities

Modalities define the format or representation of information that the agent can handle, such as text, voice, images, or documents. Modalities allow the agent to process, interpret, and present information in various forms, both internally and when interacting with external tools. Modalities make the agent versatile in how it processes and outputs information, enabling it to format content in ways compatible with different tools, interfaces, and communication needs.

Back to our example of the customer support agent that can process refunds for returned orders. The agent can anticipate a voice call from a customer, so it needs to understand the voice modality. Once the refund is complete, the agent can use its modalities to generate a voice response, which is then sent to the customer using existing tools.

10. Planning

The strategic component that generates actionable plans (execution plans) based on the agent’s goals. Planning coordinates tools, actions, and resources for effective task completion. It incorporates reflection and self-assessment from past actions, refining strategies based on performance to improve outcomes.

It serves as the agent’s “strategist,” crafting a path to accomplish objectives. It could be as basic as accepting a blueprint (an execution plan comprised of a sequence of tools to be used) or as sophisticated as using self-reflection and evaluation to come up with dynamic strategies for the tools available at the agent’s disposal, making it a forward-looking, adaptive function.

11. Execution Plan

An execution plan is, most of the time, the result of the Planning component: a specific, actionable script that dictates the sequence of actions and tools the agent should use to achieve its goals within a particular scenario. It serves as a step-by-step guide or a “playbook,” translating high-level strategies into concrete steps aligned with goals, rules, and available resources in a coherent manner. Now, you can imagine that the playbook could be written by someone else (let’s say a human) and handed to the agent to follow. As long as the references are correct, the agent should be able to use it as a blueprint and deliver the results!
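A playbook like this could be represented as plain data that references tools and actions by name. The sketch below assumes a hypothetical `registry` of callables and a `run_plan` helper; the step names and return values are invented for illustration.

```python
from typing import Any, Callable

# Hypothetical registry: names a plan may reference, mapped to callables.
registry: dict[str, Callable[..., Any]] = {
    "lookup_order": lambda order_id: {"order_id": order_id, "amount": 40},
    "refund_order": lambda order_id: f"refunded {order_id}",
    "mark_resolved": lambda inquiry_id: f"resolved {inquiry_id}",
}

# The plan itself is just data, so a planner or a human could write it.
plan = [
    {"use": "lookup_order", "args": {"order_id": "order-17"}},
    {"use": "refund_order", "args": {"order_id": "order-17"}},
    {"use": "mark_resolved", "args": {"inquiry_id": "inquiry-5"}},
]


def run_plan(plan: list[dict], registry: dict[str, Callable[..., Any]]) -> list[Any]:
    # Validate every reference before running anything.
    unknown = [step["use"] for step in plan if step["use"] not in registry]
    if unknown:
        raise KeyError(f"plan references unknown steps: {unknown}")
    return [registry[step["use"]](**step.get("args", {})) for step in plan]


results = run_plan(plan, registry)
```

Checking the references up front reflects the condition stated above: the agent can only follow a handed-over plan if every step it names actually exists.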

12. Tools

Tools are external resources or interfaces that the agent accesses to perform specific tasks within the external environment or application layer. These include APIs, external databases, and other application interfaces that extend the agent’s functional capabilities, allowing it to gather information, manipulate data, or complete work in the external environment.

Tools enable the agent to interact with the task environment (application layer), allowing it to execute specialized functions it cannot perform independently. They serve as resources that let the agent access and impact data or processes outside its internal ecosystem. Tools operate exclusively within the external environment, distinct from Actions, which are contained within the agent’s ecosystem. Tools allow the agent to perform work that influences the broader application layer, such as processing refunds, retrieving order details, or updating customer information. In our example, when a customer support agent executes a refund, it uses a Tool (a wrapper around the eCommerce platform API) to process the refund within the external system. This impacts the external environment by modifying the customer’s order status.

Comparing AI Agent Architectures

Now that we’ve explored my take on AI agents, you might be wondering how this stacks up against other definitions out there. It would be interesting to see where our ideas align and where they might differ.

Our definition of an AI Agent in this text emphasizes its role as an autonomous software entity that not only performs tasks and makes decisions but also interacts and collaborates with other AI or human agents to achieve specific business goals. This aligns with the textbook definition from sources like “Artificial Intelligence: A Modern Approach” by Russell and Norvig, where an agent is described as anything that perceives its environment through sensors and acts upon it through actuators to achieve goals.

However, our definition extends the traditional concept by highlighting the agent’s ability to understand and learn from data, and adapt to new information. This perspective focuses on practical applications that automate complex processes and enhance personalization, efficiency, and resilience in business contexts.

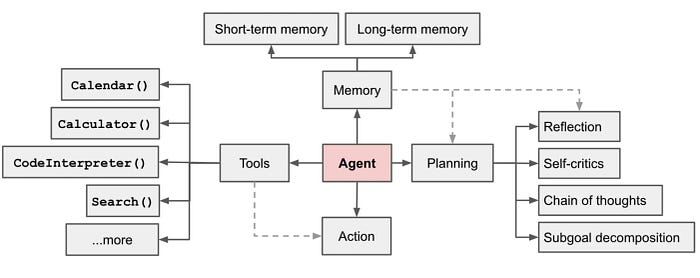

Talking about the architecture, many articles reference the following diagram as the AI Agent architecture or, to be more accurate, the architecture of LLM Agents (or generative agents), where the LLM acts as the agent’s “brain,” equipped with planning, memory, and tool-use capabilities. For example, such agents can deconstruct complex tasks, remember past conversations, and access external APIs for up-to-date info. There’s even mention of multi-agent systems where specialized agents collaborate, much like a virtual team handling different roles.

Additionally, works like ReAct from Yao et al. and Generative Agents by Park et al. demonstrate powerful approaches for reasoning and simulating human behaviors. Gato, developed by DeepMind, represents a step toward creating a generalist agent capable of performing multiple tasks across various domains. It integrates different modalities, allowing it to process and generate text, images, and other data forms. Language Agent Tree Search (LATS) represents one of the more sophisticated recent architectures. LATS incorporates a modified version of Monte Carlo Tree Search (the search algorithm used in AlphaGo) to allow language agents to explore multiple pathways to achieve their goals. This framework enables agents to prioritize high-reward paths, incorporate feedback, and backtrack if necessary.

On the framework side, LangChain has emerged as a versatile framework that simplifies the development of large language model (LLM)-powered applications by providing tools and abstractions for building complex AI agents. LangChain enables agents to interact with external data sources and APIs, facilitating reasoning and task execution. More aligned with part of the vision we laid out in this article, LangGraph, an extension of LangChain, further enhances these capabilities by supporting stateful, multi-actor applications. Crew.ai is another open-source framework designed to facilitate the development and management of multi-agent AI systems. It enables AI agents to assume specific roles, share goals, and operate cohesively, much like a well-coordinated team.

So, how does our proposed architecture fit into this landscape?

A combination of these frameworks could be used as a base to start your journey into an agent-based architecture. However, I believe we need a framework that provides a more comprehensive roadmap: one for building AI agents that can form a viable workforce for a company, not just individual agents that can take on some tasks.

Starting with an agent’s Identity & Goals, we give it a clear purpose and direction. It’s like assigning a specific role to someone: you want them to know exactly what they’re responsible for and what they’re aiming to achieve.

Override is another crucial difference, for safety and compliance. Imagine if our customer support agent started issuing refunds without any checks; that could spiral out of control quickly. By setting up an override mechanism, we ensure the agent operates within safe boundaries and can be guided or halted if needed.

Alongside memory, we introduce a Mental Model. This lets the agent keep its own point of view of the world while it is changing that world, creating expectations that can justify the next steps it takes. This is essential for understanding and explaining why a step was taken: what the mental model was at the time, and whether and how it matched the reality of the world.

Another unique aspect of our architecture is Anticipation. This allows the agent to be proactive rather than just reactive. Think of a sales agent who notices you often buy a certain product and reminds you when it’s time to restock, acting before you even realize you need it.

Others’ approach to tool integration focuses on adding external functionalities (like calculators and code interpreters) to language models, treating them as extensions of the model’s capabilities. In contrast, by distinguishing between Actions and Tools, our model clarifies how the agent functions internally versus how it interacts with external systems. Actions are like the internal repository of operations available to the agent (updating its status, coordinating with other agents), while Tools are how it engages with the outside world, like adding an item to a cart or processing the refund of a returned item. The Interface and Modalities components ensure the agent can communicate effectively across various channels, be it text, voice, or images, making it more adaptable to different user needs.

Finally, the Planning we talked about is not that different from what others have established: reflecting on past actions, learning from mistakes, selecting from what is available at the agent’s disposal, and adjusting strategies accordingly. We also have the Execution Plan, an executable recipe that an agent can perform to achieve its goals. While it is usually the result of planning, we recognize that it could be handed directly to the agent to follow, much like receiving an order from a higher authority.

In contrast to other definitions that might focus mainly on environmental interaction or leveraging LLMs, our architecture aims for a holistic approach. It considers not just what the agent does, but also how and why it does it, ensuring alignment with business objectives, safety protocols, and user expectations. By integrating all these components, we can build AI agents that are not only capable but also reliable and aligned with their intended tasks. This way, agents truly enhance our systems and provide valuable services, rather than just performing isolated functions.

References:

Generative Agents: Interactive Simulacra of Human Behavior. Joon Sung Park, Joseph C. O’Brien, Carrie J. Cai, Meredith Ringel Morris, Percy Liang, Michael S. Bernstein.

Artificial Intelligence: A Modern Approach. Stuart Russell and Peter Norvig.

ReAct: Synergizing Reasoning and Acting in Language Models. Shunyu Yao and Yuan Cao, Google Research, Brain Team.

LLM Powered Autonomous Agents. Lilian Weng.

Language Agent Tree Search Unifies Reasoning Acting and Planning in Language Models. Andy Zhou, Kai Yan, Michal Shlapentokh-Rothman, Haohan Wang, Yu-Xiong Wang.

A Generalist Agent. DeepMind: Scott Reed, Konrad Żołna, Emilio Parisotto, Sergio Gómez Colmenarejo, Alexander Novikov, Gabriel Barth-Maron, Mai Giménez, Yury Sulsky, Jackie Kay, Jost Tobias Springenberg, Tom Eccles, Jake Bruce, Ali Razavi, Ashley Edwards, Nicolas Heess, Yutian Chen, Raia Hadsell, Oriol Vinyals, Mahyar Bordbar, Nando de Freitas.
