3 Commands to Secure Your ML Models from Malicious Pickles

Last Updated on January 19, 2023 by Editorial Team

Author(s): Cait Lyra

Originally published on Towards AI.

First of all, what is a pickle?

Basically, it is a serialized Python object. I don’t know much about pickle, so it’s hard for me to explain what it is and how it could hurt our computers.

I found some references and put the links at the bottom of the page. You can check them out later. Before that, I asked ChatGPT to help me figure out what a “pickle” is in Python.

In Python, a “pickle” is a way to store and retrieve a Python object. It converts the object into a byte stream, which can be saved to disk or sent over a network, and then later reconstituted back into an identical copy of the original object using the “unpickling” process. Pickling and unpickling are typically used for data persistence, as well as for sending data between processes. The `pickle` module provides functions for working with pickled data.
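To make that description concrete, here is a minimal sketch of a pickle round trip. The `model` dictionary is only a hypothetical stand-in for a trained model; any ordinary Python object would behave the same way.

```python
import pickle

# A stand-in for a trained model: any ordinary Python object works.
model = {"name": "toy-model", "weights": [0.1, 0.2, 0.3]}

# Pickling: object -> byte stream (could be written to disk or sent over a network).
blob = pickle.dumps(model)

# Unpickling: byte stream -> an equal copy of the original object.
restored = pickle.loads(blob)

print(type(blob).__name__)  # bytes
print(restored == model)    # True
print(restored is model)    # False: it is a copy, not the same object
```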

Why does using pickle for ML models carry security risks?

Using pickle to serialize and deserialize machine learning models can introduce security risks because pickle is a powerful and flexible format, and unpickling a file can execute arbitrary code. This means that if an attacker can craft a malicious pickle file and convince a user to load it, they could potentially execute arbitrary code on the user’s machine.

For example, an attacker could craft a pickle file that, when unpickled, causes the system to delete all files in the current directory. Or an attacker could craft a pickle file that, when unpickled, causes the system to run a shell command and exfiltrate data from the machine.
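The mechanism behind this is the `__reduce__` method, which tells pickle which function to call when the object is loaded. Here is a deliberately harmless sketch: the `Payload` class is hypothetical, and it calls `os.getcwd` where a real attacker would use something like `os.system` with a shell command.

```python
import os
import pickle

class Payload:
    # __reduce__ tells pickle how to rebuild the object: "call this function
    # with these arguments". The unpickler makes that call while loading.
    def __reduce__(self):
        # Harmless stand-in; an attacker would put a dangerous callable here.
        return (os.getcwd, ())

blob = pickle.dumps(Payload())

# Loading runs os.getcwd() and hands back its result instead of a Payload.
result = pickle.loads(blob)
print(type(result).__name__)  # str
```

Note that nothing in the calling code asked for `os.getcwd` to run. Simply loading the bytes was enough.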

How to keep yourself safe from pickle

The basic principle is to download models only from reliable sources.

Hugging Face has a built-in pickle scan, which shows whether a model contains a pickle. And the suggestion given in the documentation is: don’t use pickle.

I understand; sometimes you just can’t help it, right?

I want to use models downloaded from the internet but with a bit more safety, so I looked into several options for pickle scanning.

When you use Automatic1111 WebUI, a safety check is built in. But DiffusionBee, which is a great, stable GUI app for Mac that makes it easy to start making AI art, doesn’t have a pickle scan built-in, so I have to do it myself.
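To get a feel for what such a scan does, here is a rough sketch that lists the imports a pickle would trigger, without ever loading it. It uses the standard library `pickletools.genops`, which only parses the opcodes. This is not how any particular scanner is implemented: the `find_imports` and `looks_suspicious` helpers and the short `SUSPICIOUS_MODULES` list are assumptions made for illustration, and the `STACK_GLOBAL` handling is a heuristic.

```python
import os
import pickle
import pickletools

# Illustrative denylist only; real scanners maintain much longer lists.
SUSPICIOUS_MODULES = {"os", "posix", "nt", "subprocess", "sys", "builtins", "socket"}

def find_imports(data: bytes):
    """Return (module, name) pairs a pickle would import, without unpickling it."""
    imports = []
    recent_strings = []  # string arguments seen so far, used for STACK_GLOBAL
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name in ("GLOBAL", "INST"):
            # Older protocols carry "module name" directly in the opcode argument.
            module, name = arg.split(" ", 1)
            imports.append((module, name))
        elif opcode.name == "STACK_GLOBAL":
            # Heuristic: module and name are the two most recent strings pushed.
            if len(recent_strings) >= 2:
                imports.append((recent_strings[-2], recent_strings[-1]))
        elif isinstance(arg, str):
            recent_strings.append(arg)
    return imports

def looks_suspicious(data: bytes) -> bool:
    """Flag the pickle if any import comes from a module on the denylist."""
    return any(module.split(".")[0] in SUSPICIOUS_MODULES
               for module, _name in find_imports(data))

safe = pickle.dumps({"weights": [0.1, 0.2]})
risky = pickle.dumps(os.getcwd)  # pickled by reference: imported again on load

print(find_imports(safe))       # []
print(looks_suspicious(risky))  # True
```

A sketch like this can miss cleverly built pickles, so it is no replacement for a maintained scanner.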

There are several resources you can find online to help you detect pickles; the tools I found are linked at the bottom of the page.

I really depend on GUIs, so I like Stable-Diffusion-Pickle-Scanner-GUI. Here’s where the problem comes in.

Although it is open-source and available on GitHub, it only includes a Windows .exe file, so I can’t simply download and run it on my Mac. However, because the developer made it open-source, we might be able to run it from the source code. The only problem left now is that I know nothing about programming.

Luckily, the magical ChatGPT might know.

Let’s ask if it has any clue about it.

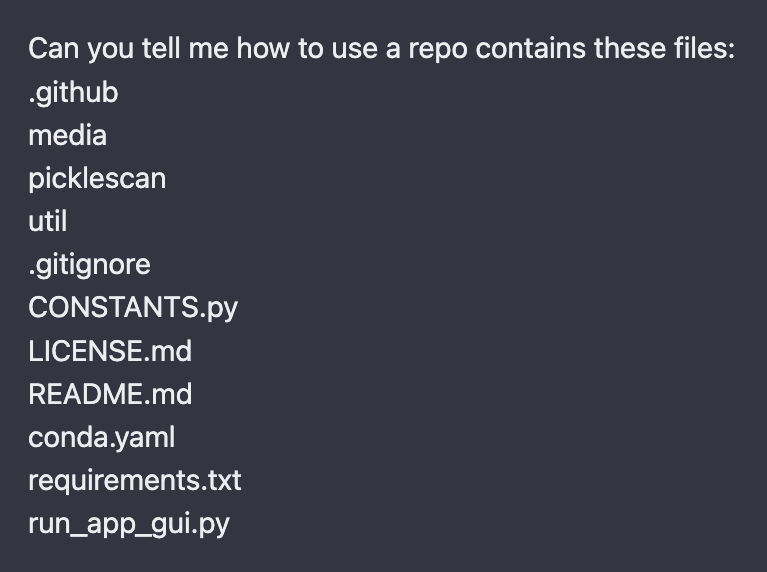

First, I got these explanations, which were good but didn’t help me:

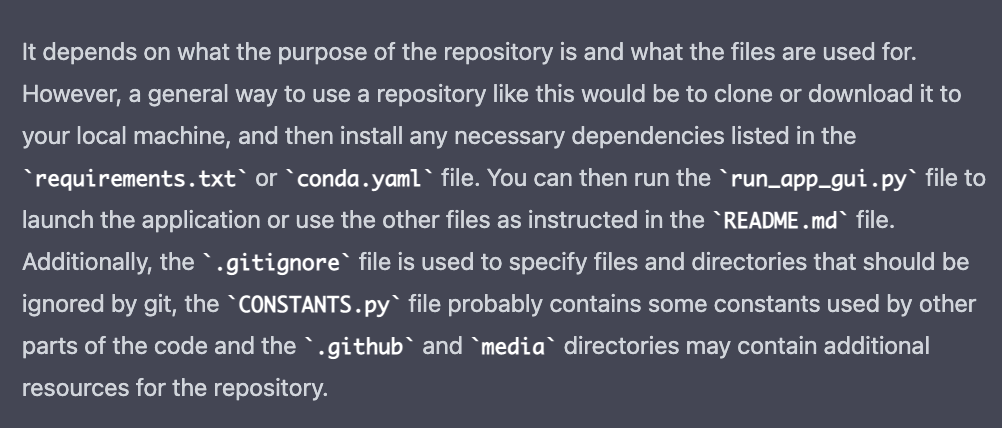

Then I asked how to execute these files and got pretty decent answers.

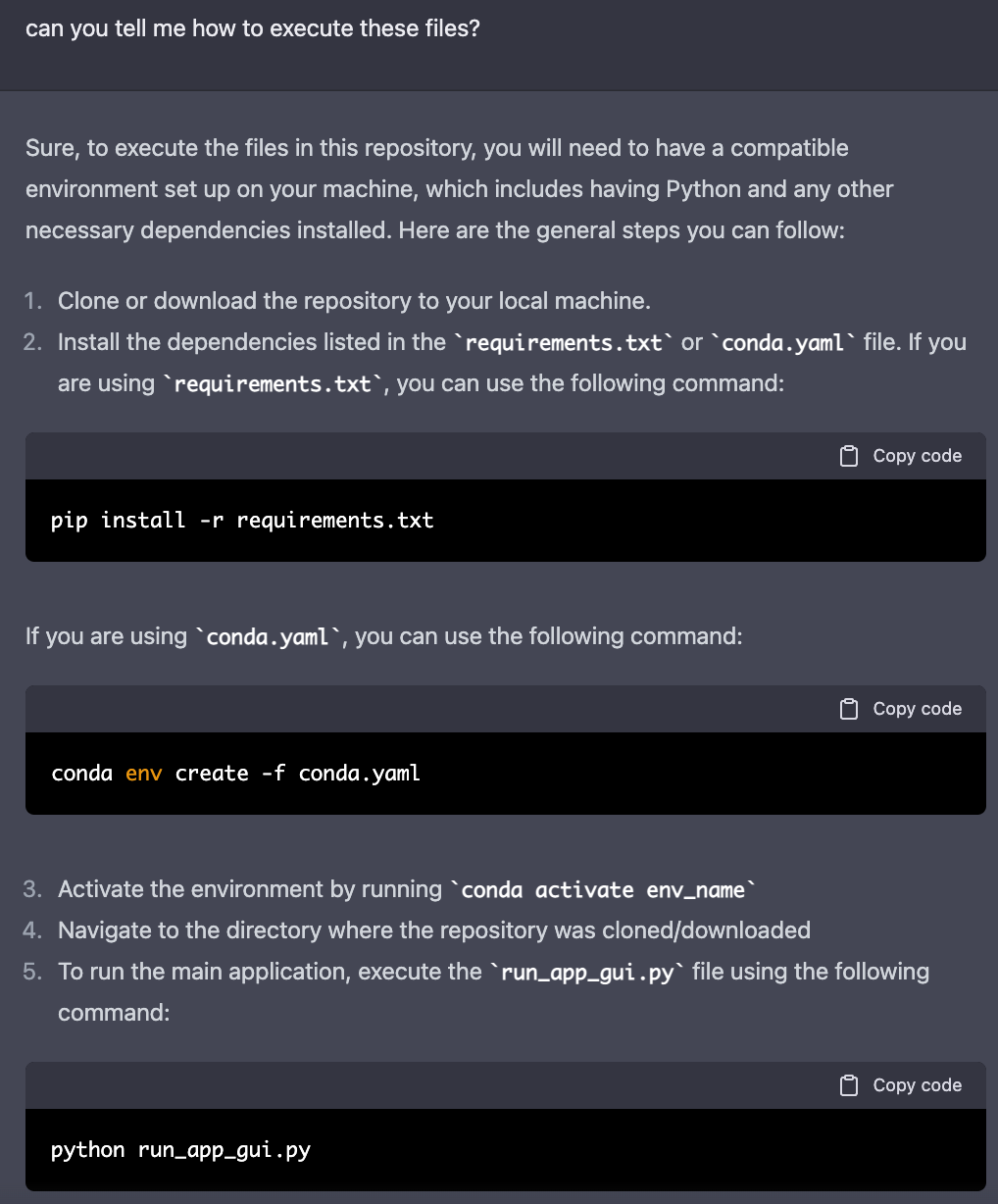

Okay, a glimmer of hope appears. I have conda, but I don’t know what the env_name is. Let’s keep asking to see if ChatGPT can help me with this too, and it does.

Now we have all the pieces to run the application!

According to the conda.yaml, the env_name is sdpsgui.
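If you would rather not read the file by eye, here is a small sketch that pulls the environment name out of it. It assumes the file has a top-level `name:` line, as conda environment files usually do. The `read_env_name` helper and the sample text are hypothetical; only the name `sdpsgui` comes from the actual file.

```python
def read_env_name(yaml_text: str):
    """Return the value of the top-level 'name:' key, or None if it is missing."""
    for line in yaml_text.splitlines():
        # Top-level keys start at column 0, so indented lines are skipped.
        if line.startswith("name:"):
            return line.split(":", 1)[1].strip().strip("'\"")
    return None

# Hypothetical sample; the real conda.yaml lists the project's own dependencies.
sample = "name: sdpsgui\ndependencies:\n  - python\n"
print(read_env_name(sample))  # sdpsgui
```

To use it on the real file, pass in the text from `open("conda.yaml").read()`.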

Here are the 3 most important commands we need:

conda env create -f conda.yaml

conda activate sdpsgui

python run_app_gui.py

I believe you can nail it by now, but if, like me, you are not familiar with a terminal, here are the steps, and we can do it together.

Let’s give it a spin.

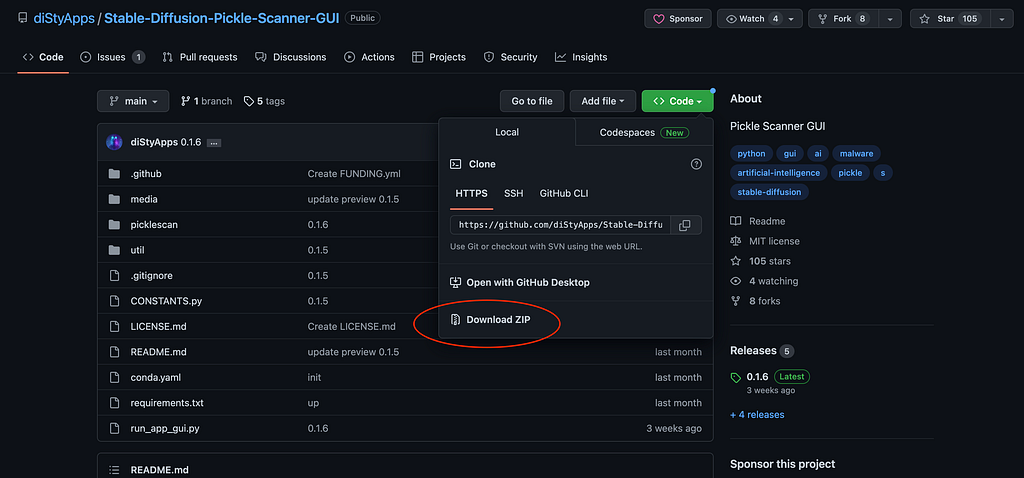

First, we go to Stable-Diffusion-Pickle-Scanner-GUI and hit the Code button to download the ZIP file.

Unzip it, open your terminal, and go to the unzipped folder.

For example, mine is under /Download/Stable-Diffusion-Pickle-Scanner-GUI-0.1.6

If you have never used a terminal before, the way to go to a folder is to type cd followed by the folder name.

So I have to go to the download folder first:

cd Download

Then go to the folder where the Stable Diffusion Pickle Scanner GUI is:

cd Stable-Diffusion-Pickle-Scanner-GUI-0.1.6

Once you are in the right place, your terminal might look like this:

(base) [your_computer_name] Stable-Diffusion-Pickle-Scanner-GUI-0.1.6 %

and you can run the 3 important commands now (type the command after % and hit enter).

First, we create a new conda environment:

conda env create -f conda.yaml

Then we activate the environment with the following:

conda activate sdpsgui

Finally, let’s open the GUI with the following:

python run_app_gui.py

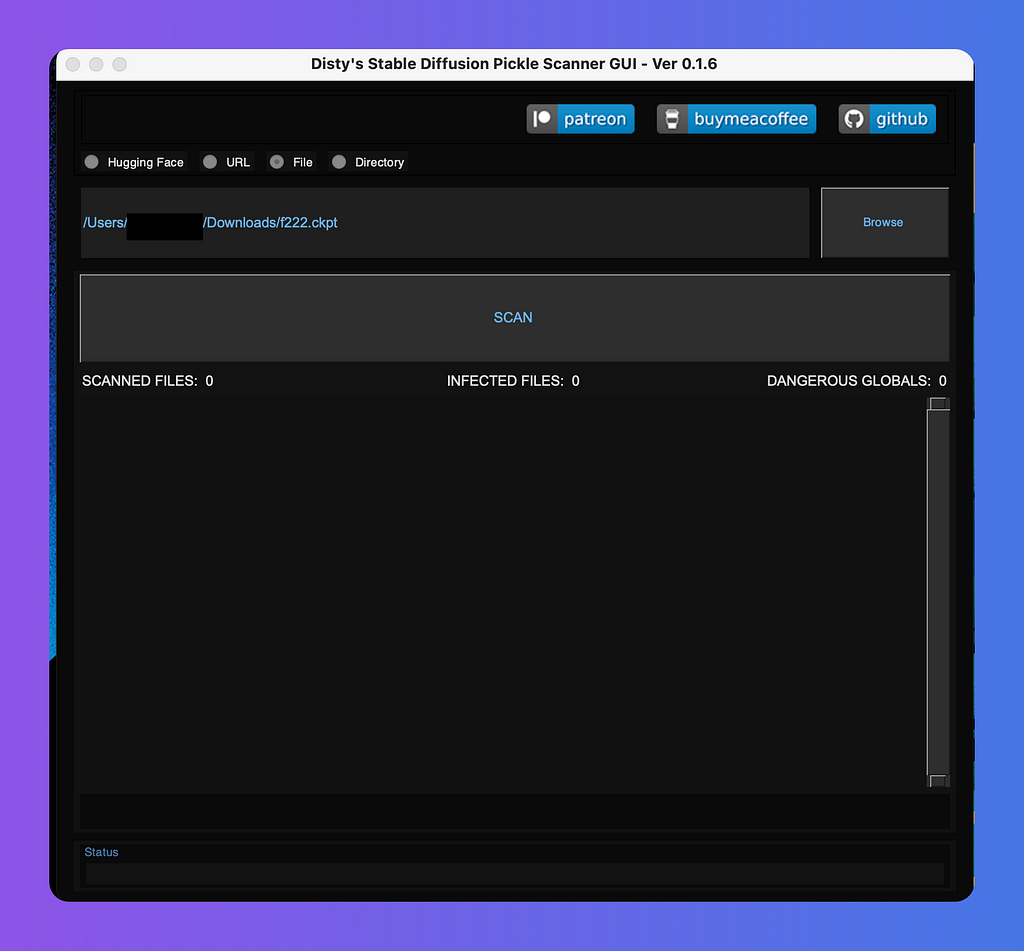

TADA!

We successfully opened the app on a Mac.
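If you end up doing this often, the steps can also be scripted. The sketch below is only one possible approach: `conda activate` changes the current shell session, so a script cannot rely on it, and this version uses `conda run -n` instead to start the app inside the environment. The `build_commands` and `run_all` helpers are hypothetical names, and the script assumes it is run from the unzipped folder.

```python
import subprocess

ENV_NAME = "sdpsgui"  # the environment name found in conda.yaml

def build_commands(env_name: str = ENV_NAME):
    """Return the commands as argument lists, in the order they should run."""
    return [
        # Create the environment described in conda.yaml.
        ["conda", "env", "create", "-f", "conda.yaml"],
        # Run the GUI inside that environment (stands in for activate + python).
        ["conda", "run", "-n", env_name, "python", "run_app_gui.py"],
    ]

def run_all(dry_run: bool = True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in build_commands():
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)

if __name__ == "__main__":
    run_all(dry_run=True)  # switch to False to actually run the commands
```

The dry run is the default so you can check the commands before anything is installed.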

If you think this is useful, please give the developers a GitHub star or buy them a coffee to thank them.

- https://github.com/diStyApps/Stable-Diffusion-Pickle-Scanner-GUI

- https://github.com/mmaitre314/picklescan

References

- Reddit article: Keep yourself safe when downloading models, Pickle malware scanner GUI for Stable Diffusion

- Stable-Diffusion-Pickle-Scanner-GUI

- Original picklescan repo: https://github.com/mmaitre314/picklescan (also installable with pip: https://pypi.org/project/picklescan/0.0.7/)

- Stable Diffusion Pickle Scanner

- Hugging Face Pickle Scanning

- Python official doc of pickle — Python object serialization

- Embrace The Red: Machine Learning Attack Series: Backdooring Pickle Files

- ColdwaterQ: Backdooring Pickles: A decade only made things worse

- Never a dill moment: Exploiting machine learning pickle files

- Fickling on GitHub

- TensorFlow Remote Code Execution with Malicious Model
